By: Sadequl Hussain | Comments (6) | Related: > Amazon AWS

Problem

We are planning to migrate our SQL Server databases to Amazon RDS. Currently we are hosting them on-premises (or on Amazon EC2 or Azure). What’s the best way to migrate them?

Or, we have a number of databases in our RDS SQL Server instances. We want to backup these databases separately and keep them accessible for more than 35 days. We want to restore any database from its last backup without restoring the whole instance. How can I do that?

Or, we need to backup our production SQL Server database in RDS and restore it in a different environment. Is there any simple way to do this?

Solution

In a series of tips on MSSQLTips a few years ago, we talked about Amazon RDS: the managed relational database platform from Amazon Web Service (AWS). In one of the tips of the series we talked about how to backup and restore RDS instances. We showed how RDS can automatically backup instances with a few simple configuration changes and how we can create ad-hoc snapshots for the instance.

These options are still available and still used in production systems worldwide. However, RDS has a come a long way since we published the post in 2014. One improvement has been the SQL Server native backup and restore feature where individual databases can be backed up and restored separately instead of the whole instance.

Amazon added this feature to RDS in 2016 and since then it has addressed a number of customer use-cases:

- A scheduled RDS instance backup is available for only 35 days. What if you want to keep database backups beyond that? You could create ad-hoc snapshots with some code, but how long can you keep those snapshots? Say you have to keep your database backups available for ten years to meet regulatory compliance. Even with a weekly snapshot of your RDS instance, that’s a lot of snapshots, and along that timeline, you would have to keep asking AWS to increase your snapshot quota. You will also be paying for the extra storage of those snapshots.

- RDS does not allow you to overwrite an existing instance when you restore its backup or snapshot. You would have to restore the backup to a new RDS instance and somehow move the data from there to the original instance. If you want to restore only a single database, that’s an overkill.

- Backup and restore is still a tried and tested method for migrating databases only if you have access to the storage system of both the source and target systems. That’s why it’s easy to port an on-premises database to a SQL Server hosted in EC2. Contrast that to RDS which is a managed platform. With RDS, you don’t have access to the underlying disks. You cannot copy a backup file to its D:\ drive and restore from there.

- Of course we can use a few other options like Amazon DMS (Data Migration Service), or a simple script-and-BCP approach, or use the data import and export wizard.

- For DMS, it may not always work if the source and destination systems are in different network segments, if the SQL Server instance is using Windows-only authentication etc. Also, DMS is best suited for use cases when the source database needs to have minimal downtime and data migration happens over a long period of time. DMS achieves this by continuously replicating data between a live system and a target RDS instance. This ensures minimal impact when the target database is ready to be switched into production. The replication is handled by “replication instances” which are EC2 boxes. And EC2 will cost money even if the migration time is small.

- For the manual scripting and data import with BCP, there may be problems if there are data constraint violations. You can work around those as well, for example dropping constraints from tables before importing data and then applying them back. However, this also involves more effort than necessary.

- You want the data in your SQL Server database to be encrypted at rest. You can use TDE (Transparent Data Encryption) with SQL Server in RDS and you can also use Amazon KMS (Key Management Service) keys to encrypt the RDS storage. However what if you want to encrypt the backup files as well?

In this tip we will show how you can backup individual databases from a SQL Server instance and restore them in Amazon RDS. You can follow the same technique for databases hosted on-premises or in the cloud (Amazon EC2 or other cloud providers). We will then see how to backup the databases from RDS into Amazon S3, a durable storage service from Amazon Web Service (AWS). We will also ensure the backup is encrypted with a key so it’s secured.

As you can understand, this is a tutorial for DBAs and system administrators who have at least some degree of familiarity with the Amazon cloud platform. We will assume you have some knowledge about:

- Amazon EC2

- Amazon RDS

- Amazon IAM Roles

- Amazon KMS

- Amazon S3

Use Case

For our use case we will create an Amazon EC2 instance running a SQL Server 2014 instance. This instance will be running a copy of the AdventureWorks database.

We will also create a SQL Server 2014 RDS instance and migrate the AdventureWorks database there.

To make this work, we will create an S3 bucket which will be accessible from both the RDS instance and the EC2 server. The SQL Server in EC2 would backup its database as usual and we will copy the backup to the S3 bucket. The RDS instance will then restore the backup from this same bucket. As we mentioned before, the steps should be similar for on-premises databases: all you have to do is copy the backup file to the S3 bucket.

Once the database goes “live” in RDS, we would want to back it up regularly. We will create a simple SQL Server job in the RDS instance which will back up the database to the same bucket once every night. Each backup will have a date/time stamp and will be encrypted. Once a backup is more than six months old, it will be moved to Amazon Glacier, a low-cost archival storage solution from Amazon. That way, we will ensure backups are both secured and retained for long time.

To let RDS access the S3 bucket, it will need to assume an IAM (Identity and Access Management) role with correct permissions. It will also need to use a key to encrypt backup files. The key will be generated, hosted and managed by Amazon Key Management Service (KMS).

Infrastructure Setup

Step 1: Create S3 Bucket

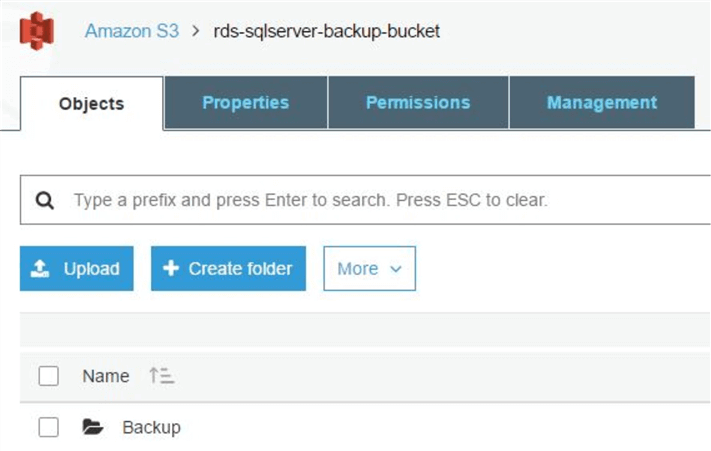

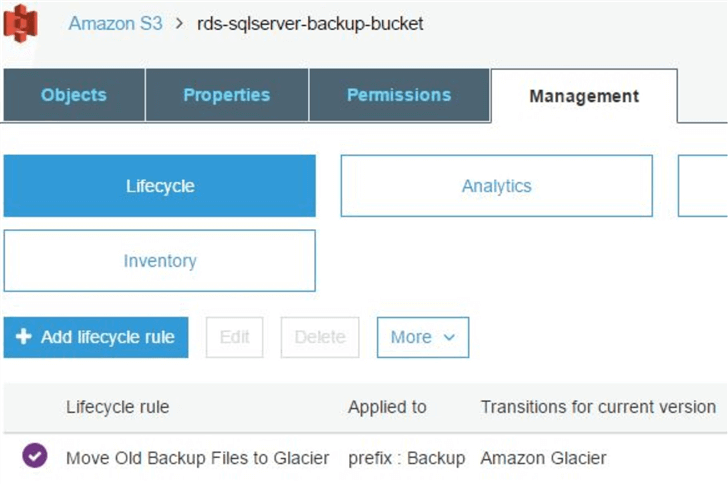

We will start by creating the S3 bucket. In the image below, we are creating an S3 bucket in the us-east-1 (North Virginia) region called “rds-sqlserver-backup-bucket”. Since bucket names are unique across all AWS accounts, you can’t use this bucket name in this region.

The bucket has a folder in it called “Backup” which will hold scheduled database backups from RDS. We are also using a lifecycle policy for the bucket. The policy enables bucket contents older than six months to be moved off to Amazon Glacier:

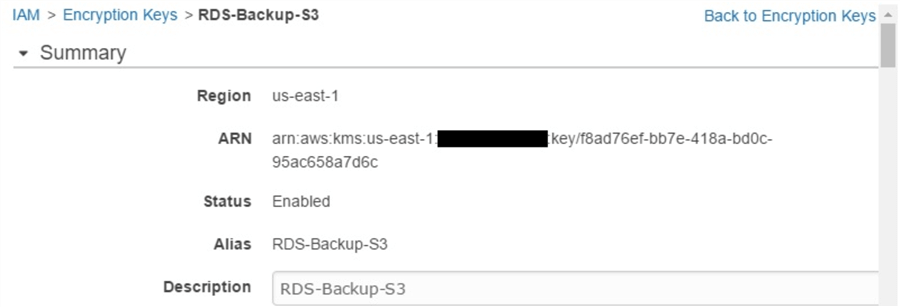

Step 2: Create KMS Key

Next, we will create an Amazon KMS key to encrypt SQL Server backup files. This key is managed by Amazon and will be rotated once every year:

Step 3: Create EC2 Instance and Backup Database

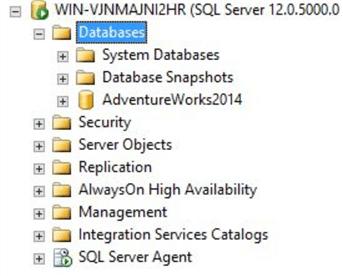

For our source system, we will create an EC2 instance running SQL Server 2014. Like the S3 bucket and the KMS key, the EC2 instance is created in the us-east-1 region. A copy of the AdvenstureWorks2014 database is restored there.

We will now backup the AdventureWorks2014 database from this machine and copy it to the S3 bucket we created before. There are few ways we can do this:

- We can install the AWS Command Line Interface (CLI) tool in the server and configure it with a user account which has “get” and “put” privileges on the bucket. We can then use the “aws s3 cp” command to copy the backup file to the bucket.

- We can create an IAM role with appropriate privileges on the S3 bucket and assign that role to the EC2 instance.

- We can copy the backup to our local machine or another server and upload it to S3.

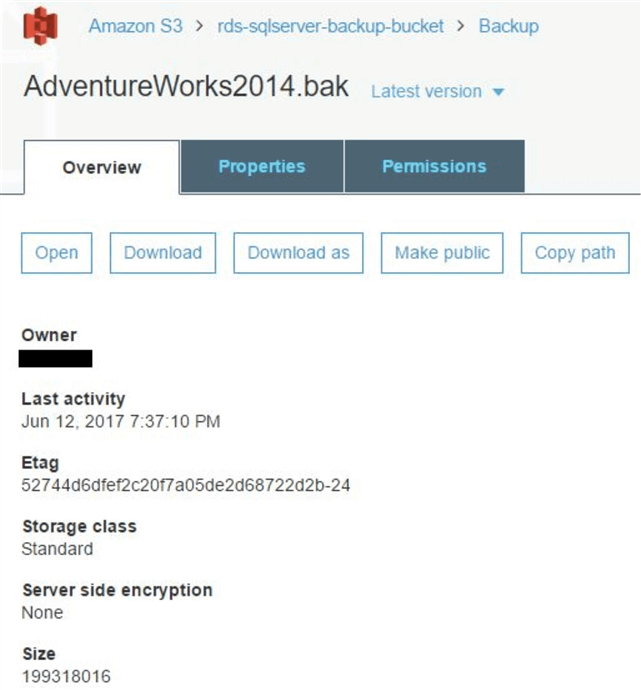

The first method is not considered secured, but for simplicity’s sake we followed it and copied the backup file to the S3 bucket:

Step 4: Create RDS instance

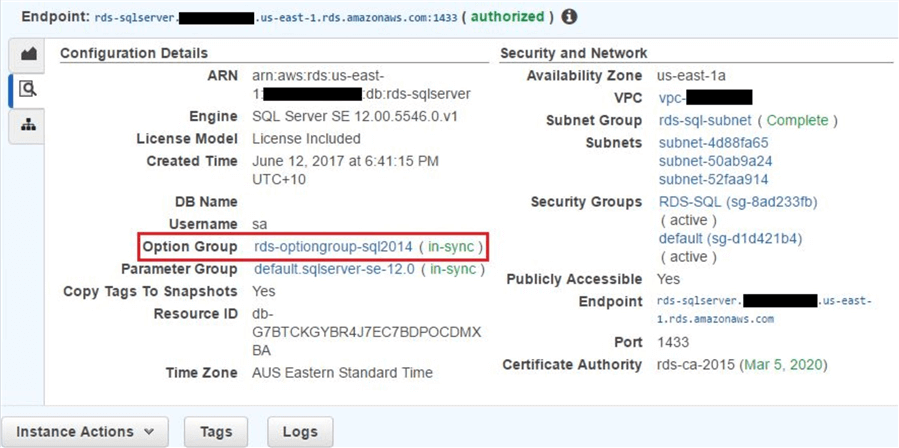

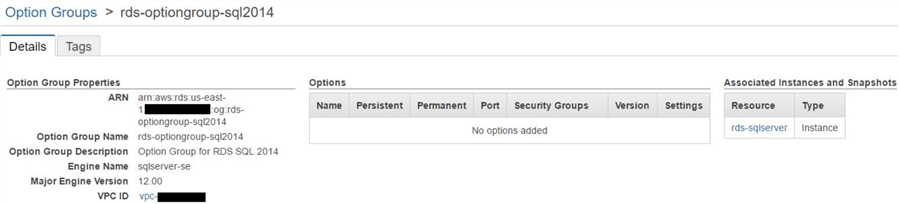

Finally we will create the Amazon RDS instance as a target environment for database restore and a source environment for database backup. Again, the instance is created in the us-east-1 region and running SQL Server 2014 Standard Edition. It’s also using a custom option group as shown below:

Step 5: Modify Option Group

As we can see, the option group we are using for the database instance is called rds-optiongroup-sql2014. Its properties are shown here:

The option group does not have any special options enabled at the moment. This will be the case for RDS SQL Servers not configured for native backup and restore. To enable native SQL backup and restore, we will have to add a specific option to this group. This option is called SQLSERVER_BACKUP_RESTORE. This is a special built-in option from Amazon RDS and allows a SQL Server RDS instance to access an S3 bucket and perform backup and restore from there. It also enables the instance to encrypt its backups with a KMS key.

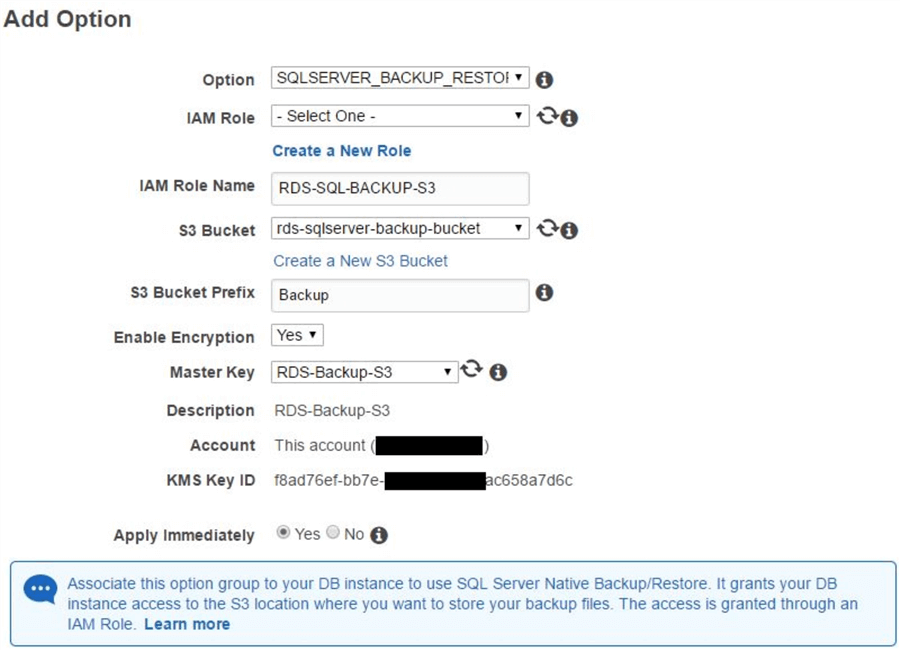

In the following images, we are adding this option to the option group. To edit the option group, we have to go to the “Option Groups” tab of the RDS console, select the option group “rds-optiongroup-sql2014”and click on the “Add Option” button at the top.

In the screen that comes up, there will be a few fields. In this case:

- We are choosing the option “SQLSERVER_BACKUP_RESTORE” from the drop down list. For SQL Server Standard Edition, this will be the only option available. The TDE (Transparent Data Encryption) will be available for SQL Server Enterprise Edition. Note the text at the bottom of the screen:

- Associate this option group to your DB instance to use SQL Server Native Backup/Restore. It grants your DB instance access to the S3 location where you want to store your backup files. The access is granted through an IAM Role

- We are not using any pre-created IAM role for the option group, so we are letting RDS create the IAM role for us and giving it a name “RDS-SQL-BACKUP-S3”.

- We are selecting the S3 bucket we created before (“rds-sqlserver-backup-bucket”) and specifying the “Backup” folder as a key prefix.

- We want the backups to be encrypted so we are enabling this option and selecting the KMS master key we created before (“RDS-Backup-S3”).

- We want the option to be added immediately to the option group and applied to the RDS instance.

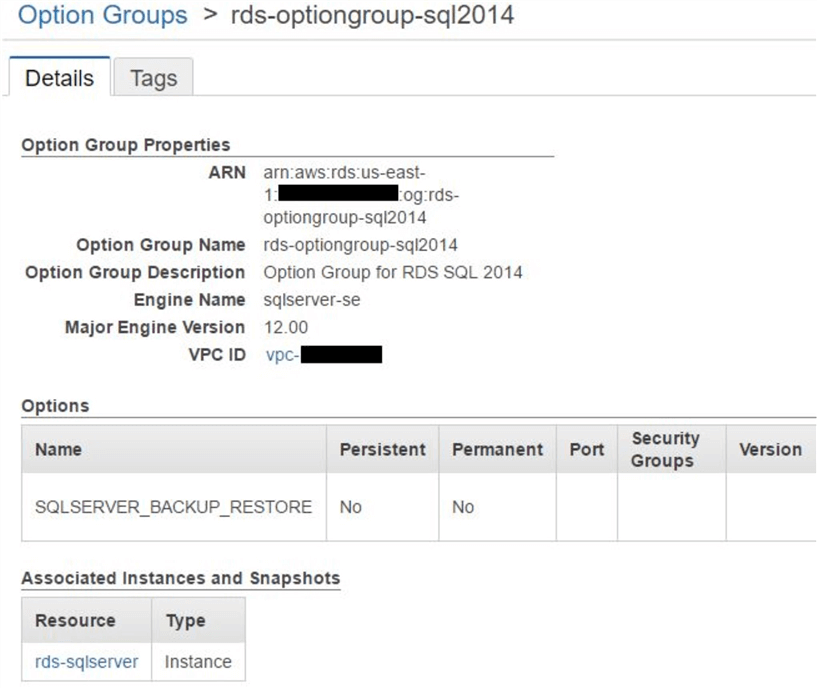

Once the values are chosen, we can save the option by clicking on the “Add Option” button. You can see the option group now has the new option added:

For the more advanced user, if you are interested to see what permissions the IAM role “RDS-SQL-BACKUP-S3” has, you can check its policy from the IAM console. This is what it looks like:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"kms:Decrypt",

"kms:DescribeKey",

"kms:GenerateDataKey"

],

"Resource": [

"arn:aws:kms:us-east-1:xxxxxxxxx:key/xxxxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxx"

]

},

{

"Effect": "Allow",

"Action": [

"s3:ListBucket",

"s3:GetBucketLocation"

],

"Resource": [

"arn:aws:s3:::rds-sqlserver-backup-bucket"

]

},

{

"Effect": "Allow",

"Action": [

"s3:GetObjectMetaData",

"s3:GetObject",

"s3:PutObject",

"s3:ListMultipartUploadParts",

"s3:AbortMultipartUpload"

],

"Resource": [

"arn:aws:s3:::rds-sqlserver-backup-bucket/Backup/*"

]

}

]

}

Restore SQL Server Database on Amazon RDS

Now that we have the infrastructure set up, it’s time to restore the database backup from the S3 bucket. Amazon RDS for SQL Server offers two different stored procedures for backup and restore:

- rds_backup_database: used to backup a single database to S3

- rds_restore_database: used to restore a single database backup from S3

Once you start a backup or restore process, you can track their progress or cancel it:

- rds_task_status: to track the progress of the backup or restore task

- rds_cancel_task: to cancel a running backup or restore task

All these stored procedures are shipped by Amazon as part of the SQL Server msdb database.

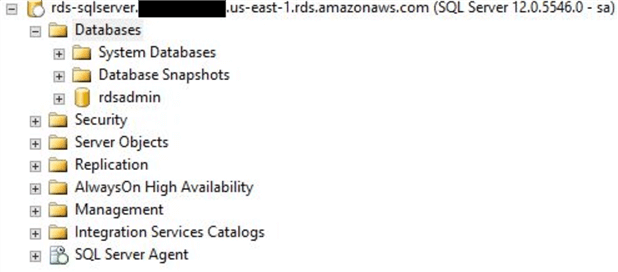

As the image shows, we have logged into our RDS instance as the RDS master user (we created the master user as “sa”):

We will now run the following command to restore the AdventureWorks2014.bak file as AdventureWorks database:

EXEC msdb.dbo.rds_restore_database @restore_db_name = 'AdventureWorks', @S3_arn_to_restore_from = 'arn:aws:s3:::rds-sqlserver-backup-bucket/Backup/AdventureWorks2014.bak', @KMS_master_key_arn = NULL GO

Note the parameters supplied to the stored procedure. We have to specify:

- The name of the database to restore.

- The Amazon Resource Name (ARN) of the backup file. For S3 objects, the ARN follows the format shown here.

- The KMS key used to encrypt the backup file. In this case the backup file was not encrypted by a KMS key, so we are leaving this value to NULL.

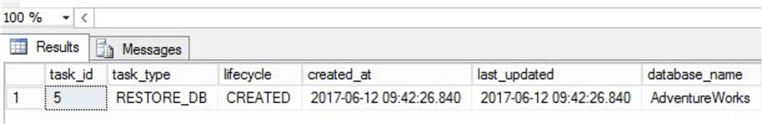

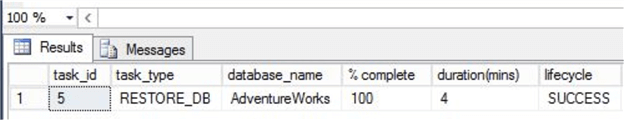

SQL Server will start off a restore task and assign it a task ID:

We can easily track the status of the task with this command:

EXEC msdb.dbo.rds_task_status @db_name ='AdventureWorks', @task_id = <task ID> GO

We can keep running this command to check the status of the restore task. The “lifecycle” column of the output will change to reflect the status which can be any of these:

- CREATED

- IN_PROGRESS

- SUCCESS

- CANCEL_REQUESTED

- CANCELLED

- ERROR

The “% complete” column will show how far the operation has completed. When finished successfully, the output will show a “% complete” value of 100 and a “lifecycle” value of “success”:

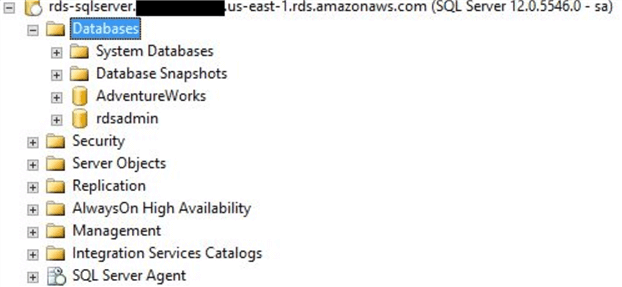

The AdventureWorks2014 database is now in our RDS instance:

SQL Server Database Backup on Amazon RDS

If enabled, RDS can create instance-level snapshots once every day. As long as the instance is running, a snapshot can be restored as another new instance (RDS does not allow the instance to be overwritten). The restore point can be within five minutes of any point-in-time in the last 35 days. This is a built-in feature of RDS. However, the instance backups are deleted when the instance itself is terminated. To protect against this, RDS will warn us to create one last manual snapshot before the instance is deleted.

Typically businesses would want their database backups available longer than 35 days. And once again, S3 can be an excellent solution for this. In the following code snippet we are creating a stored procedure to natively backup all user databases in a SQL Server RDS instance. It will encrypt the backups with a KMS key and copy them to a bucket. The backup file names will be unique with a date and timestamp.

The sensitive information in the code has been redacted for obvious reasons.

IF EXISTS (SELECT * FROM sys.sysobjects WHERE name = 'Backup_Databases_to_S3' AND xtype = 'P')

BEGIN

DROP PROCEDURE Backup_Databases_to_S3

END

GO

CREATE PROCEDURE Backup_Databases_to_S3 AS

BEGIN

DECLARE @BackupFileName varchar(50)

DECLARE @DBName sysname

DECLARE @S3ARN_Prefix nvarchar(100)

DECLARE @S3ARN nvarchar(100)

DECLARE @KMS_master_key_ARN nvarchar(100)

SET @S3ARN_Prefix = 'arn:aws:s3::::aws:s3:::rds-sqlserver-backup-bucket/Backup/'

SET @KMS_master_key_ARN = 'arn:aws:kms:us-east-1:XXXXXXXXXX:key/xxxxxxxx-xxxx-xxxx-xxxx-xxxx'

DECLARE DBBackup CURSOR FOR

SELECT name

FROM sys.databases

WHERE name NOT IN ('rdsadmin', 'master', 'model', 'msdb', 'tempdb')

AND state_desc = 'ONLINE'

AND user_access_desc = 'MULTI_USER'

AND is_read_only = 0

OPEN DBBackup

FETCH NEXT FROM DBBackup INTO @DBName

WHILE (@@FETCH_STATUS = 0)

BEGIN

SET @BackupFileName = @DBName + '_' + REPLACE(REPLACE(REPLACE(CONVERT(varchar,GETDATE(),20),'-',''),':',''),' ','') + '.bak'

SET @S3ARN = @S3ARN_Prefix + @BackupFileName

EXEC msdb.dbo.rds_backup_database

@source_db_name = @DBName,

@S3_arn_to_backup_to = @S3ARN,

@KMS_master_key_arn = @KMS_master_key_ARN,

@overwrite_S3_backup_file = 0

FETCH NEXT FROM DBBackup INTO @DBName

END

CLOSE DBBackup

DEALLOCATE DBBackup

END

GO

Note the rds_backup_database stored procedure being called in this script and the parameters being passed. We don’t have to specify the “KMS_master_key_arn” parameter if no encryption is needed.

Once the stored procedure runs, we can track the progress of the backups using the rds_task_status stored procedure. In this case we do not have to specify any specific task_id, because there will be multiple tasks generated: one per database backup.

We have now created an automated backup routine using the SQL Server RDS built-in commands. This can be scheduled from a SQL Server Agent job which can run once every night. However, the job itself will succeed every time it runs, because all it does is call a stored procedure. The actual backup processes are not tracked in the stored procedure.

To track the backup success or failure we can build an extra bit of logic into another job which will check the task outcomes of the last submitted batch of tasks. If any of the tasks is in “ERROR” status, the code can raise an error.

Enabling Backup Compression

Before we finish, let’s talk about backup compression. Although with S3, storage is not an issue anymore, you can still compress your backups before sending them through to S3. To enable backup compression, you need to run a special stored procedure. The following command shows how it’s enabled:

EXEC rdsadmin..rds_set_configuration 'S3 backup compression', 'true'

Note the stored procedure is located in the “rdsadmin” which is a system database that Amazon ships with SQL Server in RDS.

Conclusion

In this article we saw a much-awaited feature of SQL Server in Amazon RDS. Although it seems fairly trivial to get up and running native backup and restore, there are some caveats to it and we will talk about those in the next part of the series.

Next Steps

- Learn more about Amazon Key Management Service (KMS).

- Try SQL Server native backup and restore in a non-production system using the steps described.

- Learn how to create the infrastructure with automated code. For example, how can you use CloudFormation or other provisioning tools to create the S3 bucket, KMS key, IAM roles, RDS option groups, etc. This will save you time when you want to automate the migration of multiple database instances to RDS.

About the author

Sadequl Hussain has been working with SQL Server since version 6.5 and his life as a DBA has seen him managing mission critical systems.

Sadequl Hussain has been working with SQL Server since version 6.5 and his life as a DBA has seen him managing mission critical systems.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips