By: Tim Ford | Updated: 2009-02-09 | Comments (6) | Related: More > Database Administration

Problem

I've mentioned on a number of occasions I support an insane (I-N-S-A-N-E) number of databases and SQL Server instances. Doing so makes it difficult to pay as much attention to all aspects of your environment as you may need.

In a perfect situation the DBA sizes their transaction log files and database files appropriately, monitors activity and growth, and makes sure all their backups always succeed. However, most of us don't live in that perfect world. Whether it be funding, manpower, hardware constraints or simple lack of being able to control all aspects of space and time, we're only human and can only do so much. That is why I fight the battles that need fighting and micromanage those critical databases that require that level of diligence. The remaining 80% of my databases fall into the outliers of diligence. Once again, the 80/20 rule is in effect: 80% of my time is spent on maintaining 20% of my databases. It is because of that I build queries and constructs similar to what I am going to demonstrate today.

It's a tool I call LogWatch. It's a stored procedure that resides in a dedicated database on all my Microsoft SQL Server instances that I use to host all those scripts that I'd love to drop into the master database. Scripts for listing file sizes for all data and log files on my instance, custom-built index maintenance scripts, and backup scripts that run in-lieu of relying on maintenance plans just to name a few. Those of you who have followed my articles here on MSSQLTips.com will remember this as my iDBA database. The following is a script I use to keep a handle on those potentially out of control transaction logs.

Solution

The queries and temporary objects that are used for this script rely upon components in a tip I published in late 2008 titled Determine Free Space, Consumed Space and Total Space Allocated for Microsoft SQL Server Databases. In it I demonstrated how you could overcome the various random methodologies that exist in Microsoft SQL Server for returning log size, file size, and space consumed metrics by creating your own stored procedure to return all that information at once.

What LogWatch does instead is to tap into that same metadata, but drill into it further to isolate those cases where your transaction logs appear to have spun out of control in relation to the sizing of the data file. We've all seen this behavior before, and it happens for various reasons. You run across a database hosted on one of your instances and the log file is 10GB and the data file is only 5GB. (I'm not saying this has happened to me, but let's say I have a friend it's happened to.) At any rate you have a transaction log that appears to be grossly oversized. Why does this happen? A few reason are:

- Inadequate backup practices. Perhaps the database is highly transactional, running in Full recovery model, but logs are not being backed up with a proper frequency to match the activity.

- A database backup may have been skipped or failed due to issues with disk space, failure in an earlier step in the SQL Agent job running the backup, or other issue impacting successful backup processing.

- Poorly designed database that does not adequately commit transactions in the T/SQL code, thereby resulting in massive amounts of activity being logged before being written to the data file(s).

- Improper assignment of a recovery model for the database.

The usp_LogWatch stored procedure utilizes a favorite undocumented stored procedure of mine, sp_MSforeachdb to query each database on the instance, collecting sizing information for each database's file structure. It then returns results where the cumulative sizes for each database's log file is greater than 50% of the total size of the data files for that database. It only returns results where the log file is greater than 500MB. These thresholds are easily adjusted in the WHERE clause that returns results to the users and are only pertinent for my environment. Anything that falls outside of those limits are small fish that I really do not waste time in reviewing. Please note that in my environment I utilize a dedicated database for scripts of this nature. I also have a separate schema dependent upon function within this database. I utilize a schema called MetaBOT for this and similar scripts. I also have schemas called StatsBOT, IndexBOT, and BackupBOT. I'm pretty sure you can figure out why. If you do not make use of schemas you can simply change the code in the script below to utilize the dbo schema by replacing all references to MetaBOT with dbo. Let's take a look at the code below.

CREATE PROCEDURE [MetaBOT].[usp_LogWatch] AS

DECLARE @SQL VARCHAR(5000)

--Clean up temp objects if not properly done so previously

IF EXISTS (SELECT NAME FROM tempdb..sysobjects WHERE NAME = '#usp_LogWatch_Results')

BEGIN

DROP TABLE #usp_LogWatch_Results

END

--Create temporary table to store results

CREATE TABLE #usp_LogWatch_Results ([Database Name] sysname, [File Type] VARCHAR(4), [Total Size in Mb] INT)

--Create SQL script to run against all databases on the instance

SELECT @SQL =

'USE [?]

INSERT INTO #usp_LogWatch_Results([Database Name], [File Type], [Total Size in Mb])

SELECT DB_NAME(), [File Type] =

CASE type

WHEN 0 THEN ''Data'''

+

'WHEN 1 THEN ''Log'''

+

'END,

[Total Size in Mb] =

CASE ceiling([size]/128)

WHEN 0 THEN 1

ELSE ceiling([size]/128)

END

FROM sys.database_files

'

--Run the command against each database

EXEC sp_MSforeachdb @SQL

SELECT D.[Database Name], D.[Total Data File Size In Mb], L.[Total Log File Size In Mb],

CAST(CAST(L.[Total Log File Size In Mb] AS decimal(8,1))/CAST(D.[Total Data File Size In Mb]

AS decimal(8,1)) AS decimal(4,2)) AS [Log::Data Ratio]

FROM

(

SELECT [Database Name], [File Type], SUM([Total Size in Mb]) AS [Total Data File Size In Mb]

FROM #usp_LogWatch_Results

WHERE [File Type] = 'Data'

GROUP BY [Database Name], [File Type]

) AS D INNER JOIN

(

SELECT [Database Name], [File Type], SUM([Total Size in Mb]) AS [Total Log File Size In Mb]

FROM #usp_LogWatch_Results

WHERE [File Type] = 'Log'

GROUP BY [Database Name], [File Type]

) AS L ON D.[Database Name] = L.[Database Name]

WHERE L.[Total Log File Size In Mb] > 500 AND

CAST(CAST(L.[Total Log File Size In Mb] AS decimal(8,1))/CAST(D.[Total Data File Size In Mb]

AS decimal(8,1)) AS decimal(4,2)) > 0.5

ORDER BY CAST(CAST(L.[Total Log File Size In Mb] AS decimal(8,1))/CAST(D.[Total Data File Size In Mb]

AS decimal(8,1)) AS decimal(4,2)) DESC,

L.[Total Log File Size In Mb] DESC

--Clean up your temporary objects

DROP TABLE #usp_LogWatch_Results

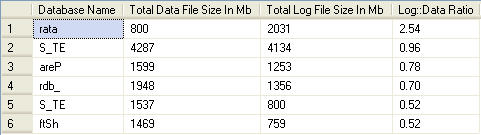

When created and run the results look something like this (database names changed to protect the innocent.)

The results are returned by database, total size of all associated data files, cumulative log file sizing, and a ratio that is calculated from dividing log file size by data file size.

Therefore, looking at the first record, you'll see that perhaps something is out of balance. This would definitely warrant investigation. Conversely, looking at the last record, the sizing may be acceptable, depending on the database behavior. This is why it is so important to have an understanding of the total environment you support, even if you do not have the resources to provide uniform support for the entire SQL Server domain. Not all returned results necessarily mean something is wrong. The important thing is that even though I do not have as much time as I need to address all my databases with the same attention I give to the 20% that consume the 80% of my time, I do need to have a handle on what is going on in my SQL Server environment and understand when the results look right or not.

Next Steps

- Review the Determine Free Space, Consumed Space and Total Space Allocated for Microsoft SQL Server Databases tip.

- Review these tips on backing up your databases, and recovery models.

- Run this query in your environment to determine if you need to perform log file maintenance, recovery model, or backup scheduling modifications.

- Stay tuned for the next tip in this series on what steps to take after this review.

About the author

Tim Ford is a Senior Database Administrator with MindBody.

Tim Ford is a Senior Database Administrator with MindBody.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2009-02-09