By: Scott Shaw | Updated: 2011-01-06 | Comments (19) | Related: > Backup

Problem

Our company virtualized a large number of SQL Servers to NetApp appliances. The infrastructure team purchased NetApp's application Snap Manager for SQL Server (SMSQL) for snapshot backup and recovery. I'm a DBA, not a storage administrator! What are some best practices prior to implementing this backup tool?

Solution

For this article I'll cover the basic steps for preparing your server to use SMSQL. If you have an existing server that will use the tool then be prepared to do some serious reconfiguring. I won't go into detail as to the underlying technology. For information on what is a snapshot, you can find it here http://blogs.netapp.com/uspub/2010/04/netapp-101-snapshot-your-datas-first-line-of-defense.html#more

Also, I don't work for NetApp and I'm not a NetApp evangelical, but whether we as DBAs like it or not it's becoming more common for large infrastructure changes, like virtualization, to force database administrators into hardware specific solutions. In this case because the storage team chose NetApps as the storage infrastructure there are certain benefits to using a tool such as SMSQL to backup and restore your databases. The benefits mostly come from its integration with the backend snapshot storage technology (Snapshot, SnapRestore, Flexclone) and correctly configuring the systems can significantly cut recovery times for even the largest databases. Still, there are some serious challenges to using what is essentially a storage application to backup SQL databases. As a DBA, it can be frustrating to have the storage design heavily impact how you backup and recover your databases.First off, for better or worse, the following items must be true in order to successfully implement snapshot backups with SMSQL:

- System database files cannot reside on the same LUN as user databases

- User database logfiles and datafiles need to be on separate LUNs

- Tempdb must have its own LUN

- A separate LUN needs to be created to store the Snapshots

- SQL must be installed on the server before installing SnapDrive and SMSQL

- ONLY database files can exist on the LUN. You'll need to put all application binaries and non-database files on different LUNs.

These restrictions tell us that at a minimum we will need 5 LUNs or, if you prefer, drive letters. If your system was a physical box that was then virtualized and has all the files on one drive then you will need to have the storage team add additional LUNs. You will need to physically move the data and log files to the appropriate drives.

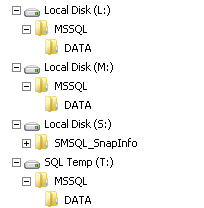

Here is a typical configuration:

In this configuration:

- L is for the user database logs.

- M is for the user database data.

- S holds the snapshots and

- T is for the tempdb files.

How you want to name the drive letters and organize the directory structure is completely up to you. The size of your S drive is determined by how many backups you take and store. Hopefully your storage administrator created dynamic LUNs which allows for easy expansion.

The system files can either be on your C drive or you can create a new dedicated LUN. They cannot be on the same LUN as user database files and they cannot be backed up using SMSQL. Why? Because in order to create a snapshot the application must quiesce the database and SQL does not allow you to freeze IO for system databases. You'll need to setup a separate maintenance plan for your system databases. In our company we use a central MSX server and use native SQL backups.

Things to Consider

You will need to consider the relationship between LUNs and volumes. Ideally each database should be on its own volume and LUN. This would mean that if you had 100 databases then you would need 200 LUNs (100 for data files and 100 for logs)! The reason behind this is that the snapshot occurs at the volume level. The snapshot must quiesce every file on the volume whether or not it is being backed up. If you have a large number of databases on the volume then the IO freeze may last too long and SQL will lose its connection to the databases. The recommendation is no more than 25 databases on a single volume. If IO freezes more than 15 seconds, problems can occur.

You will see significant increases in restore time if multiple databases are on the same volume. In this scenario SnapManager needs to snap the volume to a temporary location to restore the single database. It can't apply the snapshot for the volume because it would overwrite and corrupt all the other database files on the volume. This is also why no other application files can exist on the same LUN as your database files. I tend to have a one-database-per-volume configuration only if the database exceeds 200 GB. The beauty of this one-to-one relationship is that even a multi-terabyte database restores almost instantaneously.

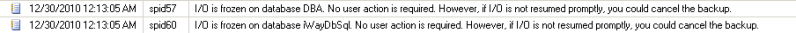

Finally, expect to see these messages in the SQL errorlog during your backup times.

The IO above was frozen for 5 seconds on a database named DBA. That's typical and doesn't seem like a long time, but be aware that some applications are sensitive to session timeouts and users may see their screens freeze or their connections drop. If this happens you'll need to either change the time your backup occurs or move the databases (especially the large ones) to their own volume.

Next Steps

- Get the SMSQL documentation SnapManager 5.0 for SQL Server: Best Practices Guide

- A great tip on Virtual Servers Technologies and SQL Server

- Some excellent tips from SQL MCM Brent Ozar: Virtualization Best Practices

- Look for additional tips on how to setup and schedule the backup jobs.

- Be very nice to your storage administrator. You'll need each other!

About the author

Scott Shaw is a Lead SQL Server DBA with extensive experience running SQL Server in a virtualized environment.

Scott Shaw is a Lead SQL Server DBA with extensive experience running SQL Server in a virtualized environment.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2011-01-06