By: Tim Ford | Updated: 2011-04-25 | Comments (2) | Related: 1 | 2 | More > Database Administration

Problem

I support thousands of databases and I'm running across situations where I have a database that has a data file of a couple hundred megabytes and the associated log file is gigabytes in size! I understand this is because I may not have my transaction log backups scheduled properly. What I'm interested in is an easy way to identify these situations where the SQL log file is too large, so I can go in and further analyze these problem databases without needing to look at each database.

Solution

Log file growth (and some would say bloating) is an issue many SQL Server DBAs face on a daily basis. When it comes down to it, the issue of log file growth is a direct outcome of configuring your database to run in a logged mode (FULL or Bulk-Logged) and either ignoring the need to - or forgetting to configure and schedule transaction log backups for the database. In most cases however this can occur if the activity being logged is at such a volume that your log backup schedule may not be as frequent as it should be or you may have encountered situations where some issue prevented backups to run as scheduled for a period of time.

I've created the following script that looks at the maximum log backup size in the master database tables that retain your backup metadata. It then compares that max size for each database to the size of the transaction log file for each database on the instance and then returns those databases where the historical maximum log backup file size is less than the current size of the transaction log.

Note: before running the following code you need to create the sp_foreachdb stored procedure from this previous tip written by Aaron Bertrand.

--+-----------------------------------------------

--Create necessary temp table for results

--+-----------------------------------------------

IF EXISTS

(

SELECT name

FROM tempdb.sys.[tables]

WHERE name LIKE '#trx_log_size%'

)

DROP TABLE #trx_log_size;

CREATE TABLE #trx_log_size

(

database_name nvarchar(128) NOT NULL,

[name] nvarchar(128) NOT NULL,

physical_name nvarchar(260) NOT NULL,

size_mb int NOT NULL

)

--+-----------------------------------------------

--Populate temp table with current log file sizes

--+-----------------------------------------------

EXEC [dbo].[sp_foreachdb]

@command = 'INSERT INTO [#trx_log_size] ([database_name], [name],

[physical_name], [size_mb])

SELECT N''?'', name, physical_name, size*8/1024

FROM [?].sys.database_files WHERE type = 1;',

@suppress_quotename = 1

--+-----------------------------------------------

/*

File size::backup size (Full recovery DBs)

ctl+shift+m to replace days param to include in

analysis of backup history

*/

--+-----------------------------------------------

SELECT

L.[database_name],

L.[physical_name],

L.[size_mb],

MAX(CEILING(BF.[backup_size]/1024/1024)) AS max_backup_file_size_mb,

L.[size_mb] - MAX(CEILING(BF.[backup_size]/1024/1024)) AS file_excess_mb

FROM msdb.dbo.[backupfile] BF

INNER JOIN msdb.dbo.[backupset] BS ON [BF].[backup_set_id] = [BS].[backup_set_id]

INNER JOIN [#trx_log_size] L ON [BS].[database_name] = L.[database_name]

INNER JOIN master.sys.[databases] SD ON L.[database_name] = SD.[name]

WHERE BS.[type] = 'L'

-- AND BS.[backup_start_date] > DATEADD(d,,GETDATE())

AND SD.[recovery_model_desc] = 'FULL'

GROUP BY SD.[name], L.[database_name], L.[physical_name], L.[size_mb]

HAVING L.[size_mb] > MAX(CEILING(BF.[backup_size]/1024/1024))

ORDER BY L.[size_mb] - MAX(CEILING(BF.[backup_size]/1024/1024)) DESC;

--+-----------------------------------------------

--Clean up your messes when you're done!

--+-----------------------------------------------

DROP TABLE #trx_log_size;

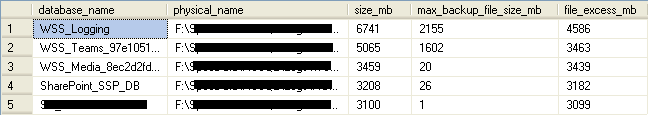

After creating the sp_foreachdb SP and running the above code in my environment I can see the databases that have issues as shown below.

To make this easier to analyze all results returned are converted to megabytes. The output is as follows:

- database_name - name of the database

- physical_name - physical file name for the transaction log file

- size_mb - current size of the transaction log file

- max_backup_file_size_info - maximum backup file size for a transaction log backup

- file_excess_mb - excess space that is being used by transaction log

Based on the output I can see I have 5 databases that have a potential issue. So, once you have the list of databases to review you can then proceed with analyzing each one individually to determine if you wish to reduce the size of the log file, alter the backup schedule, or some combination there-of.

Next Steps

- Review, download and run Aaron Bertrand's improved sp_msforeachdb code for this tip as well as other queries you need to run across all databases.

- Run the above code in your environment to see if you have any potential transaction log size issues.

- Note that the amount of history to take into consideration when compiling maximum backup size is supplied via a templated parameter. To use this parameter you need to uncomment the line and simply use the keystroke combination of Ctl+Shift+M and supply the parameter value that signifies the number of days you want to take into consideration for historical backup information. To learn more about templates read this tip.

About the author

Tim Ford is a Senior Database Administrator with MindBody.

Tim Ford is a Senior Database Administrator with MindBody.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2011-04-25