By: Brady Upton | Updated: 2013-06-25 | Comments (4) | Related: > Database Design

Problem

Forwarded records in SQL Server can cause performance issues on heap tables because the record outgrows the page and the database engine uses pointers to reference the data. For those that don't know what a heap is, it is a table without a clustered index. The best practice is to have a clustered index on every table, however, sometimes there are cases when a clustered index is not needed. In this case, DBA's should be aware of all heap tables and should be concerned about forwarded records causing poor performance. In this tip, we'll discuss forwarded records and how fix them.

Solution

Forwarded records are records in a SQL Server table that have grown too large for the page that it currently resides on. These types of records can only be found in heaps because tables with a clustered index keep the data sorted based on the clustered index. With a heap, once the record outgrows the page, the record is moved to a new page and a forwarding pointer is left in the original location to point to the new location.

Let's look at an example of a forwarding record.

First, let's create a table and insert some records:

CREATE TABLE [dbo].[Demo](

[ID] [int] IDENTITY(1,1),

[Server] [nvarchar](50) NULL,

[DatabaseName] [nvarchar](100) NULL,

[timestamp] [datetime] default getdate())

GO

CREATE INDEX idx_Server ON Demo(Server)

GO

INSERT INTO Demo

(Server,DatabaseName)

VALUES ('Server1', 'DB1')

INSERT INTO Demo

(Server,DatabaseName)

VALUES ('Server2', 'DB2')

INSERT INTO Demo

(Server,DatabaseName)

VALUES ('Server3', 'DB3')

GO 100

Next, let's use the following DMV query to check our table:

SELECT

OBJECT_NAME(ps.object_id) as TableName,

i.name as IndexName,

ps.index_type_desc,

ps.page_count,

ps.avg_fragmentation_in_percent,

ps.forwarded_record_count

FROM sys.dm_db_index_physical_stats (DB_ID(), NULL, NULL, NULL, 'DETAILED') AS ps

INNER JOIN sys.indexes AS i

ON ps.OBJECT_ID = i.OBJECT_ID

AND ps.index_id = i.index_id

WHERE OBJECT_NAME(ps.object_id) = 'Demo'

Notice that the HEAP index type has 0 forwarded records.

Next we will change the table definition by modifying the DatabaseName column.

ALTER TABLE Demo ALTER COLUMN [DatabaseName] nvarchar(MAX)

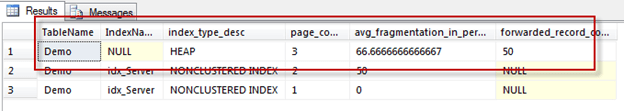

If we run the DMV query again you will see that the forwarded_record_count has changed (along with a lot of fragmentation):

The page count increased to 3 on the HEAP indicating that a new page was created and 50 forwarded records were created.

In a real world situation you will probably not know exactly when this happens so by changing the WHERE clause in the DMV query you can find all the forwarded records in a database. I would monitor heap tables at least monthly and if you notice high I/O on a heap, then that should be a hint that something needs to be looked at and possibly modified.

SELECT

OBJECT_NAME(ps.object_id) as TableName,

i.name as IndexName,

ps.index_type_desc,

ps.page_count,

ps.avg_fragmentation_in_percent,

ps.forwarded_record_count

FROM sys.dm_db_index_physical_stats (DB_ID(), NULL, NULL, NULL, 'DETAILED') AS ps

INNER JOIN sys.indexes AS i

ON ps.OBJECT_ID = i.OBJECT_ID

AND ps.index_id = i.index_id

WHERE forwarded_record_count > 0

On my Test DB, you will notice I have two HEAP tables that have forwarded records including the one I just created.

As discussed earlier, forwarded records can cause performance issues because the record locator points to a page and the DB engine has to go to that page, see the forwarding pointer, then go to the new page which can cause I/O issues.

There are two quick ways to fix forwarded records.

- Add a clustered index so the table won't be a HEAP.

- If adding a clustered index is not an option, use ALTER TABLE tablename REBUILD (only available in SQL Server 2008+) to rebuild the table (and also defragment).

ALTER TABLE Demo REBUILD

Next Steps

- Check out the MS Blog for more information regarding forwarded records.

- Learn more about Clustered tables vs. Heap Tables from Greg Robidoux's tip

About the author

Brady Upton is a Database Administrator and SharePoint superstar in Nashville, TN.

Brady Upton is a Database Administrator and SharePoint superstar in Nashville, TN.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2013-06-25