By: Daniel Farina | Updated: 2013-08-13 | Comments (13) | Related: 1 | 2 | 3 | 4 | 5 | 6 | > Fragmentation and Index Maintenance

Problem

Database physical file fragmentation isn't usually taken into consideration very often as a performance issue. But, if the data files are fragmented, the database engine will take longer to retrieve data because of seek overhead or rotational latency in mechanical disks. In addition, "A heavily fragmented file in an NTFS volume may not grow beyond a certain size", if you have Auto Grow enabled in some very unfortunate situations the process could fail with the error: "665 (The requested operation could not be completed due to a file system limitation.)".

In this tip we will view the impact of physical file fragmentation and how to solve it with Microsoft's Sysinternals tool Contig.

Solution

Physical file fragmentation is a matter of consideration when talking about system performance. But, we as DBA's don't give much importance to this topic and focus all of our energies on the database, which is our job, but sometimes we have to see the entire picture. In most cases, physical file fragmentation should be the last thing to check.

Let's see what can lead to file fragmentation.

- Many mid-sized organizations, to reduce costs, share the database server with other applications like SharePoint or a web server. In this scenario, the file system could be fragmented regardless of the database. And when the database needs to allocate disk space, it could be scattered.

- Repeated backup operations could lead to disk fragmentation, so you should backup to other disks or devices.

Impact of physical file fragmentation

Clustered indexes try to organize the index such that only sequential reads will be required. This is great if the .MDF is contiguous on disk, but not as helpful if the file is spread over the disk. Remember that SQL Server doesn't know about file allocation, that is an operating system task.

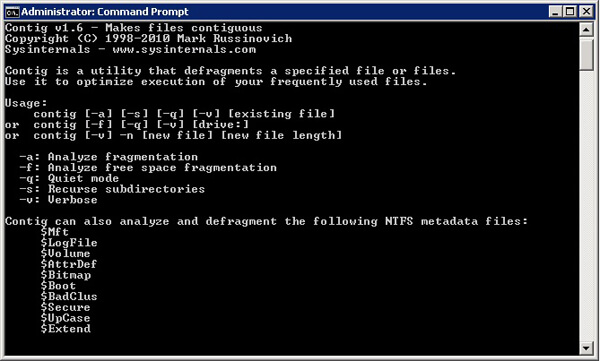

The Sysinternal's Contig tool

This is a free utility from Microsoft aimed to optimize individual files, or to create new files that are contiguous. You can download it for free here http://technet.microsoft.com/en-us/sysinternals/bb897428

It's very easy to use. With the contig -a option you can analyze the fragmentation of a specified file with the database online. To defrag the file simply run Contig [FileName].

Needless to say that in order to defrag a database, the database file must be OFFLINE.

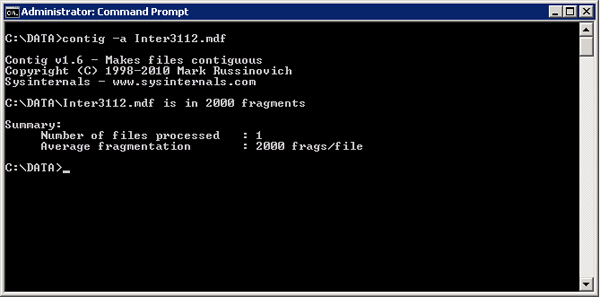

The test

I have created a physically fragmented database to show you the effects of physical file fragmentation on performance. The DB is 3.5 GB size and as you can see in the picture below it's heavily fragmented, there are 2000 fragments.

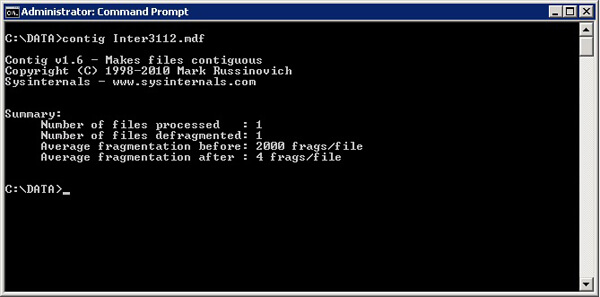

Now in order to defragment the data file we need to take the database OFFLINE and then run contig and then bring the database back ONLINE.

ALTER DATABASE [Inter3112] SET OFFLINE GO

Then we can use contig [Filename] as shown below to defragment. We can see after the command there are only 4 fragments.

ALTER DATABASE [Inter3112] SET ONLINE GO

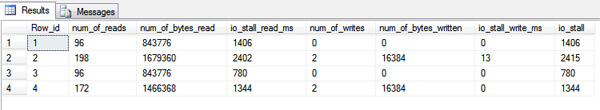

Checking Impact of File Fragmentation

The workload for the test was a simple DBCC CHECKTABLE.

DBCC CHECKTABLE ('inter3112.dbo.invoice')

GOWith the help of sys.dm_io_virtual_file_stats DMV which is out of the scope of this tip, we can see the total time, in milliseconds, users waited for I/O to be completed on the file (io_stall column).

I created a table in tempdb in order to store the results from sys.dm_io_virtual_file_stats and here is the script.

CREATE TABLE tempdb..file_stats

(

Row_ID INT IDENTITY (1, 1) ,

sample_ms INT,

num_of_reads BIGINT,

num_of_bytes_read BIGINT,

io_stall_read_ms BIGINT,

num_of_writes BIGINT,

num_of_bytes_written BIGINT,

io_stall_write_ms BIGINT,

io_stall BIGINT,

size_on_disk_bytes BIGINT,

CONSTRAINT Row_ID_PK PRIMARY KEY (Row_ID)

)

INSERT INTO tempdb..file_stats

SELECT sample_ms,

num_of_reads,

num_of_bytes_read,

io_stall_read_ms,

num_of_writes,

num_of_bytes_written,

io_stall_write_ms,

io_stall,

size_on_disk_bytes

FROM sys.dm_io_virtual_file_stats (DB_ID ('inter3112'), 1)

GORows 2 and 4 on the image below represent the execution of DBCC CHECKTABLE before and after the file defrag. As you can see, the io_stall value dropped about 1100ms after defragmenting from 2415ms to 1344ms.

Conclusion

Before you start defragmenting your database files you have to see what the best approach to your environment is and keep in mind that defragmenting is very I/O intensive. Also, this will be easier to do on smaller database files, because not as much contiguous disk space is required, and harder for larger databases.

Next Steps

- Read about fragmentation causes SQL Server Fragmentation What causes it (Part 3 of 9)

- Check how to avoid fragmentation SQL Server Fragmentation How to avoid it (Part 4 of 9)

- Create I/O Performance snapshots Creating IO performance snapshots to find SQL Server performance problems

- Identify I/O Bottlenecks How to Identify IO Bottlenecks in MS SQL Server

- Manage logical database fragmentation Managing SQL Server Database Fragmentation

- Read this tip and determine if Auto Grow is good for you Auto Grow, Auto Shrink and Physical File Fragmentation in SQL Server

About the author

Daniel Farina was born in Buenos Aires, Argentina. Self-educated, since childhood he showed a passion for learning.

Daniel Farina was born in Buenos Aires, Argentina. Self-educated, since childhood he showed a passion for learning.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2013-08-13