By: Aaron Bertrand | Updated: 2013-09-17

Problem

From time to time, I see a requirement to generate random identifiers for things like users or orders. People want to use random numbers so that the "next" identifier is not guessable, or to prevent insight into how many new users or orders are being generated in a given time frame. They could use NEWID() to solve this, but they would rather use integers due to key size and ease of troubleshooting.

Let's say we want all users to have a random number between 1,000,000 and 1,999,999 - that's a million different user IDs, all 7 digits, and all starting with the number 1. We may use one of these calculations to generate a number in this set:

    SELECT
        1000000 + (CONVERT(INT, CRYPT_GEN_RANDOM(3)) % 1000000),
        1000000 + (CONVERT(INT, RAND()*1000000) % 1000000),
        1000000 + (ABS(CHECKSUM(NEWID())) % 1000000);

(These are just quick examples - there are probably at least a dozen other ways to generate a random number in a range, and this tip isn't about which method you should use.)

These seem to work great at the beginning - until you start generating duplicates. Even when you are pulling from a pool of a million numbers, you're eventually going to pull the same number twice. And in that case, you have to try again, and sometimes try again multiple times, until you pull a number that hasn't already been used. So you have to write defensive code like this:

    DECLARE @rowcount INT = 0,
            @NextID INT = 1000000 + (CONVERT(INT, CRYPT_GEN_RANDOM(3)) % 1000000);

    WHILE @rowcount = 0
    BEGIN
        -- only insert if this candidate ID has not been used yet
        IF NOT EXISTS (SELECT 1 FROM dbo.Users WHERE UserID = @NextID)
        BEGIN
            INSERT dbo.Users(UserID /* , other columns */)
            SELECT @NextID /* , other parameters */;

            SET @rowcount = 1;
        END
        ELSE
        BEGIN
            -- collision: draw a new candidate and try again
            SELECT @NextID = 1000000 + (CONVERT(INT, CRYPT_GEN_RANDOM(3)) % 1000000);
        END
    END

Never mind that this is really ugly and doesn't even contain any transaction or error handling; this code will logically take longer and longer as the number of "available" IDs left in the range diminishes.
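A rough way to quantify that slowdown, assuming each candidate is drawn approximately uniformly from the N values in the range and k of them are already taken (N and k are labels introduced here for illustration, not names from the code):

```latex
P(\text{collision}) = \frac{k}{N}, \qquad
E[\text{attempts}] = \frac{1}{1 - k/N} = \frac{N}{N-k}, \qquad
E[\text{collisions}] = \frac{N}{N-k} - 1 = \frac{k}{N-k}
```

With N = 1,000,000, that works out to about 1 expected collision per insert once half the range is used, 9 at 90% used, and 999 at 99.9% used. This is only a back-of-the-envelope model; it ignores the cost of each existence check itself.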

Solution

One idea I've had to "solve" this problem is to pre-calculate a very large set of random numbers; by paying the price of storing the numbers in advance, we can guarantee that the next number we pull won't have already been used. All it requires is a table and some code to pull the next number from the set. One way to populate such a table:

    CREATE TABLE dbo.RandomIDs
    (
        RowNumber INT PRIMARY KEY CLUSTERED,
        NextID    INT
    ) WITH (DATA_COMPRESSION = PAGE);
    -- data compression used to minimize impact to disk and memory
    -- if not on Enterprise or CPU is your bottleneck, don't use it

    ;WITH x AS
    (
        SELECT TOP (1000000) rn = ROW_NUMBER() OVER (ORDER BY s1.[object_id])
        FROM sys.all_objects AS s1
        CROSS JOIN sys.all_objects AS s2
        ORDER BY s1.[object_id]
    )
    INSERT dbo.RandomIDs(RowNumber, NextID)
    -- ROW_NUMBER() starts at 1, so add 999999 to cover 1,000,000 through 1,999,999
    SELECT rn, ROW_NUMBER() OVER (ORDER BY NEWID()) + 999999
    FROM x;

This took about 15 seconds to populate on my system, and occupied about 20 MB of disk space (30 MB if uncompressed). I'll assume that you have 20 MB of disk and memory to spare; if you don't, then this "problem" is likely the least of your worries. :-)
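Before relying on the table, it may be worth a quick sanity check. The query below is a minimal sketch (the column aliases are made up for illustration) that confirms the row count, that no NextID value appears twice, and which range the values actually cover:

```sql
-- Sanity check on the pre-generated pool (aliases are illustrative only)
SELECT
    TotalRows   = COUNT(*),
    DistinctIDs = COUNT(DISTINCT NextID),  -- should equal TotalRows
    LowestID    = MIN(NextID),             -- compare against the range you intended
    HighestID   = MAX(NextID)
FROM dbo.RandomIDs;
```

If TotalRows and DistinctIDs differ, or the lowest and highest values fall outside the range you meant to reserve, it is cheaper to rebuild the table now than to find out after IDs have been handed out.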

Now, in order to generate the next ID, we can simply delete the lowest RowNumber available, and output its NextID for use. We'll use a CTE to determine the TOP (1) row so that we don't rely on "natural" order - if you add a unique constraint to NextID, for example, the "natural" order may turn out to be based on that column rather than RowNumber. We'll also output the result into a table variable, rather than insert it directly into the Users table, because certain scenarios - such as foreign keys - prevent direct inserts from OUTPUT.

    DECLARE @t TABLE(NextID INT);

    ;WITH NextIDGenerator AS
    (
        SELECT TOP (1) NextID
        FROM dbo.RandomIDs
        ORDER BY RowNumber
    )
    DELETE NextIDGenerator
    OUTPUT deleted.NextID INTO @t;

    INSERT dbo.Users(UserID /* , other columns */)
    SELECT NextID /* , other parameters */
    FROM @t;
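In practice you would probably want the delete and the insert to succeed or fail together, and to notice when the pool is empty. Here is one possible sketch, not a definitive implementation; the procedure name dbo.AddUserWithRandomID and the error message are hypothetical, while dbo.RandomIDs and dbo.Users are the tables used above:

```sql
-- Hypothetical wrapper procedure; name and error text are assumptions
CREATE PROCEDURE dbo.AddUserWithRandomID
AS
BEGIN
    SET NOCOUNT ON;
    SET XACT_ABORT ON;  -- a runtime error rolls back the whole transaction

    DECLARE @t TABLE(NextID INT);

    BEGIN TRANSACTION;

    ;WITH NextIDGenerator AS
    (
        SELECT TOP (1) NextID
        FROM dbo.RandomIDs
        ORDER BY RowNumber
    )
    DELETE NextIDGenerator
    OUTPUT deleted.NextID INTO @t;

    -- nothing was deleted, so the pool has run dry
    IF NOT EXISTS (SELECT 1 FROM @t)
    BEGIN
        ROLLBACK TRANSACTION;
        RAISERROR('No random IDs left in dbo.RandomIDs.', 16, 1);
        RETURN;
    END

    INSERT dbo.Users(UserID /* , other columns */)
    SELECT NextID /* , other parameters */
    FROM @t;

    COMMIT TRANSACTION;
END
```

If the insert into dbo.Users fails, the delete is rolled back with it, so the ID goes back into the pool instead of being silently lost.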

When we come close to exhausting the first million values (likely a good problem), we can simply add another million rows to the table (moving on to the range 2,000,000 to 2,999,999), and so on. It may be wise to set up some automation to periodically check how many rows are left, so that you can re-populate well in advance of actually running out of numbers.
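A check like that could be run from a scheduled job. The following is a sketch under stated assumptions: the @threshold value is an arbitrary low-water mark, @base has to be advanced by hand (or by your own bookkeeping) each time a new range is loaded, and the guard simply refuses to load a range that overlaps IDs already issued or queued:

```sql
DECLARE @threshold INT = 100000,   -- hypothetical low-water mark; tune for your insert rate
        @base      INT = 2000000;  -- first value of the next range (2,000,000 to 2,999,999)

IF (SELECT COUNT(*) FROM dbo.RandomIDs) < @threshold
BEGIN
    -- guard: do not load a range that overlaps existing or queued IDs
    IF EXISTS (SELECT 1 FROM dbo.Users     WHERE UserID BETWEEN @base AND @base + 999999)
    OR EXISTS (SELECT 1 FROM dbo.RandomIDs WHERE NextID BETWEEN @base AND @base + 999999)
    BEGIN
        RAISERROR('Range starting at %d is already in use.', 16, 1, @base);
        RETURN;
    END

    -- continue numbering after the highest remaining row so new IDs are used last
    DECLARE @maxRow INT = (SELECT COALESCE(MAX(RowNumber), 0) FROM dbo.RandomIDs);

    ;WITH x AS
    (
        SELECT TOP (1000000) rn = ROW_NUMBER() OVER (ORDER BY s1.[object_id])
        FROM sys.all_objects AS s1
        CROSS JOIN sys.all_objects AS s2
        ORDER BY s1.[object_id]
    )
    INSERT dbo.RandomIDs(RowNumber, NextID)
    SELECT @maxRow + rn,
           ROW_NUMBER() OVER (ORDER BY NEWID()) + @base - 1
    FROM x;
END
```

Because the new rows get higher RowNumber values than anything still in the table, the remaining IDs from the old range are handed out first.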

Performance Metrics for Generating Random Values in SQL Server

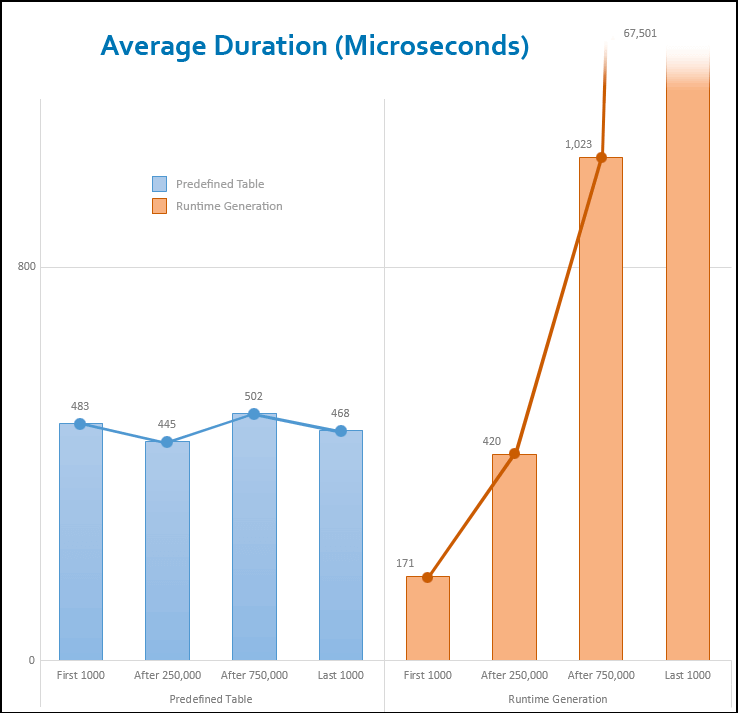

I ran both methods 1,000,000 times, filling the Users table up with these random UserID values. The following chart shows that, while generating a random number at runtime is faster out of the gates, the cost of duplicate checking (and retrying in the event of a collision) quickly overtakes the read cost of the predefined table, and grows rapidly and eventually exponentially as more and more values are used up:

In the first 1,000 inserts, there were zero collisions. In the last 1,000 inserts, the average collision count was over 584,000. This, of course, is a problem that doesn't occur when you *know* that the next number you pull can't possibly be a duplicate (unless someone has populated the Users table through some other means).

Conclusion

We can trade a bit of disk space and relatively predictable (but not optimal) performance for the guarantee of no collisions, no matter how many random numbers we've already used. This doesn't seem like a good trade in the early going, but as the number of ID values used increases, the performance of the predefined solution does not change, while generating random numbers at runtime gets steadily slower as more and more collisions are encountered.

Next Steps

- I encourage you to perform your own testing to see if a predefined set of random numbers might make more sense in your environment.


About the author

Aaron Bertrand (@AaronBertrand) is a passionate technologist with industry experience dating back to Classic ASP and SQL Server 6.5. He is editor-in-chief of the performance-related blog, SQLPerformance.com, and also blogs at sqlblog.org.

This author pledges the content of this article is based on professional experience and not AI generated.
