By: Aaron Bertrand | Updated: 2023-10-26 | Comments (4) | Related: > Dynamic SQL

Problem

Often when you are generating dynamic SQL you will want to validate the content of the query as opposed to just blindly executing it. Unfortunately, Management Studio can make it difficult to check that your command is complete, never mind to troubleshoot any errors that may occur when you do execute the command.

Solution

When you are building a dynamic SQL command, there are various ways that you can look at the text of the command without actually executing it. Let's look at a simple example. Let's say I want to generate an UPDATE STATISTICS command against all of the tables in a database. A simple way to do this would be:

DECLARE @sql NVARCHAR(MAX); SET @sql = N''; SELECT @sql = @sql + N' UPDATE STATISTICS ' + QUOTENAME(s.name) + '.' + QUOTENAME(t.name) + ' WITH FULLSCAN;' FROM sys.schemas AS s INNER JOIN sys.tables AS t ON s.[schema_id] = t.[schema_id]; SELECT @sql; -- EXEC sp_executesql @sql;

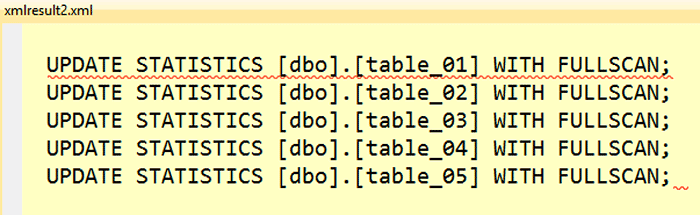

If I have 5 tables with relatively short names, this isn't a problem. The output (in Results to Text) looks like this:

UPDATE STATISTICS [dbo].[table_01] WITH FULLSCAN; UPDATE STATISTICS [dbo].[table_02] WITH FULLSCAN; UPDATE STATISTICS [dbo].[table_03] WITH FULLSCAN; UPDATE STATISTICS [dbo].[table_04] WITH FULLSCAN; UPDATE STATISTICS [dbo].[table_05] WITH FULLSCAN;

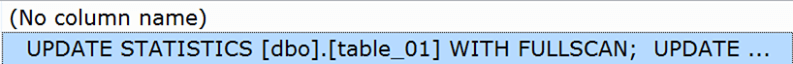

The challenge comes when the command is longer. We can simulate this by creating tables with slightly longer names; I created 10 tables with the following naming pattern, and in Results to Text the output is:

UPDATE STATISTICS [dbo].[my_tables_have_really_long_and_obnoxious_names_01] WITH FULLSCAN; UPDATE STATISTICS [dbo].[my_tables_have_really_long_and_obnoxious_names_02] WITH FULLSCAN; UPDATE STATISTICS [dbo].[my_tables_have_really_long_and_obnoxious_name

Notice that - according to the select - the command gets truncated. This isn't actually the case, which we can verify using LEN() or DATALENGTH():

SELECT LEN(@sql), DATALENGTH(@sql); ------ ------ 920 1840

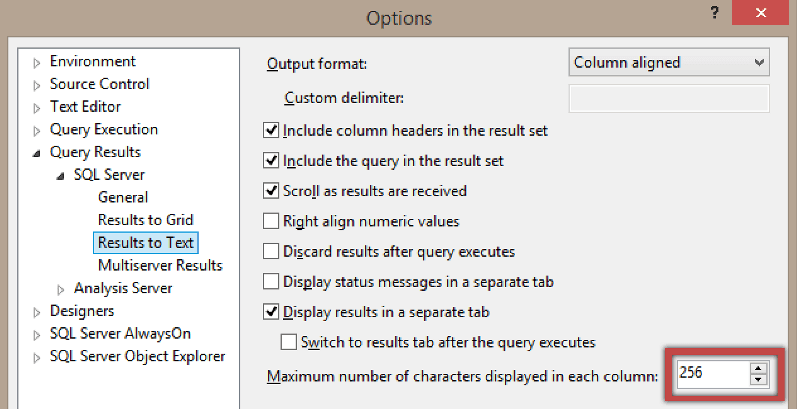

However, if we manually check the length of the output we see that, including the leading CR+LF, it is 256 characters. This is no coincidence; after all, the default in Management Studio is to only show 256 characters per column in Results to Text output (Tools > Options > Query Results > SQL Server > Results to Text):

We can increase this, but only up to a total character count of 8,192. This will bring SELECT - in Results to Text at least - up to the same limit afforded by the PRINT command. Both are obviously still problematic if the resulting string is going to exceed 8K.

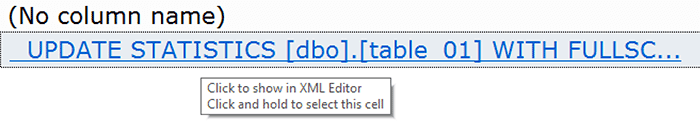

In Results to Grid, the output is a little harder to read, since the grid removes carriage returns and line feeds in order to fit the output into a single cell. Going back to my first five tables:

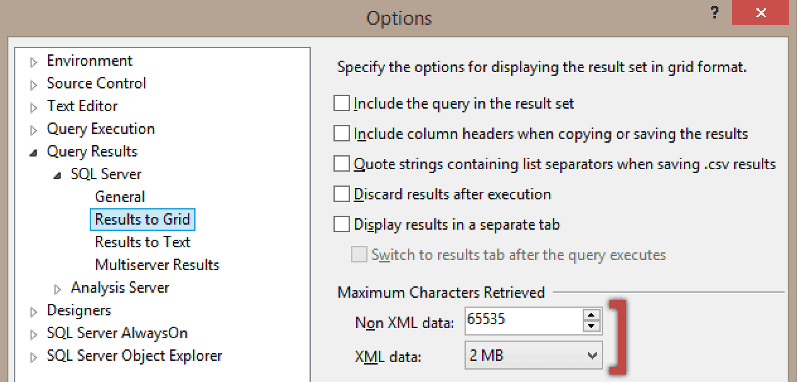

All the content is there, which you can verify by highlighting the cell, copying it, and pasting it into a new query window. Even the carriage returns are restored. However, this can still be a limiting approach, since the output of the grid is restricted to a maximum of 64K (65,535 characters):

There's a clue there about how we can move beyond this 64K barrier: use XML.

SELECT CONVERT(XML, @sql);

This generates the following output in the grid:

Some limitations here, too, though. For one, the only way to really look at the output is to click on the grid output; this will open in a new window, but it is not a true query window - there will be no color highlighting, and you can't execute from this window either:

I filed a bug about this ages ago, but it was dismissed.

Another problem with vanilla conversion to XML is that this will convert various entities into "safe" versions; for example, WHERE x > 10 will change to WHERE x > 10.

A better solution

It occurred to me that this would be yet another useful scenario for an ordered split function. By creating a table-valued function that splits a string on CR/LF, and returns each resulting sub-string in the original order, we could return all of the query - in either text or grid mode. First, let's create a Numbers table:

CREATE TABLE dbo.Numbers ( n INT PRIMARY KEY ) --WITH (DATA_COMPRESSION = PAGE);

For simplicity, I am going to limit the numbers (and hence the supported string length, in characters) to 1 million. You can make the Numbers table larger (and therefore support much longer strings) by adding an additional CROSS JOIN, which I've commented out, and changing the parameter to TOP.

;WITH n(n) AS

(

SELECT TOP (1000) number

FROM master.dbo.spt_values

WHERE [type] = N'P' AND number BETWEEN 1 AND 1000

ORDER BY number

)

INSERT dbo.Numbers(n)

SELECT TOP (1000000) n = ROW_NUMBER() OVER (ORDER BY n1.n)

FROM n AS n1 CROSS JOIN n AS n2 -- CROSS JOIN n AS n3

ORDER BY n;

With 1,000,000 rows, this table is 11 MB (with page compression) and 13 MB without. Certainly tolerable, especially since this will only be pulled into memory when you're testing dynamic SQL strings, or if you find it useful for other string splitting problems too (personally, I find Numbers tables and split functions immensely valuable).

You may want to support longer strings; of course you would probably want no more than a billion rows in this table - not only will that be a waste most of the time, your strings can't possibly be any bigger than that anyway, given that NVARCHAR(MAX) is limited to 2 GB (about a billion Unicode characters).

As an aside, supporting a billion rows will require a much larger table, which will likely take a much longer time to populate initially, and which will likely incur a healthy dose of log and tempdb activity. Do not try this on a small VM with limited disk space and memory.

Now, the inline table-valued function, which you can put in a central utility database, or master, or wherever you think it can be useful:

ALTER FUNCTION [dbo].[SplitStrings_Ordered]

(

@List NVARCHAR(MAX),

@Delimiter NVARCHAR(255)

)

RETURNS TABLE

WITH SCHEMABINDING

AS

RETURN

(

SELECT n, s = SUBSTRING(@List,n,CHARINDEX(@Delimiter,@List+@Delimiter,n)-n)

FROM

(

SELECT n FROM dbo.Numbers

WHERE n <= LEN(@List)

AND SUBSTRING(@Delimiter+@List,n,LEN(@Delimiter)) = @Delimiter

) AS y

);

GO

And finally, to present the output of the dynamic SQL command without worrying about truncation or XML entitization:

SELECT s FROM dbo.SplitStrings_Ordered(@sql, CHAR(13)+CHAR(10)) ORDER BY n;

The ORDER BY is important; it will ensure that each line gets returned in the correct sequence.

Next Steps

- If you frequently try to troubleshoot large, dynamic strings, consider employing a utility numbers table and split function to make this task easier.

- Read the following tips and other resources:

About the author

Aaron Bertrand (@AaronBertrand) is a passionate technologist with industry experience dating back to Classic ASP and SQL Server 6.5. He is editor-in-chief of the performance-related blog, SQLPerformance.com, and also blogs at sqlblog.org.

Aaron Bertrand (@AaronBertrand) is a passionate technologist with industry experience dating back to Classic ASP and SQL Server 6.5. He is editor-in-chief of the performance-related blog, SQLPerformance.com, and also blogs at sqlblog.org.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2023-10-26