By: Tim Smith | Updated: 2014-06-30

Problem

We currently have over 300 servers, most of which have at least 10 databases, and most of those databases contain at least 100 stored procedures. We know that we use only a small fraction of these procedures, and for our cleanup effort (or, in some cases, an effort to upgrade to SQL Server 2012), we would like to know how to automate finding unused stored procedures.

Solution

We have numerous ways to approach this problem, most of which can become a headache themselves if we find ourselves making too many assumptions.

Using DMVs to Tell What Stored Procedures Are Used

You can find many T-SQL solutions that look up the last execution date of a stored procedure (usually from sys.dm_exec_procedure_stats) and store it by regularly updating a logging table. This solution offers an effective approach for logging these data, provided that the last execution time and the other information in sys.dm_exec_procedure_stats are accurate. I don't prefer this method for the following reasons:

- If we restart the server, the information is flushed, and a procedure's row also disappears from sys.dm_exec_procedure_stats when its plan is removed from the cache. This means we must log these data regularly, and in particular before we restart the server.

- We have to be careful about assuming that if a stored procedure is not in this view, we can remove it. What if the stored procedure is called once a year? (I use a procedure for an inflation comparison against Federal Reserve data, which I call only once a year from an application.) We would need to collect data over a long period before we can make safe assumptions.

- Even with this approach, we would still be wise to ask application and database developers whether our assumptions are correct.
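To make the "collect data over a long period" point concrete, the minimal PowerShell sketch below refuses to name any candidates until the log covers a minimum window. It assumes we already have the list of user procedures in a database, the procedure names recorded in our logging table and the date logging began; the function name Get-UnusedProcedureCandidate and the 400-day default (a year plus a margin, to cover yearly procedures like the inflation comparison above) are hypothetical choices, not part of the approach described here.

```powershell
function Get-UnusedProcedureCandidate {
    # Hypothetical helper: returns procedures that never appeared in the
    # execution log, but only once the log covers at least $MinimumDays.
    [CmdletBinding()]
    param(
        [string[]]$AllProcedures,      # every user procedure in the database
        [string[]]$LoggedProcedures,   # names recorded in the logging table
        [datetime]$LoggingStarted,     # when regular logging began
        [int]$MinimumDays = 400,       # assumption: a year plus a margin
        [datetime]$AsOf = (Get-Date)
    )

    $observedDays = ($AsOf - $LoggingStarted).TotalDays
    if ($observedDays -lt $MinimumDays) {
        Write-Warning ("Only {0:N0} days logged; at least {1} are needed." -f $observedDays, $MinimumDays)
        return @()
    }

    # -notcontains compares case-insensitively, which suits case-insensitive collations.
    return @($AllProcedures | Where-Object { $LoggedProcedures -notcontains $_ })
}
```

Whatever it returns is still only a list of candidates to confirm with developers, as the last point above suggests.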

The T-SQL code below shows a variation of this often-recommended approach, using a stored procedure that would be called from a logging database on each server (one could also invert it so that it reports the procedures that are not used):

```sql
-- Table used in example
CREATE TABLE ProcedureLog(
    DatabaseName VARCHAR(100),
    ProcedureName VARCHAR(250),
    LastExecutionDate DATE,
    LastModifiedDate DATE
)
GO

-- Procedure requires the database and table to be passed to it
CREATE PROCEDURE stp_LogProcedures
    @db NVARCHAR(100), @tb NVARCHAR(250)
AS
BEGIN
    DECLARE @s NVARCHAR(MAX)

    SET @s = N'-- The procedure has been called again; we will re-insert it later with the new date.
;WITH RemoveExisting AS(
    SELECT @db DatabaseName
        , o.name ProcedureName
        , e.last_execution_time LastExecutionTime
        , o.modify_date LastModifiedDate
    FROM [sys].[dm_exec_procedure_stats] e
        INNER JOIN ' + QUOTENAME(@db) + '.[sys].[procedures] o ON e.object_id = o.object_id
        INNER JOIN [master].[sys].[databases] d ON d.database_id = e.database_id
    WHERE d.name = @db
)
DELETE FROM ' + QUOTENAME(@tb) + '
WHERE ProcedureName IN (SELECT ProcedureName FROM RemoveExisting)
    AND DatabaseName = @db

-- The procedure has been deleted, so we will not log it anymore.
DELETE FROM ' + QUOTENAME(@tb) + '
WHERE ProcedureName NOT IN (SELECT name FROM ' + QUOTENAME(@db) + '.[sys].[procedures])
    AND DatabaseName = @db

-- Insert the procedures that either (1) have new dates or (2) are new procedures and have been used.
;WITH AddNewExisting AS(
    SELECT o.name ProcedureName
        , MAX(e.last_execution_time) LastExecutionTime
        , MAX(o.modify_date) ModifiedDate
    FROM [sys].[dm_exec_procedure_stats] e
        INNER JOIN ' + QUOTENAME(@db) + '.[sys].[procedures] o ON e.object_id = o.object_id
        INNER JOIN [master].[sys].[databases] d ON d.database_id = e.database_id
    WHERE d.name = @db
    GROUP BY o.name
)
INSERT INTO ' + QUOTENAME(@tb) + ' (DatabaseName, ProcedureName, LastExecutionDate, LastModifiedDate)
SELECT @db
    , ProcedureName
    , LastExecutionTime
    , ModifiedDate
FROM AddNewExisting'

    EXEC sp_executesql @s, N'@db NVARCHAR(100)', @db
END
GO
```

Using a SQL Server Trace to Tell What Stored Procedures Are Used

Another approach is to use tracing to log when procedures are used; this can also be useful provided that (1) tracing runs all the time or (2) we assume that the timeframe sampled when importing trace data is long enough to support decisions about our findings. In both the example above and this tracing example, we capture what is executed over a period of time and assume that this reflects what's needed. That assumption can mislead us: someone may call a stored procedure as a "test" in production (this happens), in which case we would consider it used based on its execution. Since environments differ and, as we love to say in the SQL Server community, "it depends", these tools may be perfect for our environment, in which case we have a useful solution.

Using Application Source Code and PowerShell to Tell What Stored Procedures Are Used

I prefer an approach built around source control, one that suits any company, organization or client committed to using source control. This example can also work outside of source control, provided that we have an organizational structure in place for examining code. For our example, we will assume that we have one server dedicated to an application called SourceControl; many environments host multiple applications per server, which won't cause issues provided that we note this in code. The image below shows the three stored procedures that our application refers to and that have been scripted:

In the folder FindUnusedProcedures, we have C#, PowerShell, SQL and XML files. Hypothetically, in this example we don't know which procedures are used, and the folder may contain more procedures than the ones we see. Note that having procedures as scripts does not mean that our applications call them, so we should be careful about assuming, "They're scripted, therefore they're called in applications." Also, if we identify unused (or uncalled) stored procedures, we should be careful about removing them, since companies and clients may have different policies regarding unused stored procedures; most will prefer that you script them before removing them, though a few may prefer that they be removed outright. The table below is what we'll use in our Logging database to log unused stored procedures; the PowerShell script later in this tip populates it. I will also note that we can approach this problem in an inverted manner: we can log every time we find a stored procedure referenced in code, along with the file in which we found the reference. A simple COUNT and GROUP BY will then show what's used and what's not, based on the count.

```sql
CREATE TABLE UnusedStoredProcedures(
    DatabaseName VARCHAR(100),
    ProcedureName VARCHAR(250),
    CheckDate DATE DEFAULT GETDATE()
)
```

Where will we check whether a procedure is used? In this case, we will use a file location that holds all the pertinent .sql, .cs, .xml and .ps1 files. Source control offers clear benefits here because the main code lives in one central place. Even without source control, though, we can still investigate which procedures we don't use: each developer (per application) would run and log this script separately, and we would then combine the results to see what's used and what's not. This still simplifies the process of finding unused procedures; source control simply makes it easier still.
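Combining the per-developer results comes down to a set intersection: a procedure is unused overall only if every run flagged it, because a reference found in any developer's code means something may still call it. The sketch below assumes each run's unused list is collected as strings such as "DatabaseName.ProcedureName" and keyed by developer; Get-UnusedAcrossRuns is a hypothetical name.

```powershell
function Get-UnusedAcrossRuns {
    # Hypothetical helper: $UnusedByDeveloper maps a developer (or application)
    # to the procedures that developer's run flagged as unused. Only names that
    # EVERY run flagged are returned.
    [CmdletBinding()]
    param([hashtable]$UnusedByDeveloper)

    $result = $null
    foreach ($developer in $UnusedByDeveloper.Keys) {
        $flagged = @($UnusedByDeveloper[$developer])
        if ($null -eq $result) {
            $result = $flagged
        } else {
            # Keep only what this developer's run also flagged as unused.
            $result = @($result | Where-Object { $flagged -contains $_ })
        }
    }

    if ($null -eq $result) { return @() }
    return @($result | Sort-Object -Unique)
}
```

A hashtable keeps each run's list intact; passing a plain array of arrays in PowerShell risks one list being flattened into individual names.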

In PowerShell, we pass in the file location where all the files pertinent to an application reside, as well as the server that hosts the necessary databases and their objects. We loop through each procedure in each database and check whether any file under that location contains the procedure name. If PowerShell finds fewer than two references (searching for the name as a pattern), it logs the database and procedure name in the logging table. We require at least two references because the procedure's own script will generally be saved in the folder, and that script alone doesn't mean any other code calls the procedure.

```powershell
Function FindUnusedStoredProcedures ($server, $githubfolder, $smolibrary)
{
    ## Let's pass in our dll SMO reference
    Add-Type -Path $smolibrary
    $serv = New-Object Microsoft.SqlServer.Management.Smo.Server($server)

    ## Collect every *.sql, *.cs, *.xml and *.ps1 file once, not once per procedure
    $files = Get-ChildItem $githubfolder -Include @("*.sql", "*.cs", "*.xml", "*.ps1") -Recurse

    ## One connection to the Logging database, reused for every insert
    $scon = New-Object System.Data.SqlClient.SqlConnection
    $scon.ConnectionString = "SERVER=$server;DATABASE=Logging;Integrated Security=true"
    $scon.Open()

    try
    {
        ## We don't want to check System databases
        foreach ($d in $serv.Databases | Where-Object {$_.IsSystemObject -eq $false})
        {
            ## We don't want to check System procedures
            foreach ($proc in $d.StoredProcedures | Where-Object {$_.IsSystemObject -eq $false})
            {
                $p = $proc.Name
                ## Match the name literally; @() keeps .Count reliable for 0 or 1 matches
                $cnt = @($files | Select-String -Pattern $p -SimpleMatch)

                ## Do we find fewer than 2 references?
                if ($cnt.Count -lt 2)
                {
                    ## Log it; $d.Name avoids SMO's bracketed ToString(), and
                    ## parameters avoid quoting problems in object names
                    $record = New-Object System.Data.SqlClient.SqlCommand
                    $record.Connection = $scon
                    $record.CommandText = "INSERT INTO UnusedStoredProcedures (DatabaseName,ProcedureName) VALUES (@db, @proc)"
                    [void]$record.Parameters.AddWithValue("@db", $d.Name)
                    [void]$record.Parameters.AddWithValue("@proc", $p)
                    [void]$record.ExecuteNonQuery()
                    $record.Dispose()
                }
            }
        }
    }
    finally
    {
        $scon.Close()
        $scon.Dispose()
    }
}
```

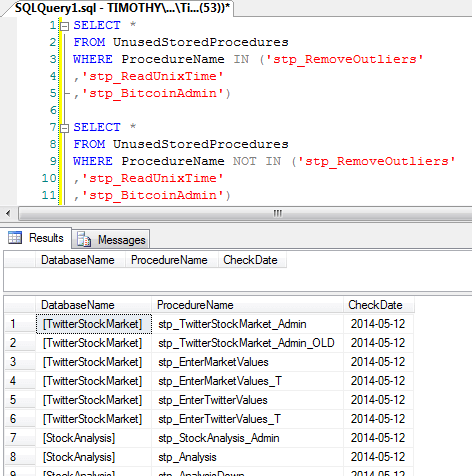

FindUnusedStoredProcedures -server "SERVER\INSTANCE" -githubfolder "C:\Users\Timothy Smith\Documents\GitHub\SourceControl\" -smolibrary "C:\Program Files (x86)\Microsoft SQL Server\100\SDK\Assemblies\Microsoft.SqlServer.Smo.dll"This function skips system databases and procedures, looks through every .sql, .cs, .xml and .ps1 file by using the Select-String function and passing in the procedure name as the pattern we're seeking. If PowerShell can't find more than one reference, it logs the database and procedure name. The below image shows the result of looping through an entire test server for this example with a specific folder being called (and as a note, we would want to point this at the largest folder possible where all our code is). As we can see, all of the procedures were logged as unused except the three being used for this example.
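The search step can also be separated from SMO so that we can try it against a test project before pointing it at a server. The sketch below is a hypothetical variation (Get-ProcedureReferenceCount is not part of the script above): it enumerates the files once rather than once per procedure and uses Select-String -SimpleMatch so each name is matched literally rather than as regex syntax. Like the function above, it counts matching lines, not files.

```powershell
function Get-ProcedureReferenceCount {
    # Hypothetical SMO-free version of the search step: enumerate the files
    # once, then count matching lines for each procedure name passed in.
    [CmdletBinding()]
    param(
        [Parameter(Mandatory = $true)][string]$Folder,
        [Parameter(Mandatory = $true)][string[]]$ProcedureName,
        [string[]]$Extensions = @('*.sql', '*.cs', '*.xml', '*.ps1')
    )

    # One directory walk instead of one per procedure.
    $files = @(Get-ChildItem -Path $Folder -Include $Extensions -Recurse -File)

    foreach ($p in $ProcedureName) {
        # -SimpleMatch treats the name literally rather than as a regex;
        # Select-String returns one result per matching line.
        $hits = @($files | Select-String -Pattern $p -SimpleMatch)
        [pscustomobject]@{ ProcedureName = $p; References = $hits.Count }
    }
}
```

A procedure reported with References below 2 corresponds to what FindUnusedStoredProcedures logs. To search other file types, add patterns such as "*.py" or "*.json" to -Extensions.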

As I wrote earlier, we could also take an inverted approach, shown in the T-SQL and PowerShell code below: a table structure, and PowerShell code that logs every reference it finds to a procedure along with the file in which it found it:

```sql
CREATE TABLE ReferencedStoredProcedures(
    DatabaseName VARCHAR(100),
    ProcedureName VARCHAR(250),
    FileNameAndInfo VARCHAR(2000),
    CheckDate DATE DEFAULT GETDATE()
)
```

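```powershell
Function FindReferencedStoredProcedures ($server, $githubfolder, $smolibrary)
{
    Add-Type -Path $smolibrary
    $serv = New-Object Microsoft.SqlServer.Management.Smo.Server($server)

    ## Collect the candidate files once
    $files = Get-ChildItem $githubfolder -Include @("*.sql", "*.cs", "*.xml", "*.ps1") -Recurse

    $scon = New-Object System.Data.SqlClient.SqlConnection
    $scon.ConnectionString = "SERVER=$server;DATABASE=Logging;Integrated Security=true"
    $scon.Open()

    try
    {
        foreach ($d in $serv.Databases | Where-Object {$_.IsSystemObject -eq $false})
        {
            foreach ($proc in $d.StoredProcedures | Where-Object {$_.IsSystemObject -eq $false})
            {
                $p = $proc.Name
                $cnt = @($files | Select-String -Pattern $p -SimpleMatch)

                ## One row per reference, so COUNT/GROUP BY reflects every match
                foreach ($match in $cnt)
                {
                    $record = New-Object System.Data.SqlClient.SqlCommand
                    $record.Connection = $scon
                    $record.CommandText = "INSERT INTO ReferencedStoredProcedures (DatabaseName,ProcedureName,FileNameAndInfo) VALUES (@db, @proc, @info)"
                    [void]$record.Parameters.AddWithValue("@db", $d.Name)
                    [void]$record.Parameters.AddWithValue("@proc", $p)
                    ## Match text (file, line number and line); quotes in code lines are safe
                    ## as a parameter, and Size 2000 truncates to the column width
                    $info = $record.Parameters.Add("@info", [System.Data.SqlDbType]::VarChar, 2000)
                    $info.Value = $match.ToString()
                    [void]$record.ExecuteNonQuery()
                    $record.Dispose()
                }
            }
        }
    }
    finally
    {
        $scon.Close()
        $scon.Dispose()
    }
}
```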

```powershell
FindReferencedStoredProcedures -server "SERVER\INSTANCE" -githubfolder "C:\Users\Timothy Smith\Documents\GitHub\SourceControl\" -smolibrary "C:\Program Files (x86)\Microsoft SQL Server\100\SDK\Assemblies\Microsoft.SqlServer.Smo.dll"
```

Now we have a record of every stored procedure referenced in our code. If we know that all the code we searched is active and in production, and that no other production or active code lies anywhere else, we can begin to assume that the procedures not listed in this table may be unused. In addition, PowerShell captures the line in which it found each reference, which gives us another way to evaluate the results if we have questions about what it returned.
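The COUNT and GROUP BY mentioned earlier can be sketched in PowerShell as well, for example on rows read back from ReferencedStoredProcedures. This hypothetical helper (Get-ReferenceSummary is an illustrative name) counts reference rows per procedure and, unlike a plain GROUP BY over the reference table, also lists procedures that have no rows at all, which are the ones that may be unused. It assumes each row exposes a ProcedureName property.

```powershell
function Get-ReferenceSummary {
    # Hypothetical helper: an in-memory equivalent of
    #   SELECT ProcedureName, COUNT(*) FROM ReferencedStoredProcedures GROUP BY ProcedureName
    # that also reports procedures with no reference rows at all.
    [CmdletBinding()]
    param(
        [string[]]$AllProcedures,   # every user procedure that was checked
        [object[]]$References       # rows with a ProcedureName property
    )

    # The hashtable plays the role of GROUP BY (its keys are case-insensitive).
    $counts = @{}
    foreach ($row in $References) {
        $counts[$row.ProcedureName] = 1 + [int]($counts[$row.ProcedureName])
    }

    foreach ($p in $AllProcedures) {
        # A missing key returns $null, which [int] converts to 0.
        [pscustomobject]@{ ProcedureName = $p; References = [int]($counts[$p]) }
    }
}
```

Filtering the output with Where-Object References -eq 0 gives the procedures no searched file refers to.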

But What About ...

We must face the reality that many environments are not well organized, so we should note where we may find exceptions and how to deal with them, or what architecture we might evaluate to avoid them.

- If an environment keeps active application code in the same folder (or location) as outdated or non-active code, we then run into the problem where we can't move forward until we separate the active from non-active code. Anything in production should be in a folder indicating that; I respect backups of applications, but they should not be in the same folder as something active in production.

- Some procedures may have vague names or names that are commonly used in code; this alone will create problems. Whatever naming convention we use for our stored procedures, names should be distinct enough that no other object could share them. If we don't follow this rule, either example above may return inaccurate results.

- If an application uses multiple databases that contain the same procedures, when only one database should have them, we may see both sets of procedures as valid. Because we want to exercise caution here, that default is useful. To determine which database's procedures are actually used, we would need to add a step that evaluates the connection in the code. For instance, if we only call procedures through C#'s System.Data.SqlClient.SqlCommand in the form SqlCommand cmd = new SqlCommand("EXECUTE stp_ourProcedure", scon);, this is much easier than if various applications access SQL Server in completely different ways (some in XML, some through a class, etc.).

- Another possibility is that a procedure call is commented out in code. We could expand our search to recognize comment lines, but if we do so, we can no longer treat every file the same way, because comment syntax differs between PowerShell, T-SQL and C#. One good practice when commenting out objects (in general) is to add a prefix to the object name so that, for example, stp_UnreferencedProcedure becomes com-stp_UnreferencedProcedure, which allows for automation regardless of the language.
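The naming issues above affect how we search: a plain substring search for stp_GetOrders also matches stp_GetOrdersByDate, and it cannot tell a com- marked reference from a live one. A hypothetical helper, New-ProcedureNamePattern, could build a .NET regular expression for Select-String -Pattern that requires the name to stand alone as an identifier and skips the com- prefix. This sketch assumes the com- convention described above is followed; it does not detect T-SQL, C# or PowerShell comment syntax.

```powershell
function New-ProcedureNamePattern {
    # Hypothetical helper: build a regex that matches a procedure name only as a
    # whole identifier and skips references carrying the "com-" marker.
    param([Parameter(Mandatory = $true)][string]$Name)

    # Escape the name so characters such as '.', '$' or '[' are matched literally.
    $escaped = [regex]::Escape($Name)

    # (?<!\w)    no letter, digit or underscore right before the name
    # (?<!com-)  not preceded by the "com-" commenting marker
    # (?!\w)     no letter, digit or underscore right after the name
    return '(?<!\w)(?<!com-)' + $escaped + '(?!\w)'
}
```

In the search functions, the Select-String call would use -Pattern (New-ProcedureNamePattern -Name $p) in place of the bare name (dropping -SimpleMatch, if present, since this is a regex). Select-String matches case-insensitively by default, so COM- is skipped as well; a line such as "-- EXEC stp_GetOrders" still matches, because only the naming marker is recognized.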

Finally, if we want to look through other files, for instance Python or JSON files, we would simply add the extension to the -Include @() array (for example, "*.py" or "*.json"). Some companies and clients haven't implemented source control; even so, running these scripts with each developer and comparing notes saves a great deal of time compared with guessing or assuming and then holding further meetings to confirm. I've witnessed these PowerShell scripts save 100+ hours in meetings alone.

Next Steps

- Begin organizing your servers and applications in a structured manner. As we've seen, doing this can save huge amounts of time.

- Create either alerts or logging to identify when things don't match the structure of your environment; for instance, flag user stored procedures whose names don't begin (or end) with your environment's naming convention.

- Test the script on a test database (or server) with a test project.

- Based on your test findings, identify where you need to make changes to (1) the script(s), (2) your environments and (3) your team organization.

- Remember, nothing beats good design and good planning.
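For the alerting or logging step in the list above, a check for names that break the naming convention could start as simply as the sketch below. The function name Get-NonConformingProcedure and the default stp_ prefix are assumptions for illustration; substitute whatever prefixes or suffixes your environment uses.

```powershell
function Get-NonConformingProcedure {
    # Hypothetical check: return procedure names that neither start with one of
    # the expected prefixes nor end with one of the expected suffixes.
    [CmdletBinding()]
    param(
        [string[]]$ProcedureName,
        [string[]]$Prefix = @('stp_'),   # assumption: this environment uses stp_
        [string[]]$Suffix = @()
    )

    foreach ($p in $ProcedureName) {
        $ok = $false
        foreach ($pre in $Prefix) {
            if ($p.StartsWith($pre, [System.StringComparison]::OrdinalIgnoreCase)) { $ok = $true }
        }
        foreach ($suf in $Suffix) {
            if ($p.EndsWith($suf, [System.StringComparison]::OrdinalIgnoreCase)) { $ok = $true }
        }
        # Emit only the names that matched no prefix and no suffix.
        if (-not $ok) { $p }
    }
}
```

The names could come from the same SMO loop used earlier (each $proc.Name where IsSystemObject is false), and the results could be logged the same way as UnusedStoredProcedures.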

About the author

Tim Smith works as a DBA and developer and also teaches Automating ETL on Udemy.

This author pledges the content of this article is based on professional experience and not AI generated.
