By: Tim Smith | Updated: 2014-11-05 | Comments (2) | Related: > TSQL

Problem

In the previous tip, we imported text data by individual word and count. Now we want to know a few ways to evaluate these data, whether tracking the change through time or reporting counts through word selection and filtering. How can we do so in SQL Server?

Solution

Outside of directly mining for words to assist us with research (which is covered later in this tip), we have a few basic tools to help us become familiar with reporting on our results for text mining. In our first example, we want to look specifically at various keywords and note their changes over time. We can compare these changes to changes in financial data; for instance, what is the change in frequency in mentions of the word "inflation" by Federal Reserve officials and what is the change in CPI? Are these correlated? As a quick note here, one of the more common phrases you'll hear anytime you say the word "correlate" is "Correlation is not causation" which is inaccurate. The correct statement is that correlation may or may not be causation and we don't know, which is why we use control groups and other comparative data sets. An example, if your genetic parents don't have offspring, you won't either and there are currently 0 empirical exceptions to this rule. Also, how many people have you met who have died without living first? These serve as good reminders that we don't know if we don't investigate.

In the case of text mining, words may predict patterns, or predict nothing. George Soros discussed investor expectations and how these expectations affect the market (in his book The New Paradigm For Financial Markets); in some cases, expectations may be expressed verbally, in other cases they may be expressed numerically. For instance, if we all thought the world would run out of water in four days, how would we act today? What type of words would we be saying? What words would cross all cultures (within the same language)? What words might differ by culture? These are examples of questions we ask and look to answer when we begin reporting on text mining, and we can see how word usage might indicate (remember: might) expectations of future events.

In our first example, we want to measure the change of word usage each month. We'll populate some test data, then look at the change in the word usage:

CREATE TABLE Words ( ---- We're removing the IDENTITY(1,1) property, only for testing purposes in this tip WordID BIGINT, Word VARCHAR(250) NULL ) INSERT INTO Words VALUES (1,'inflation') , (2,'deflation') , (8,'unexpected') , (9,'concerned') CREATE TABLE FedStatements( WordID INT, WordCount INT, WordDate DATE DEFAULT GETDATE() ) ---- WordIDs in this example (remember from tip 1 these would be stored in our table Words): ---- 1 = inflation; 2 = deflation; 8 = unexpected; 9 = concerned INSERT INTO FedStatements VALUES (1,5,DATEADD(MM,-3,GETDATE())) , (2,4,DATEADD(MM,-3,GETDATE())) , (8,4,DATEADD(MM,-3,GETDATE())) , (9,0,DATEADD(MM,-3,GETDATE())) , (1,2,DATEADD(MM,-2,GETDATE())) , (2,2,DATEADD(MM,-2,GETDATE())) , (8,1,DATEADD(MM,-2,GETDATE())) , (9,3,DATEADD(MM,-2,GETDATE())) , (1,1,DATEADD(MM,-1,GETDATE())) , (2,8,DATEADD(MM,-1,GETDATE())) , (8,6,DATEADD(MM,-1,GETDATE())) , (9,3,DATEADD(MM,-1,GETDATE())) , (1,12,GETDATE()) , (2,5,GETDATE()) , (8,3,GETDATE()) , (9,7,GETDATE())

;WITH WordRates AS( SELECT DENSE_RANK() OVER (ORDER BY WordDate) ID , * FROM FedStatements ) SELECT w2.WordDate , w2.WordID , w1.WordCount , w2.WordCount , (((w2.WordCount - w1.WordCount)/CASE WHEN w1.WordCount = 0 THEN NULL ELSE CAST(w1.WordCount AS DECIMAL(5,2)) END)*100) ChangeFromPreviousDate FROM WordRates w1 INNER JOIN WordRates w2 ON w1.ID = (w2.ID - 1) AND w1.WordID = w2.WordID

With rate calculations, we want to handle divisions by 0 by NULLing the results because we can't calculate a rate change from 0 to any number, as it involves division by zero. If a developer wants an alternative to looking at the previous row, they can also use the LAG function, provided they are using the correct SQL Server version to do so; since clients may not always have that capability, DENSE_RANK() offers huge advantages. The above example shows the rate change at which words are used or not used from one month to another using a self joined CTE, and we could use the same to measure the data by day, week, or year, depending on how are data are stored. We could also change the above query to filter the data (within the WordRates CTE) by month or year; and we see this in the below query (note that I've added aggregate data from the previous year):

-- Let's add some data from the previous year INSERT INTO FedStatements VALUES (1,27,DATEADD(YY,-1,GETDATE())) , (2,62,DATEADD(YY,-1,GETDATE())) , (8,14,DATEADD(YY,-1,GETDATE())) , (9,41,DATEADD(YY,-1,GETDATE())) -- Let's review our data before we look at the change ;WITH WordRates_Year AS( SELECT DENSE_RANK() OVER (ORDER BY YEAR(WordDate)) ID , WordID , YEAR(WordDate) WordYear , SUM(WordCount) WordCount FROM FedStatements GROUP BY WordID, YEAR(WordDate) ) SELECT * FROM WordRates_Year ---- Now let's calculate the rate change from the previous year ;WITH WordRates_Year AS( SELECT DENSE_RANK() OVER (ORDER BY YEAR(WordDate)) ID , WordID , YEAR(WordDate) WordYear , SUM(WordCount) WordCount FROM FedStatements GROUP BY WordID, YEAR(WordDate) ) SELECT w2.WordYear , w2.WordID , w1.WordCount , w2.WordCount , (((w2.WordCount - w1.WordCount)/CASE WHEN w1.WordCount = 0 THEN NULL ELSE CAST(w1.WordCount AS DECIMAL(5,2)) END)*100) ChangeFromPreviousYear FROM WordRates_Year w1 INNER JOIN WordRates_Year w2 ON w1.ID = (w2.ID - 1) AND w1.WordID = w2.WordID

With these basic examples, we can use data to compare with other data - alone they may predict or describe few trends (though, exceptions to this will exist). From a storage perspective, I will generally save the raw data to a file, and retain the results of calculations because the results of these algorithms will be used in analysis with other data, and this prevents the issue with too much data being unused.

Following with the same table structure in the above code, and from what we learned in part I, we can also look at statements based on language dictionaries. Let's consider that cultures tend to use their own language - we don't expect to hear Muse singing about the economic effects of hyperinflation and we don't expect the Federal Reserve to hold discussions about using time effectively. The reason for this is the differing culture: music has its own culture and concerns are reflected through word choice, and the same holds true for finance.

We can create language dictionaries by storing the WordIDs of certain words that relate to topics, either overall by culture or specific. Through testing, I've found the more specific, the better if we're trying to assess the theme. In the below example, we insert test data into our table, create two test language dictionaries, and then perform a JOIN, and returning the percent of words from the statement in the language dictionary. We see that 50% of our values from the table FedStatements line up with the language dictionary of monetary; therefore, we might conclude that this test statement by the Federal Reserve was a monetary statement (as opposed to a shift statement, in this example).

---- Let's clear our table for this next example:

TRUNCATE TABLE FedStatements

INSERT INTO FedStatements (WordID,WordCount)

VALUES (1,11) -- "inflation"

, (2,4) -- "deflation"

, (3,6) -- "cpi"

, (5,7) -- "expected"

, (6,4) -- "wages"

, (7,8) -- "unemployment"

, (17,2) -- "however"

CREATE TABLE DataDictionary_Shift(

WordID INT

)

CREATE TABLE DataDictionary_Monetary(

WordID INT

)

INSERT INTO DataDictionary_Shift

VALUES (5) -- "expected"

, (10) -- "cautious"

, (4) -- "unexpected"

, (17) -- "however"

INSERT INTO DataDictionary_Monetary

VALUES (1) -- "inflation"

, (3) -- "cpi"

, (2) -- "deflation"

, (4) -- "stagflation"

SELECT 'Monetary' AS Label

, (SUM(f.WordCount))/(SELECT CAST(SUM(WordCount) AS DECIMAL(13,4)) FROM FedStatements) AS Total

FROM FedStatements f

INNER JOIN DataDictionary_Monetary dd ON f.WordID = dd.WordID

WHERE dd.WordID IS NOT NULL

UNION ALL

SELECT 'Shift' AS Label

, (SUM(f.WordCount))/(SELECT CAST(SUM(WordCount) AS DECIMAL(13,4)) FROM FedStatements) AS Total

FROM FedStatements f

INNER JOIN DataDictionary_Shift dd ON f.WordID = dd.WordID

WHERE dd.WordID IS NOT NULL

A third way of looking at these data would be a pivot table that allows us to see the word usage for the dates. In this case, instead of rates, we would be looking at the numeric value and I've found that quite a few clients like to see their data reported this way. Of the more popular pivot tables, about 50% or more of my requests tend to follow the same format where we pivot off three columns and the below procedure automates this process - note the documentation in that it depends on the stored procedure stp_OutputColumns. If you prefer, you can write the code directly to the procedure; however, outputting columns is useful in many other non-production OLTP contexts, thus I keep it as its own procedure:

CREATE PROCEDURE stp_ThreeColumnPivot

@PivotColumn VARCHAR(250),

@CalcColumn VARCHAR(250),

@NonPivotColumn VARCHAR(250),

@SourceTable VARCHAR(250),

@Function VARCHAR(10)

AS

BEGIN

DECLARE @c NVARCHAR(4000), @sql NVARCHAR(MAX)

DECLARE @s TABLE(

S NVARCHAR(4000)

)

INSERT INTO @s

-- See dependency in notes below this procedure:

EXECUTE stp_OutputColumns @PivotColumn, @SourceTable

SELECT @c = S FROM @s

SET @sql = N'SELECT ' + @NonPivotColumn + ', ' + @c + ' FROM (SELECT t.' + @NonPivotColumn + ', t.' + @PivotColumn + ', t.' + @CalcColumn + ' FROM ' + @SourceTable + ' t) p

PIVOT (' + @Function + '(' + @CalcColumn + ') FOR ' + @PivotColumn + ' IN (' + @c + ')) AS pv;'

EXEC sp_executesql @sql

END

/*

CREATE PROCEDURE stp_OutputColumns

@c_s NVARCHAR(100),

@t NVARCHAR(200)

AS

BEGIN

DECLARE @oltpps VARCHAR(400)

SELECT @oltpps = 'SELECT STUFF((SELECT DISTINCT ''],['' + t.' + COLUMN_NAME + ' FROM ' + TABLE_NAME + ' t FOR XML PATH('''')),1,2,'''') + '']'''

FROM INFORMATION_SCHEMA.COLUMNS

WHERE TABLE_NAME = @t

AND COLUMN_NAME = @c_s

EXEC(@oltpps)

END

*/

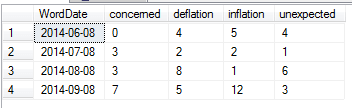

When we pivot the data set, we want to report each word as its own column and look at the count by month (a common format we find in Excel spreadsheets). We pass in five parameters to this procedure and it performs the work for us: @PivotColumn - the column that will become headers, @CalcColumn - the column that we want to perform the calculation, @NonPivotColumn - this is our "reported" column, @SourceTable - the underlying source of data, and @Function - our function. In the below case, we look at month, though we could change this to look at year by creating a table on the fly, using an aggregated SELECT statement and passing in the new table as the source for the above procedure. Before we can use this procedure, however, we need to create a table structure that is compatible with how it's used because we don't want WordIDs for headers. So, let's create a reporting table on the fly, call the procedure, then drop the reporting table:

SELECT w.Word , f.WordCount , f.WordDate INTO Report_FedStatements FROM FedStatements f INNER JOIN Words w ON f.WordID = w.WordID ---- We can double check our results: --SELECT * --FROM Report_FedStatements EXECUTE stp_ThreeColumnPivot 'Word','WordCount','WordDate','Report_FedStatements','MAX' DROP TABLE Report_FedStatements

Finally, I've used sentiment analysis for close to a decade and hold a less favorable view of it than some other data miners. While it has its use, more often than not, if a sentiment is intended and intense, we can find other verbal giveaways. In addition, the concept of sentiment (good vs. bad) assumes that the sentiment holds, the sentiment accurately reflects the person's view, and sentiment matters. Consider that no one talks, tweets, or writes about unicorns on the planet Mars eating blue watermelons, or that if an economic phenomena like the TED spread was historically high and no one was talking, tweeting or writing about it, that in and of itself would be a signal. We can see an example of this in history where modern biographers are fascinated by how John D. Rockefeller never talked about specific journalists, who wrote negatively about him, while none of these biographers are fascinated by the fact that Rockefeller also never spoke about the topic of broccoli's taste texture.

On the positive side, sentiment analysis with computers can make mistakes that human researchers can make in my observation and testing, so it has no advantage or disadvantage over human research. For instance, sarcastic statements (often used as a refutation for sentiment analysis) can confuse humans as easily as computers - suppose the researcher doesn't get the cultural reference - that would be a missed datum point. An automated algorithm might catch it if it is able to store track patterns in the person's speech and map them to cultural references and guess correctly at the intention. In addition, you don't need to speak to the average banker to learn that some people will say "This is how I spend my money" (at its value, humans and computers with these data would be misled) while the actual numbers from their bank account will completely contradict them; numeric data still has its precise advantage, and text data usually provide a useful addition to analysis.

Next Steps

- Test measuring text changes with other data and evaluate if you can see patterns using the above basic tools.

- If you look at neutral/filler words, like "the", can you also map out similar patterns to the other data?

- How would you use language dictionaries to filter out words that have multiple meanings?

About the author

Tim Smith works as a DBA and developer and also teaches Automating ETL on Udemy.

Tim Smith works as a DBA and developer and also teaches Automating ETL on Udemy.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2014-11-05