By: Bernard Black | Updated: 2014-11-07 | Comments | Related: > Backup

Problem

Due to HIPAA, (I work for a hospital) a vendor who has installed SQL Server along with an application on a hospital system, will not give you a login to the SQL Server. "It is mandated by HIPAA", he asserts. He further states "Only we and our application can connect to the database". As a dutiful DBA, you inquire about database backups and say: "Oh, since I can't connect and your application will be doing database backups, you'll be totally responsible for checking daily backups and recoverability". At this point the hemming and hawing starts and the vendor suggests you go to the backup directory and see if the backup file is sitting there. Every day, which does not make your day. Of course consistency checking, performance statistics, etc. are not even on the radar. You can always blame the vendor and get him to do something about those issues. But if you don't have a backup .....or one that's 3 weeks old, and you need to recover, your customer may want your scalp along with the vendor's. Also, what about MySQL database backups? Or how about PostGres backups? Any Windows Oracle setups? You want to check on these backups, but don't have any fancy tools and no budget for them. You now have over a dozen backups on a dozen servers that you have to check. Every day! What do you do?

Solution

As a DBA, you're paranoid. Things that should happen sometimes don't and the opposite, things that shouldn't happen sometimes do. You have SQL Servers you can't connect to. There are databases on these servers that might need to be recovered. The vendor won't let you connect. Maybe TCP/IP network connections are turned off along with named pipes. Maybe its a strange RDMS; I've had to check backups on a SIR 2000 RDMS. I bet not too many of you have seen one of those. Hopefully something on the system is dumping the database backups to disk file. You're only ability to check recoverability is to check when the file was made. If it's not being created when you think it should, its time to investigate.

To handle this, I created a PowerShell script that reads a table on my SQL Server DBA database on a server that I control. It checks the path and file name for when the backup file was created. The script dumps the results to a simple text report and notes whether the physical backup file is less than 24 hours old. This is emailed to you daily and a 15 minute process of checking now becomes a 1 minute process of reading the report.

In order to set this up, you do need to know which servers to monitor, the backup location and the database that should be backed up.

STEP 1: Create Tables for Backup File Properties

You need someplace to hold the filename and path information. I use a database called "is_dba" which contains various tables for general DBA work.

The table will contain 5 major pieces of info.

- The server the file resides on (is_server),

- The drive share OR the share to the backup folder (is_drive_letter). Usually I just create a share for the folder.

- The path (is_path), which I usually have 'none' because I'm using a share. The only time I use this is when I have a drive share, like D$, and then a path to where the data is.

- What string the backup file will start with (is_start)

- The backups file extension (is_extension) which often is BAK for SQL installs.

USE [is_dba]

GO

SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

SET ANSI_PADDING ON

GO

CREATE TABLE [dbo].[is_nonsql_db](

[is_server] [varchar](50) NOT NULL,

[is_drive_letter] [varchar](50) NOT NULL,

[is_path] [varchar](100) NULL,

[is_start] [varchar](30) NOT NULL,

[is_extension] [char](10) NOT NULL,

[is_status] [char](1) NULL,

[is_date_stamp] [smalldatetime] NULL,

[is_identity] [int] IDENTITY(1,1) NOT NULL

) ON [PRIMARY]

GO

CREATE CLUSTERED INDEX [IX_is_nonsql_db] ON [dbo].[is_nonsql_db]

(

[is_server] ASC,

[is_identity] ASC

)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, SORT_IN_TEMPDB = OFF, IGNORE_DUP_KEY = OFF,

DROP_EXISTING = OFF, ONLINE = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

GO

SET ANSI_PADDING OFF

GO

Here is a typical INSERT for the above table. Note that is_drive_letter field contains the share. The server name is "PROJECT" and the share name is "BACKUPS". The filename we'll be checking on is "WSS_Search.bak". You don't have to put in the full filename, only enough of it to differentiate it from other backup names. I used "WSS_Sea" and that was enough.

INSERT INTO [is_nonsql_db]( [is_server],[is_drive_letter],[is_path],[is_start],[is_extension],[is_status],[is_date_stamp]) VALUES( 'PROJECT','Backups','none','WSS_Sea','bak','A',Getdate())

STEP 2: PowerShell Script for Backup Verification

The script will read all the records in the is_non_sql table from database is_dba. It does this by creating a classical DataSet object from .NET and filling it with records. For each record inside this dataset loop, it will construct a path using a share or a drive share and path, along with a partial filename and extension. If a file is there that fits the bill it will print out the filename along with the modification date and a 'YES' if the file is within 24 hours, else 'NO'. If there is no file to report on it will print out an error. The loop continues until the last dataset record is read. The text file it has made has quite a few blank lines. We'll get rid of those blank lines by running it thru a SELECT-STRING cmdlet with a "\S" regular expression into another file. The new file will be nice and compact. Note that you'll have to change the server name in the script as well as the file locations for the output.

$SQLServer = "SERVERNAME" #use Server\Instance for named SQL instances! $SQLDBName = "is_dba"

$SqlQuery = "select is_server, is_drive_letter, is_path, is_start, is_start_len, is_extension, is_status, is_identity from is_nonsql_db order by is_server"

$SqlConnection = New-Object System.Data.SqlClient.SqlConnection

$SqlConnection.ConnectionString = "Server = $SQLServer; Database = $SQLDBName; Integrated Security = True"

$SqlCmd = New-Object System.Data.SqlClient.SqlCommand

$SqlCmd.CommandText = $SqlQuery

$SqlCmd.Connection = $SqlConnection

$SqlAdapter = New-Object System.Data.SqlClient.SqlDataAdapter

$SqlAdapter.SelectCommand = $SqlCmd

$DataSet = New-Object System.Data.DataSet

$SqlAdapter.Fill($DataSet, "Server_Names") | Out-Null

$SqlConnection.Close()

$file = "E:\SSIS\is_non_sql\ps_backups_file.txt"

write-output " Date of DB Backup Files " | out-file $file

write-output " Server Name File Name Alter Date < 24 Hrs ?" | out-file $file -append

write-output "------------ --------- ---------- ----------" | out-file $file -append

#Counter variable for rows

$intRow = 1

foreach ($row in $DataSet.Tables["Server_Names"].rows)

{

$Serv_Name = $row.is_server.trim() + " "

$Serv_Name20 = $Serv_Name.substring(0,19)

$instance = $row.is_server

###Write-Host $instance

if ($row.is_path -eq "none")

{

$path = "\\" + $row.is_server + "\" + $row.is_drive_letter + "\" + $row.is_start + "*." + $row.is_extension

}

else

{

$path = "\\" + $row.is_server + "\" + $row.is_drive_letter + "\" + $row.is_path + "\" + $row.is_start + "*." + $row.is_extension

}

$The_path = $path.trim()

Write-Host $path

####$path = "\\BHS-RLDBTEST\Backups\ReportServ*.bak"

$Daysback = "-30"

$Today = Get-Date

$Today_30 = $Today.AddDays($Daysback)

$Daysback = "-1"

$Today_1 = $Today.AddDays($Daysback)

#####Kudos to Chad Miller for next line.

SET-LOCATION C:

$F = Get-ChildItem $The_path -Recurse | Where {$_.LastWriteTime -ge $Today_30 } |Sort-Object {$_.LastWriteTime} -Descending | select -First 1

if ($F -ne $null) {

$outputArray = @()

foreach($F1 in $F)

{

$FName = $F1.Name + " "

$FName40 = $FName.substring(0,40)

$FWrTime = $F1.LastWriteTime.ToString("MM/dd/yyyy HH:mm")

$FLength = $F1.Length

###Write-Host $F1.Name

###Write-Host $FWrTime

###Write-Host $_.LastWriteTime

$difference = New-TimeSpan -Start $FWrTime -End $Today

$difference.Days

switch($difference.Days)

{

0 {$Message = " YES"}

default { $Message = " ....NO" }

}

$output = new-object psobject

$output | add-member noteproperty "Serv Name" $Serv_Name20

$output | add-member noteproperty "File Name" $FName40

$output | add-member noteproperty "Last WriteTime" $FWrTime

$output | add-member noteproperty "Less 24 Hr ?" $Message

$outputArray += $output

}

write-output $outputArray | sort "File Name" | format-table -autosize -HideTableHeaders | out-string -width 90 | out-file $file -append

}

else {

$err_out = "..........no files.........." + $row.is_server

write-output $err_out | out-file $file -append

} ##### end else null check

} ####end of server loop

#######....... Next line gets rid of blank lines in report file and sticks in new file

Select-String -Pattern "\S" -Path C:\Junk\ps_TEST.txt | ForEach-Object { $_.line

} | Set-Content -Path "E:\SSIS\is_non_sql\ps_backups_file_list.txt"

I really should mention that while I put this PowerShell script together, I got lots of help from all sorts of websites. Some but not all are: MSSQLTips.COM, powershell.com, stackoverflow.com (Chad Miller's tip on the SET-LOCATION c: helped immensely), powershellpro.com, windowsitpro.com, powershellmagazine.com, blogs.technet.com/b/heyscriptingguy. These sites have lots of tips/tricks on PowerShell.

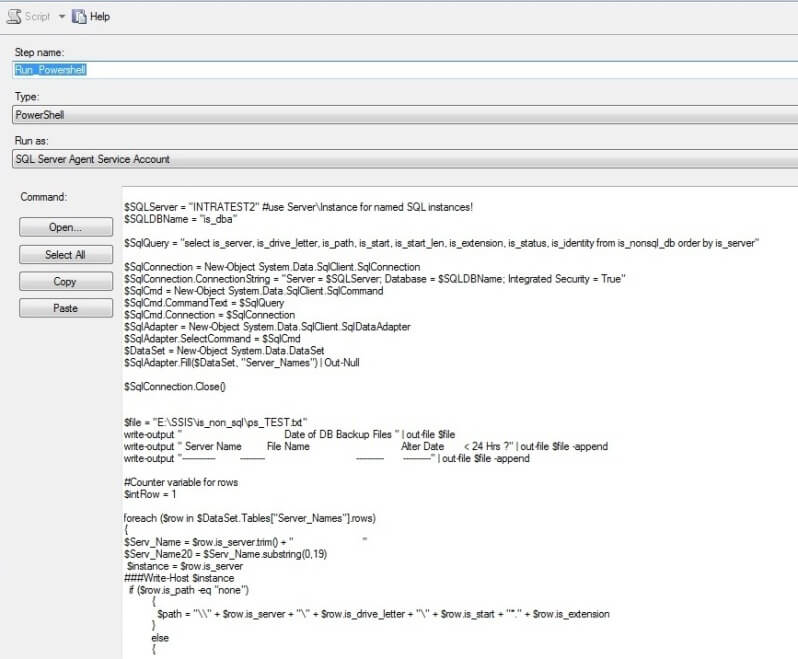

STEP 3: Automate the Report Generation with SQL Server Agent

Now you can put the above script in a SQL Server Agent Job. Use Management Studio and create a new job. The script will run under type=PowerShell. Simply cut and paste the script in and run the parser to make sure all is OK. Make sure you've got the correct server name in the script. The job needs to run under a dba account that has rights to the shares it will be looking at. The job reads the is_nonsql_db table and begins a loop for each record in the table. The script then puts together a string that it uses in GET-CHILDITEM statement. It will use those results to create a string that gets written out in a WRITE-OUTPUT line. Here is what the job setup would look like.

STEP 4: Reviewing the Report Data

Here is the output that goes to a good old text file. Note the error lines where there weren't any files to look at. The server names were altered to protect the innocent.

Date of DB Backup Files Server Name File Name Alter Date < 24 Hrs ? ------------ --------- ---------- ---------- ..........no files..........XXXXXeb1 XXXXXallcopy central_full.BAK 10/13/2014 18:23 YES ..........no files..........XXXXXuadramed XXXXXware3 tdds_backup.sql 10/14/2014 05:35 YES XXXXXware3 monitorauditlog_backup.sql 10/14/2014 02:24 YES XXXXXware4 tdds_backup.sql 10/14/2014 05:47 YES XXXXXware4 monitorauditlog_backup.sql 10/14/2014 02:48 YES XXXXXprodascent ACSystem.mdf 10/13/2014 22:30 YES XXXXXprodascent ACSystem_log.ldf 10/13/2014 22:30 YES XXXXXi CISChartingDB8_db_201410132121.BAK 10/13/2014 21:25 YES XXXXXohnson JCIAuditTrails_db_201410131712.BAK 10/13/2014 17:12 YES XXXXXharmemr1 MPIFDB_full.BAK 10/13/2014 17:17 YES XXXXXable2 ABACUS.BAK 10/13/2014 22:17 YES XXXXXbes SOPHOS3_full.BAK 10/13/2014 22:23 YES XXXXXctx-es EdgeSight_backup_201410132121.bak 10/13/2014 21:22 YES XXXXXre-db1 BTIHW.db 10/13/2014 23:15 YES XXXXXATALINK lfs_main_current.bak 10/14/2014 00:00 YES XXXXXs mantisbt_backup.sql 10/13/2014 21:29 YES XXXXXCDB MHS_backup.bak 10/13/2014 22:15 YES XXXXXs2 CMAlarmLog_full.BAK 10/13/2014 19:28 YES XXXXX1 ht2029_dump.BAK 10/13/2014 20:17 YES XXXXXct WSS_Search.bak 10/13/2014 21:32 YES XXXXXct WSS_Con.bak 10/13/2014 21:32 YES XXXXXct SharePoint_Con.bak 10/13/2014 21:31 YES XXXXXct SharePoint_Ad.bak 10/13/2014 21:31 YES XXXXXct PWA_Rep.bak 10/13/2014 21:31 YES XXXXXct PWA_Arch.bak 10/13/2014 21:30 YES XXXXX3 EncoreDB_full.BAK 10/14/2014 10:50 YES XXXXX WSS_Content_DB_Full.BAK 10/13/2014 19:09 YES XXXXX SharePoint_AdminContent_923209e1-60eb-43 10/13/2014 18:57 YES XXXXXNPRODDB variansystem_2014_10_13_23_8_2_773.bak 10/12/2014 23:09 NO

STEP 5: Accessing the Report

There are two good ways to view the report. Create a shortcut on your desktop or create a job to email the report to you. Here's how to email it to yourself. Create another job, or another step in your report job. This job/step will run a T-SQL script that invokes Database Mail. Of course it assumes you've turned on Database Mail. It grabs a text file as an attachment and sends it out. Here is the code.

EXEC sp_send_dbmail @profile_name='DBA', @recipients='[email protected]', @subject='Outcast DB Backups Report', @body='Attached shows SQL Databases that have grown since yesterday', @file_attachments= 'E:\SSIS\is_non_sql\ps_backups_file_list.txt'

Next Steps

- Create some alerts based on report results

- Add vendor to email list to rub his/her nose in it

- Get last SQL DB backup date with PowerShell

- Do SQL Backups with PowerShell

- All PowerShell Tips

About the author

Bernard Black is a DBA for Beacon Health System in South Bend, IN and has been working with databases for over 15 years.

Bernard Black is a DBA for Beacon Health System in South Bend, IN and has been working with databases for over 15 years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2014-11-07