By: Koen Verbeeck | Updated: 2015-10-29 | Comments | Related: > Integration Services Best Practices

Problem

Recently there was the webcast SSIS Best Practices and Tuning Tips on MSSQLTips.com. One of the best practices is to turn on logging in the SSIS package and to log essential information to a SQL Server table. How should this be implemented?

Solution

There are several possibilities in SQL Server Integration Services (SSIS) to handle logging:

- Using the package log providers. These will be discussed in this tip and can be used in all versions of SSIS.

- When using the project deployment model in SSIS, the SSIS catalog handles logging for you. The logging data is stored inside the SSISDB database. This option is only available since SSIS 2012 and up.

- You can implement your own custom logging. Here, you can use the Execute SQL Tasks for example to write log information to a table. This option is the most flexible, but requires the most work as well. For more information, take a look at the tip Custom Logging in SQL Server Integration Services SSIS.

The SSIS log providers offers you a method of logging pre-defined events to a certain destination. The possible destinations are:

- Text file - Most likely the fastest option available.

- SQL Server Table - The biggest advantage of this destination is that you can easily query the log results.

- SQL Server Profiler - The events are logged to a trace filed, which can be captured in Profiler.

- Windows Event log

- XML file

From my experience with real-life production systems only the text file or the SQL Server table are used, although the XML file has been spotted in very rare occasions. My personal preference is the SQL Server table because you can query it, but it's also easy to archive or compress data. In this tip I'll focus on the SQL Server table since it is the most flexible option, but the text file is very similar to setup.

Logging to a SQL Server Table

To test the logging, I used a very simple package with only one Execute SQL Task on the control flow. This SQL Task will throw a divide-by-zero error, which we will try to capture in the logging.

To go to the logging configuration, you can either right-click on the control flow and select Logging... or you can go to the SSIS menu in the task bar and select Logging... over there.

This will open up the logging configuration menu. The first thing to do is select the type of logging provider you want to use and to click the Add... button.

This will add a new log provider, which you still have to configure. Note that it's possible to add multiple log providers into one single package. The next step is to specify a connection manager to the database where you want to keep the logging table. It's not possible to specify a table: SSIS will create one for you with a specific name and a specific set of columns.

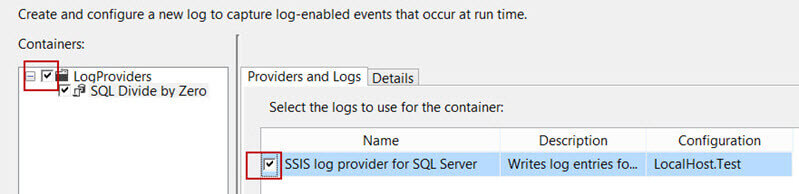

Now you can specify for which tasks logging should take place. By deselecting tasks in the left pane, you can configure for which tasks the log provider should log events. Don't forget to select the log provider in the right pane as well. Note: LogProviders is the name of my test package.

The final step is selecting which events the log provider should write to the SQL Server log table. These can be found in the Details tab.

The most frequently used events are OnError and OnWarning. Other events are more likely used for specific debugging purposes. Some task or containers may have specific events that are not available for other tasks. For example, the Diagnostic event is only available at the package level.

Now let's run the package to take a look at the generated logging. SSIS will create a new table in the configured database with the name dbo.sysssislog. You can find this table listed under the system tables.

This table has the following columns:

- id - An identity column which is also the primary key of the table

- event - The type of log event, for example OnError

- computer - The machine running the package

- operator - The user ID running the package

- source - The task or package that generated the event

- sourceid - The GUID of the source task, container or package

- executionid - A GUID unique to the execution of a package. You can use this ID to link different logging rows to one specific execution.

- starttime - Start time of the task or container

- endtime - End time of the task or container

- datacode - An optional integer code which can contain the result of running the task or container. E.g. 0 corresponds with success, 1 with failure.

- databytes - A byte array specific to the log message

- message - a message specific to the event logged. This can be for example the error message or the warning.

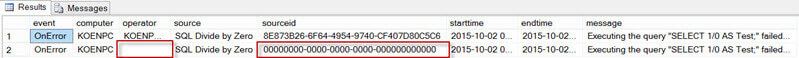

We can use the following statement to query the log table:

SELECT [event] ,[computer] ,[operator] ,[source] ,[starttime] ,[endtime] ,[message] FROM [dbo].[sysssislog];

With logging defined at the package level, the following rows are returned:

SSIS Logging Provider Considerations

As can be seen in the previous screenshot, the package itself logs the start and the end of the package execution. There's also the error message at the package level and a warning that the maximum amount of errors have been reached. The Execute SQL Task also logs the same error message. Because errors propagate from the source of the error all the way up to the package level, it's possible the same error message gets logged multiple times. The tip Continue a Foreach loop after an error in a SQL Server Integration Services package explains error propagation in more detail and also explains how you can prevent this if needed.

Another method is to simply enable logging only on the Execute SQL Task.

By doing so, we can reduce the logging to only one single row:

This really shows the flexibility of the SSIS log providers: you can choose logging preferences up to any task or container. Furthermore, in the Details tab of the log provider, you can click the advanced button to optionally choose which columns are being logged.

For the deselected columns, the log provider will log default values to the log table.

The logging providers are very flexible and ideal for logging errors and warning messages, but it can be cumbersome to retrieve other information, such as the number of rows written to a destination in a data flow. One option would be to log the OnInformation event, as this event logs a message stating how many rows have been written to the destination component.

You could filter the logging table for messages like this and then parse the message to get the number of rows. The built-in logging of the SSIS catalog or custom logging are however more straight forward methods for retrieving row counts.

Logging from a Script Task

As mentioned before, some tasks have specific events. The script task is no exception: it has a special event called ScriptTaskLogEntry that allows you to log messages to the logging table.

To actually log from the script task, you need to use the Dts.Log method which accepts three parameters:

- Log message

- Datacode

- Databytes

The first one is the most important one of course, the other two can just be dummy values.

When we run the package, we can see the custom log message in the logging table:

Conclusion

In this tip we have discussed the integrated logging providers in an Integration Services package. These logging providers can log information to various destinations and are very flexible in their use. You can specify which tasks or containers can log messages, which events are logged and which columns are being logged.

Next Steps

- If you are using an Integration Services version below 2012, make sure logging is turned on to improve troubleshooting and debugging.

- More tips about logging in SSIS:

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2015-10-29