By: Daniel Farina | Updated: 2015-12-01 | Comments (1) | Related: More > Integration Services Development

Problem

You have a SQL Server Integration Services package to import a text file, but it contains binary zeros 0x00 instead of spaces and the strings get truncated. In this tip I will show you a workaround for this issue.

Solution

When you work as a SQL Server Integration Services developer usually you have to deal with heterogeneous data sources. Flat files are the most used container for information interchange between different platforms. This is especially the case if the data comes from a mainframe, but sometimes the data does not come as expected.

What is a binary zero and why it matters?

The binary zero is the hexadecimal number 0x00. We call it a binary zero because of how this zero is stored. For example, if you have a text file containing a numeric column with the number zero, if you open the file with a Hex editor you will see that the number is stored as 0x30. In contrary, if your text file contains a binary zero and you open it with Notepad or any other text editor you will see a regular blank space like those between words, so you will not notice its presence.

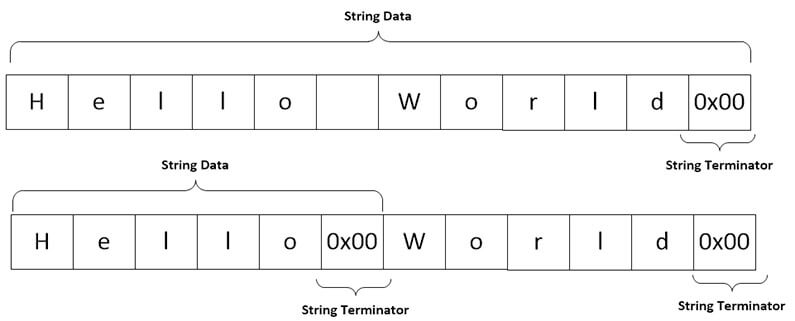

The problem with binary zeros is that they are often used as string terminators in Integration Services as well as many other programming languages. When Integration Services processes a string column containing a binary zero, Integration Services will consider the first occurrence of the binary zero as the string terminator and will truncate the remaining data. The next image will illustrate this concept.

According to Microsoft it is not a bug, it's a feature by design (https://support.microsoft.com/en-us/kb/922648). They suggest that if text contains a binary zero you should use a binary data type.

Why a flat file can contain a binary zero?

This is very common when you process flat files created from a mainframe environment. It's because the Cobol programmer didn't initialize the record structure that he used to create the text file.

Let's walk through an example

First we create a sample database.

USE [master] GO CREATE DATABASE [TestDB] CONTAINMENT = NONE ON PRIMARY ( NAME = N'TestDB_file1', FILENAME = N'E:\MSSQL\TestDB_1.mdf', SIZE = 128MB , MAXSIZE = UNLIMITED, FILEGROWTH = 64MB) LOG ON ( NAME = N'TestDB_log_file1', FILENAME = N'E:\MSSQL\TestDB_1.ldf', SIZE = 8MB, MAXSIZE = 2048GB, FILEGROWTH = 8MB) GO ALTER DATABASE TestDB SET RECOVERY SIMPLE GO

Now we create the customers table.

USE TestDB

GO

IF OBJECT_ID('Customers','U') IS NOT NULL

BEGIN

DROP TABLE Customers

END

GO

CREATE TABLE Customers

(

CustomerID INT IDENTITY (1, 1) NOT NULL,

CustomerName VARCHAR (50) NOT NULL,

CustomerAddress VARCHAR (50) NOT NULL,

CustomerCategory TINYINT NOT NULL,

CustomerBalance DECIMAL(10,2) NOT NULL

)

The next script will insert some test data that you can use to create a sample test file and modify it with a hex editor. Also you can download a test file and the code used in this tip from this link.

USE TestDB

INSERT INTO dbo.Customers

( CustomerName ,

CustomerAddress ,

CustomerCategory ,

CustomerBalance

)

SELECT 'Stefanie Alexander' ,

'749 East Rocky New Road' ,

1 ,

81594.46

UNION ALL

SELECT 'Lee Buckley' ,

'30 North Green First Avenue' ,

4 ,

99099.99

UNION ALL

SELECT 'Daniel Gallaghery' ,

'377 East Green Oak Avenue' ,

5 ,

56397.47

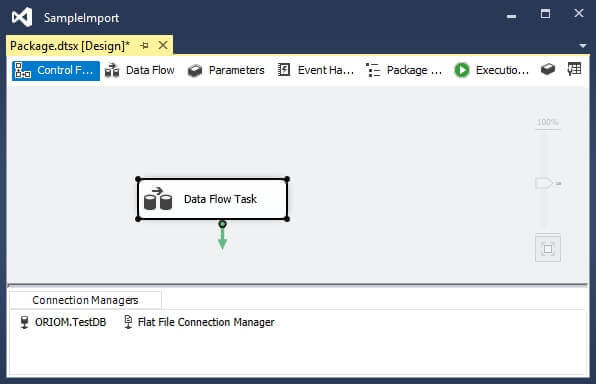

Create a new Integration Services package with one data flow task, a flat file connection and a database connection.

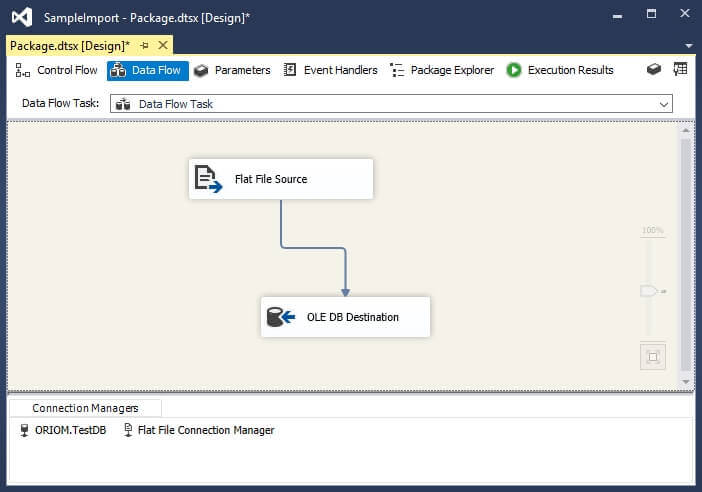

In order to configure the columns for the flat file I suggest that you use Ron Kirchgessner's tip SSIS - Configure a source flat file with a fixed width from an existing table. Then set up the data flow task with one OLEDB Destination to the customers table and a flat file source pointing to the sample file location.

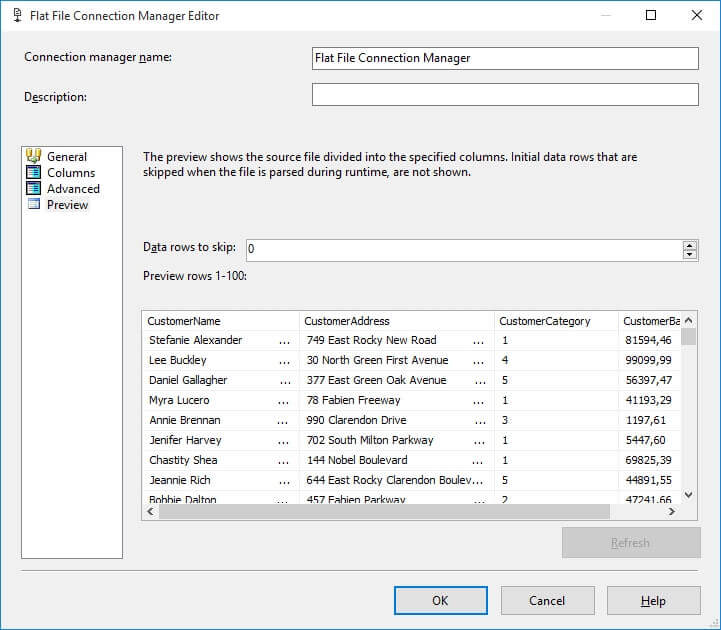

If we take a look at the preview page of the flat file connection we can see that it shows the data properly.

Now if we run the package and then take a look at the table we can see that data has been truncated.

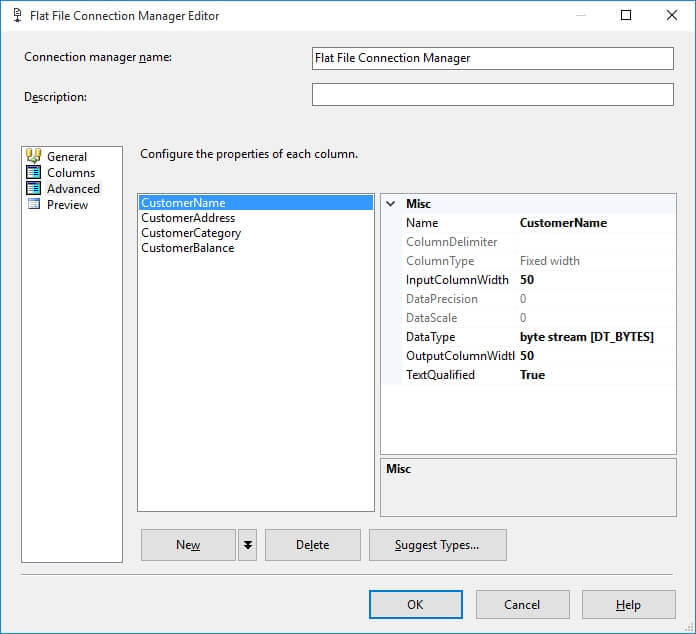

In order to fix this we must first change the data types of string columns CustomerName and CustomerAddress from String (DT_STR) to Bytestream (DT_BYTES) as shown on the image below.

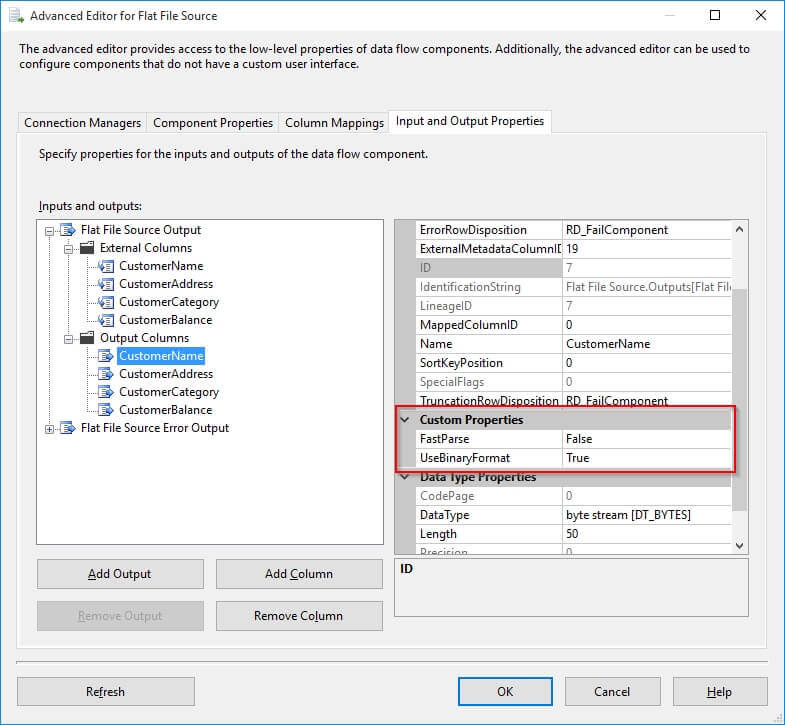

Although we have specified that those columns are of bytestream datatype, we must specify in the flat file source advanced editor that the data needs to be treated as a binary format.

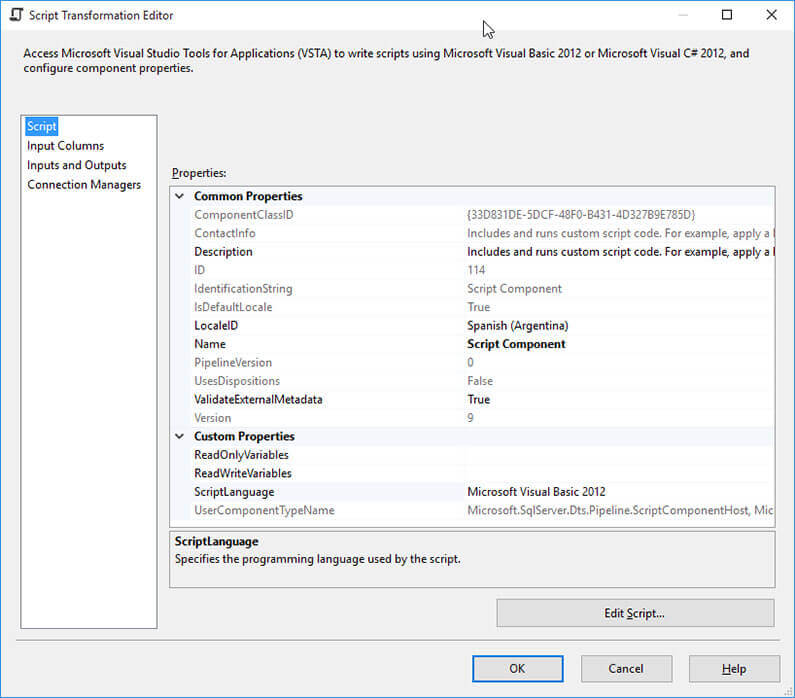

The next step is to add a Script component transformation task. I feel more comfortable using VB.Net to code, but if you prefer you can use C# too.

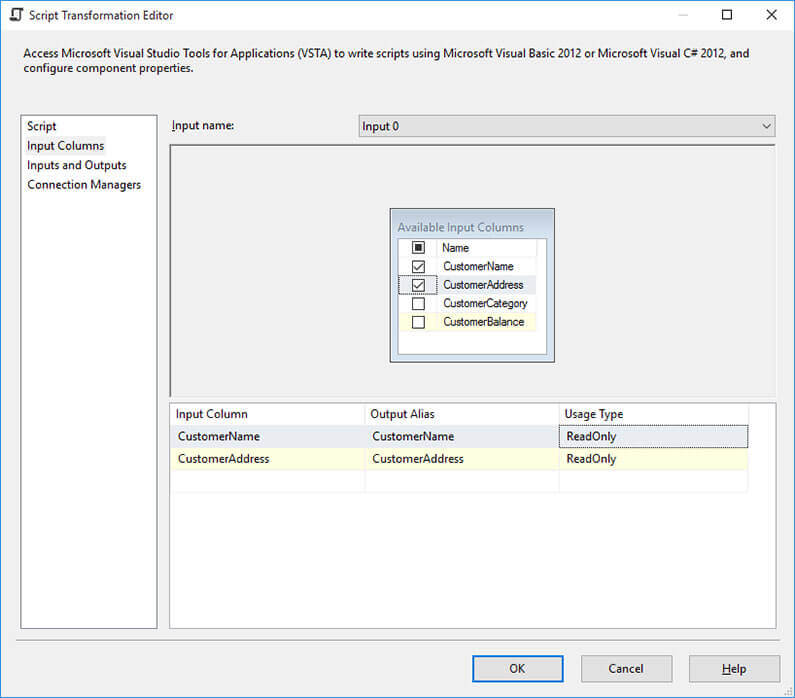

Select the CustomerName and CustomerAddress columns as read only input on the Input Columns page.

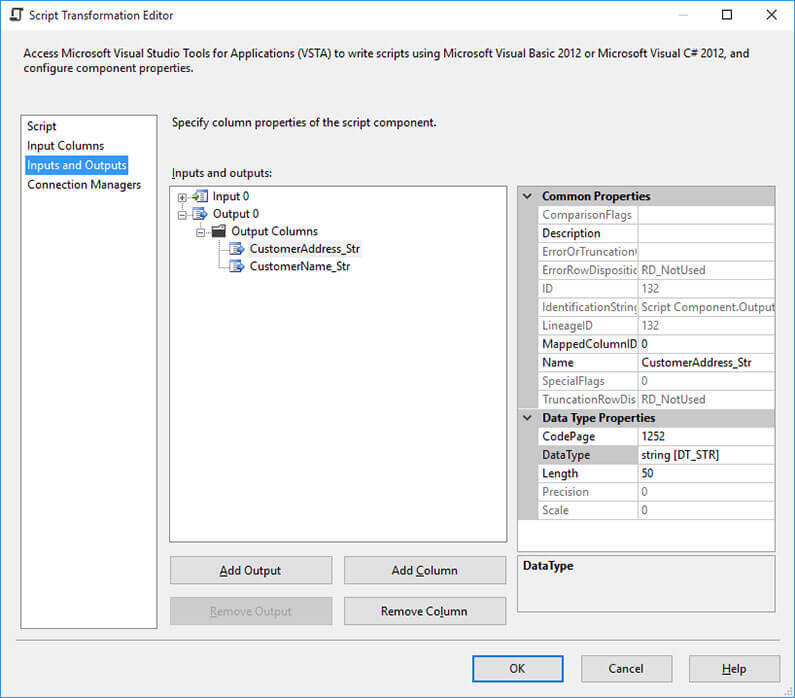

On the Inputs and Outputs page add two output columns CustomerName_Str and CustomerAddress_Str of string data type.

Paste the following VB .NET code into the script editor. This code replaces any occurrence of byte zero to hex byte 20 which is the byte code for a space character. After this, on the OLEDB destination map the columns CustomerName_Str and CustomerAddress_Str to CustomerName and CustomerAddress respectively.

Public Overrides Sub Input0_ProcessInputRow(ByVal Row As Input0Buffer)

Row.CustomerNameStr = ReplaceBinaryZero(Row.CustomerName)

Row.CustomerAddressStr = ReplaceBinaryZero(Row.CustomerAddress)

End Sub

Private Function ReplaceBinaryZero(ByVal ByteStream As Byte()) As String

Dim i As Integer

For i = 1 To ByteStream.GetLength(0) - 1

If ByteStream(i) = 0 Then

ByteStream(i) = &H20

End If

Next

ReplaceBinaryZero = System.Text.ASCIIEncoding.ASCII.GetString(ByteStream).Trim

End Function

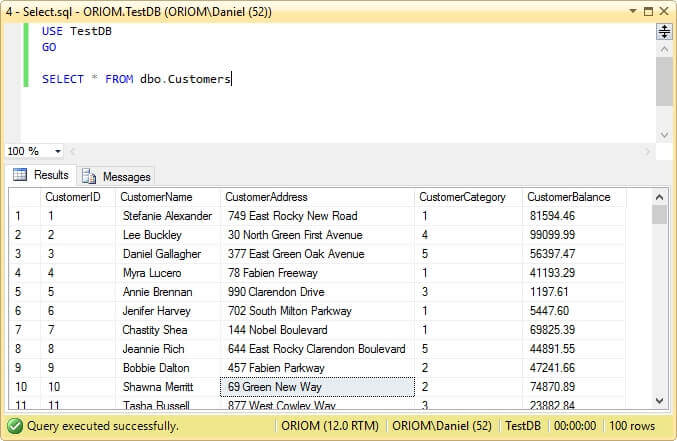

Now when you run the package and do a SELECT from the customers table you will see that the table contains the data in the proper way.

Next Steps

- You can download the sample text file as well as all the code form this link.

- For more information about conversion between data types check out this tip: SQL Server Integration Services Data Type Conversion Testing.

- If you work with flat files created on Mainframe environments you will benefit from reading my other tips Importing Mainframe Data with SQL Server Integration Services and Importing Mainframe Data including Packed Numbers with SQL Server Integration Services.

- Due to the fact that the solution proposed in this tip contains a script transformation, you can take advantage from parallelizing the workload with Balanced Data Distributor Transformation. In the following tip I explain its usage: Parallelize Data Flows with SSIS Balanced Data Distributor Transformation.

- If you want to enrich your SSIS packages and make them look more professional, take a look at Integration Services Best Practices Tips Category.

- Also check out SQL Server Business Intelligence Tips and Tricks.

About the author

Daniel Farina was born in Buenos Aires, Argentina. Self-educated, since childhood he showed a passion for learning.

Daniel Farina was born in Buenos Aires, Argentina. Self-educated, since childhood he showed a passion for learning.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2015-12-01