By: Bhavesh Patel | Updated: 2018-07-13 | Comments (3) | Related: > Performance Tuning

Problem

I often have the need to move older SQL Server data from one table to another table for archiving purposes or other special needs. I looked at various ways of doing this to find an approach with the least impact. In this tip, we will look at different ways this can be done and the results I found with each approach.

Solution

The following shows different approaches I took to insert data from one table into a different table in SQL Server. I show the example and the differences in execution time.

Setup SQL Server Test Environment

First, we will setup a test database, table and load some data. My model database is set to FULL recovery, so my new database will be enabled with the FULL recovery model.

USE MASTER

GO

CREATE DATABASE Testing_Operation

GO

USE Testing_Operation

GO

CREATE TABLE Test_Mst

(

id INT PRIMARY KEY IDENTITY (1,1),

testcol1 nvarchar(max),

testcol2 nvarchar(max),

testcol3 nvarchar(max),

testcol4 nvarchar(max),

testcol5 nvarchar(max),

chrActive CHAR (1) DEFAULT 'Y'

)

GO

CREATE TABLE Test_Mst_History

(

id INT,

testcol1 nvarchar(max),

testcol2 nvarchar(max),

testcol3 nvarchar(max),

testcol4 nvarchar(max),

testcol5 nvarchar(max),

chrActive CHAR (1)

)

GO

INSERT INTO Test_Mst

SELECT REPLICATE('A',5000),

REPLICATE('B',5000),

REPLICATE('C',5000),

REPLICATE('D',5000),

REPLICATE('E',5000),

'Y'

GO 10000

Using SQL Server INSERT INTO

I need to transfer data from Test_Mst to Test_Mst_History.

I will use INSERT INTO in SQL Server to see how this approach works.

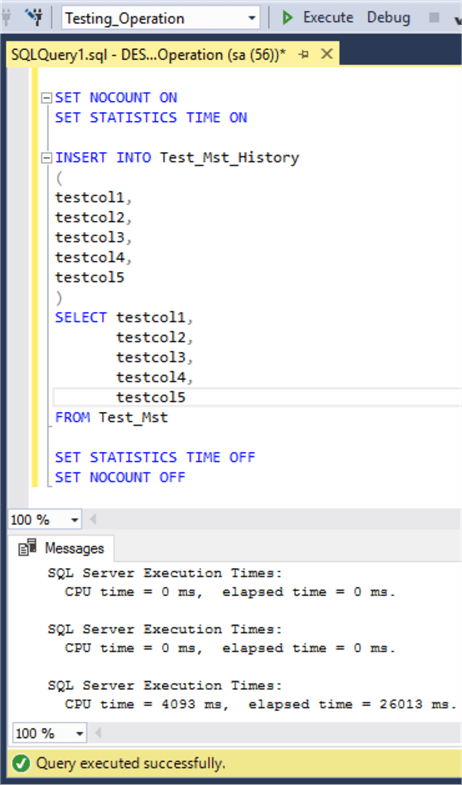

SET NOCOUNT ON SET STATISTICS TIME ON INSERT INTO Test_Mst_History ( testcol1, testcol2, testcol3, testcol4, testcol5 ) SELECT testcol1, testcol2, testcol3, testcol4, testcol5 FROM Test_Mst SET STATISTICS TIME OFF SET NOCOUNT OFF

As we can see below, the statement took time 26013ms to complete. As mentioned, the database is currently in the Full recovery model.

Change SQL Server Database Recovery Model

It might be possible to reduce the time if we used the Bulk-Logged recovery model. Under the “BULK_LOGGED” recovery, for bulk operations the transactions are not fully logged so this may help in the execution time.

My database is running under the full recovery model. For the purpose of minimal logging, I am going to change recovery model to BULK_LOGGED as follows.

ALTER DATABASE Testing_Operation SET RECOVERY BULK_LOGGED

After changing the recovery model, I have flushed the destination table using a truncate command, then executed the same script from above.

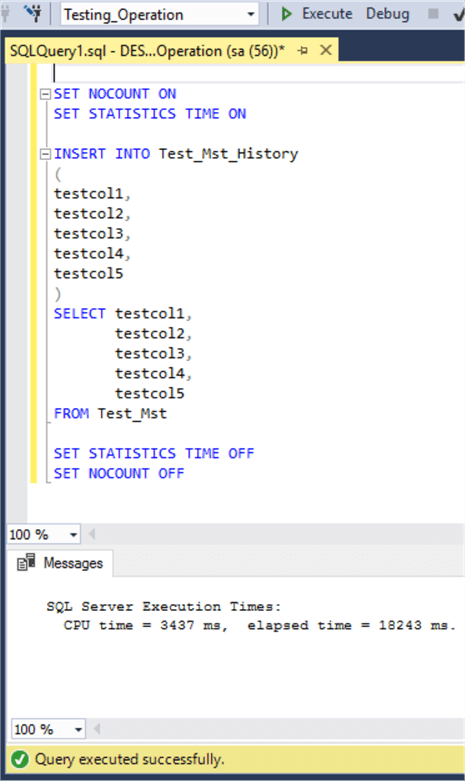

TRUNCATE TABLE Test_Mst_History GO SET NOCOUNT ON SET STATISTICS TIME ON INSERT INTO Test_Mst_History ( testcol1, testcol2, testcol3, testcol4, testcol5 ) SELECT testcol1, testcol2, testcol3, testcol4, testcol5 FROM Test_Mst SET STATISTICS TIME OFF SET NOCOUNT OFF

After changing the recovery model to “BULK_LOGGED”, this completed in 18243ms versus 26013ms.

Use SQL Server SELECT INTO Statement Instead of INSERT INTO Statement

Now we will try using SELECT INTO. I will leave the database in the BULK-LOGGED recovery model.

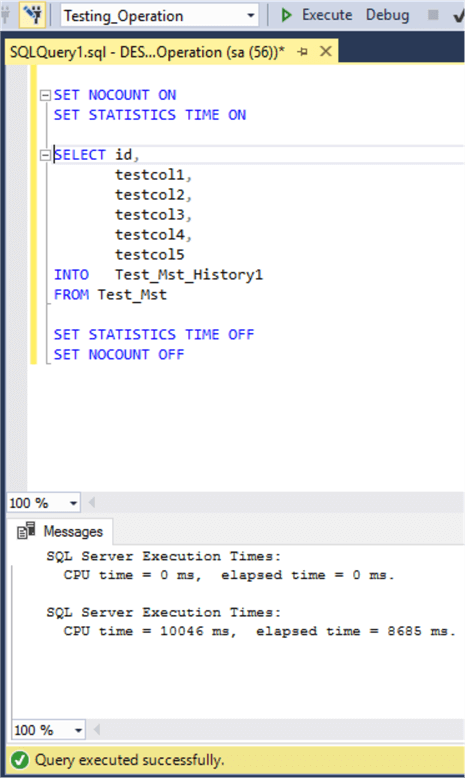

SET NOCOUNT ON SET STATISTICS TIME ON SELECT id, testcol1, testcol2, testcol3, testcol4, testcol5 INTO Test_Mst_History1 FROM Test_Mst SET STATISTICS TIME OFF SET NOCOUNT OFF

I got the result down to 8685ms. The SELECT INTO will create a new table versus inserting data into a table that has already been setup.

We don't have control of putting the data into an existing table, but a change in SQL Server 2017 gives us the ability to select a specific filegroup where the table is created. There are some other limitations to using this approach as well, but SELECT INTO could be a good approach for some requirements.

Use TABLOCK hint to boost SQL Server INSERT INTO Performance

The next thing I tried was to an INSERT INTO with a TABLOCK to see if this helps.

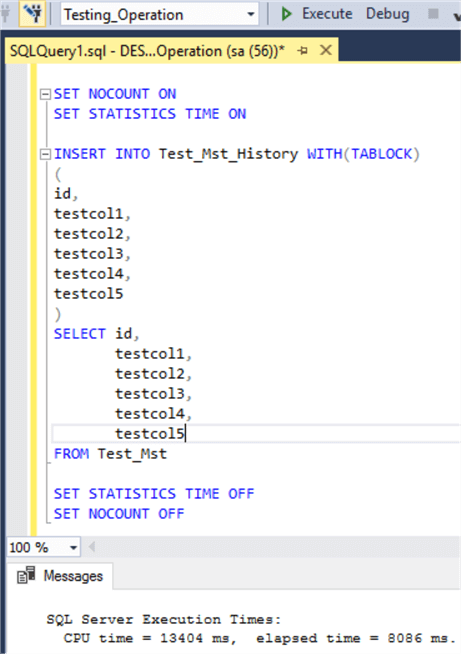

TRUNCATE TABLE Test_Mst_History GO SET NOCOUNT ON SET STATISTICS TIME ON INSERT INTO Test_Mst_History WITH(TABLOCK) ( testcol1, testcol2, testcol3, testcol4, testcol5 ) SELECT testcol1, testcol2, testcol3, testcol4, testcol5 FROM Test_Mst SET STATISTICS TIME OFF SET NOCOUNT OFF

After the flushing destination table, I executed the statement again using the hint TABLOCK and I got the result down to 8086ms.

I used TABLOCK for the destination table. Currently my destination table does not have any constraints or a clustered index. This means the destination table is treated as a HEAP and the database is still in the Bulk-Logged recovery model. When importing data into a heap by using INSERT INTO you can enable optimize logging and locking for the statement by specifying the TABLOCK hint for the target table. By specifying the TABLOCK hint, a shared lock is applied to the entire table instead of at the row or page level.

Use SWITCH TO in SQL Server

The last approach I took was using SWITCH TO, to move the data to a new table.

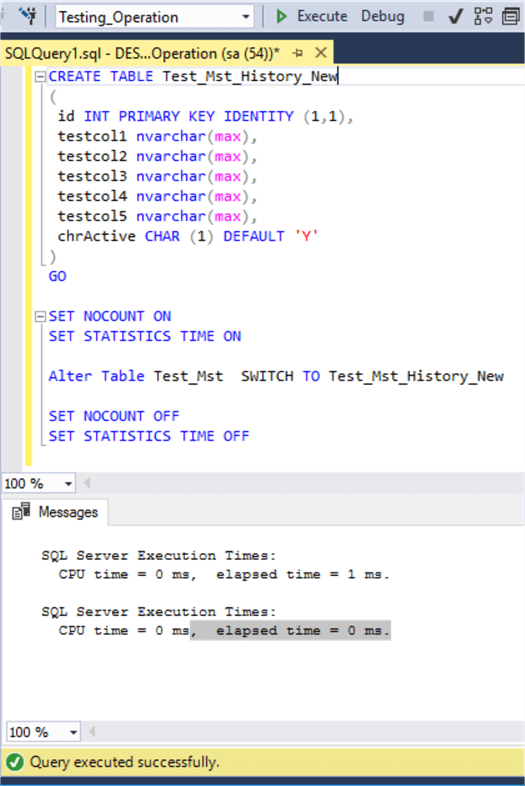

CREATE TABLE Test_Mst_History_New ( id INT PRIMARY KEY IDENTITY (1,1), testcol1 nvarchar(max), testcol2 nvarchar(max), testcol3 nvarchar(max), testcol4 nvarchar(max), testcol5 nvarchar(max), chrActive CHAR (1) DEFAULT 'Y' ) GO SET NOCOUNT ON SET STATISTICS TIME ON ALTER TABLE Test_Mst SWITCH TO Test_Mst_History_New SET STATISTICS TIME OFF SET NOCOUNT OFF

We can see below that this showed that it took less than 1ms to complete. This moves all of the data from Test_Mst to Test_Mst_History_New.

Basically, the SWITCH TO command is available for moving data between partitions from different tables. We use the ALTER TABLE command to move the data to a new partition Test_Mst_History_New. Here the partition is not allocated, which means the data moves across the tables. First, this ensures the table definitions are the same before applying the SWITCH TO command.

Disable Constraints Before Inserting SQL Server data

This example was very simple where the table had no indexes, constraints or foreign keys. If your table has any of these, for optimizing insert operations we can disable these before inserting the data and rebuild the indexes after as well as enabling constraints afterwards. For more information check out Disable Foreign Key Constraints with INSERT and UPDATE Statements.

Other Options to Improve Performance

We also need to observe the version of SQL Server that is being used, because there may be an update that helps improve performance. For example, we might find poor performance of concurrent insertion with temp tables in SQL Server 2016 and 2017 running on Windows. SQL plans run in parallel due to concurrency insertion contention in tempdb which was resolved in a SQL Server 2016 Service Pack.

There are different query level, trace level and constraint level options available which can be useful for getting better performance. In addition, there are certain points which could impact, directly or indirectly, insert operations like:

- Server Infrastructure - Make sure the system is robust to get better performance for SQL Server operations. Because if the server is running under resource pressure this could cause performance issues.

- IO latency - Very important factor, because ultimately we need to write to the database files on disk. It has been proven that SSD drives are best for reducing DISK-IO bottlenecks versus SCSI or SATA drives.

- ACID Properties and Isolation - When a transaction processing system creates a transaction, this property ensures that the transaction has certain characteristics. For these examples, I want to insert data into a new table, but the values are fetched from another table, therefore the isolation level could be factor a when fetching data from this table. So, we need to ensure the query is running under the appropriate isolation level in SQL Server.

- Database Configuration - For getting better results, the database configuration can impact performance such as where the database files are placed on disk and also the configuration of TempDB.

Next Steps

- Test these options on a test or development server prior to rollout in production.

- Take a look at these related articles:

- Insert in SQL Server.

- Troubleshooting slow disk IO in SQL Server.

- Demonstrations of Transaction Isolation Levels in SQL Server

- ACID properties in SQL Server

About the author

Bhavesh Patel is a SQL Server database professional with 10+ years of experience.

Bhavesh Patel is a SQL Server database professional with 10+ years of experience.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2018-07-13