By: Douglas Correa | Updated: 2018-10-24 | Comments (2) | Related: > TSQL

Problem

When you need to update SQL Server data in columns that have data types like VARCHAR(MAX), NVARCHAR(MAX) and VARBINARY(MAX) that contain values like JSON or XML script without have performance issues.

Solution

SQL Server 2005 introduced new large value data types to replace the deprecated text, ntext and image data types. These data types can store up to 2^31-1 bytes of data. Updates can be made without rewriting the entire column value, but there is no difference to inserting a large value with a regular INSERT.

To update a large value data type, the UPDATE can use the WRITE clause as follows:

UPDATE dbo.LargeTableObject SET col.WRITE (expression, @offset, @length ) WHERE id = 1; GO

You can see the difference between a regular UPDATE and UPDATE with the WRITE clause.

Example of using SQL Server UPDATE with WRITE Clause

Using this method can be a good choice and to demonstrate it I’ll create a table with a varchar(max) column data type.

DROP TABLE IF EXISTS LargeTableObjectGO CREATE TABLE LargeTableObject ( id BIGINT IDENTITY ,col1 VARCHAR(MAX) )GO

Next, a row is inserted. I'm using a JSON file in this example. You can download the JSON file from here.

INSERT INTO dbo.LargeTableObject (col1)SELECT BulkColumn FROM OPENROWSET (BULK 'C:\temp\citylots.json', SINGLE_CLOB) as j GO

We can see how SQL Server saves this data, by running the query below.

SELECT OBJECT_NAME(I.object_id)

, I.name

, AU.total_pages

, AU.used_pages

, AU.data_pages

, P.rows

, AU.type_desc

FROM sys.allocation_units AS AUINNER JOIN sys.partitions AS P ON AU.container_id = P.partition_id

INNER JOIN sys.indexes AS I ON I.index_id = P.index_id AND I.object_id = P.object_id

WHERE P.object_id = OBJECT_ID('LargeTableObject')

GO

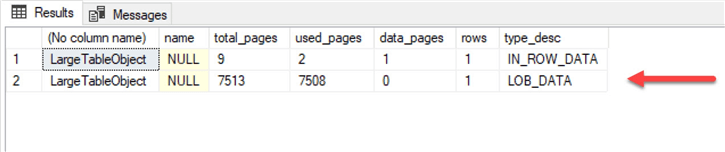

The image below shows we are using LOB_DATA pages and we are using 7513 total pages (each page is 8K) to store this data, which is about 60MB.

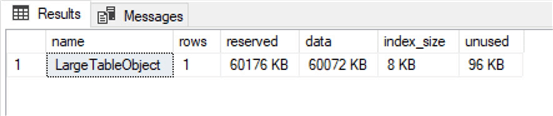

We can also see this data if we you sp_spaceused on this table.

The next step is clear the plan cache and run a checkpoint to clear the transaction log. Also, we will set statistics io on to get how many pages are needed for the update.

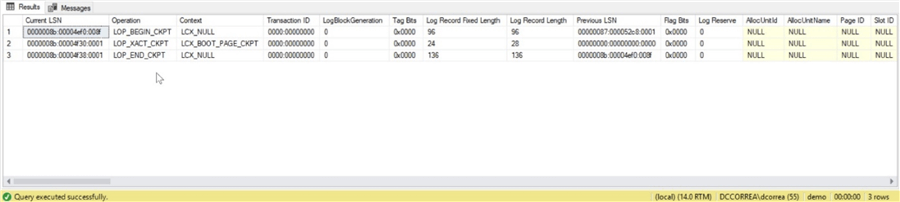

DBCC FREEPROCCACHE DBCC DROPCLEANBUFFERS CHECKPOINT SET STATISTICS IO ON SELECT * FROM fn_dblog(null,null) GO

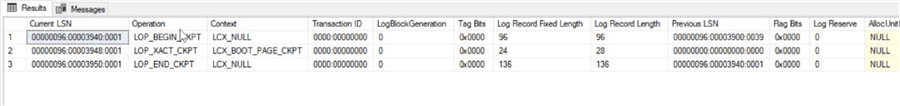

With the function fn_dblog you can check to see that the log is clean.

Before use the WRITE clause, let’s update the column adding text at the end using a regular update.

UPDATE dbo.LargeTableObject

SET col1 = col1 + REPLICATE(' regular update ', 2 )

WHERE id = 1

GO

The statistics io shows the following:

Table 'Worktable'. Scan count 0, logical reads 7, physical reads 0, read-ahead reads 0, lob logical reads 77360, lob physical reads 0, lob read-ahead reads 25557.

(1 row affected)

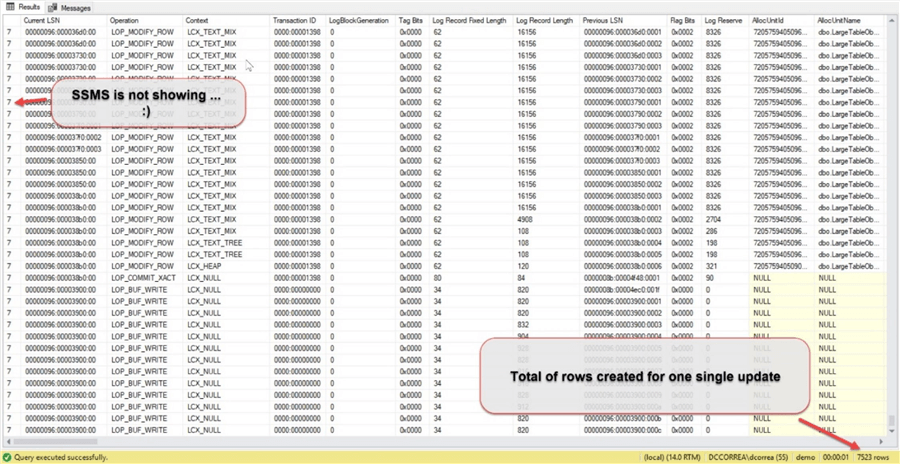

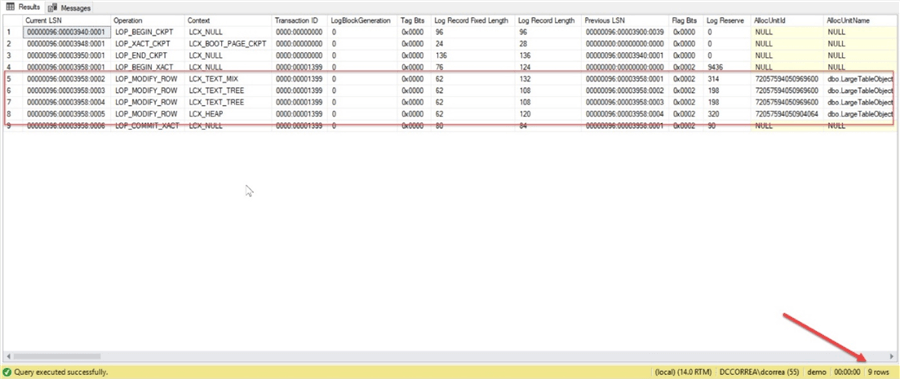

And, let’s take a look at the transaction log again.

SELECT * FROM fn_dblog(null,null) GO

We can see there are a lot more rows now.

The next step I will use the WRITE clause for the UPDATE, but before that I’ll clean my plan cache and transaction log again.

DBCC FREEPROCCACHE DBCC DROPCLEANBUFFERS CHECKPOINT SELECT * FROM fn_dblog(null,null) GO

We will do a similar update as we did above, but use the WRITE clause.

UPDATE dbo.LargeTableObject

SET col1.WRITE (REPLICATE(' write update ', 2 ), NULL , NULL )

WHERE id = 1

GO

The statistics io for this update is as follows. This is a big difference than the regular update.

(1 row affected)

Now let's look at the transaction log.

SELECT * FROM fn_dblog(null,null) GO

We can see there are a lot less rows after the update using the WRITE clause.

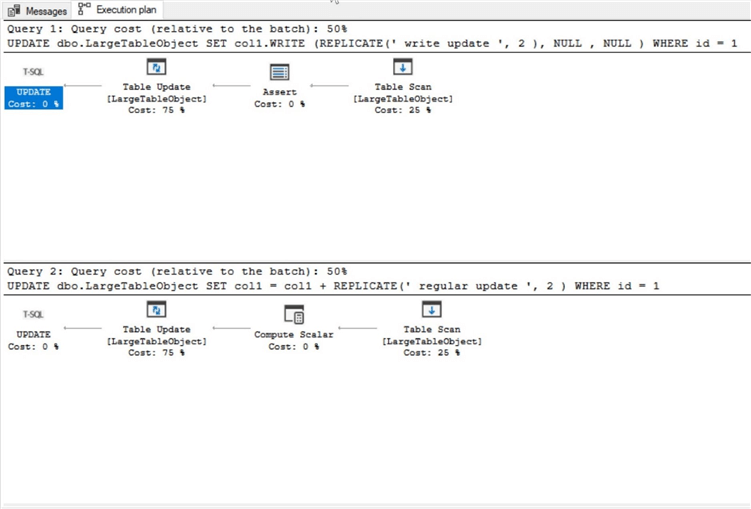

Compare Execution Plans

If we look at the execution plans for both queries, we can see they almost look the same. But as we saw, there is a lot less activity when using the WRITE clause.

Conclusion

There is a big difference when using WRITE clause and this improvement is a good reason to change the way you update large value data types. The execution plans will not show what’s the better choice, so you need to use set statistics io to get more information.

Next Steps

- Getting IO and time statistics for SQL Server Queries

- How to read the SQL Server Database Transaction Log

- More about the WRITE clause here.

About the author

Douglas Correa is a database professional, focused on tuning, high-availability and infrastructure.

Douglas Correa is a database professional, focused on tuning, high-availability and infrastructure.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2018-10-24