By: Aaron Bertrand | Updated: 2019-01-11 | Comments | Related: > Functions User Defined UDF

Problem

I recently wrote about scalar UDF inlining, a new feature in SQL Server 2019 that should drastically improve workloads with a high volume of user-defined functions. But if you can't move to SQL Server 2019, or can't benefit from that feature due to complexity or other factors, are there other things that can be done to improve performance?

Solution

There are definitely some approaches you can use to reclaim some of the CPU cycles and time forever lost inside function execution. In this tip I'll talk about four of them (well, five, really). Let's say we have the following – a simple table tracking barbers and the number of haircuts each barber has performed.

CREATE TABLE dbo.Barbers

(

BarberID int,

HaircutCount int

);

Next, we can fill it with some rows, some of which have NULL for the HaircutCount (new barbers, perhaps):

INSERT dbo.Barbers(BarberID, HaircutCount)

SELECT ABS(object_id),

CASE WHEN ABS(object_id) % 50 < 10

THEN NULL -- roughly 20% = NULL

ELSE ABS(object_id) % 200

END

FROM sys.all_objects;

On my system, this yields 2,302 rows, with the number of haircuts ranging from 1 to 200, and 490 rows being NULL (about 21.3%). Note: Distribution on your system may vary.

Finally, we have a query where we want to calculate the end of year bonus for each barber. The bonus is tiered:

- All barbers get a flat rate of $5 per haircut;

- Barbers who performed more than 50 haircuts get an additional $20; and,

- Barbers who performed more than 100 haircuts get an additional $50.

This logic is easy to express in a number of ways, but all are relatively verbose. One is as follows:

SELECT

BarberID,

HaircutCount,

Bonus = HaircutCount * 5

+ CASE WHEN HaircutCount > 50 THEN 20 ELSE 0 END

+ CASE WHEN HaircutCount > 100 THEN 50 ELSE 0 END

FROM dbo.Barbers;

Now, we're talking about this because logic like this tends to get shuffled away to a scalar user-defined function, both to simplify the query, and to abide by the "don't repeat yourself" principle (in cases where this bonus is calculated in multiple places):

CREATE FUNCTION dbo.CalculateBonus

(

@HaircutCount int

)

RETURNS int

AS

BEGIN

RETURN @HaircutCount * 5

+ CASE WHEN @HaircutCount > 50 THEN 20 ELSE 0 END

+ CASE WHEN @HaircutCount > 100 THEN 50 ELSE 0 END;

END

GO

Of course, when you swap that function call in, it makes the query look a lot better:

SELECT

BarberID,

HaircutCount,

Bonus = dbo.CalculateBonus(HaircutCount)

FROM dbo.Barbers;

And it works fine on small resultsets. But once your data starts scaling, performance degrades rapidly. So, what are some of the things we can do to improve this situation?

Use the option WITH SCHEMABINDING

As described in a previous tip by Atif Shehzad, we see that in many cases simply adding WITH SCHEMABINDING can simplify optimization and improve performance. The change to the function is simple:

CREATE FUNCTION dbo.CalculateBonus_WithSchemabinding

(

@HaircutCount int

)

RETURNS int

WITH SCHEMABINDING

AS

BEGIN

RETURN @HaircutCount * 5

+ CASE WHEN @HaircutCount > 50 THEN 20 ELSE 0 END

+ CASE WHEN @HaircutCount > 100 THEN 50 ELSE 0 END;

END

GO

Use the option RETURNS NULL ON NULL INPUT

Jonathan Kehayias recently blogged about this option, and I have to admit it was a new discovery to me, too. The basic purpose of this option is to prevent the function being called at all if you know that, when you put NULL in, you're going to get NULL out. This is the case here, and is probably the case in a lot of scenarios. Again, the change is simple:

CREATE FUNCTION dbo.CalculateBonus_WithReturnsNullOnNullInput

(

@HaircutCount int

)

RETURNS int

WITH RETURNS NULL ON NULL INPUT

AS

BEGIN

RETURN @HaircutCount * 5

+ CASE WHEN @HaircutCount > 50 THEN 20 ELSE 0 END

+ CASE WHEN @HaircutCount > 100 THEN 50 ELSE 0 END;

END

GO

Use both options together

If either of these options can provide a better result, why not add both options to the function and see if the improvement is even better?

CREATE FUNCTION dbo.CalculateBonus_WithBoth

(

@HaircutCount int

)

RETURNS int

WITH SCHEMABINDING, RETURNS NULL ON NULL INPUT

AS

BEGIN

RETURN @HaircutCount * 5

+ CASE WHEN @HaircutCount > 50 THEN 20 ELSE 0 END

+ CASE WHEN @HaircutCount > 100 THEN 50 ELSE 0 END;

END

GO

Rewrite as inline table-valued function

One option that can provide encapsulation without hurting performance is an inline table-valued function. This allows the optimizer to fold the UDF logic into the outer query, eliminating row-by-row processing and allowing for more accurate cardinality estimates. This new function:

CREATE FUNCTION dbo.CalculateBonus_TableValued

(

@HaircutCount int

)

RETURNS TABLE

WITH SCHEMABINDING

AS

RETURN (SELECT Bonus =@HaircutCount * 5

+ CASE WHEN @HaircutCount > 50 THEN 20 ELSE 0 END

+ CASE WHEN @HaircutCount > 100 THEN 50 ELSE 0 END);

GO

This requires a slight change to the outer query (admittedly, this is not always possible):

SELECT

b.BarberID,

b.HaircutCount,

f.Bonus

FROM dbo.Barbers AS b

CROSS APPLY dbo.CalculateBonus_TableValued(b.HaircutCount) AS f;

And of course…

…removing the function altogether and just including the logic in the query. I understand this, too, is not always possible. This query was shown above but, for completeness:

SELECT

BarberID,

HaircutCount,

Bonus = HaircutCount * 5

+ CASE WHEN HaircutCount > 50 THEN 20 ELSE 0 END

+ CASE WHEN HaircutCount > 100 THEN 50 ELSE 0 END

FROM dbo.Barbers;

Performance Testing

To set up for comparing performance between these approaches, we can run the following preparation code, including temp tables to collect function output to avoid both overwhelming SSMS and introducing ASYNC_NETWORK_IO to the mix:

SET NOCOUNT ON; -- clear things up:

DBCC FREEPROCCACHE;

DBCC DROPCLEANBUFFERS; -- collect the data in a #temp table to prevent all

-- the rendering from killing Management Studio

DROP TABLE IF EXISTS #coll1,#coll2,#coll3,#coll4,#coll5,#coll6;

CREATE TABLE #coll1(a int, b int, c int);

CREATE TABLE #coll2(a int, b int, c int);

CREATE TABLE #coll3(a int, b int, c int);

CREATE TABLE #coll4(a int, b int, c int);

CREATE TABLE #coll5(a int, b int, c int);

CREATE TABLE #coll6(a int, b int, c int);

Then we can run the following batches, where we calculate the bonus for all barbers 20 times:

INSERT #coll1 WITH (TABLOCKX) SELECT BarberID, HaircutCount,

dbo.CalculateBonus(HaircutCount)

FROM dbo.Barbers AS b;

GO 20 INSERT #coll2 WITH (TABLOCKX) SELECT BarberID, HaircutCount,

dbo.CalculateBonus_WithSchemaBinding(HaircutCount)

FROM dbo.Barbers AS b;

GO 20 INSERT #coll3 WITH (TABLOCKX) SELECT BarberID, HaircutCount,

dbo.CalculateBonus_WithReturnsNullOnNullInput(HaircutCount)

FROM dbo.Barbers AS b;

GO 20 INSERT #coll4 WITH (TABLOCKX) SELECT BarberID, HaircutCount,

dbo.CalculateBonus_WithBoth(HaircutCount)

FROM dbo.Barbers AS b;

GO 20 INSERT #coll5 WITH (TABLOCKX) SELECT BarberID, HaircutCount, HaircutCount * 5

+ CASE WHEN HaircutCount > 50 THEN 20 ELSE 0 END

+ CASE WHEN HaircutCount > 100 THEN 50 ELSE 0 END

FROM dbo.Barbers;

GO 20 INSERT #coll6 WITH (TABLOCKX) SELECT b.BarberID, b.HaircutCount, f.Bonus

FROM dbo.Barbers AS b

CROSS APPLY dbo.CalculateBonus_TableValued(b.HaircutCount) AS f;

GO 20

Then we can look at simple places in SQL Server that help us understand query performance, like dynamic management views sys.dm_exec_query_stats (which will capture all of the above queries) and sys.dm_exec_function_stats (which will only capture the first four). Here is a query against query stats:

SELECT

[Query] = CASE

WHEN t.text LIKE N'%(H%

THEN SUBSTRING(t.text, 65, CHARINDEX(N'(H', t.text)-65)

WHEN t.text LIKE N'%(b.H%'

THEN 'TableValued'

ELSE 'No function'

END,

execution_count,

total_elapsed_time, -- microseconds

avg_elapsed_time = CONVERT(decimal(12,2), total_elapsed_time*1.0/execution_count)

FROM sys.dm_exec_query_stats AS s

CROSS APPLY sys.dm_exec_sql_text(s.sql_handle) AS t

WHERE t.text LIKE N'%dbo.Barbers%'

AND t.text NOT LIKE N'%query_stats%'

ORDER BY avg_elapsed_time;

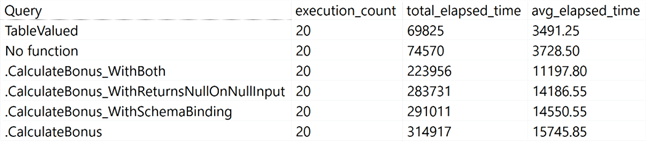

Results:

We can see that the two WITH options separately each provide a small performance boost, but when combined, the improvement is much more substantial. Nothing beats removing the function altogether or using a table-valued function, though, and I was surprised to see the latter slightly outperform the former.

Next we can run a query against function stats (and again, the inline table-valued function won't get captured here):

SELECT

Function_Name = OBJECT_NAME([object_id]),

execution_count,

total_elapsed_time -- microseconds

FROM sys.dm_exec_function_stats

ORDER BY execution_count;

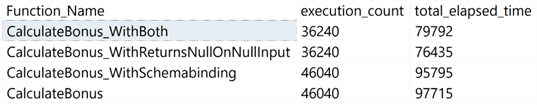

Results:

The values for execution_count are equal to the number of rows times the number of executions (2,302 * 20 = 46,040) – but for the first two functions, the number of rows is reduced to the number of non-NULL values (1,812 * 20 = 36,240). This makes it clear how not calling the function at all, when you don't have to, can have a significant effect. As an added bonus, those skipped executions don't get captured by trace, extended events, or any internal tracking.

Summary

Clearly the options WITH SCHEMABINDING and WITH RETURNS NULL ON NULL INPUT can have a dramatic impact on query runtime, even if it's not as dramatic as an inline table-valued function (or removing the function call altogether). Not all scenarios allow for disruptive changes – changing the syntax of the query will sometimes violate support agreements, and sometimes it's impossible. But long story short, even if you can't move to the latest or next version of SQL Server, there is hope – you can try a few of these options to help improve your scalar user-defined function performance.

Next Steps

Read on for related tips and other resources:

- Improve SQL Server Scalar UDF Performance in SQL Server 2019 with Scalar UDF Inlining

- Improve Performance of UDFs with NULL ON NULL INPUT

- Benefits of SCHEMABINDING in SQL Server

- Using schema binding to improve SQL Server UDF performance

- CREATE FUNCTION (Transact-SQL)

- Create User-defined Functions (Database Engine)

About the author

Aaron Bertrand (@AaronBertrand) is a passionate technologist with industry experience dating back to Classic ASP and SQL Server 6.5. He is editor-in-chief of the performance-related blog, SQLPerformance.com, and also blogs at sqlblog.org.

Aaron Bertrand (@AaronBertrand) is a passionate technologist with industry experience dating back to Classic ASP and SQL Server 6.5. He is editor-in-chief of the performance-related blog, SQLPerformance.com, and also blogs at sqlblog.org.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2019-01-11