By: Fikrat Azizov | Updated: 2019-01-16 | Comments | Related: More > Integration Services Data Flow Transformations

Problem

I was helping diagnose underperforming ETL jobs for one of my clients. They had SQL Server Agent Jobs which were calling SSIS packages to populate their DWH and these jobs were running much slower than they used to and were failing occasionally. Using only SQL Server Management Studio (SSMS) I was able to diagnose the source of the problem and recommend a solution. I'm going to share some simple techniques you can use to diagnose underperforming ETL jobs.

Solution

Many organizations have an in-house developed Data Warehouses (DWH), populated by SSIS packages. In a typical scenario, SSIS packages read from operational databases, do some transformations and write to the DWH. At a high level, SSIS package inefficiencies could be explained by poorly designed source queries, inefficient transformations, slow destination flows or incorrect package configurations.

Although SSIS packages can handle a variety of data movement and transformation tasks, the solution described here focuses on ETL packages, feeding a DWH.

DWH database models usually consist of large fact tables and relatively small dimension tables. Fact tables often contain timestamp fields indicating row insertion date/time. We will use these timestamps to draw conclusions about possible sources of performance bottlenecks.

Demonstration

To demonstrate, I've downloaded and installed the WideWorldImportersDW-Standard database from here. I created a copy of the Fact.Order table with the name [Fact].[OrderDest], which includes a timestamp field TrxDateTimeautomaticallypopulated by the GetDate() function, please see the below script:

CREATE TABLE [Fact].[OrderDest]( [Order Key] [bigint] NOT NULL, [City Key] [int] NOT NULL, [Customer Key] [int] NOT NULL, [Stock Item Key] [int] NOT NULL, [Order Date Key] [date] NOT NULL, [Picked Date Key] [date] NULL, [Salesperson Key] [int] NOT NULL, [Picker Key] [int] NULL, [WWI Order ID] [int] NOT NULL, [WWI Backorder ID] [int] NULL, [Description] [nvarchar](100) NOT NULL, [Package] [nvarchar](50) NOT NULL, [Quantity] [int] NOT NULL, [Unit Price] [decimal](18, 2) NOT NULL, [Tax Rate] [decimal](18, 3) NOT NULL, [Total Excluding Tax] [decimal](18, 2) NOT NULL, [Tax Amount] [decimal](18, 2) NOT NULL, [Total Including Tax] [decimal](18, 2) NOT NULL, [Lineage Key] [int] NOT NULL, [TrxDateTime] [datetime] NULL, CONSTRAINT [PK_Fact_OrderDest] PRIMARY KEY CLUSTERED ([Order Key] ASC)) GO ALTER TABLE [Fact].[OrderDest] ADD CONSTRAINT [DF_OrderDest_TrxDateTime] DEFAULT (getdate()) FOR [TrxDateTime] GO

The Fact.Order table contains around 15 million rows and I will use this query to populate the table.

INSERT INTO [Fact].[OrderDest] ( [City Key], [Customer Key], [Stock Item Key], [Order Date Key], [Picked Date Key], [Salesperson Key], [Picker Key], [WWI Order ID], [WWI Backorder ID], [Description], [Package], [Quantity], [Unit Price], [Tax Rate], [Total Excluding Tax], [Tax Amount], [Total Including Tax], [Lineage Key] ) SELECT [City Key], [Customer Key], [Stock Item Key], [Order Date Key], [Picked Date Key],

[Salesperson Key], [Picker Key], [WWI Order ID], [WWI Backorder ID], [Description], [Package], [Quantity], [Unit Price], [Tax Rate], [Total Excluding Tax], [Tax Amount], [Total Including Tax], [Lineage Key] FROM [Fact].[Order]

Next, I created a simple SSIS package, added a data flow task and included source and destination adapters for the Fact.Order and Fact.OrderDest tables and created a direct flow between them:

Finally, I created a SQL Server Agent Job and used it to trigger the SSIS package.

Troubleshooting Steps

To be able to clarify where the job has spent most of the time, we need to find answers for questions like:

- What time did the job start and how long it lasted?

- When did the first batch arrive in the destination table?

- How long it took between the first and last batches inserted into the destination table?

- How frequent were batch inserts into the destination table?

We can get answers for the first question from the job history using the following script. Please note, you will need to replace the job name with your ETL job name.

SELECT top 10 t.last_run_date, t.last_run_time, t.last_run_duration RunDuration, t.last_run_outcome FROM msdb..sysjobsteps t INNER JOIN msdb..sysjobs tt ON t.job_id = tt.job_id WHERE tt.name = 'ETL' order by t.last_run_date desc, t.last_run_time desc

As you can see from the below screenshot, the job started at 16:22:55 and lasted 4 min 5 sec (duration is shown in the format hhmmss):

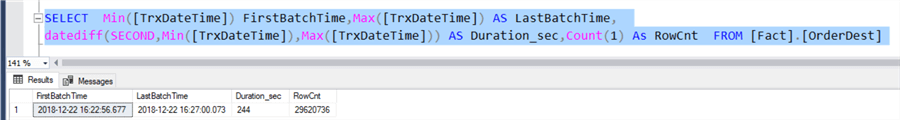

Next, we use the destination database (WideWorldImportersDW-Standard) and run the following script to get timestamps of the first and last batches, as well as the time interval between the batches:

SELECT Min([TrxDateTime]) AS FirstBatchTime, Max([TrxDateTime]) AS LastBatchTime, datediff(SECOND,Min([TrxDateTime]), Max([TrxDateTime])) AS Duration_dec, Count(1) AS RowCnt FROM [Fact].[OrderDest]

Here is the query result:

Here are some observations from this query output:

- The first batch was inserted shortly after the job start

- The time interval between the first and last batches was almost the same as the entire job duration

We can draw the following conclusions:

- The source queries were performing well

- There were no blocking transformations within the package which could have delayed the first batch

Now, let's examine the batch sizes in the destination table:

SELECT [TrxDateTime], count(1) As BatchSize FROM [Fact].[OrderDest] (nolock) GROUP BY [TrxDateTime]

As you can see from the below query results, the batch sizes were 9903 rows, which is close to the default setting of 10,000 rows for the SSIS Data Flow's DefaultBufferMaxRows configuration setting.

Based on these findings, we can draw the following conclusions:

- The OLE DB destination has the correct Data Access Mode setting (table or view-fast load). We can tell this, because the batch size is greater than 1. If this setting was set to 'Table or view' we'd have much longer execution time (see OLE DB destination settings for more details).

- The package's performance could be improved by increasing the DefaultBufferMaxRows setting, as well as the DefaultBufferSize setting, which is 10MB by default. Both of these settings could allow almost a 10 fold increase, which could result in the package running several times faster (see Data flow buffer performance for more details), if the server has enough memory and disk throughput.

SSIS Package Optimization

Let's increase the DefaultBufferMaxRows and DefaultBufferSize settings (to 20000 and to 20971520) and rerun the job:

As you can see from the below screen, the batch sizes have doubled, with slightly longer periods between the batches:

The job duration has dropped almost 25% (from 244 to 207 sec)

I've experimented with a few different values for the defaultBufferMaxRows and defaultBufferSize and here are the performance results:

| defaultBufferMaxRows | defaultBufferSize | Job duration (sec) |

|---|---|---|

| 10,000 | 10,485,760 | 244 |

| 20,000 | 20,971,520 | 207 |

| 40,000 | 41,943,040 | 249 |

| 80,000 | 83,886,080 | 380 |

The above results suggest that increasing the buffer sizes were beneficial up to some point (20K rows in my case), but then performance deteriorated, because of resource constraints on the host machine. Depending on how powerful your server is, you may get up to a 10 times performance improvement. So, it would be wise to test these settings to figure out the optimal setting for your system.

Conclusion

Although SSIS performance may require more investigation in certain cases, it makes sense to start with simple steps to eliminate obvious problems. In my case, the client's developer team verified and confirmed that buffer settings were the default values and after increasing these settings, the performance has been improved.

Next Steps

- Read about OLE Db destination settings

- Read about Data flow buffer performance

- Check out the SSIS Resources on MSSQLTips.com

About the author

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2019-01-16