By: Bhavesh Patel | Updated: 2019-02-21 | Comments | Related: > Performance Tuning

Problem

SQL Server writes to the transaction log whenever a modification like an insert, update or delete happens in a database. As you probably know, every database has a separate transaction log file. If a transaction is very large it will consume a lot of space in the transaction log and the log file may need to grow to accommodate the transaction. It might be possible that a log file takes up the free disk space or grows to its maximum size and causes the "SQL error code: 9002 The transaction log for database is full." In this tip we will look at a query that can be run to show currently running queries and how much transaction log space they are consuming.

Solution

First, we will cover how the transaction log is used, then we will look at a query and an example to capture queries that are running including how much transaction log space they are using.

SQL Server Transaction Log Workflow

At a minimum, a SQL Server transaction log physical file exists for every database along with the data file(s). The transaction log file has an extension of .LDF and the data file has an extension .MDF. In addition, you can configure additional files for the database based on your needs. For deeper learning about the log, you can check out Brady Upton's SQL Server transaction log tutorial.

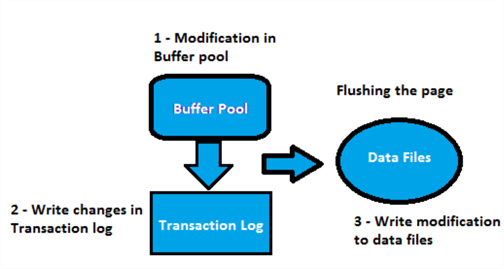

Here I will explain write ahead logging for a transaction.

As per MSDN, the role of the write-ahead transaction log is recording the data modification to disk. SQL Server WAL (write-ahead transaction log) guarantees that no data modification is written to disk before the associated log record is written to disk. This allows for the ACID properties for a transaction to be followed.

- The page containing the existing data rows are fetched into the buffer pool. The transaction is marked at the start in the transaction log.

- Data is then modified in the buffer pool. The modified page is written to the transaction log.

- On committing the transaction, the transaction end is marked in the transaction log and the transaction log records are written to disk.

- Writing a modified data page from the buffer cache to disk is called flushing the page. SQL Server has logic that prevents a dirty page from being flushed before the associated log record is written. The checkpoint process periodically scans the buffer cache for buffers with pages from a specified database and writes all dirty pages to disk.

- Log records are then written to disk when the log buffers are flushed.

- For each log record associated with the transaction they are individually linked in a chain using backward pointers that speed-up the rollback of the transaction.

- In a SQL Server startup, the database performs a recovery process. The transaction log is read sequentially bringing the data files up to date. Uncommitted transactions are rolled back and committed transactions are roll forwarded.

SQL error code: 9002 The transaction log for database is full

In a database in full recovery, the section of the log file last written to is called the active portion of the log. This active log must include every part of all uncommitted transactions. This means the current transaction is not committed or rolled back. When the transaction is long running, then the log might grow very large, because the log cannot be truncated in full recovery due to the active transaction. In such circumstances, it might cause error "SQL error code: 9002 The transaction log for database is full."

DMVs to Capture SQL Server Transaction Log Usage

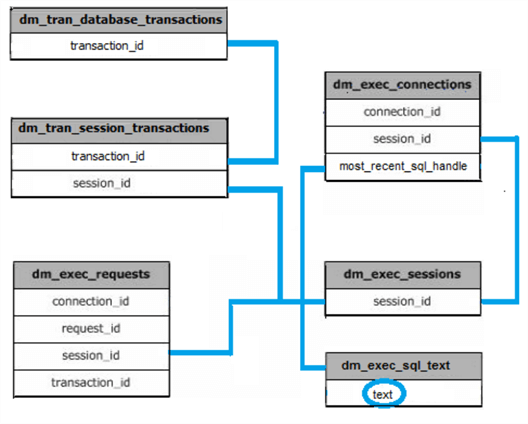

These are the DMVs we will be using to capture the information in the query we will cover further down in the article.

sys.dm_tran_database_transactions

- This DMV returns the transaction information at the database level.

sys.dm_tran_session_transactions

- This lets us capture information for associated transactions and sessions.

sys.dm_exec_sessions

- sys.dm_exec_sessions is a server-scope view that shows information about all active user connections and internal tasks. This information includes client version, client program name, client login time, login user, current session settings and more. Dynamic management views: sys.dm_exec_connections, sys.dm_exec_sessions, and sys.dm_exec_requests are mapped to the sys.sysprocesses system table. Here is nice explanation of sys.dm_exec_sessions by K. Brian Kelley.

sys.dm_exec_connections

- sys.dm_exec_connections returns information about the connections established to the instance of SQL Server and the details of each connection. This returns server wide connection information for SQL Server.

sys.dm_exec_requests

- sys.dm_exec_requests returns the information about each request that is executing within SQL Server. Here is an explanation of sys.dm_exec_requests by K. Brian Kelley. We can use the statement_start_offset and statement_end_offset to get the actual query text.

sys.dm_exec_sql_text

- sys.dm_exec_sql_text returns the query text of the SQL batch identified by the specific handle (sql_handle | plan_handle).

Relationship Diagram

Here is a relationship diagram that shows how these all interact.

Setup a SQL Server Test Environment

First, we will setup a SQL Server database, table and load some test data.

-- Listing 1

USE MASTER

GO

CREATE DATABASE TranlogUsage

GO

USE TranlogUsage

GO

CREATE Table CustTransaction

(

id INT PRIMARY KEY IDENTITY(1,1),

Col1 NVARCHAR(500),

Col2 NVARCHAR(500),

Col3 NVARCHAR(500),

Col4 NVARCHAR(500),

Col5 NVARCHAR(500),

Col6 NVARCHAR(500)

)

GO

-- insert sample data

;WITH

p1 as (select 1 as C union all select 1),

p2 as (select 1 as C from p1 as A, p1 as B),

p3 as (select 1 as C from p2 as A, p2 as B),

p4 as (select 1 as C from p3 as A, p3 as B),

p5 as (select 1 as C from p4 as A, p4 as B),

p6 as (select 1 as C from p5 as A, p5 as B),

tally as (select row_number() over(order by C) as N from p6

)

INSERT INTO CustTransaction

SELECT

'T1' + replicate ('0',200),

'T2' + replicate ('0',200),

'T3' + replicate ('0',200),

'T4' + replicate ('0',200),

'T5' + replicate ('0',200),

'T6' + replicate ('0',200)

FROM tally

WHERE N between 1 and 1000000

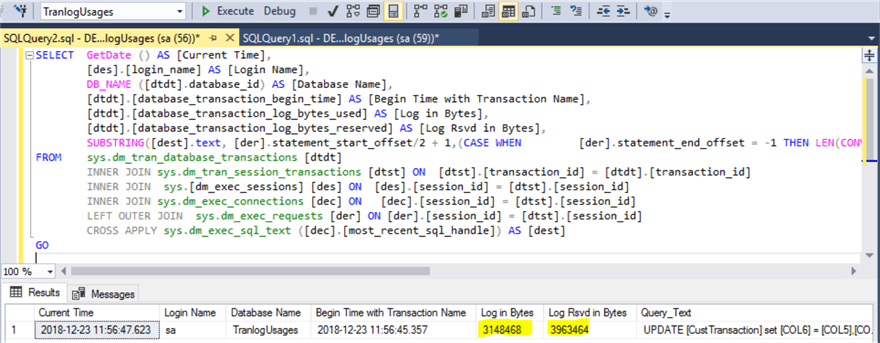

Query to find SQL Queries Using Transaction Log Space

Here is the query to return running T-SQL statements which are using space in the transaction log files. Down below we will walk through an example.

-- Listing 2 SELECT GETDATE() AS [Current Time], [des].[login_name] AS [Login Name], DB_NAME ([dtdt].database_id) AS [Database Name], [dtdt].[database_transaction_begin_time] AS [Transaction Begin Time], [dtdt].[database_transaction_log_bytes_used] AS [Log Used Bytes], [dtdt].[database_transaction_log_bytes_reserved] AS [Log Reserved Bytes], SUBSTRING([dest].text, [der].statement_start_offset/2 + 1,(CASE WHEN [der].statement_end_offset = -1 THEN LEN(CONVERT(nvarchar(max),[dest].text)) * 2 ELSE [der].statement_end_offset END - [der].statement_start_offset)/2) as [Query Text] FROM sys.dm_tran_database_transactions [dtdt] INNER JOIN sys.dm_tran_session_transactions [dtst] ON [dtst].[transaction_id] = [dtdt].[transaction_id] INNER JOIN sys.dm_exec_sessions [des] ON [des].[session_id] = [dtst].[session_id] INNER JOIN sys.dm_exec_connections [dec] ON [dec].[session_id] = [dtst].[session_id] LEFT OUTER JOIN sys.dm_exec_requests [der] ON [der].[session_id] = [dtst].[session_id] CROSS APPLY sys.dm_exec_sql_text ([dec].[most_recent_sql_handle]) AS [dest] GO

Example to Capture Queries Using Transaction Log Space

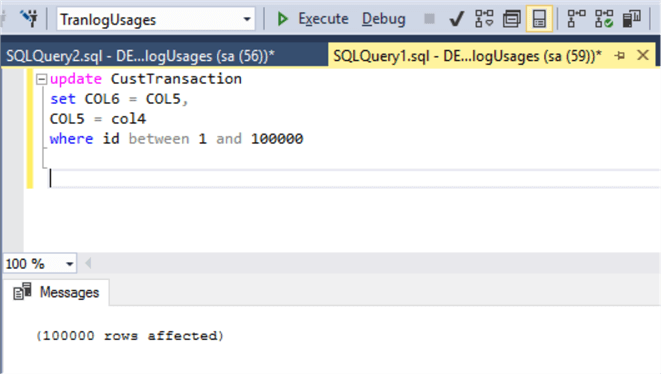

Below we will open two query windows. In the first query window we will run an UPDATE against the data we just created. This should hopefully run long enough so we can capture the usage of the transaction log in the second query window.

Session 1: Run Update Query

Run this query in one query window. You may need to increase 100,000 to a bigger number to capture the output in query window 2 if your system is very fast.

UPDATE CustTransaction SET Col6 = Col5, Col5 = Col4 WHERE ID BETWEEN 1 AND 1000000

Session 2: Get queries using SQL Server transaction log

Below we run the query from Listing 2 and this shows us the above query that is running and the space used in the transaction log that is highlighted below.

Create SQL Server Job to Find Queries Consuming Log Space

I prepared a SQL Server Agent Job to monitor for T-SQL statements that are impacting the transaction log space. These are the job steps:

- Created database Maintenance with simple recovery model and create table Audit_Queries_Impacting_Log to store the audit data.

- Created Job with two steps; In the first step the SQL logs are captured to the audit table. In the second step, we remove old historical data and only keep the last week.

- Job Scheduling: This job will run every 30 seconds.

You can modify this as needed to meet your requirements.

USE MASTER

GO

CREATE DATABASE Maintenance

GO

ALTER DATABASE Maintenance SET RECOVERY SIMPLE

GO

USE Maintenance

GO

CREATE TABLE Audit_Queries_Impacting_Log

(

CaptureTime DATETIME,

LoginName NVARCHAR(256),

DatabaseName NVARCHAR(256),

TranBeginTime DATETIME,

LogUsedBytes BIGINT,

LogReservedBytes BIGINT,

QueryText NVARCHAR(MAX)

)

GO

-- create SQL Agent Job

USE [msdb]

/****** Object: Job [TransactionLogMonitoring] Script Date: 23/12/2018 1:59:02 PM ******/

BEGIN TRANSACTION

DECLARE @ReturnCode INT,

@schedule_uid UNIQUEIDENTIFIER = NEWID()

SELECT @ReturnCode = 0

/****** Object: JobCategory [[Uncategorized (Local)]] Script Date: 23/12/2018 1:59:02 PM ******/

IF NOT EXISTS (SELECT name FROM msdb.dbo.syscategories WHERE name=N'[Uncategorized (Local)]' AND category_class=1)

BEGIN

EXEC @ReturnCode = msdb.dbo.sp_add_category @class=N'JOB', @type=N'LOCAL', @name=N'[Uncategorized (Local)]'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

END

DECLARE @jobId BINARY(16)

EXEC @ReturnCode = msdb.dbo.sp_add_job @job_name=N'TransactionLogUseMonitoring',

@enabled=1,

@notify_level_eventlog=0,

@notify_level_email=0,

@notify_level_netsend=0,

@notify_level_page=0,

@delete_level=0,

@description=N'No description available.',

@category_name=N'[Uncategorized (Local)]',

@owner_login_name=N'sa', @job_id = @jobId OUTPUT

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

/****** Object: Step [Step1] Script Date: 23/12/2018 1:59:02 PM ******/

EXEC @ReturnCode = msdb.dbo.sp_add_jobstep @job_id=@jobId, @step_name=N'Step1',

@step_id=1,

@cmdexec_success_code=0,

@on_success_action=3,

@on_success_step_id=0,

@on_fail_action=2,

@on_fail_step_id=0,

@retry_attempts=0,

@retry_interval=0,

@os_run_priority=0, @subsystem=N'TSQL',

@command=N' INSERT INTO Audit_Queries_Impacting_Log

SELECT

GETDATE() AS [Current Time],

[des].[login_name] AS [Login Name],

DB_NAME ([dtdt].database_id) AS [Database Name],

[dtdt].[database_transaction_begin_time] AS [Transaction Begin Time],

[dtdt].[database_transaction_log_bytes_used] AS [Log Used Bytes],

[dtdt].[database_transaction_log_bytes_reserved] AS [Log Reserved Bytes],

SUBSTRING([dest].text, [der].statement_start_offset/2 + 1,(CASE WHEN [der].statement_end_offset = -1 THEN LEN(CONVERT(nvarchar(max),[dest].text)) * 2 ELSE [der].statement_end_offset END - [der].statement_start_offset)/2) as [Query Text]

FROM

sys.dm_tran_database_transactions [dtdt]

INNER JOIN sys.dm_tran_session_transactions [dtst] ON [dtst].[transaction_id] = [dtdt].[transaction_id]

INNER JOIN sys.dm_exec_sessions [des] ON [des].[session_id] = [dtst].[session_id]

INNER JOIN sys.dm_exec_connections [dec] ON [dec].[session_id] = [dtst].[session_id]

LEFT OUTER JOIN sys.dm_exec_requests [der] ON [der].[session_id] = [dtst].[session_id]

CROSS APPLY sys.dm_exec_sql_text ([dec].[most_recent_sql_handle]) AS [dest]

WHERE [des].[session_id] <> @@spid

AND ([dtdt].[database_transaction_begin_time] > 0 OR [dtdt].[database_transaction_log_bytes_reserved] > 0 )

GO',

@database_name=N'Maintenance',

@flags=0

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

/****** Object: Step [Step2] Script Date: 23/12/2018 1:59:03 PM ******/

EXEC @ReturnCode = msdb.dbo.sp_add_jobstep @job_id=@jobId, @step_name=N'Step2',

@step_id=2,

@cmdexec_success_code=0,

@on_success_action=1,

@on_success_step_id=0,

@on_fail_action=2,

@on_fail_step_id=0,

@retry_attempts=0,

@retry_interval=0,

@os_run_priority=0, @subsystem=N'TSQL',

@command=N'USE Maintenance

GO

DELETE TOP(1000)

FROM Audit_Queries_Impacting_Log

WHERE CaptureTime < DATEADD(DAY,-7,GETDATE())',

@database_name=N'Maintenance',

@flags=0

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_update_job @job_id = @jobId, @start_step_id = 1

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobschedule @job_id=@jobId, @name=N'My Schedule',

@enabled=1,

@freq_type=4,

@freq_interval=1,

@freq_subday_type=2,

@freq_subday_interval=30,

@freq_relative_interval=0,

@freq_recurrence_factor=0,

@active_start_date=20181223,

@active_end_date=99991231,

@active_start_time=0,

@active_end_time=235959,

@schedule_uid= @schedule_uid

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobserver @job_id = @jobId, @server_name = N'(local)'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

COMMIT TRANSACTION

GOTO EndSave

QuitWithRollback:

IF (@@TRANCOUNT > 0) ROLLBACK TRANSACTION

EndSave:

GO

Summary

- For optimizing update/delete/insert operations you need to minimize the transaction in terms of data volume.

- Try to execute update/delete/insert statements in smaller batches in lieu of executing one large batch.

- You should tune the indexes the query uses or could use.

- You could also think about using partitioning techniques to run smaller batches.

- Use a proper growth size for the transaction log file instead of using a growth percentage.

- Monitor for deadlock events and try to minimize.

- If possible, assign a separate disk drive for the transaction log.

- Make sure your database recovery model is correct to meet your business needs.

- Schedule regular transaction log backups to maintain database log size.

- For critical situations where the transaction log has grown very large, look at shrinking the database log file (Tutorial | Tip).

Next Steps

- Kindly run on a test server first before rolling out to production.

- Here is some additional reading:

About the author

Bhavesh Patel is a SQL Server database professional with 10+ years of experience.

Bhavesh Patel is a SQL Server database professional with 10+ years of experience.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2019-02-21