By: Ron L'Esteve | Updated: 2019-01-24 | Comments | Related: > Azure

Problem

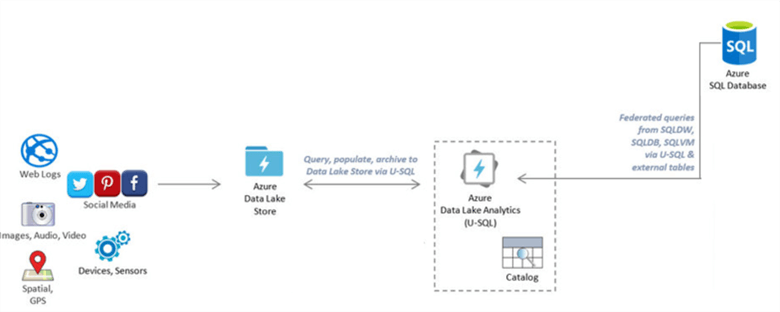

With the dawn of a new era known as the Big Data revolution, there has come an increasing need and demand to join incoming hot big data from either IoT devices or Social Media feeds that reside in Azure Data Lake to data-warehouses and data-marts that reside in Azure SQL DB for further processing, merging and analysis. This article aims at solving and bridging that gap with a real-world example.

Solution

In this article I will create a solution which will join files that reside in Azure Data Lake Store Azure with Azure SQL DB Data using federated U-SQL queries in Azure Data Lake analytics.

U-SQL is a powerful language which allows for querying big data rather quickly. For external systems, such as Microsoft Azure SQL Database, this can be achieved with federated queries against data sources. To query these external data sources, a data source object will need to be created and then referenced that abstracts the connection information as well as information about its capabilities to execute query expressions passed and translated from U-SQL to the its local query engine.

The following diagram illustrates the architectural solution that we will be executing:

Prerequisites

There are a few pre-requisites that must be set up:

- First, you will need an Azure Subscription which will be used in the PowerShell login script. If you do not have an Azure account, you can navigate to portal.azure.com to sign up for one.

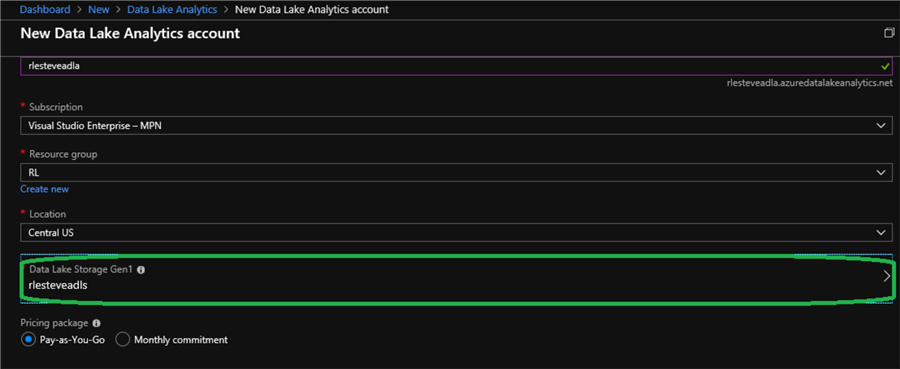

- Next, you will need both an Azure Data Lake Analytics (ADLA) and Azure Data Lake Store (ADLS). The Data Files will reside in ADLS and ADLA will be the driving analytics engine which will process the large data sets and join them to Azure SQL DB. In the Azure portal, create a Data Lake Analytics Resource. Note that you can create a Data Lake Storage Account at the same time within the same blade as seen in the illustration below.

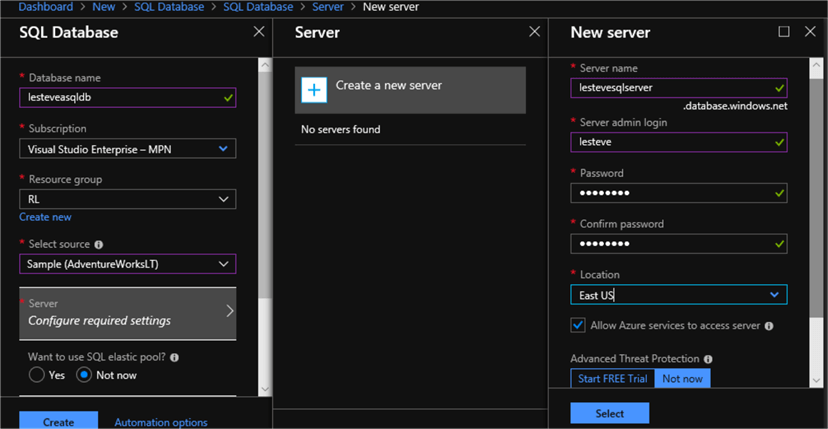

You'll also need either an Azure SQL Database (ASQLDB) or Azure SQL Data warehouse (ASQLDWH) with SQL login/password. Data from our Datamart or Datawarehouse will reside here. For the purpose of this article, let's assume we are using ASQLDB so we'll go ahead and create one.

- Azure PowerShell: We will execute some federated query setup routines using PowerShell.

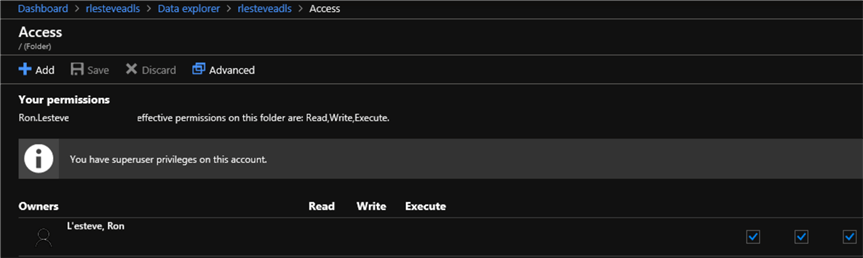

- Read/Execute Permissions on your ADLS (data level) to be

able to create the Catalog Secret

- Navigate to ADLS in Azure Portal

- Click Data Explorer

- Click Access

- Validate you have Read/Execute permissions.

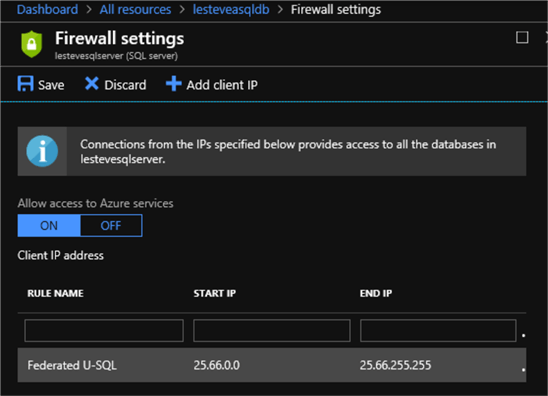

- Allow IP range in the ASQLDB server firewall for the ADLA services that

fire the U-SQL queries

- Navigate to your ASQLDB server in Azure Portal

- Click Settings

- Click Firewall

- Create new rule with range 25.66.0.0 to 25.66.255.255

This is typically the safest range to utilize, however you can run the following PowerShell script to find you ADLA region and then reference Microsoft's region-specific ranges in the event that the ranges have changed:

$DataLakeAnalyticsAccount = "rlesteveadla"; (Get-AzureRmDataLakeAnalyticsAccount -Name $DataLakeAnalyticsAccount).Location;

Setting Up ADLA Federated Querying

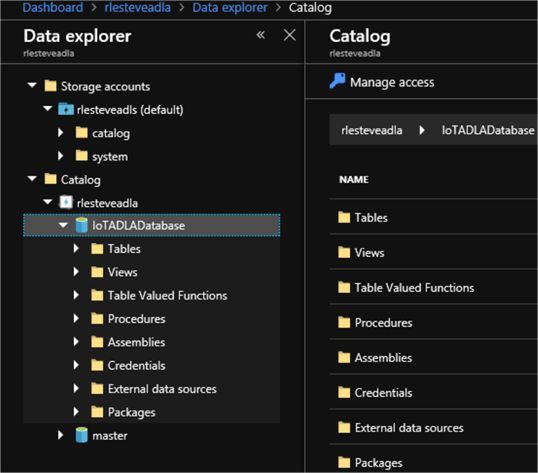

- Create the ADLA Database using a U-SQL query:

DROP DATABASE IF EXISTS IoTADLADatabase; CREATE DATABASE IoTADLADatabase;

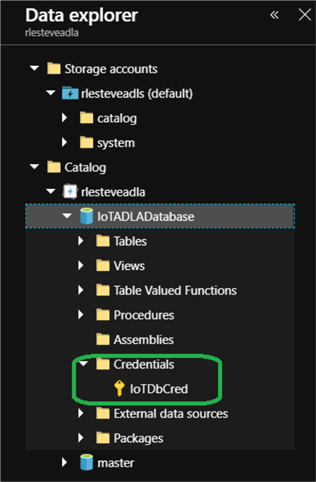

- Create a credential for a catalog specifying host and port. The New-AzureRmDataLakeAnalyticsCatalogCredential cmdlet creates a new credential to use in an Azure Data Lake Analytics catalog for connecting to external data sources.

#Login (login pop up appears) Login-AzureRmAccount #Show your available Azure Subscriptions Get-AzureRmSubscription #Connect to the Azure Subscription in which your ADLA Database exists Set-AzureRMContext -SubscriptionId 00000000-0000-0000-0000-000000000000 New-AzureRmDataLakeAnalyticsCatalogCredential -AccountName "rlesteveadla" ` -DatabaseName "IoTADLADatabase" ` -CredentialName "IoTDbCred" ` -Credential (Get-Credential) ` -DatabaseHost "lestevesqlserver.database.windows.net" -Port 1433;

(Note that while creating the credential, ensure that you are referencing the correct port for the SQL Server otherwise there will be connection issues when we attempt to create the external data source in the next step)

To remove the credential, run the following PowerShell Script and then re-create it using the previously defined step:

Remove-AzureRmDataLakeAnalyticsCatalogCredential -AccountName "rlesteveadla" `

-DatabaseName "IoTADLADatabase" `

-Name "IoTDbCred"

Once the credential is created correctly, it will appear under Credentials:

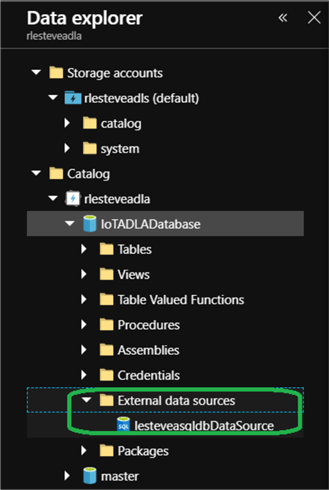

Create Data Source in ADLA Database with a reference to the ASQLDB using the U-SQL query below:

// Create External Data source on AZURESQLDB USE DATABASE IoTADLADatabase; CREATE DATA SOURCE IF NOT EXISTS lesteveasqldbDataSource FROM AZURESQLDB WITH ( PROVIDER_STRING = "Database=lesteveasqldb;Trusted_Connection=False;Encrypt=True" , CREDENTIAL = IoTDbCred , REMOTABLE_TYPES = (bool, byte, sbyte, short, ushort, int, uint, long, ulong, decimal, float, double, string, DateTime) );

Once the data source is created, it will appear under External Data Sources:

Create an External Table in ADLA Database based on the Data Source using the U-SQL query below:

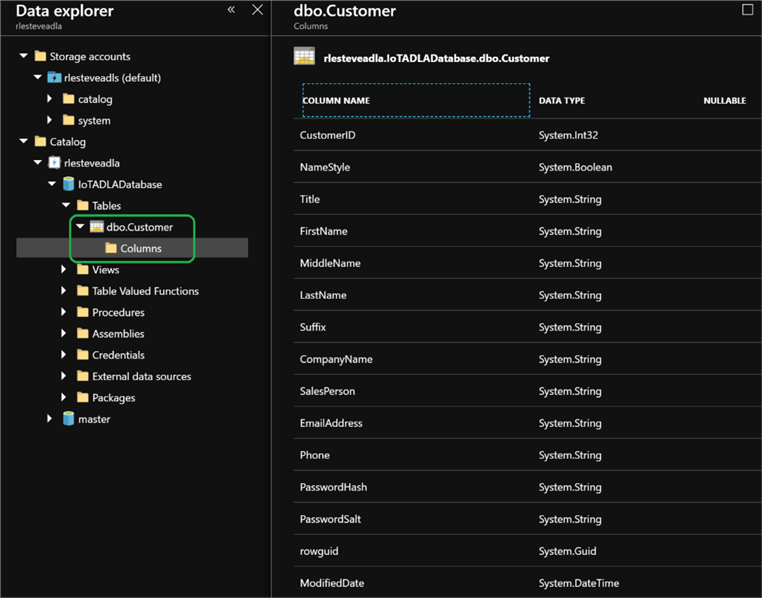

// CREATE EXTERNAL TABLE // FROM EXTERNAL ASQL_CUSTOMER LOCATION "SalesLT.Customer" USE DATABASE IoTADLADatabase; CREATE EXTERNAL TABLE Customer( [CustomerID] int, [NameStyle] bool, [Title] string, [FirstName] string, [MiddleName] string, [LastName] string, [Suffix] string, [CompanyName] string, [SalesPerson] string, [EmailAddress] string, [Phone] string, [PasswordHash] string, [PasswordSalt] string, [rowguid] Guid, [ModifiedDate] DateTime ) FROM lesteveasqldbDataSource LOCATION "SalesLT.Customer";

I use this conversion table to convert my T-SQL to U-SQL data types after I do a script table as create to new query: (Note: Remember to mark nullable T-SQL fields with ‘?' for best practice T-SQL to U-SQL conversions. Also note that Nullable types don't apply to reference types such as string.)

| T-SQL Data Type | U-SQL Data Type |

|---|---|

| bigint | long |

| binary | byte[] |

| bit | bool |

| char | string |

| date | DateTime |

| datetime | DateTime |

| datetime2 | DateTime |

| datetimeoffset | DateTime |

| decimal | decimal |

| FILESTREAM attribute | byte[] |

| float | double |

| image | byte[] |

| int | int |

| money | decimal |

| nchar | string |

| ntext | string |

| numeric | decimal |

| nvarchar | string |

| real | float |

| rowversion | byte[] |

| smalldatetime | DateTime |

| smallint | short |

| smallmoney | decimal |

| sql_variant | byte[] |

| text | string |

| time | DateTime |

| timestamp | byte[] |

| tinyint | byte |

| uniqueidentifier | Guid |

| varbinary | byte[] |

| varchar | string |

| xml | string |

U-SQL supports the following nullable types:

byte? sbyte? int? uint? long? ulong? float? double? decimal? short? ushort? bool? Guid? DateTime? char?

After the External table is created, it will appear under Tables:

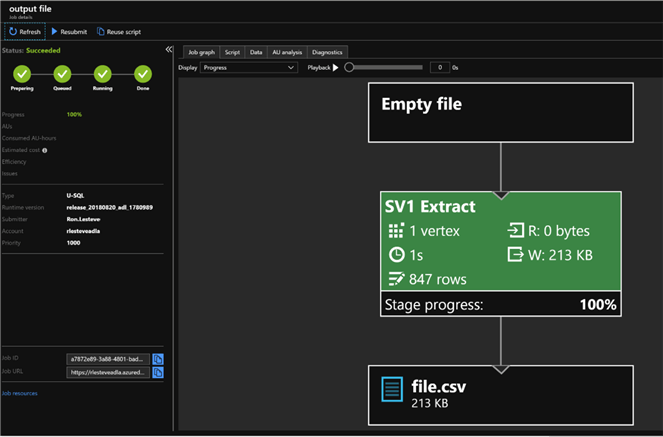

Query the federated external ASQLDB table and output result to file using the U-SQL query below:

USE DATABASE IoTADLADatabase; @query = SELECT CustomerID, NameStyle, Title, FirstName, MiddleName, LastName, Suffix, CompanyName, SalesPerson, EmailAddress, Phone, PasswordHash, PasswordSalt, rowguid, ModifiedDate FROM dbo.Customer; OUTPUT @query TO "/Output/file.csv" USING Outputters.Csv();

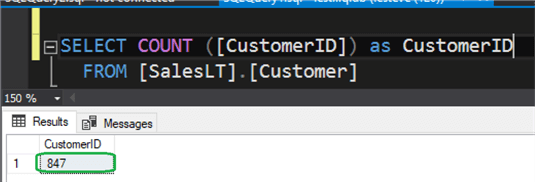

Once the query completes successfully, we can see that the newly generated output file contains 847 rows.

Sure enough, the count of 847 matches our ASQL DB Customer Table count as well:

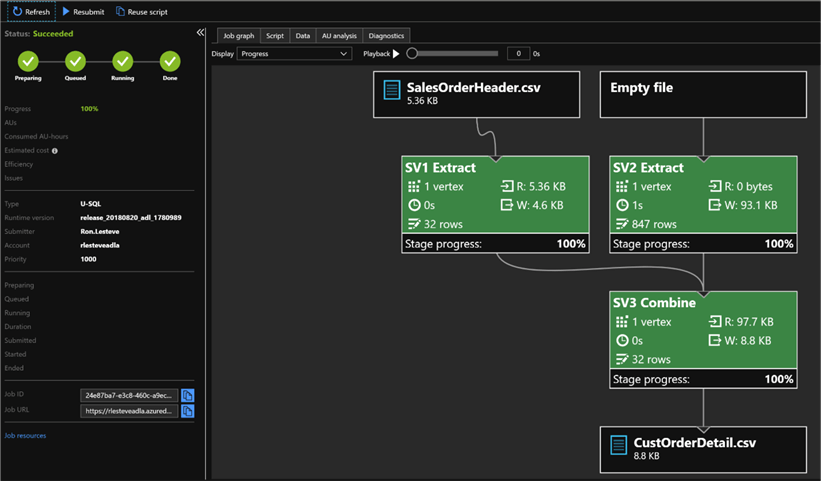

Joining Files in ADLS with Data in ASQLDB

Now that we have successfully run a test federated U-SQL query against the ASQLDB, we can now write a U-SQL query to join a file from ADLS to data in ASQLDB by using a common join key.

Let's assume that millions of records of Sales Order Header Data is coming in to our Azure Data Lake Store on a daily basis and will need to be processed further with data residing in the data warehouse in ASQLDB. To keep this last exercise simple, let's assume that there are 32 Sales Order Header records residing in ADLS. Our column of interest in this data set is CustomerID which we want to join to CustomerID from the External ASQL Customer Table to get us pertinent customer detail that we need.

USE DATABASE IoTADLADatabase;

//

// factorderheader - Input File

//

@factorderheader =

EXTRACT

SalesOrderID int,

RevisionNumber byte,

Status byte,

SalesOrderNumber string,

PurchaseOrderNumber string,

AccountNumber string,

CustomerID int,

ShipToAddressID int?,

BillToAddressID int?,

ShipMethod string,

CreditCardApprovalCode string,

SubTotal decimal,

TaxAmt decimal,

Freight decimal,

TotalDue decimal,

Comment string,

rowguid Guid

FROM "/Output/SalesOrderHeader.csv"

USING Extractors.Csv(skipFirstNRows:1);

//

// customerdetail - Input File

//

@customerquery =

SELECT

CustomerID,

NameStyle,

Title,

FirstName,

MiddleName,

LastName,

Suffix,

CompanyName,

SalesPerson,

EmailAddress,

Phone,

PasswordHash,

PasswordSalt,

rowguid,

FROM dbo.Customer;

//

// Result Set - Join Logic

//

@result_set =

SELECT

o.SalesOrderID,

o.RevisionNumber,

o.Status,

o.SalesOrderNumber,

o.PurchaseOrderNumber,

o.AccountNumber,

o.CustomerID,

o.ShipToAddressID,

o.BillToAddressID,

o.ShipMethod,

o.CreditCardApprovalCode,

o.SubTotal,

o.TaxAmt,

o.Freight,

o.TotalDue,

o.Comment,

c.NameStyle,

c.Title,

c.FirstName,

c.MiddleName,

c.LastName,

c.Suffix,

c.CompanyName,

c.SalesPerson,

c.EmailAddress,

c.Phone

FROM @factorderheader AS o

LEFT JOIN

@customerquery AS c

ON o.CustomerID == c.CustomerID;

//

// Customer Detail - Output File

//

OUTPUT @result_set

TO "/Output/CustOrderDetail.csv"

USING Outputters.Csv(outputHeader: true);

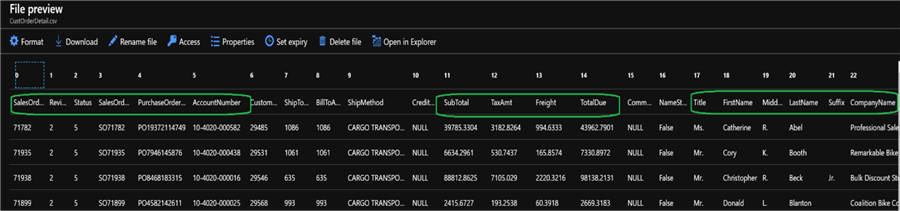

As we can see from the job graph, the job executed successfully and output 32 rows in the combined output file.

Based on the output file, we can see that we were able to successfully join Sales Order Header Data in ADLS (Azure Data Lake Store) with Customer Detail Data in ASQLDB (Azure SQL DB) on a common join key (CustomerID) and this was achieved by Federated Queries that we set up and ran in ADLA (Azure Data Lake Analytics).

Next Steps

- For architectural guidance on designing big data solutions in Azure, see the Microsoft Patterns and Practices architecture guide for Big Data.

- Be sure to explore U-SQL Tips and Best Practices for the SQL Programmer specifically related to Case-Sensitivity, C# Data Types, C# Comparison Operators, Comparing null Values, & Some Common SQL Constructs Are not Supported.

- For more information about U-SQL language, see the U-SQL Language Reference.

About the author

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master�s in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tec

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master�s in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tecThis author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2019-01-24