By: Eric Blinn | Updated: 2019-02-15 | Comments (5) | Related: > Indexing

Problem

I understand what the SQL Server fill factor is, but how do I choose an appropriate fill factor for any given index?

Solution

This prior tip will help understand what an index fill factor is and how it affects the size of a SQL Server index. In short, the bigger the fill factor the smaller the disk footprint of the index and vice versa. At the end of that tip it advises that indexes and their fill factors should be well planned. This tip will focus on how to plan for an appropriate fill factor by analyzing the impact of different fill factor numbers and by studying a few facts about the data in the index columns.

SQL Server Fill Factor Example

To better understand how different fill factors affect indexes we will make 4 identical tables. Each table will have 100,000 identical rows and be clustered based on a GUID column. That cluster will be made with different fill factors of 70, 80, 90, and 99.

This script will create the tables and put the rows in them.

DROP TABLE IF EXISTS dbo.RandomValue70; CREATE TABLE dbo.RandomValue70(num INT IDENTITY, i CHAR(36), j CHAR(36)); INSERT INTO dbo.RandomValue70 (i, j) SELECT TOP 100000 NEWID(), NEWID() FROM sys.all_columns CROSS JOIN sys.columns; CREATE UNIQUE CLUSTERED INDEX PK_RandomValue70 ON dbo.RandomValue70(i) WITH (FILLFACTOR=70, PAD_INDEX=ON) GO DROP TABLE IF EXISTS dbo.RandomValue80; CREATE TABLE dbo.RandomValue80(num INT IDENTITY, i CHAR(36), j CHAR(36)); INSERT INTO dbo.RandomValue80 (i, j) SELECT i, j FROM dbo.RandomValue70; CREATE UNIQUE CLUSTERED INDEX PK_RandomValue80 ON dbo.RandomValue80(i) WITH (FILLFACTOR=80, PAD_INDEX=ON) GO DROP TABLE IF EXISTS dbo.RandomValue90; CREATE TABLE dbo.RandomValue90(num INT IDENTITY, i CHAR(36), j CHAR(36)); INSERT INTO dbo.RandomValue90 (i, j) SELECT i, j FROM dbo.RandomValue70; CREATE UNIQUE CLUSTERED INDEX PK_RandomValue90 ON dbo.RandomValue90(i) WITH (FILLFACTOR=90, PAD_INDEX=ON) GO DROP TABLE IF EXISTS dbo.RandomValue99; CREATE TABLE dbo.RandomValue99(num INT IDENTITY, i CHAR(36), j CHAR(36)); INSERT INTO dbo.RandomValue99 (i, j) SELECT i, j FROM dbo.RandomValue70; CREATE UNIQUE CLUSTERED INDEX PK_RandomValue99 ON dbo.RandomValue99(i) WITH (FILLFACTOR=99, PAD_INDEX=ON) GO

This query tells us about the 4 tables that were just created and their indexes.

SELECT

tbl.name TableName

, idx.name IndexName, idx.fill_factor

, CAST(Fragmentation.avg_page_space_used_in_percent AS DECIMAL(4,1)) ActualFillFactor

, CAST(Fragmentation.avg_fragmentation_in_percent AS DECIMAL(4,1)) CurrentFragmentation

, Fragmentation.fragment_count

, CAST(Fragmentation.avg_fragment_size_in_pages AS DECIMAL(8,1)) AvgFragmentSize

FROM

sys.tables tbl

INNER JOIN

sys.indexes idx ON tbl.object_id = idx.object_id

CROSS APPLY

sys.dm_db_index_physical_stats(DB_ID(), tbl.object_id, idx.index_id, 0, 'SAMPLED') Fragmentation

WHERE

tbl.name LIKE 'RandomValue[0-9]%';

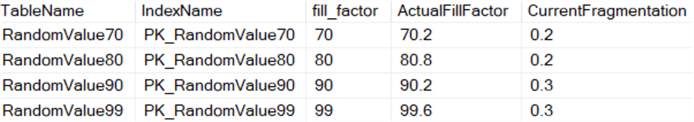

During the writing of this post, the results showed that the actual fill factor came in very close to the requested fill factor and that the index fragmentation is minimal. Note that the fill factor isn’t likely to come in exactly as the rows won’t necessarily line up exactly with the requested percentage and a single row cannot be split across multiple pages.

How SQL Server fill factor affects queries

To study how these different fill factors affect queries, these 4 identical statements will be executed. The only difference is the fill factor on the index that was set above.

SET STATISTICS IO ON; SET NOCOUNT ON; SELECT COUNT(*) FROM dbo.RandomValue70 WHERE i BETWEEN '001' AND '199'; SELECT COUNT(*) FROM dbo.RandomValue80 WHERE i BETWEEN '001' AND '199'; SELECT COUNT(*) FROM dbo.RandomValue90 WHERE i BETWEEN '001' AND '199'; SELECT COUNT(*) FROM dbo.RandomValue99 WHERE i BETWEEN '001' AND '199';

Upon executing the statements above, the result is 4 identical row counts and the IO stats, visible from the messages output tab.

Table 'RandomValue70'. Scan count 1, logical reads 151

Table 'RandomValue80'. Scan count 1, logical reads 131

Table 'RandomValue90'. Scan count 1, logical reads 118

Table 'RandomValue99'. Scan count 1, logical reads 107

From reviewing this output, we learn that the higher the fill factor value is on the index the lower the reads become. This makes sense as there are more rows stored on each page therefore requiring fewer pages to be read. Any time page reads can be reduced is good for query performance. All of which tells us that for read performance we need the highest fill factor possible.

Why not, then, just put every index at 99 or 100 and reduce the reads as much as possible? The answer to this question is fragmentation and how quickly indexes can become fragmented.

How SQL Server fill factors affect fragmentation

Continuing with this same script it is now time to add some new rows to the data set. This script will add 5,000 identical rows to each table. They will be randomly distributed throughout the cluster. After adding the new rows, rerun the index information script from above.

INSERT INTO dbo.RandomValue70 (i, j) SELECT TOP 5000 NEWID(), NEWID() FROM dbo.RandomValue70; INSERT INTO dbo.RandomValue80 (i, j) SELECT i, j FROM dbo.RandomValue70 WHERE num > 100000; INSERT INTO dbo.RandomValue90 (i, j) SELECT i, j FROM dbo.RandomValue70 WHERE num > 100000; INSERT INTO dbo.RandomValue99 (i, j) SELECT i, j FROM dbo.RandomValue70 WHERE num > 100000;

Upon adding just 5% more rows randomly throughout the table the fragmentation difference becomes immediately clear. A higher fill factor made it less likely the storage engine could find space on an existing page to put the new rows and instead had to split pages to make room. This led to a lower actual fill factor and a higher fragmentation rate.

Rerunning the SELECT statements from above yields very different results with the heavily fragmented index now having the highest number of reads.

Table 'RandomValue70'. Scan count 1, logical reads 151

Table 'RandomValue80'. Scan count 1, logical reads 131

Table

'RandomValue90'.

Scan

count

1,

logical reads 130

Table

'RandomValue99'.

Scan

count

1,

logical reads 210

Rows don’t just get inserted, sometimes existing rows change. This query will change about 7% of the rows to a new random value.

DECLARE @Mod INT, @Remainder INT; SELECT @Mod = 15, @Remainder = 2; UPDATE dbo.RandomValue70 SET i = NEWID() WHERE num % @Mod = @Remainder; UPDATE R80 SET i = R70.i FROM dbo.RandomValue80 R80 INNER JOIN dbo.RandomValue70 R70 ON R80.num = R70.num WHERE R70.num % @Mod = @Remainder; UPDATE R90 SET i = R70.i FROM dbo.RandomValue90 R90 INNER JOIN dbo.RandomValue70 R70 ON R90.num = R70.num WHERE R70.num % @Mod = @Remainder; UPDATE R99 SET i = R70.i FROM dbo.RandomValue99 R99 INNER JOIN dbo.RandomValue70 R70 ON R99.num = R70.num WHERE R70.num % @Mod = @Remainder;

Rerunning the index information query again shows that the fragmentation has gotten even worse.

A lower fill factor does not mean that there will never be fragmentation. Eventually, every index whose underlying data is changing will fragment. The UPDATE code snippet was run several more times with smaller @mod values and eventually even the lowest fill factor value indexes were heavily fragmented.

How to choose the best SQL Server fill factor value

An index will provide the most value with the highest possible fill factor without getting too much fragmentation on the index. Without a specific reason to choose an alternate value, consider 90 as a starting point and adjust from there. It's rare to go lower than that and it's especially rare to go below 80.

Consider how often index maintenance can be run on the indexes. If there are very limited windows to perform index maintenance to reduce fragmentation then smaller values should be considered to keep fragmentation down between maintenance windows.

The following is a list of occasions where a higher number can be considered.

- Does the underlying table get read significantly and updated minimally? This might be something like a master table or lookup table. These tables rarely change and are therefore not susceptible to significant fragmentation. They are read often and will benefit greatly from the fuller pages.

- Is the underlying table part of a data warehouse or other reporting environment that is read constantly and only updated periodically? The fragmentation problem can be mitigated by performing index maintenance after the ETL runs and all the queries will benefit from the higher fill factor.

- Is the index sorted on a column whose values constantly increase and whose values never change after the row is created? This would be something that defaults to current date and time, an identity column, or a number based on a sequence. There isn't a reason to leave significant space in the pages as no new rows will ever arrive in the database that would use that space. All the new rows will instead go to the end of the index as new pages.

Hopefully this gives you some better insight for the SQL Server fill factor setting and things to consider when setting the values.

Next Steps

- Check out these other articles:

About the author

Eric Blinn is the Sr. Data Architect for Squire Patton Boggs. He is also a SQL author and PASS Local Group leader.

Eric Blinn is the Sr. Data Architect for Squire Patton Boggs. He is also a SQL author and PASS Local Group leader.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2019-02-15