By: Ron L'Esteve | Updated: 2019-03-07 | Comments | Related: > Azure

Problem

As I begin to write more complex U-SQL scripts, I am interested in integrating more intricate C# code in my U-SQL scripts by using inline C# in my U-SQL jobs. Additionally, I am interested in using code-behind class files, custom functions and custom assemblies within U-SQL.

Solution

U-SQL is designed to blend the declarative nature of SQL with the procedural extensibility of C#. This blend of C# and U-SQL allow for optimal processing powers of big data work streams within Azure Data Lake Analytics. In this article, I will demonstrate how to use C# code within U-SQL scripts. I will also go into greater detail about more complex C# integrations with U-SQL such as code-behind class files, custom functions and custom assemblies.

Creating an Azure Data Lake Analytics Account

To process data in Azure Data Lake Analytics, you'll need an Azure Data Lake Analytics account and associate it with an Azure Data Lake store.

- In a web browser, navigate to http://portal.azure.com, and if prompted, sign in using the Microsoft account that is associated with your Azure subscription.

- In the Microsoft Azure portal, in the Hub Menu, click New. Then navigate to Data Lake Analytics.

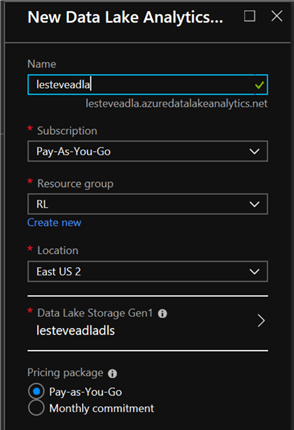

- In the New Data Lake Analytics Account blade, enter the following settings, and then click Create:

- Name: Enter a unique name

- Subscription: Select your Azure subscription

- Resource Group: Create a new resource group

- Location: Select a resource

- Data Lake Store: Create a new Data Lake Store

Create an Azure Data Lake Database

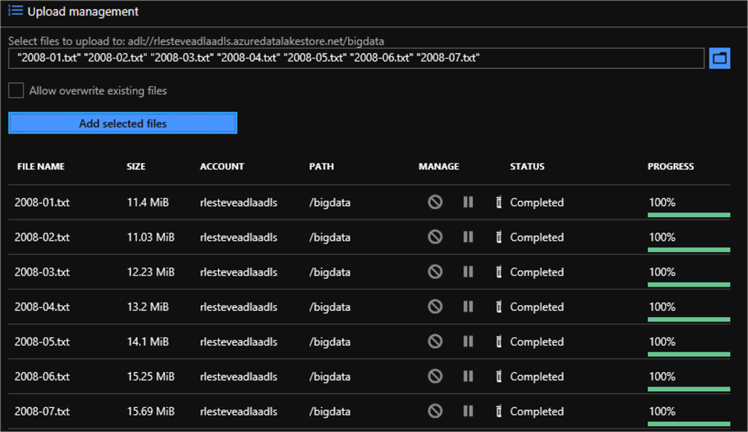

As a first step, I will begin by uploading files to my Azure Data Lake Store account. For the purposes of this demonstration, I will upload a file containing logs for a six-month period, with one file per month.

I will then create an Azure Data Lake Database by using the following script. For more detailed information on creating an Azure Data Lake Database, see my article on Creating an Azure Data Lake Database, in which I discuss how to create schemas, tables, views, table-valued functions and stored procedures in Azure Data Lake.

Creating a database allows you to store data in a structured format that is ready to be queried by jobs. I will create my database by clicking New Job in the blade for my Azure Data Lake Analytics account.

Within the new U-SQL Job blade, I'll type Create DB and then enter the following code:

CREATE DATABASE IF NOT EXISTS logdata;

USE DATABASE logdata;

CREATE SCHEMA IF NOT EXISTS iis;

CREATE TABLE iis.log (

date string,

time string,

client_ip string,

username string,

server_ip string,

port int,

method string,

stem string,

query string,

status string,

server_bytes int,

client_bytes int,

time_taken int?,

user_agent string,

referrer string,

INDEX idx_logdate CLUSTERED (date)

) DISTRIBUTED BY HASH(client_ip);

@log =

EXTRACT

date string,

time string,

client_ip string,

username string,

server_ip string,

port int,

method string,

stem string,

query string,

status string,

server_bytes int,

client_bytes int,

time_taken int?,

user_agent string,

referrer string

FROM

"/bigdata/{*}.txt" USING Extractors.Text(' ', silent : true);

INSERT INTO iis.log

SELECT

*

FROM

@log;

CREATE VIEW iis.summary AS

SELECT

date,

COUNT(*) AS hits,

SUM(server_bytes) AS bytes_sent,

SUM(client_bytes) AS bytes_received

FROM

iis.log

GROUP BY

date;

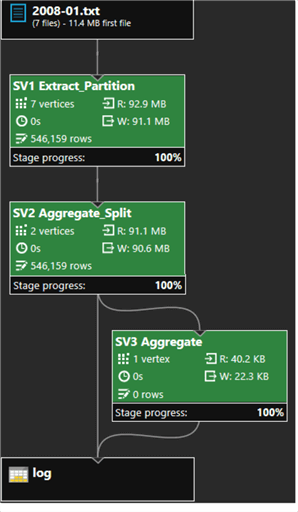

I'll then click Submit Job and observe the job status as it runs. Once the job completes running successfully, I notice from the job graph that all 7 of my files were successfully extracted and processed.

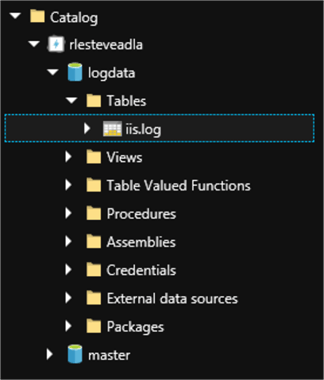

Next, I will return to the blade for my Azure Data Lake Analytics account and click Data Explorer. Under Catalog, I can now confirm that my logdata database has been created along with a table called iis.log.

Using Inline C# in a U-SQL Job

Now that my Azure Data Lake Database has been created, I am ready to begin writing some U-SQL and C# scripts. The simplest method of utilizing C# in a U-SQL job is to include inline calls to C# functions in your U-SQL code.

For this demo, I will Use a C# function in a U-SQL query to determine the IP address family of client IP addresses by examining the AddressFamily property of the Microsoft .NET System.Net.IPAddress class.

Most IP addresses in use on the Internet belong to the InterNetwork (IPv4) family such as 190.1.3.6 or the InterNetworkV6 (IPv6) family.

Once again, I will click New Job on my blade for Azure Data Lake Analytics in the Azure portal and will name it Get IP Details.

In my code editor, I will enter the following code, which will query the iis.log table and return each distinct client_ip address and the IP family to which the address belongs:

USE DATABASE logdata; @ipdetails = SELECT DISTINCT client_ip, System.Net.IPAddress.Parse(client_ip).AddressFamily.ToString() AS ipfamily FROM iis.log; OUTPUT @ipdetails TO "/output/ipdetails.csv" USING Outputters.Csv();

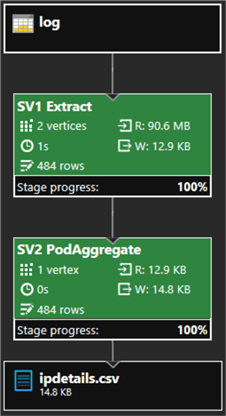

Once my job successfully finishes running after I click submit, I will check the job graph to ensure the process ran smoothly and error free.

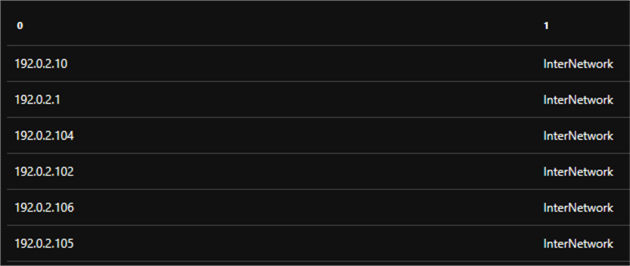

I will then navigate to my Output tab and will select ipdetails.csv to preview my results. Sure enough, I can see that all the client_ip addresses now belong to the InterNetwork family.

Using a More Complex C# Expression in a Query

Now that I have successfully written Inline C# in a U-SQL script, I want to take things one step further by writing more complex C# Expressions in my query. I now want to use C# expression to extract the parameters for each page request.

Each logged web requests includes the web page that was requested which would be the stem, and the query string that was passed in the request. Note that a query string can include parameters that are passed to the page in the name/value pair format name=value; with multiple parameters separated by a & character.

As an example, the web request http://rlesteveserver/rlestevepage1.aspx?p1=9&p2=234 includes a stem (/rlestevepage1.aspx) and two parameter name/value pairs (p1=9, and p2=234).

I will begin by navigating to my Azure Data Lake Analytics account, clicking New Job and naming it Identify Parameters.

In my code editor I'll enter the following code, which will query the iis.log table to return a SQL.ARRAY object that contains an array of C# strings. The array is populated by splitting the query field into one or more strings based on the & delimiter, and then further filtering the parameters by using a C# lambda expression to include only values that include a = character.

Each array of parameter name/value pairs is then unpacked into the result set by using the CROSS APPLY function with EXPLODE expression.

USE DATABASE logdata;

@paramstrings =

SELECT

stem AS page,

new SQL.ARRAY < string >(

query.Split('&').Where(

x => x.Contains("=")

)

) AS paramstrings

FROM

iis.log;

@params =

SELECT

DISTINCT page,

param

FROM

@paramstrings CROSS APPLY EXPLODE(paramstrings) AS t(param);

OUTPUT @params TO "/output/params.csv"

ORDER BY

page,

param USING Outputters.Csv();

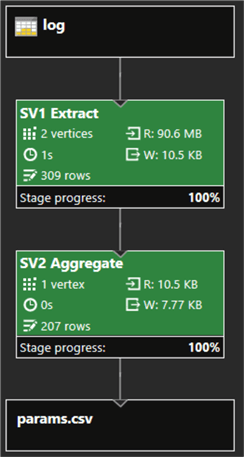

After I click submit and the job successfully completes, I will look at the job graph to ensure that the process ran as expected.

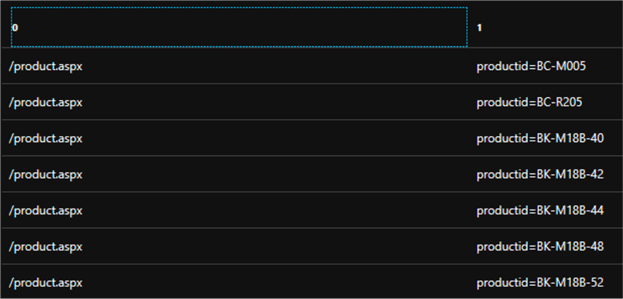

I will then click to output tab and select params.csv to see a preview of the results, which contains pages for which parameters were specified, and the parameter name/value pairs for each parameter requested.

Using a Code-Behind Class

To include custom functions in your U-SQL jobs, you can implement a code-behind class for your U-SQL query.

To get started with creating this code behind file, I'll need to install a few Azure Data Lake Analytics features to my Visual Studio application. I can do this by navigating to the Download Center and installing the following Azure Data Lake Tools for Visual Studio.

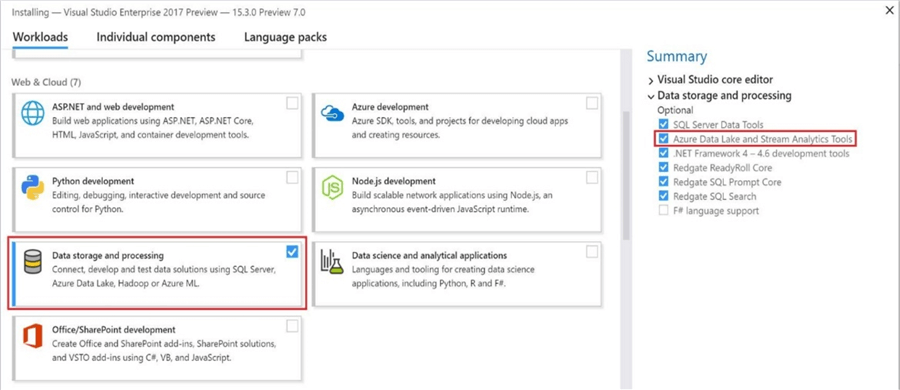

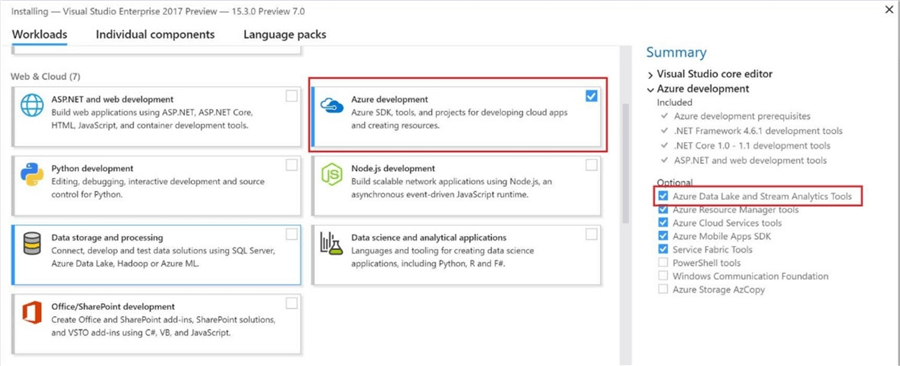

The tool is part of the Data storage and processing and Azure Development workloads in Visual Studio Installer. Enable either one of these two workloads as part of your Visual Studio installation.

Enable the Azure development workload as shown:

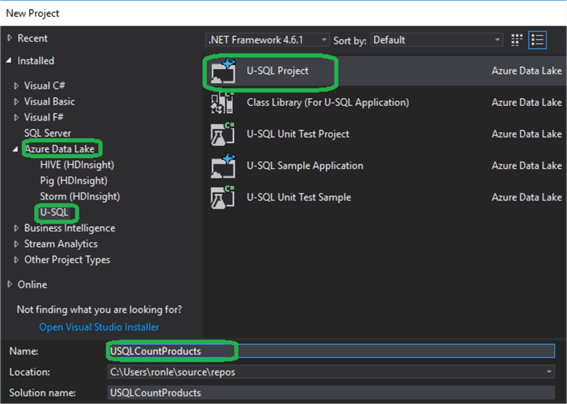

Create a U-SQL Visual Studio Project

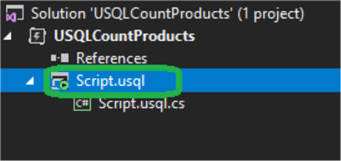

Once the installation is complete, I will go ahead and create a new Azure Data Lake U-SQL Project called 'USQLCountProducts'.

Implement a Code-Behind Class File

I'll now create a function to extract parameter values from my log query strings. The custom function will be defined in a code-behind class file.

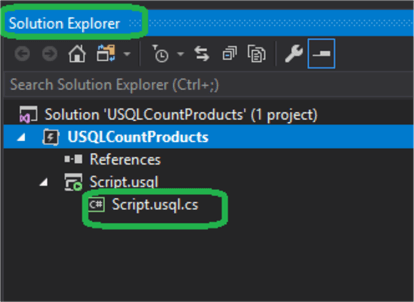

I'll start by opening the Solution Explorer pane and expanding the Script.usql file, and then double click on the Script.usql.cs code-behind file to open it in the editor.

I'll then add the following code within my USQLCountProducts namespace which will define a class named Requests, which contains a function named GetParameter. The GetParameter function attempts to find a specified parameter name in a provided query string, and if the parameter exists, the function extracts and returns its value. I will then save the file.

public static class Requests

{

public static string GetParameter(string queryString, string parameterName)

{

string paramValue = "";

int startParam = queryString.IndexOf(parameterName + "=");

if (startParam >= 0)

{

int startVal = queryString.IndexOf("=", startParam) + 1;

int endVal = queryString.IndexOf("&", startVal);

if (endVal < 0)

{

endVal = queryString.Length;

}

paramValue = queryString.Substring(startVal, endVal - startVal);

}

return paramValue;

}

}

Use the Custom Function in a U-SQL Query

Some of the query strings in the web logs include a productid parameter, indicating that the user viewed details about a specific product on your web site. In this exercise, you will implement a custom C# function in a code-behind file to parse the query string and extract a parameter value, enabling you to write a U-SQL query that counts the number of requests for each product.

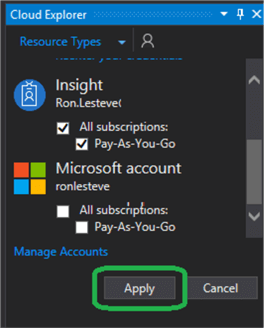

I'll start by opening the Cloud Explorer pane and I'll then connect to my Azure account.

After I have signed in, I can expand the Data Lake Analytics node under my subscription to see my Azure Data Lake Analytics service. In the Databases list, I select logdata.

I then add and save the following U-SQL code to the Script.usql code file, which uses the fully-qualified name of your function to extract the value of each productid parameter, and then uses that value in a query to aggregate the data by counting the number of requests for each productid value:

@products =

SELECT

USQLCountProducts.Requests.GetParameter(query, "productid") AS product

FROM

iis.log

WHERE

query.Contains("productid");

@productRequests =

SELECT

product,

COUNT(*) AS requests

FROM

@products

GROUP BY

product;

OUTPUT @productRequests TO "/output/productRequests.csv" USING Outputters.Csv();

Submit the Query

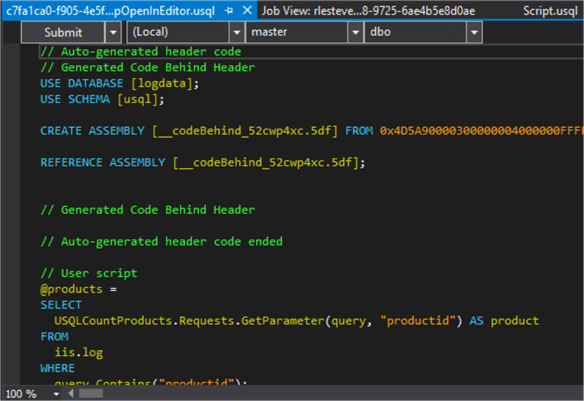

I can now submit my query from Visual Studio. The codebehind file is compiled into an assembly and temporarily deployed to the Azure Data Lake factory, where it can be used by my U-SQL query.

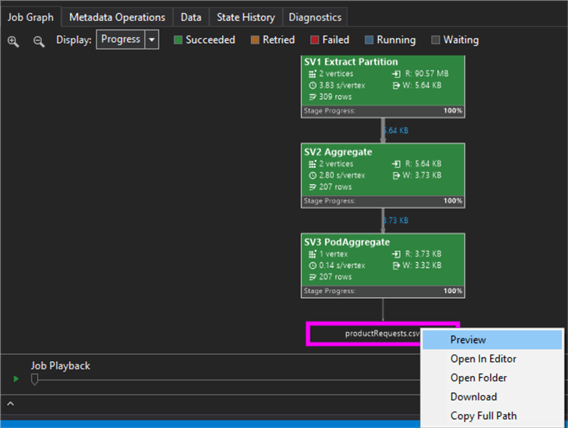

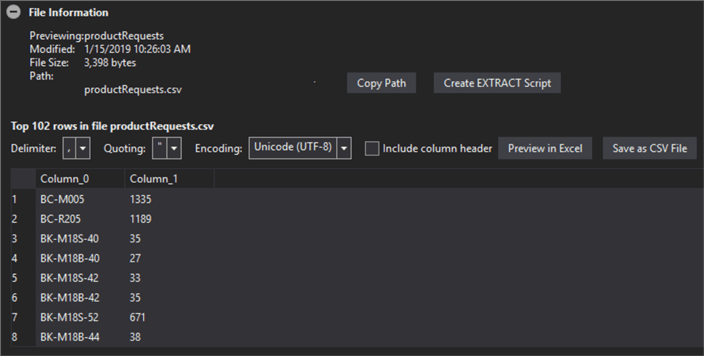

Once the job completes running successfully, I can navigate to the job graph, right-click the productRequests.csv output and click Preview.

When the preview opens, I'll verify that the output includes a table of product IDs and a count of requests for each product.

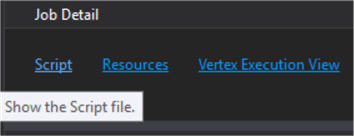

Next, I will return to the Job View pane, and under the job summary, I click Script to view the script that was uploaded to Azure Data Lake Analytics.

I'll briefly review the script and see that Visual Studio automatically added CREATE ASSEMBLY and REFERENCE ASSEMBLY statements to the beginning of the script, and a DROP ASSEMBLY statement to the end of the script. These statements were used to enable the U-SQL code to access the custom function in your code-behind class, which was compiled into a .NET assembly and deployed to the Azure Data Lake catalog when I submitted the query.

Creating a Custom Assembly

The ability to create a custom assembly and reuse custom functions in multiple U-SQL jobs is a nice option available in Azure Data Lake Analytics. This process involves creating and deploying a custom C# assembly to the Azure Data Lake store.

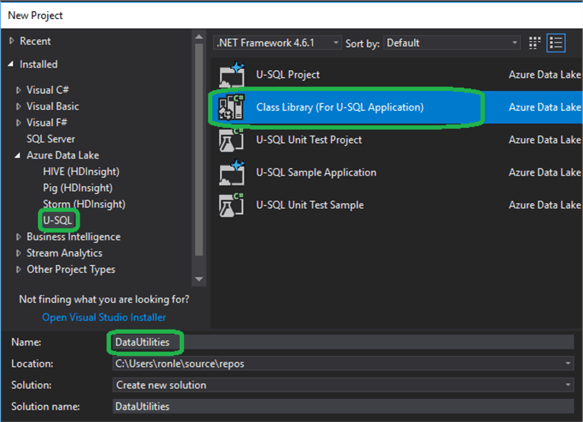

I will begin by creating a new Azure Data Lake USQL project named DataUtilities using the Class Library (for U-SQL Application) template.

A class library project includes only C# files, which can be used to implement classes containing functions that can be used from U-SQL scripts in Azure Data Lake Analytics. In this example, I will implement a simple utility class that includes a function to convert Bytes to Kilobytes.

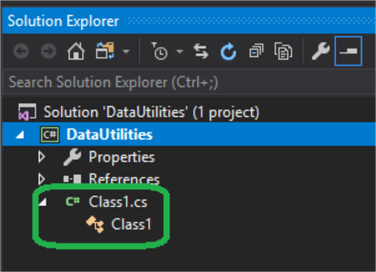

In Visual Studio, in the Solution Explorer pane, I'll open the Class1.cs code file if it is not already open.

I will now delete all the Using Statements and enter and save the following code in Class1, which defines a class named Convertor, which contains a function named BytesToKb. The BytesToKb function converts a specified value in Bytes to Kilobytes:

namespace DataUtilities

{

public class Convertor

{

public static float BytesToKb(long? bytes)

{

return (float)bytes / 1000;

}

}

}

Next, I will register the custom class library in the Azure Data Lake Analytics account before I use it in a U-SQL query.

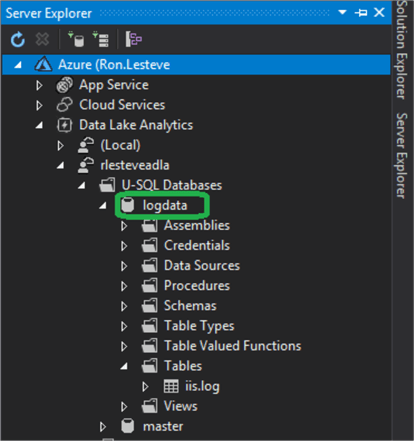

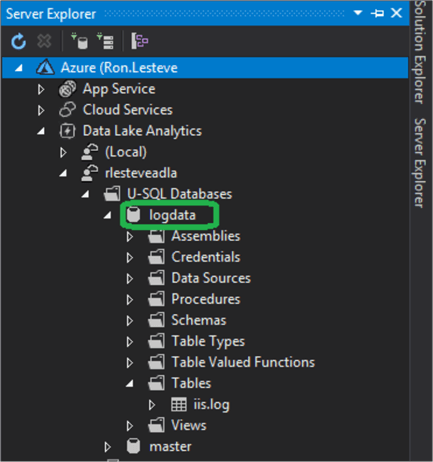

To do this, I will navigate to Server Explorer and then view the Cloud Explorer pane after signing in to my Azure account. After I have signed in and expanded my Data Lake Analytics account, I should see the logdata database.

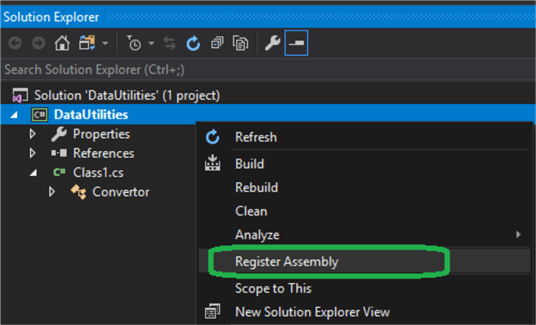

In the Solution Explorer pane, I will right-click the DataUtilities project and click Register Assembly.

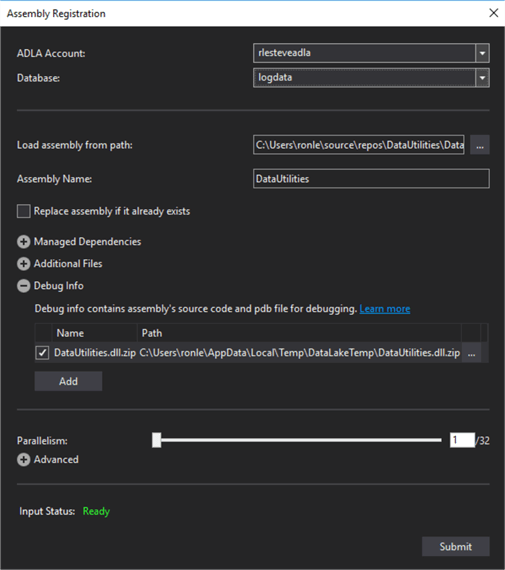

In the Assembly Registration dialog box, in the Analytics Account drop-down list, I have selected my Azure Data Lake Analytics account and the I selected logdata from the Database drop-down list.

I will click Submit after reviewing the remaining settings in the dialog box and the assembly will be compiled and deployed to my Azure Data Lake Analytics account.

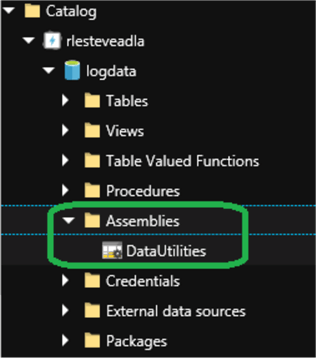

Once deployed, I will open my Azure Data Lake Analytics account in the Azure portal and then click Data Explorer; and then browse to the Assemblies folder in my logdata database to verify that the assembly has been registered.

Now that I've registered the assembly containing my custom class, I can reference it in a USQL script by creating a New Job called Use Custom Class in my Azure Data Lake Analytics Account.

In the code editor, I'll enter the following code, which queries the iis.summary view, and uses the DataUtilities.Convertor.BytesToKb function you created in your custom class to convert the bytes_received value to Kilobytes.:

USE DATABASE logdata; REFERENCE ASSEMBLY DataUtilities; @kb = SELECT date, DataUtilities.Convertor.BytesToKb(bytes_received) AS kb_received FROM iis.summary; OUTPUT @kb TO "/output/kb.csv" ORDER BY date USING Outputters.Csv();

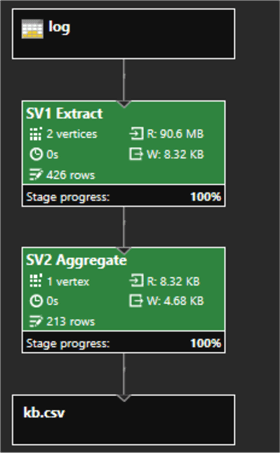

I then click Submit Job and observe the job details as it is run.

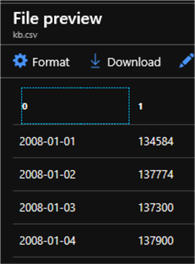

Once the job completed, I will click the Output tab and select kb.csv to see a preview my results which contain the number of Kilobytes received on each date.

Next Steps

- In this article, I discussed how to use simple and complex C# code in U-SQL queries running in Azure Data Lake Analytics. Additionally, I investigated deploying and using C# code-behind files and custom C# assemblies.

- For more information related to Using C# with U-SQL, see Microsoft's article Extending U-SQL Expressions with User-Code, which contains additional detailed examples.

- My article Using Azure Data Lake Analytics to Process U-SQL Queries discusses details related to writing U-SQL queries for big data processing.

- For a more concentrated guide on the extensibility and programmability of the U-SQL language that's enabled by C#, check out Microsoft's U-SQL programmability guide

About the author

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master�s in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tec

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master�s in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tecThis author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2019-03-07