By: Fikrat Azizov | Updated: 2019-06-20 | Comments (1) | Related: 1 | 2 | 3 | > Azure Data Factory

Problem

In these series of posts, I am going to explore Azure Data Factory (ADF), compare its features against SSIS and show how to use it towards real-life data integration problems. In the previous post, I provided an overview of triggers and explained how to create a tumbling window trigger to schedule pipeline executions. This trigger type is good for automating historical data type loads, however if your purpose is to automate future loads with more advanced scheduling options, a schedule trigger could be a better option, so we will be exploring schedule triggers in this post.

Solution

Azure Data Factory Schedule Triggers

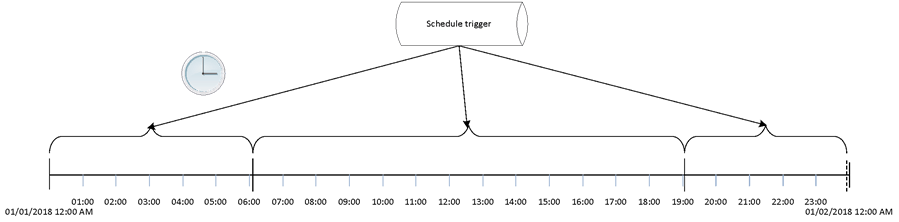

A schedule trigger runs pipelines on a wall-clock schedule and supports periodic and advanced calendar options. As we have learned in the previous post, tumbling window triggers allow only minute-based and hourly-based frequencies. Schedule triggers offer daily, weekly and monthly frequencies, in addition to minute and hour based settings. Furthermore, with weekly and monthly frequencies, schedule triggers allow specifying multiple week days or month days and multiple time windows within each day, which means, its time intervals do not need to be the same size, see example below for a schedule trigger with three periods of different lengths:

I think schedule triggers are a much better fit for real-life job scheduling scenarios, although they do not allow initiation of past data loads.

Creating Schedule Trigger in Azure Data Factory

In the previous post, we created a trigger from the pipeline authoring window. I'll demonstrate a slightly different method of creating triggers in this exercise- we'll first create a trigger using the 'Triggers' screen and then assign it to multiple pipelines.

Because schedule triggers allow assigning to multiple pipelines, I will assign this trigger to two pipelines (CopyPipeline_l6c and SQL_AQSL_PL) we created in previous posts.

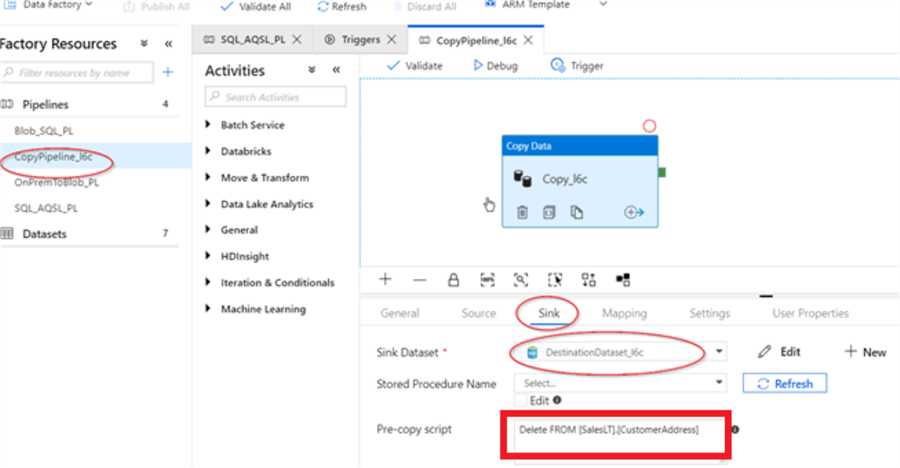

Before we proceed further, let's add a purge query for the destination table for pipeline CopyPipeline_l6c, to prevent it from failing due to PK errors:

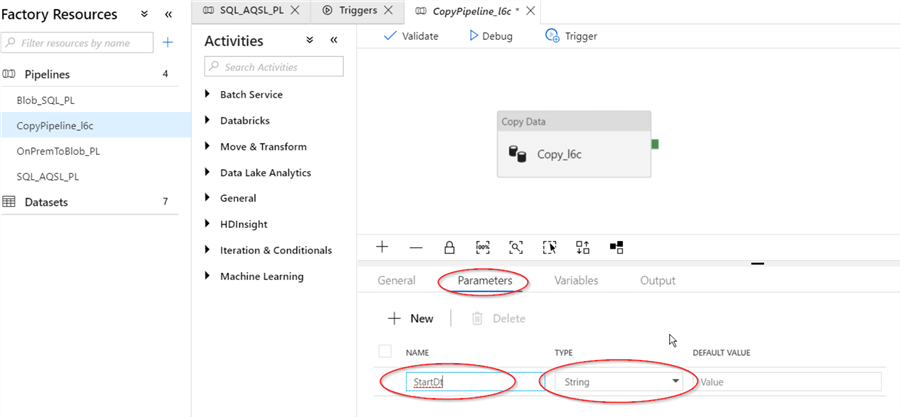

Next, I will also add a parameter named 'StartDt' to this pipeline, to be able to examine values passed from the trigger, similar to how we did in the previous post:

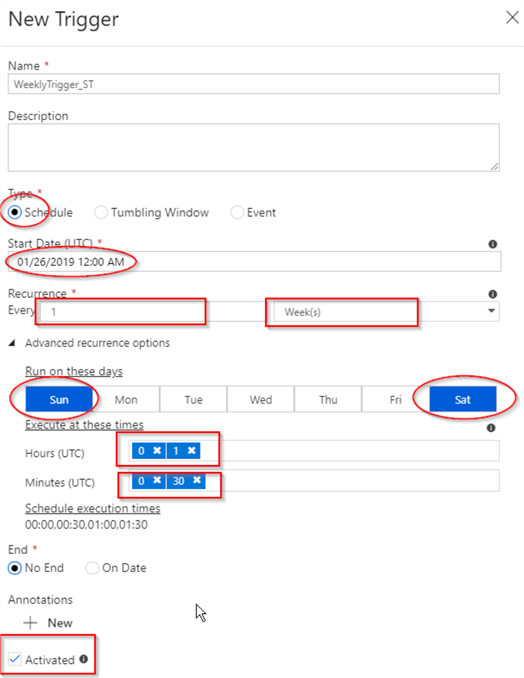

Our trigger will have the following configuration parameters:

- Type: Schedule

- Start time: 12 am

- Frequency: weekly, every one week

- Week days Sat, Sun

- Hours: 0,1

- Minutes: 0,30

ADF will calculate all combinations of hour/minute settings and produce eight time windows- 12:00 AM, 0:30 AM, 1:00 AM, 1:30 AM UTC time for Thursday and Friday. I have selected the trigger start time and time windows settings in a way to ensure that some periods fall prior, while others fall in the future in respect to our current configuration time (configuration time was 0:49 AM UTC time), as I am curious to see how ADF will handle past periods.

Here are steps I followed to create a schedule trigger with the above parameters:

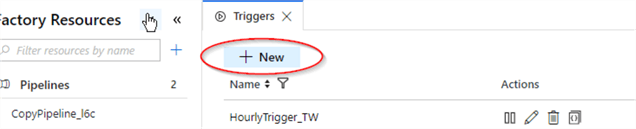

- Opened 'Triggers' tab from Factory Resources tab and hit '+New' button:

- Entered trigger name (WeeklyTrigger_ST in this example), start date/time, confirmed that type is set to 'Schedule', selected weekly recurrence, selected Sat, Sun as weekdays, added 0,1 to 'Hours' field and 0,30 to 'Minutes' field. Finally, I have checked 'Activated' box to ensure the trigger becomes active as soon as it is published. Here's a screenshot with all settings:

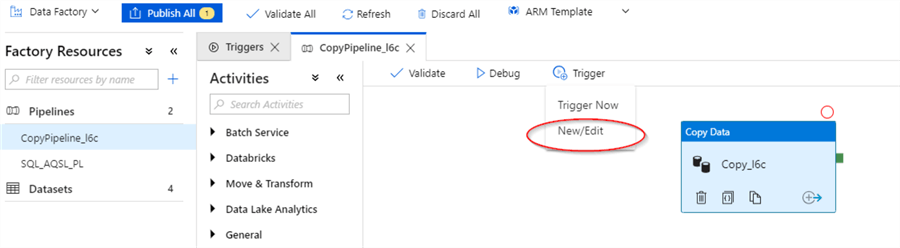

- Next, to assign pipelines to this trigger, I've switched to the 'Pipelines' window, selected pipeline CopyPipeline_l6c created earlier, clicked New/Edit command under 'Trigger' menu:

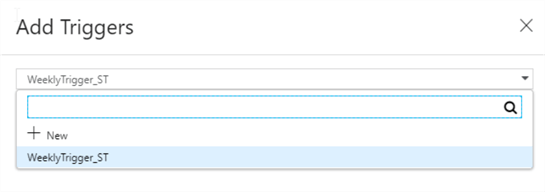

- Next, I selected the name of schedule trigger we just created from drop-down list and confirmed:

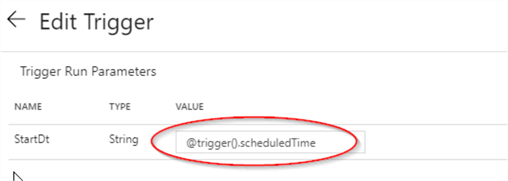

- Next, I've reviewed trigger settings in 'Edit Trigger' dialog window and confirmed to switch to the parameter page and assigned system variable @trigger().scheduledTime to the pipeline parameter StartDt we've created earlier. Please note, we used @trigger().outputs.windowStartTime system variable, when we configured tumbling window trigger in the previous post. However, we can't use the same variable this time, because schedule trigger has its own set of available system variables (see this article for the list of available system variables):

- Finally, I repeated steps 3-5 to assign the trigger WeeklyTrigger_ST to SQL_AQSL_PL pipeline.

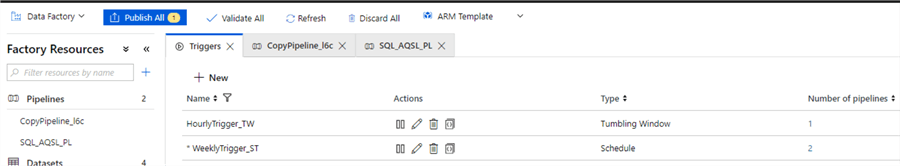

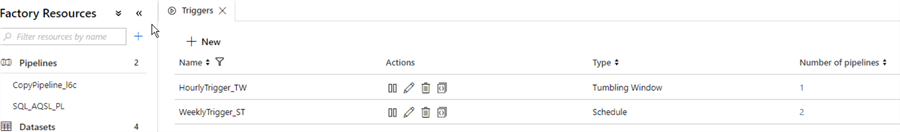

Here is the screenshot from the 'Triggers' page with two triggers we have created so far:

Please note the new trigger's name is preceded with a star symbol to indicate it has pending changes. In addition, as we can see from last column, two pipelines have been assigned to this trigger. As always, let's publish the changes and see the triggers list again:

Here is the JSON scripts created behind the scenes for the new trigger WeeklyTrigger_ST:

{

"name": "WeeklyTrigger_ST",

"properties": {

"runtimeState": "Started",

"pipelines": [

{

"pipelineReference": {

"referenceName": "SQL_AQSL_PL",

"type": "PipelineReference"

},

"parameters": {

"StartDt": "@trigger().scheduledTime"

}

},

{

"pipelineReference": {

"referenceName": "CopyPipeline_l6c",

"type": "PipelineReference"

},

"parameters": {

"StartDt": "@trigger().scheduledTime"

}

}

],

"type": "ScheduleTrigger",

"typeProperties": {

"recurrence": {

"frequency": "Week",

"interval": 1,

"startTime": "2019-01-26T00:00:00.000Z",

"timeZone": "UTC",

"schedule": {

"minutes": [

0,

30

],

"hours": [

0,

1

],

"weekDays": [

"Saturday",

"Sunday"

]

}

}

}

}

}

Monitoring Execution Results in Azure Data Factory

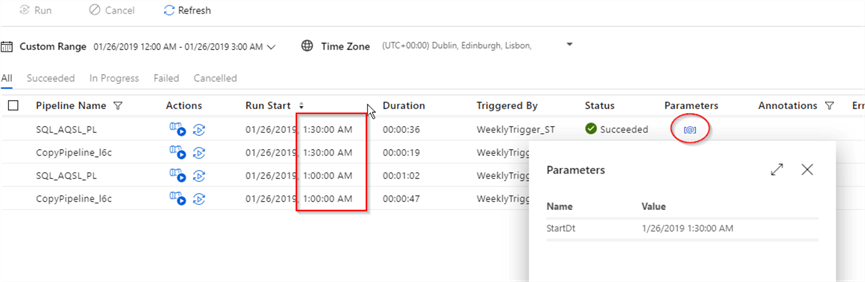

I waited until the start of last window for Friday (1:30 am) and switched to ADF monitoring page to see execution results. We can also examine parameter values assigned to each execution. Here is the screenshot from ADF monitoring page:

Here are few observations, based on above screenshot:

- The schedule trigger didn't run for past periods (12:00 AM,0:30 AM)

- Each pipeline had two future time executions and all of them were successful.

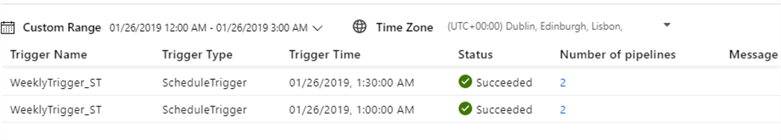

Another way of monitoring trigger execution activity is using the 'Trigger Runs' screen:

Conclusion

Schedule triggers offer much more flexible configuration options, but they cannot initiate historical loads. That means, if your pipeline needs to handle both past and future periods, you need to assign both tumbling window and schedule triggers to it. In addition, the ability of assigning multiple pipelines to the same trigger makes schedule triggers very valuable.

Next Steps

- Read: Stairway to Azure Data Factory: Automating pipeline executions, Part 1 of 3

- Read: Pipeline execution and triggers in Azure Data Factory

About the author

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2019-06-20