By: Fikrat Azizov | Updated: 2019-07-01 | Comments (1) | Related: 1 | 2 | 3 | > Azure Data Factory

Problem

In these series of posts, I am going to explore Azure Data Factory (ADF), compare its features against SQL Server Integration Services (SSIS) and show how to use it towards real-life data integration problems. In the previous two posts, I have provided an overview of triggers and explained how to create time based triggers to automate pipeline executions. Sometimes data flows need to be kicked-off in response to certain events, rather than on a pre-scheduled time interval. In this post, we will be exploring event-based triggers, which initiate pipelines in response to file events, such as file arrival or removal.

Solution

Event-based triggers in Azure Data Factory

Event-based triggers start pipelines in response to file deposit and removal events on Azure Blob Storage. This feature leverages Azure Event Grid functionality, so we need to follow the below steps to enable Azure Event Grid for our subscription:

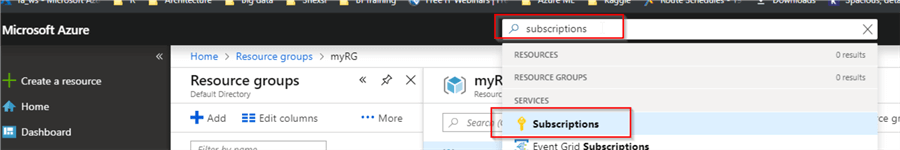

- Open Azure Portal, type 'subscriptions' in the top search box and select 'Subscriptions' menu:

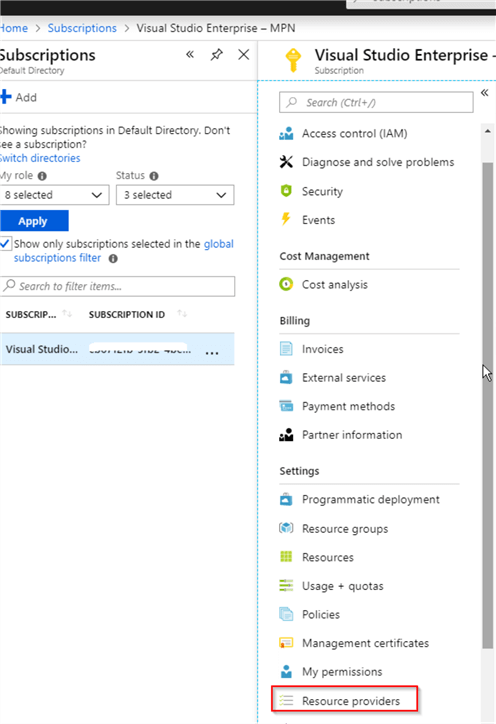

- Choose your subscription name and scroll down the menu panel to select 'Resource providers' command:

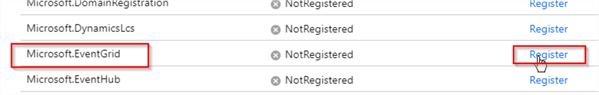

- Scroll down the provider list to find 'Microsoft.EventGrid' provider and click 'Register' button:

Data flow description in Azure Data Factory

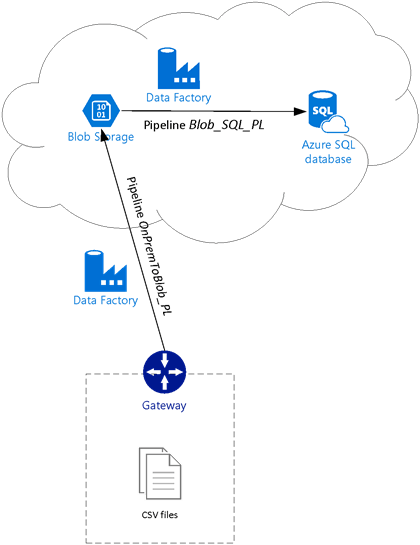

In earlier posts dedicated to file transfer pipelines (see Transfer On-Premises Files to Azure Blob Storage), we created a blob storage account, hosting container csvfiles and built pipeline OnPremToBlob_PL, which transferred CSV files into that container. Then we built pipeline Blob _SQL_PL to bring those files from blob storage into Azure SQL Database and executed both pipelines manually. In this exercise, we will create an event trigger to initiate pipeline Blob_SQL_PL automatically, in response to file deposit events into the csvfiles container and use pipeline OnPremToBlob_PL to deposit the CSV files into this container, as shown in this diagram:

The pipeline Blob_SQL_PL should be kicked-off automatically, in response to each file drop event and will transfer data related to that specific file. Therefore, in our case we should see three executions, matching the count of CSV files.

The pipeline Blob_SQL_PL we have built so far, transfers data for all CSV files located in its source container. However, I want to make this trigger a bit smarter to initiate each execution with specific parameters, indicating which file has caused this execution, so the pipeline transfers data for the related file only.

So, let's parameterize pipeline Blob_SQL_PL and its source dataset, before we proceed further.

Parameterization of the pipeline and its dataset

We learned how to add pipeline parameters and tie them to trigger settings in the previous posts. This time we will parameterize the pipeline and its source dataset and link these parameters together, please see below required steps:

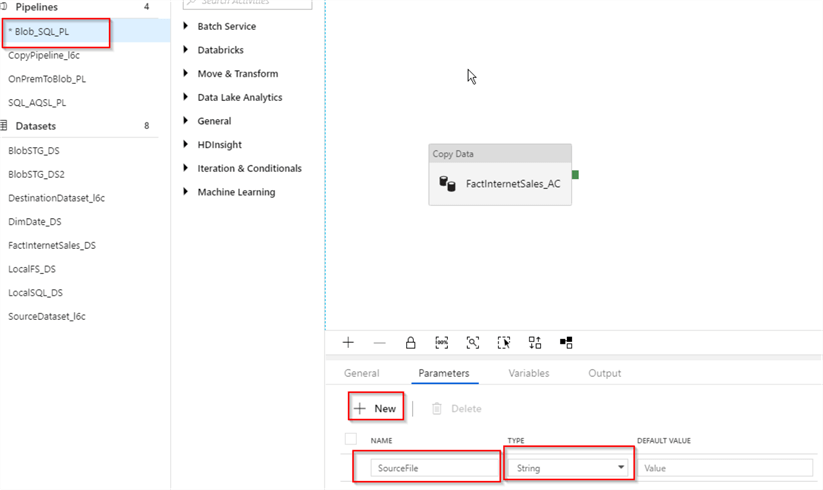

- Select pipeline Blob_SQL_PL, switch to 'Parameters' tab and add string type parameter SourceFile:

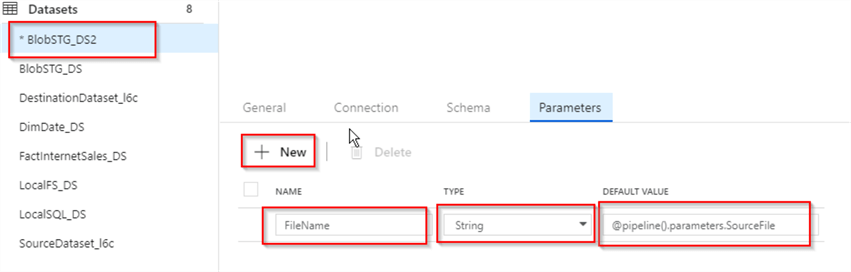

- We can refer to the pipeline parameters using expression like @pipeline().parameters.XXXX, where the last part of the expression represents the parameter name. Accordingly, we can use an expression @pipeline().parameters.SourceFile to refer to SourceFile parameter we created in the previous step. So, let's select dataset BlobSTG_DS2, switch to the 'Parameters' tab, add string type parameter FileName and assign the expression @pipeline().parameters.SourceFile as the default value for this parameter:

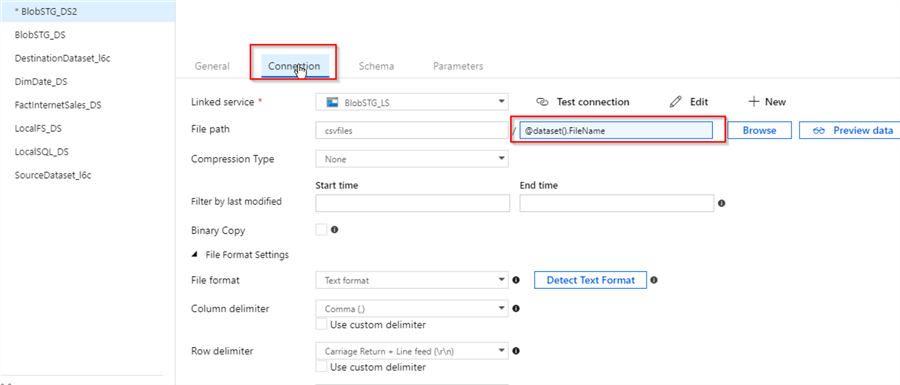

- To ensure that each execution instance of the pipeline transfers only the file specific to that execution, we need to pass the source file name from the trigger via the dataset parameter we just created. To refer to dataset parameter FileName, we can use an expression @dataset().FileName, so let's switch to the Connection tab and add that expression into the file name related part of the 'File path' text box:

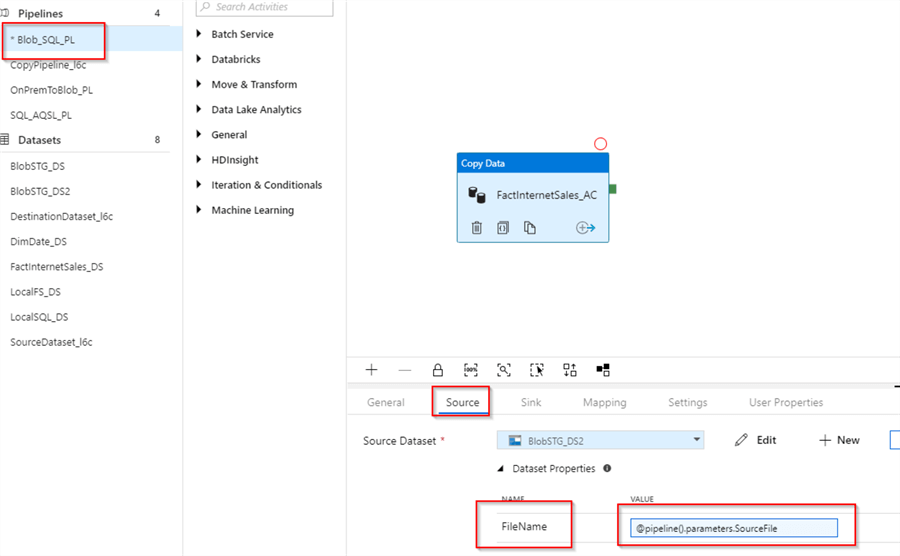

- Next, to link the dataset parameter FileName to the pipeline parameter SourceFile, let's select activity FactInternetSales_AC, switch to 'Source' tab and assign expression @pipeline().parameters.SourceFile to the FileName parameter under 'Dataset Parameters' section:

- Finally, let's publish the changes, using the 'Publish All' button.

Creating event-based trigger in Azure Data Factory

Now that we have prepared pipeline 'Blob_SQL_PL' to receive settings from the trigger, let's proceed with that event trigger's configuration, as follows:

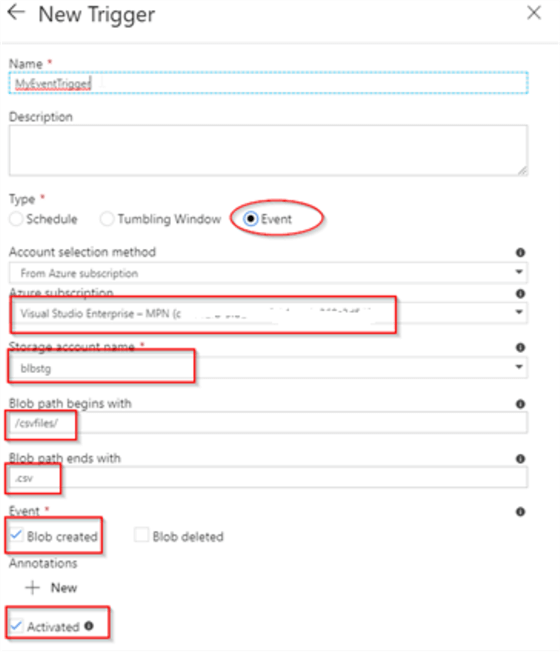

- Select pipeline 'Blob_SQL_PL', click 'New/Edit' command under Trigger menu and choose 'New trigger' from drop-down list

- Assign the trigger name ('MyEventTrigger' in this example) and select event trigger type

- Next few steps are related to blob storage where we are expecting the file drops. Select your Azure subscription from drop-down list, as well as storage account name ('blbstg' in this example)

- Specify container name, enclosed by '/' character ('/csvfiles/' in this example) in the 'Blob path begins with' field

- The 'Blob path ends with' field can be used to enter file names, but in our case we want to include all CSV files, located in csvfiles container, so we'll specify only file extension '.csv'

- Check 'Blob created' checkbox, to indicate that trigger needs to be fired in response to file arrival events into the above-mentioned container. Please note that, we could also set file removal as a triggering condition, however that would probably not be suitable for file copy pipelines. Here's how would the 'New Trigger' window looks after all these settings:

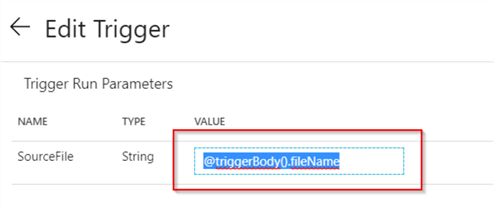

- An event based trigger can pass two different system variables to the related pipeline- @triggerBody().fileName and @triggerBody().folderPath, which represents the name and folder of the file. In this case, we're only interested in passing @triggerBody().fileName, so let's assign this expression to the pipeline's SourceFile parameter:

- Finally, let's publish the changes using 'Publish All' button.

Here's is the JSON code for the new event-based trigger:

{ "name": "MyEventTrigger",

"properties": {

"runtimeState": "Started",

"pipelines": [

{

"pipelineReference": {

"referenceName": "Blob_SQL_PL",

"type": "PipelineReference"

},

"parameters": {

"SourceFile": "@triggerBody().fileName"

}

}

],

"type": "BlobEventsTrigger",

"typeProperties": {

"blobPathBeginsWith": "/csvfiles/",

"scope": "/subscriptions/cb6712fb-5fb2-4be3-b071-1e369c3d5499/resourceGroups/myRG/providers/Microsoft.Storage/storageAccounts/blbstg",

"events": [

"Microsoft.Storage.BlobCreated"

]

}

}

}

You can also find JSON files for pipeline 'Blob_SQL_PL'.

Validating trigger execution in Azure Data Factory

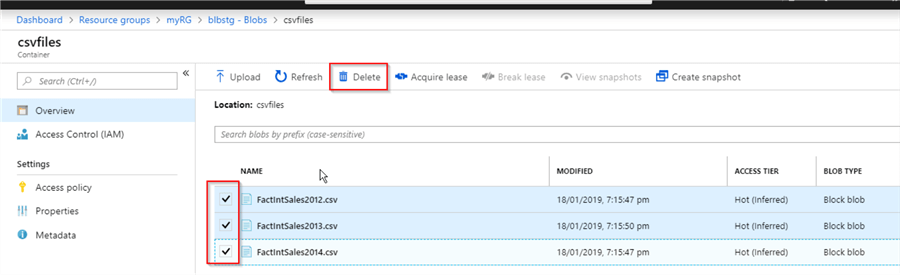

To validate our newly created event trigger, let's open the Blob Storage page and container csvfiles inside it, select all three files and remove them, using the 'Delete' button:

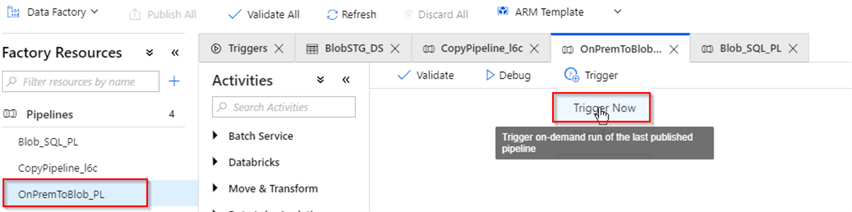

Now, let's trigger OnPremToBlob_PL pipeline manually, to deposit the CSV files into the blob storage again:

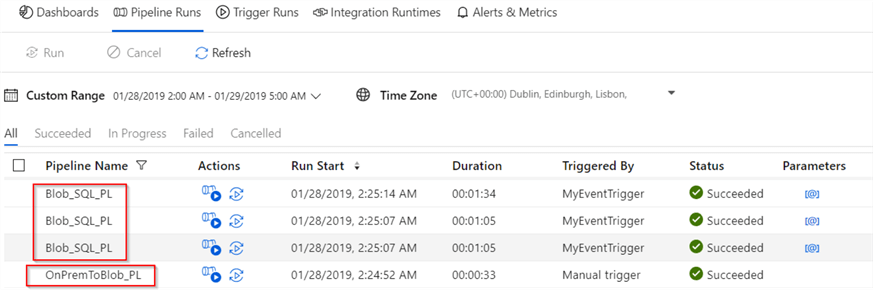

Once execution of the OnPremToBlob_PL pipeline has finished, we can open ADF monitoring page and examine the execution results, here is the screenshot:

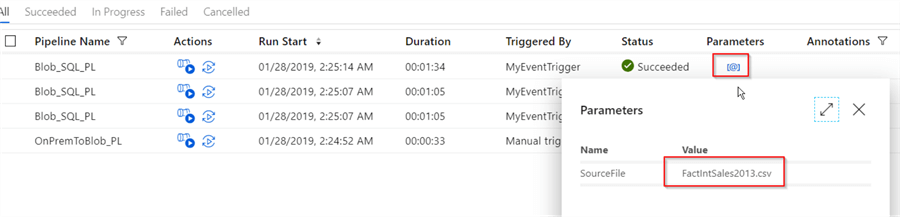

We can also examine file name parameter passed to each execution, using the 'Parameters' button:

Conclusion

This post concludes discussions on different ways to automate ADF pipeline executions. Event based triggers are a great feature, allowing you to set pipeline execution based on the dependency of file deposit or removal events, rather than having time-based scheduling. There are some other ways to build dependencies between ADF pipelines, which we will be exploring in future posts.

Next Steps

- Read: Stairway to Azure Data Factory: Automating pipeline executions, Part 1 of 3

- Read: Stairway to Azure Data Factory: Automating pipeline executions, Part 2 of 3

- Read: Copy data from an on-premises SQL Server database to Azure Blob storage

- Download the files for this tip.

About the author

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2019-07-01