By: K. Brian Kelley | Updated: 2019-07-15 | Comments | Related: > Monitoring

Problem

We had an issue with our database where the files grew larger unexpectedly. Unfortunately, we didn't have proactive monitoring in place to know exactly what caused the issue. The database is in full recovery mode and we have the transaction log backups, but it isn't worth the time to examine the transaction log when we can't narrow down a timeframe for the change. Is there anything we can do to narrow down when the change happened?

Solution

If you didn't have proactive logging, there are a few places which might have captured your growth. Let's look at them in turn.

Investigating the Default SQL Server Trace Files

The first place you should always check, if you haven't disabled it on the SQL Server, is the Default Trace. You're specifically interested in these files because the default traces captures more events than what is reported in the Schema Changes History report. The log files are found in the Log directory for your SQL Server install. Look for the .trc files and copy them to another location. While SQL Server is running, you can't open them using Profiler, and you'll need to open them to investigate. However, the operating system does allow you to copy the files to a different location. From there, you can open the trace files using SQL Profiler. You'll be looking for something like this:

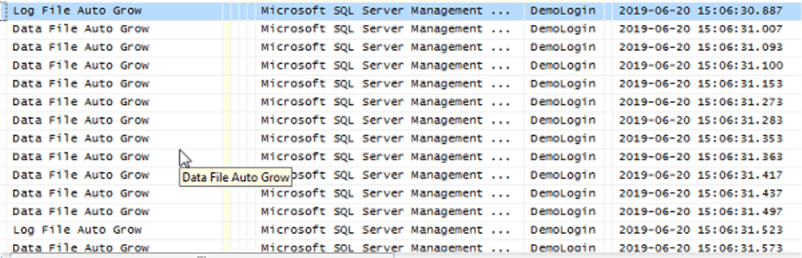

Note the Event Classes showing up here:

- Log File Auto Grow

- Data File Auto Grow

These events are what we'd expect to see with any database growth, but especially unexpected database growth. Here we see that DemoLogin was the login causing the event to occur and something was running in SQL Server Management Studio (SSMS). We also see the timestamp: a crucial piece of information. Now we know when. It is important to verify you have the right database. While not shown in the screenshot above, the event will also contain the Database ID and the Database Name. You can use that information to verify you have the correct database for these events.

The main issue with default trace, though, is it has fairly small trace files and they roll over. Therefore, you may run into the case on a busy SQL Server where you can't find these events. That also can be a clue to help you narrow down when. If you don't see the events in the default trace files, that means the events happened before the first entry you have in those files. That certainly helps reduce the timeframe, but there's another option available.

Looking at SQL Server Transaction Log Backup Files

Since you have backup files, especially transaction log backup files, you can use them to get a clearer picture of when the database file change happened. Even if the database files are larger but the space used is the same, the transaction log backups should give you a good idea of when the event happened. Why would you have an increase in database file size but the used space remain the same? One possibility is online reindexing in the database, especially with the clustered index. After all, to rebuild the clustered index, SQL Server effectively needs to rebuild the table in parallel. During the reindex, SQL Server will use that space, but once the reindex is complete, it'll drop back down.

You can set up a test scenario like this:

-- Create the Database and set it to full recovery mode CREATE DATABASE Test; GO ALTER DATABASE Test SET RECOVERY FULL; -- Create a full backup to start the recovery chain BACKUP DATABASE Test TO DISK = 'C:\Backups\Test_Full.bak'; -- Create the initial transaction log backup BACKUP LOG Test TO DISK = 'C:\Backups\Test_Log_01.trn';

Note that the code immediate establishes the chain for the transaction log backups by taking a full backup. It then take a transaction log backup so you have a comparison point. Now to perform a “regular” operation and take another transaction log backup:

-- Let's do some data creation CREATE TABLE Foo (FooID int); GO INSERT INTO Foo SELECT ROW_NUMBER() OVER (ORDER BY Object_id) FROM sys.objects; -- Now let's create our second transaction log backup BACKUP LOG Test TO DISK = 'C:\Backups\Test_Log_02.trn';

The way the code generates data is by using a windowing function to generate a row number for every row in sys.objects. These get inserted into the Foo table. There's not a lot of data being inserted, so it'll serve as a good comparison point for when the larger data load happens.

We need that larger data load and we can use a similar technique to create more data easily. Then, another transaction log backup is taken:

USE Test; GO -- Bigger data load -- Create an anomaly CREATE TABLE Foo2 (FooID1 int, FooID2 int); GO INSERT INTO Foo2 (FooID1, FooID2) SELECT c1.RN, c2.RN FROM (SELECT ROW_NUMBER() OVER (ORDER BY Object_id, column_id) AS RN FROM sys.columns) AS c1 CROSS JOIN (SELECT ROW_NUMBER() OVER (ORDER BY Object_id, column_id) AS RN FROM sys.columns) AS c2 ORDER BY c1.RN, c2.RN DESC; -- Create a clustered index CREATE CLUSTERED INDEX IDX_Foo2 ON Foo2 (FooID1, FooID2); -- Now let's create our second transaction log backup BACKUP LOG Test TO DISK = 'C:\Backups\Test_Log_03.trn';

First, the code creates a table with two integer columns. Next, using a cross join of the row numbers for sys.columns against the row numbers for sys.columns again, data in inserted. Given that the table is a heap table, it doesn't have a physical sort, the code next institutes a clustered index. Note that the heap should have the second column in reverse order and the code straightens the physical sort with the clustered index.

This should generate a sizeable difference when the transaction log backup is taken.

Now, perform a normal operation again, just to help the transaction log backup size difference to stand out:

USE Test; GO -- Let's do some data creation CREATE TABLE Foo3 (FooID int); GO INSERT INTO Foo3 SELECT ROW_NUMBER() OVER (ORDER BY Object_id) FROM sys.objects; -- Now let's create our second transaction log backup BACKUP LOG Test TO DISK = 'C:\Backups\Test_Log_04.trn';

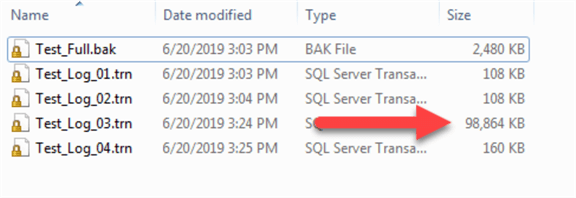

Now that the four transaction log backups exist, look at the size difference:

The transaction log backup at 3:24 PM is a great deal bigger than all the others. That tells us that something happened between 3:04 PM and 3:24 PM to cause a lot of entries in the log. Even if we don't have proactive monitoring, it may be possible to investigate what typically runs at that time, or what certain users might have been doing, simply by asking and looking at other systems. Having narrowed the timeframe down to 20 minutes, that's significantly better than multiple hours or multiple days.

But What If I Don't Have SQL Server Transaction Log Backups?

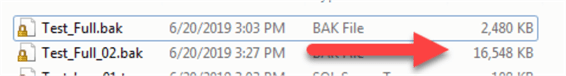

Depending on how often you take full and differential backups, you might be able to tell the same thing. Some operations, like reindexing, might not show up. But other types of data operations, especially ones that change the space used, should be observable if the activity was sizeable enough. For instance, if you take a full backup immediately after the last transaction log backup, you'll see that the full backup size is significantly greater than the first full backup:

Here you can see a difference between 3:03 PM and 3:27 PM. The full backup is 8x larger than the first one. Therefore, something happened in these 24 minutes. Granted, this was a test system and an example, but the technique works on larger databases with less frequent backups. Remember, you're trying to narrow down the window, so any clues help, even if they only let you narrow things by a day or a few hours.

Of course, one big difference between a system with transaction log backup files and one without is that you can interrogate the transaction log with each file restore to determine what was going on. If you only have full backups, you can check the difference in the data, but you won't get any other forensics.

Concluding Thoughts

Neither the default trace nor looking at backup files sizes are the preferred means of determining what happened on the database. The right way to go is to have proper auditing and monitoring in place beforehand. However, we don't always get to do that. Therefore, we have to be creative in trying to research what and when something happened. In this case, the default trace and/or the backup files may help us narrow down when something happened, which will help in investigating what happened.

Next Steps

- If you do need to investigate the transaction log, here's how you can do so.

- More than likely, though, you'll want to look at the transaction log backup directly.

- Learn more about how to use Default Trace.

About the author

K. Brian Kelley is a SQL Server author and columnist focusing primarily on SQL Server security.

K. Brian Kelley is a SQL Server author and columnist focusing primarily on SQL Server security.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2019-07-15