By: Fikrat Azizov | Updated: 2019-08-28 | Comments (1) | Related: > Azure Data Factory

Problem

In these series of posts, I am going to explore Azure Data Factory (ADF), compare its features against SQL Server Integration Services (SSIS) and show how to use it towards real-life data integration problems. In Control flow activities , I have provided an overview of control flow activities and explored few simple activity types. In this post, we will be exploring If Condition activity.

Solution

Azure Data Factory If Condition Activity

If Condition activity is similar to SSIS's Conditional Split control, described here. It allows directing of a pipeline's execution one way or another, based on some internal or external condition.

Unlike simple activities we have considered so far, the If Condition activity is a compound activity, it contains a logical evaluation condition and two activity groups, a group matching to a true evaluation result and another group matching to a false evaluation result.

If Condition activity's condition is based on logical expression, which can include properties of pipeline, trigger as well as some system variables and functions.

Creating Azure Data Factory If Condition Activity

In one of the earlier posts (see Automating pipeline executions, Part 3), we have created pipeline Blob_SQL_PL, which would kick-off in response to file arrival events into blob storage container. This pipeline had a single activity, designed to transfer data from CSV files into FactInternetSales table in Azure SQL db.

We will customize this pipeline, make it more intelligent - it will check input file's name and based on that, transfer files into either FactInternetSales or DimCurrency table, by initiating different activities.

To prepare the destination for the second activity, I have created table DimCurrency inside DstDb, using the below script:

CREATE TABLE [dbo].[DimCurrency]( [CurrencyKey] [int] IDENTITY(1,1) NOT NULL, [CurrencyAlternateKey] [nchar](3) NOT NULL, [CurrencyName] [nvarchar](50) NOT NULL, CONSTRAINT [PK_DimCurrency_CurrencyKey] PRIMARY KEY CLUSTERED ([CurrencyKey] ASC) GO

Let's follow the below steps to add an If Condition activity:

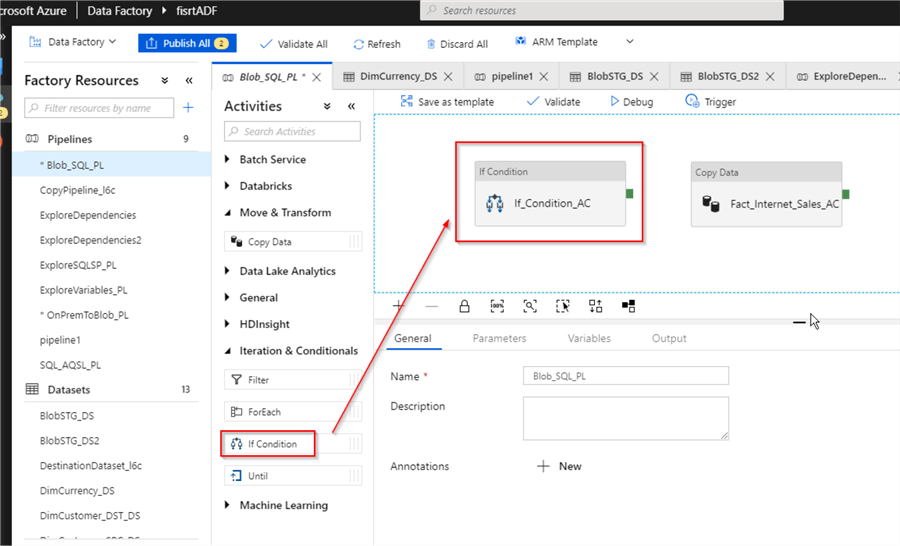

Select pipeline Blob_SQL_PL, expand 'Iterations and Conditionals' group on Activities panel, drag-drop an If Condition activity into the central panel and assign the name (I've named it If_Condition_AC):

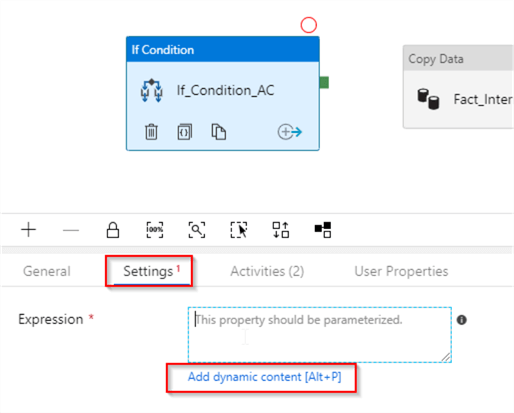

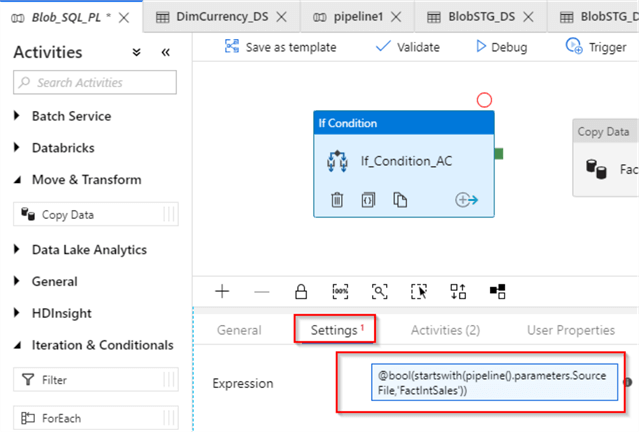

Switch to the Settings tab, place the cursor in the Expression text box and click the 'Add dynamic content' link under that text box, to start building an evaluation expression:

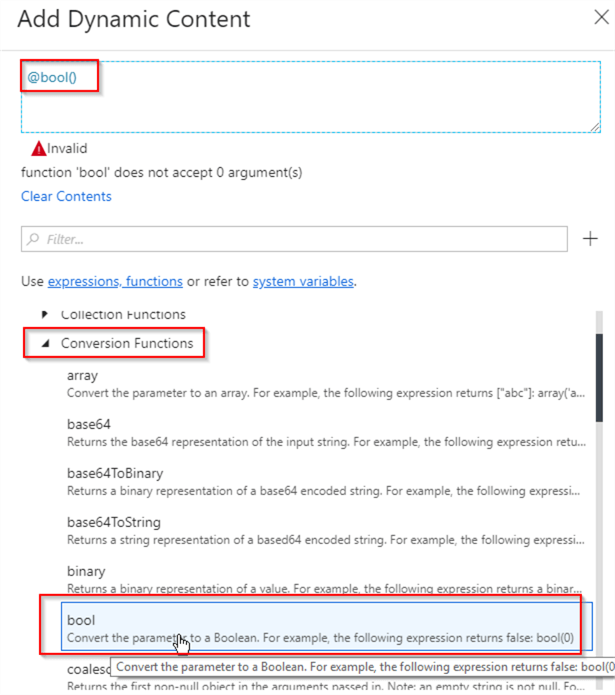

Expand Functions/Conversion Functions group and select the bool function:

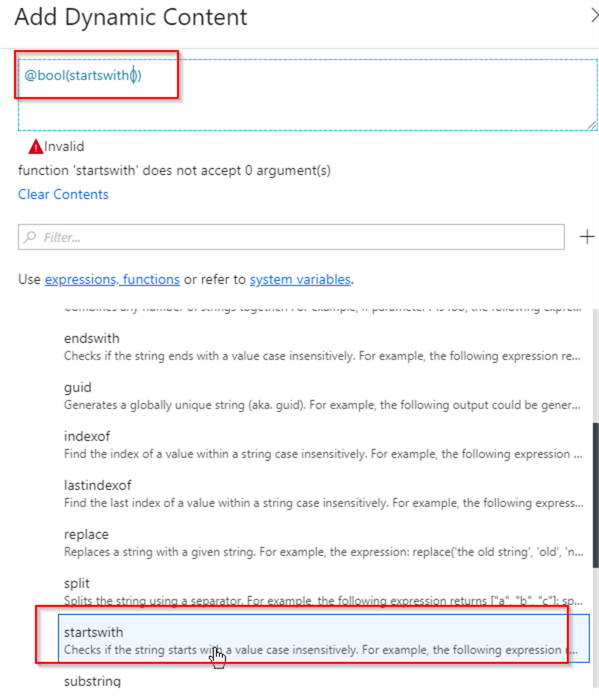

Place the cursor inside the bool function brackets, expand Functions/String Functions group and select the startswith function:

Place the cursor inside the startswith function brackets and select SourceFile pipeline parameter we created earlier, followed by a comma and 'FactIntSales' string and then confirm to close the Add Dynamic Content window. Here's the final expression- @bool(startswith(pipeline().parameters.SourceFile,'FactIntSales')) , which evaluates whether or not the input file's name starts with 'FactIntSales' string. Here's a screenshot for the activity with the evaluation condition:

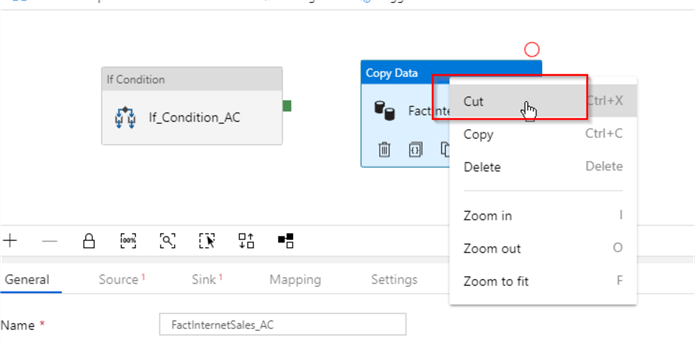

Next, let's copy FactInternetSales_AC activity into the buffer, using right click and Cut command:

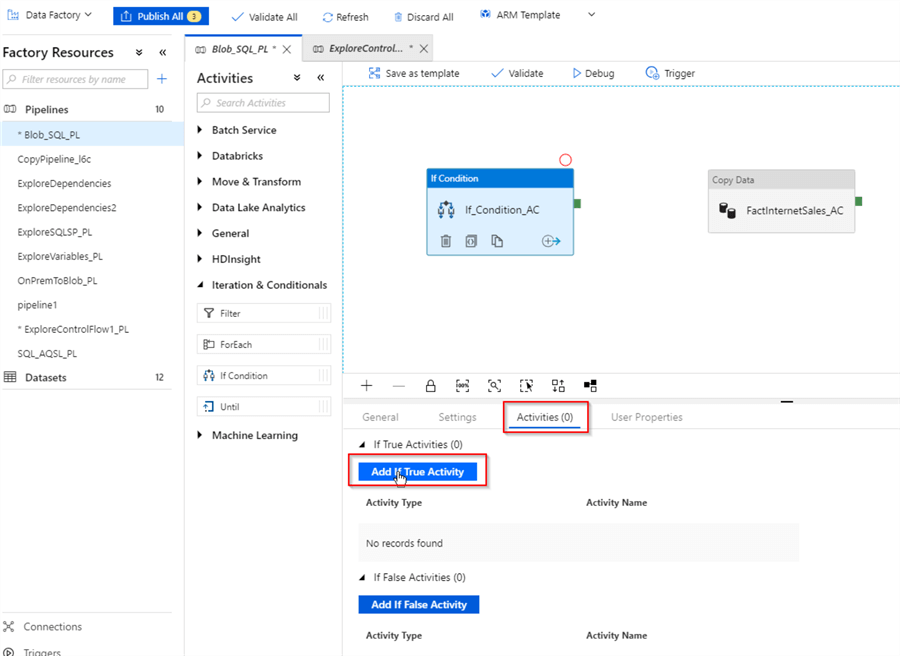

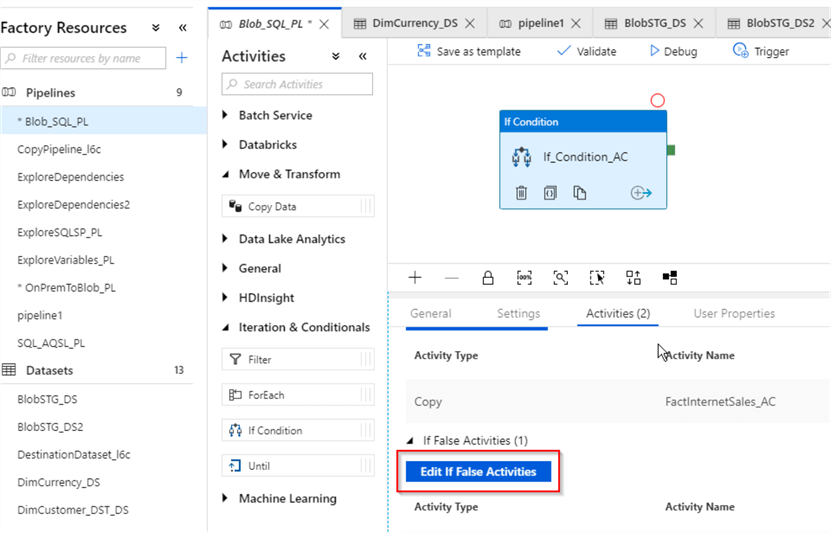

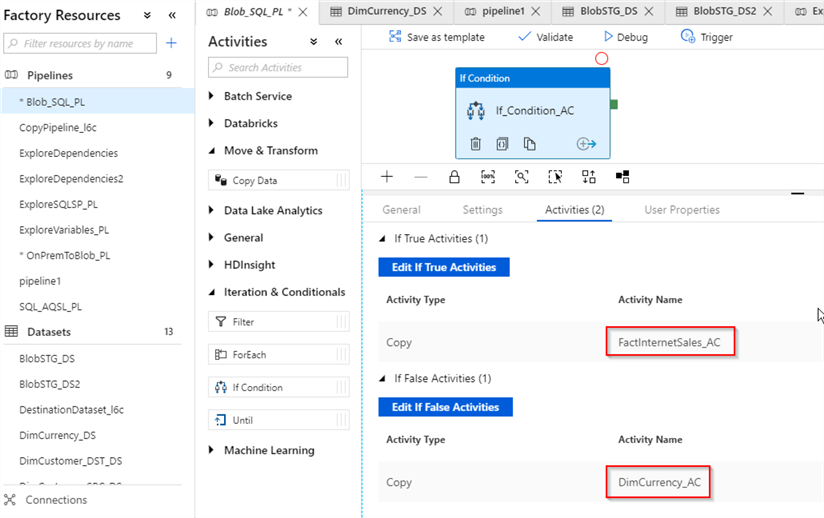

Now, we need to add activities to True and False evaluation groups. Select If_Condition_AC activity, switch to the Activities tab and click Add If True Activity button:

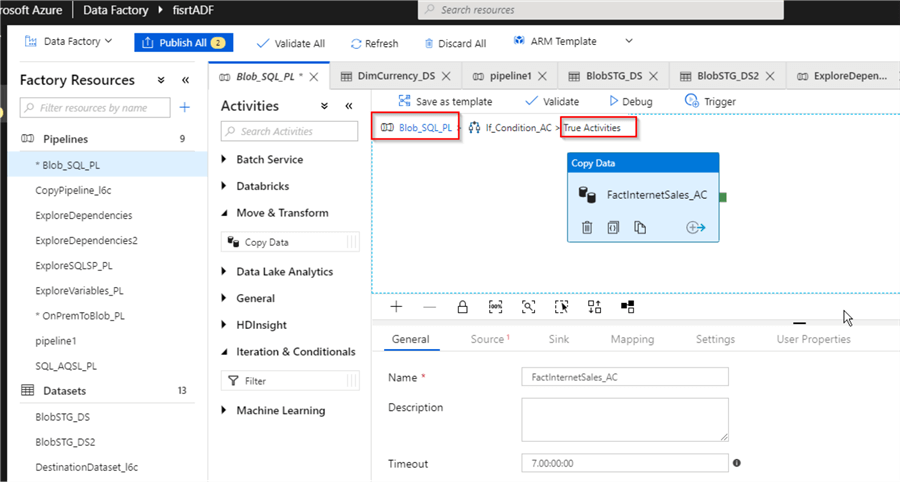

Right click in the design surface and select the Paste command, to paste the activity we copied earlier into the buffer and assign a name (I have named it FactInternetSales_AC):

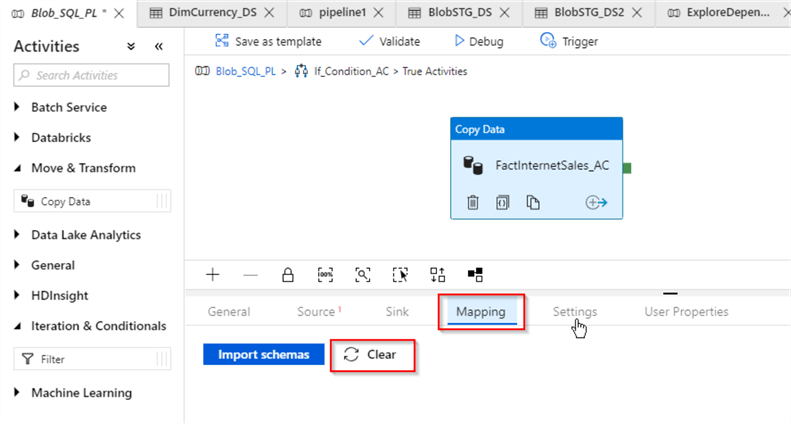

The activity FactInternetSales_AC originally has been created with the explicit field mapping (see Transfer On-Premises Files to Azure SQL Database for more details). However, because this pipeline is going to transfer files with different structures, we no longer need to have explicit mapping, so let's switch to the Mapping tab and click the Clear button, to remove mapping:

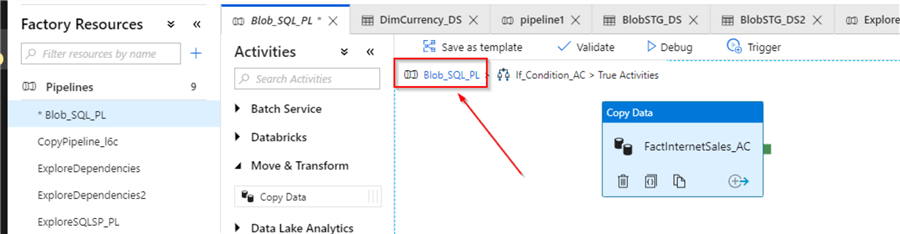

Please note the pipeline hierarchy link at the top of design surface, which allows you to navigate to the parent pipeline's design screen. We could add more activities into True Activities group, however that's not required for the purpose of this exercise, so let's click Blob_SQL_PL navigation link to return to the parent pipeline's design screen:

We'll follow similar steps to add activity into False group:

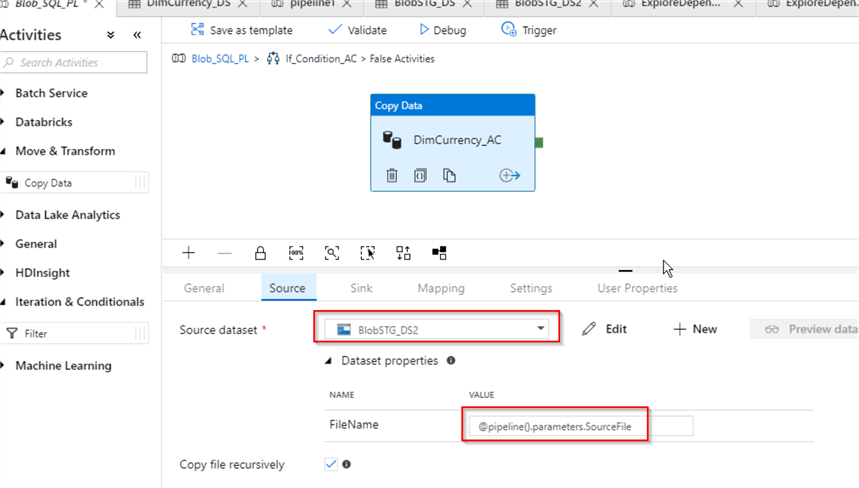

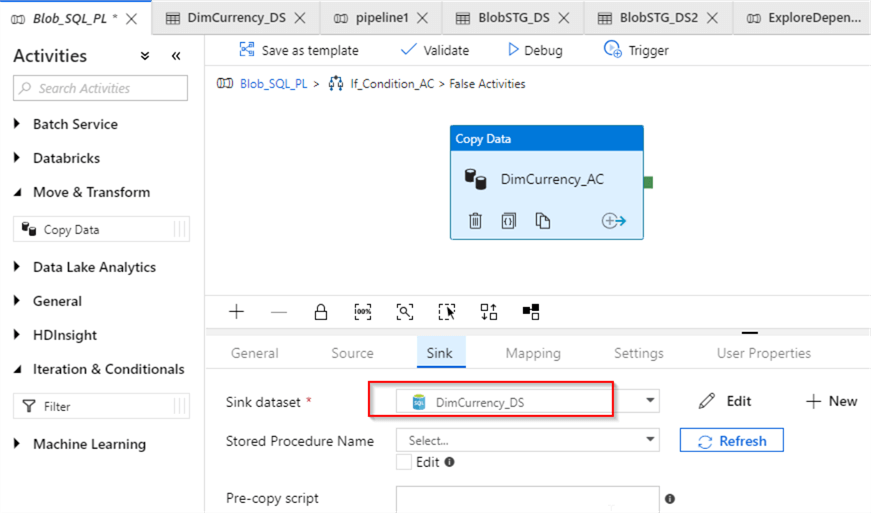

Let's add a Copy activity to copy files from the blob storage container into the DimCurrency table in Azure SQL DB (I've named it DimCurrency_AC). This activity's source dataset screen will be identical to the FactInternetSales_AC activity's source screen:

As for the sink dataset, we will need to create Azure SQL DB dataset, pointing to the DimCurrency table:

Now that we are done with the configuration of DimCurrency_AC activity, we can return to the parent screen, using the parent navigation link and publish changes. Here is how your final screen should look at this point:

For those, who want to see the JSON script for the pipeline we just created, I have attached the script here.

Validating Azure Data Factory Pipeline Execution

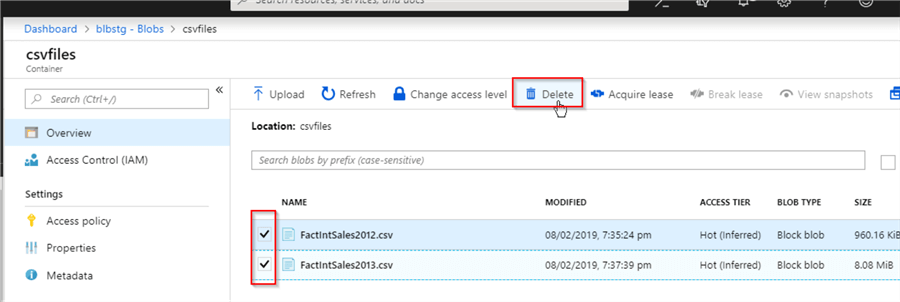

Because this pipeline has an event-based trigger associated with it, all we need to initiate it is to drop files into the source container. We can use Azure Portal to manage files in the blob storage, so let's open the Blob Storage screen and remove existing files from the csvfiles container:

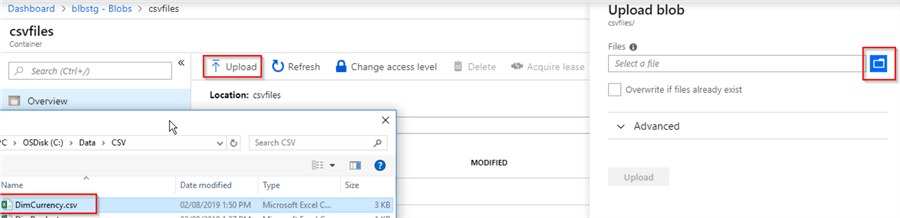

Now, use the Upload button to select DimCurrency.csv file from the local folder:

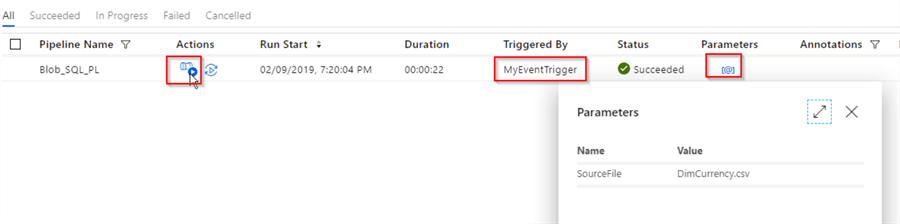

Let's wait few minutes for this pipeline to finish and switch to the Monitor screen, to examine the execution results. As expected, MyEventTrigger has started the pipeline in response to DimCurrency.csv file's upload event:

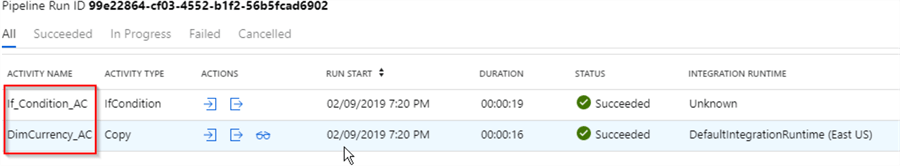

Upon further examination of execution details, we can see that DimCurrency_AC activity ran after conditional validation:

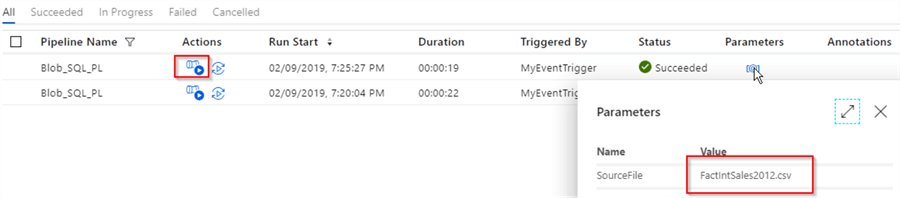

Now, let's upload FactIntSales2012.csv file and see the execution results:

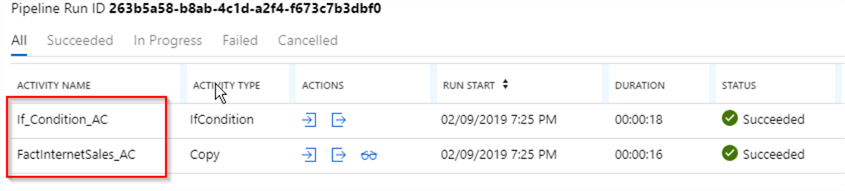

Activity Runs screen confirms that conditional activity worked as expected:

Conclusion

The If Condition activity is great feature, allowing adding conditional logic to your data flow. You can build complex evaluation expressions interactively, using the Add Dynamic Content window and you can nest multiple activities within an If Condition activity.

Although If Condition activity's functionality in ADF is similar to SSIS's Conditional Split control's functionality, there are few important differences:

- If Condition activity's evaluation conditions are based on object level (for example, dataset source file name, pipeline name, trigger time, etc.), whereas SSIS's Conditional Split's evaluation is based on row level conditions.

- SSIS's Conditional Split has default output, where rows not matching specified criteria can be directed, whereas ADF only has True and False condition outputs.

Next Steps

About the author

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2019-08-28