By: Aleksejs Kozmins | Updated: 2019-11-28 | Comments | Related: More > Integration Services Development

Problem

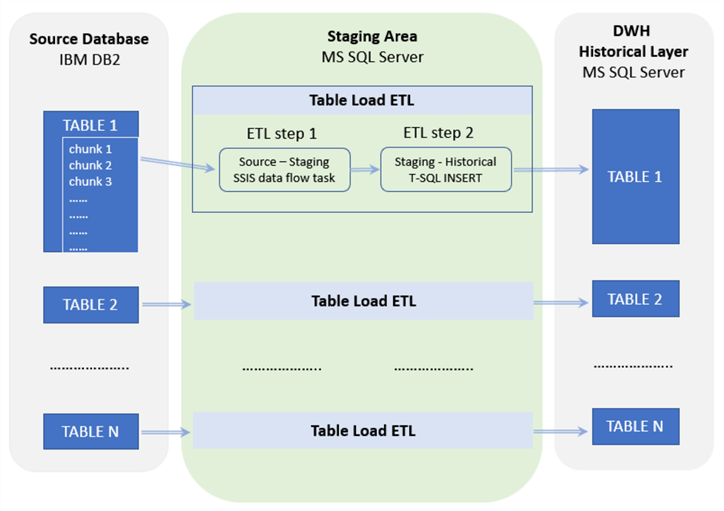

In a big SQL Server data warehouse project, I had to provide an SQL Server Integration Services (SSIS) based ETL process to store historical data. The ETL flow is shown below.

Fig.1. The scheme of history process.

The ETL was implemented as a set of Table Load ETL processes running in parallel. The set of records in each source table assigned to transfer was divided into chunks of the same size (e.g. 20000 records). The ETL process for each source table was performed in a cycle, selecting the data consecutively, chunk by chunk.

Each cycle iteration included two steps:

- Truncation of the staging table and transfer of the source data chunk to the staging table by SSIS data flow task (DF-task)

- Transfer of the staged chunk of data to the history table using a T-SQL INSERT statement.

It was required for the project to implement this two-step approach instead of a direct 'source to historical' data transfer.

The critical point is the following: the ETL run time was twice as long compared to a one step 'source to historical' ETL. How can the performance be improved with minimal overhead on the ETL process? The solution is given below.

Solution

Two ETL steps running in parallel

The solution was to run the processes in parallel to speed up the overall ETL process.

The possibility to perform two ETL steps in parallel is based on these steps:

- The source-staging step (staging in) is an SSIS DF-task

- The staging-historical step (staging out) is a T-SQL INSERT statement

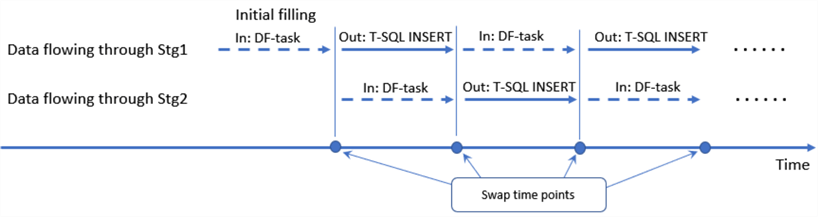

Let's assume that we have a staging table Stg1 filled with an initial portion of the source data. In this case we can start the data transmission from this table to the historical one starting at the same time as the DF-task to fill in the source data for another staging table Stg2. When the transmission of Stg1 data to the historical table is over then:

- Stg1 table is truncated

- The DF-task is switched to transfer the source data to Stg1 (the filling of Stg2 table is stopped)

- The data from Stg2 are transmitted to the historical table concurrently with the DF-task filling table Stg1

Next, when the data from Stg2 are transmitted to the historical table, the process is repeated again with Stg1 and Stg2 swapped out. Therefore, starting from the initial filling of table Stg1, both ETL steps can be performed in parallel where the staging tables are swapped out in a cycle.

The time diagram of this process is shown in Fig.2 below.

Fig.2. The time diagram of ETL process with Stg1 and Stg2 swapped out.

Switch DF-task from one destination to another during run time

To do this, it is an event-driven process using an external control SQL script and a specific feature of the SSIS data flow to make it possible.

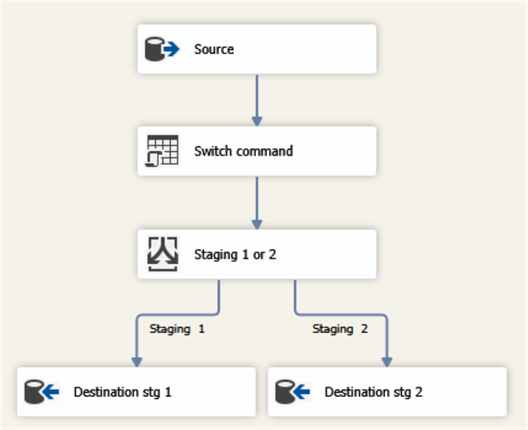

The structure of the DF-task is given in Fig.3 below.

Fig. 3. The data flow task with alternative destination components.

To make this work, a new field X is added in the SELECT statement of the source component as shown below:

SELECT 0 AS X, * FROM dbo.SalesOrderDetail ORDER BY SalesOrderDetailID

Initially, this field has a fixed value of 0 in all rows going from the source component to the OLEDB Command task 'Switch command' (see Fig.3). This component updates the field X value from the outside, i.e. from the configuration and control table. The command script is:

SELECT ? = StagingFlowNr FROM dbo.ETLswitchConfig WHERE ID = 1

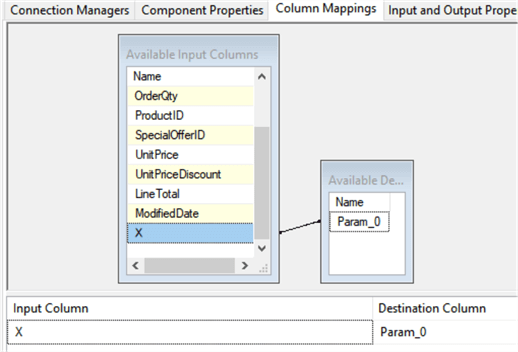

The captured parameter is mapped to the new field X (see Fig.4):

Fig.4. Mapping of the control value taken from the outside to the column X

Therefore, all rows coming out from 'Switch command' component have a new value in field X.

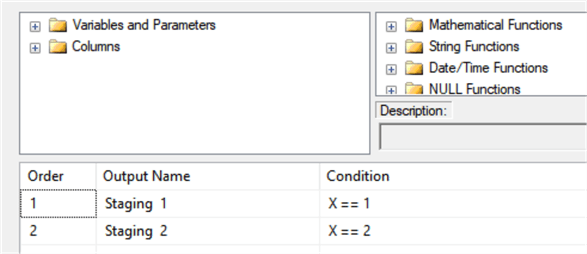

Next, the Conditional Split task 'Staging 1 or 2' (Fig.3) checks the value of the X field and generates an appropriate output (see Fig.5).

Fig.5. Alternative outputs for data flow task based on the control value in X column.

In short:

- The 'Switch command' task receives a signal from the outside (field X) to switch data flow to another staging table

- The task 'Staging 1 or 2' performs this switch

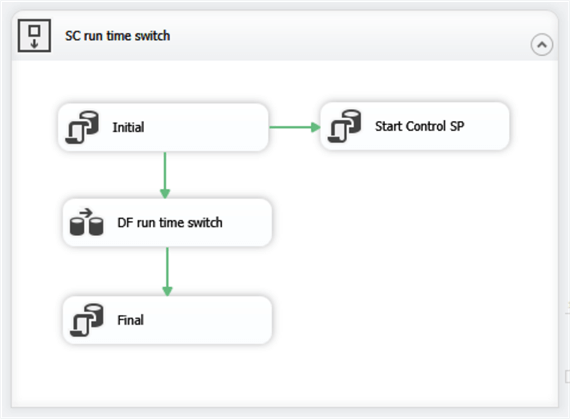

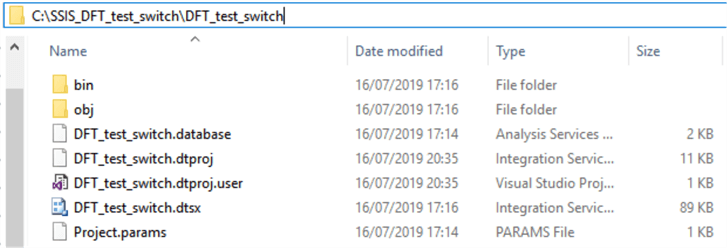

Overview of the SSIS Package

Fig.6. General structure of the SSIS package.

The 'Initial' component of the package does the following:

- Performs a preliminary cleaning of the intermediate tables and indicates the DF-task should start in the ETL configuration table.

- Launches the DF-task 'DF run time switch' and 'Start Control SP' component to start the control stored procedure dbo.DFswitch.

The 'Final' component indicates DF-task completion in the ETL configuration table.

The tables used in the ETL process are given below.

| Table name | Explanation |

|---|---|

| ETLswitchConfig |

Configuration and control data. Includes the

following fields:

- ETLstatus. Indicates the status of data flow task. The initial value is 'START', the final one (after data flow is over) is 'STOP'. - StagingFlowNr: the control value needed to switch data flow to alternative staging table. Values: 1 or 2, set within the stored procedure. - Other fields with the ETL result. Explained later. |

| SalesOrderDetalHist | Historical data to be filled in by the ETL process |

| SalesOrderDetalStg1 | Staging table 1 |

| SalesOrderDetalStg2 | Staging table 2 |

| ETLswitchLog | Log table to register all the insertions of the staged data into historical table. |

The procedure dbo.DFswitch performs the following operations during the ETL run:

- Initial delay to let the first portion of the source data to get into staging table 1

- Sends a signal to switch data flow to alternative destination

- Insertion of the newly staged data into historical table

- Logging of the ETL results

The stored procedure is below.

CREATE PROCEDURE [dbo].[DFswitch] AS BEGIN WAITFOR DELAY '00:00:05'; -- delay for initial data data loading in staging table 1 -- main cycle WHILE 1 = 1 BEGIN -- is the DF switched to staging table 1 ? IF (SELECT StagingFlowNr FROM dbo.ETLswitchConfig WHERE ID = 1) = 1 BEGIN -- control signal to data flow: switch to staging table 2 UPDATE dbo.ETLswitchConfig SET StagingFlowNr =2 WHERE ID = 1; -- check if the data transfer to staging table 2 has started WHILE NOT EXISTS (SELECT TOP 1 1 FROM dbo.SalesOrderDetailStg2) BEGIN WAITFOR DELAY '00:00:01' -- has the data flow task completed ? IF (SELECT ETLstatus FROM dbo.ETLswitchConfig WHERE ID=1) = 'STOP' GOTO lblEnd; END -- the data flow with staging table 2 is on -- the newly staged data from staging table 1 are inserted into historical INSERT INTO dbo.SalesOrderDetailHist (SalesOrderID, SalesOrderDetailID, CarrierTrackingNumber, OrderQty, ProductID, SpecialOfferID, UnitPrice, UnitPriceDiscount, LineTotal, ModifiedDate) SELECT SalesOrderID, SalesOrderDetailID, CarrierTrackingNumber, OrderQty, ProductID, SpecialOfferID, UnitPrice, UnitPriceDiscount, LineTotal, ModifiedDate FROM dbo.SalesOrderDetailStg1; -- logging of the insertion results INSERT INTO dbo.ETLswitchLog (StagingFlowNr, RecordsStaged) VALUES (1, (SELECT count(1) from dbo.SalesOrderDetailStg1)); -- truncation of staging table 1 TRUNCATE TABLE dbo.SalesOrderDetailStg1; END ELSE -- same process with staging tables swapped out IF (SELECT StagingFlowNr FROM dbo.ETLswitchConfig WHERE ID = 1) = 2 BEGIN UPDATE dbo.ETLswitchConfig SET StagingFlowNr =1 WHERE ID = 1; WHILE NOT EXISTS (SELECT TOP 1 1 FROM dbo.SalesOrderDetailStg1) BEGIN WAITFOR DELAY '00:00:01' -- has the data flow task completed ? IF (SELECT ETLstatus FROM dbo.ETLswitchConfig WHERE ID=1) = 'STOP' GOTO lblEnd; END INSERT INTO dbo.SalesOrderDetailHist (SalesOrderID, SalesOrderDetailID, CarrierTrackingNumber, OrderQty, ProductID, SpecialOfferID, UnitPrice, UnitPriceDiscount, LineTotal, ModifiedDate) SELECT SalesOrderID, SalesOrderDetailID, CarrierTrackingNumber, OrderQty, ProductID, SpecialOfferID, UnitPrice, UnitPriceDiscount, LineTotal, ModifiedDate FROM dbo.SalesOrderDetailStg2; INSERT INTO dbo.ETLswitchLog (StagingFlowNr, RecordsStaged) VALUES (2, (SELECT count(1) from dbo.SalesOrderDetailStg2)); TRUNCATE TABLE dbo.SalesOrderDetailStg2; END END lblEnd: -- final insertion of the satging data remained after data flow is over INSERT INTO dbo.SalesOrderDetailHist (SalesOrderID, SalesOrderDetailID, CarrierTrackingNumber, OrderQty, ProductID, SpecialOfferID, UnitPrice, UnitPriceDiscount, LineTotal, ModifiedDate) SELECT SalesOrderID, SalesOrderDetailID, CarrierTrackingNumber, OrderQty, ProductID, SpecialOfferID, UnitPrice, UnitPriceDiscount, LineTotal, ModifiedDate FROM dbo.SalesOrderDetailStg1 UNION ALL SELECT SalesOrderID, SalesOrderDetailID, CarrierTrackingNumber, OrderQty, ProductID, SpecialOfferID, UnitPrice, UnitPriceDiscount, LineTotal, ModifiedDate FROM dbo.SalesOrderDetailStg2; -- final logging INSERT INTO dbo.ETLswitchLog (StagingFlowNr, RecordsStaged) VALUES ( (SELECT IIF(StagingFlowNr=1,2,1) FROM dbo.ETLswitchConfig WHERE ID =1), (SELECT count(1) from dbo.SalesOrderDetailStg2 )+ (SELECT count(1) from dbo.SalesOrderDetailStg1) ); UPDATE dbo.ETLswitchConfig SET RecordsTransferred = (SELECT count(1) from dbo.SalesOrderDetailHist) WHERE ID =1 ; END

Comments on the stored procedure

When a control signal to switch data flow to a new destination is sent, it needs to be ensured that the data coming to this destination has started. A check is performed by the nested WHILE cycle shown below:

WHILE NOT EXISTS (SELECT TOP 1 1 FROM dbo.SalesOrderDetailStg1) BEGIN WAITFOR DELAY '00:00:01' -- has the data flow task completed ? IF (SELECT ETLstatus FROM dbo.ETLswitchConfig WHERE ID=1) = 'STOP' GOTO lblEnd; END

Along with this check the data flow status is traced. The stored procedure goes to the end when the data flow gets stopped:

(SELECT ETLstatus FROM dbo.ETLswitchConfig WHERE ID=1) = 'STOP'

Only after these checks should the data insertion into the historical table be performed.

When the data flow is over, the final portion of the staged data should be transferred to the historical table (the INSERT statement after lblEnd).

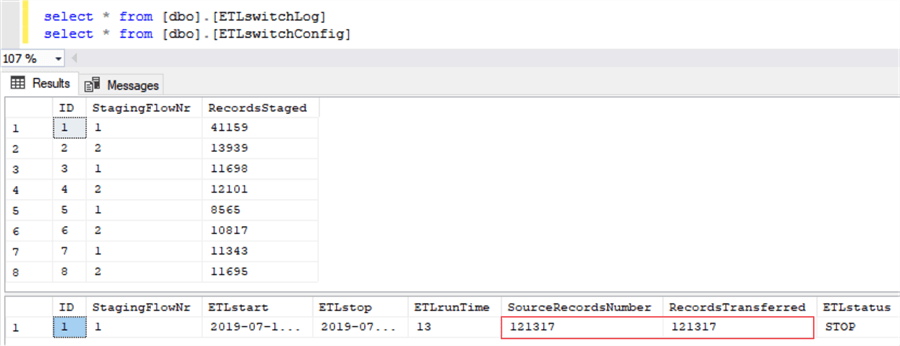

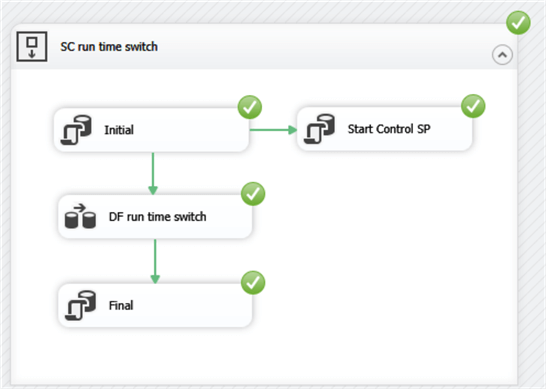

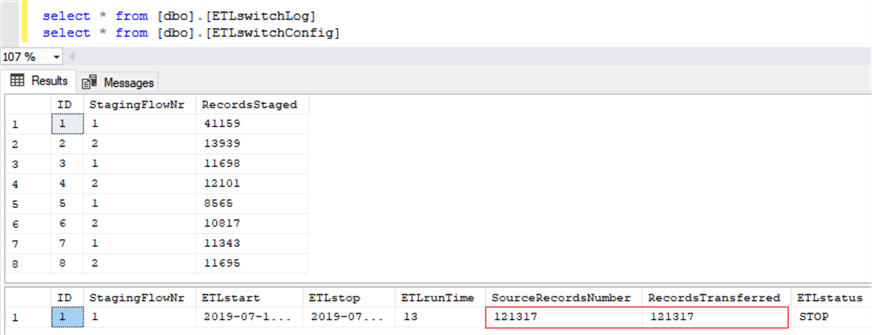

Sample ETL Run Results

Fig.7. The logged ETL results.

The first table is the ETL log. Each log line contains:

- The number of the staging table which the data flow was switched to

- The number of records transmitted from the staging table to the historical table

To make sure the ETL result is correct, the ETL configuration and control table both get the numbers of source and transmitted records (outlined in red). An additional check of the result can be performed with this code:

SELECT SUM(RecordsStaged) FROM dbo.ETLswitchLog

Conclusion

The example demonstrates how an SSIS data flow task can be dynamically controlled from the outside. The external control process (stored procedure and configuration table) can be updated independently from the data flow task.

Moreover, the logic of the ETL control process may be changed during ETL runtime, and more than one control parameter can be applied and more sophisticated logic for the data flow control could be implemented.

Setup Test Environment

To reproduce what is given in the article, download DFT_test_switch.zip and perform the following steps.

Create and populate the training database with the SQL scripts given in CreateTrainingDB.zip.

Run the SQL-scripts following the numbers in the file names.

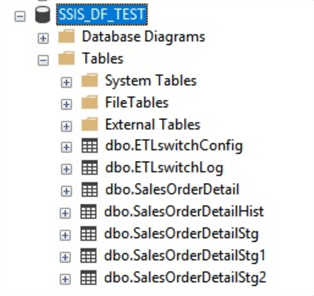

The created DB structure should contain the following tables (see Fig.8) and the stored procedure dbo.DFswitch.

Fig.8. The structure of dbo.SSIS_DF_TEST.

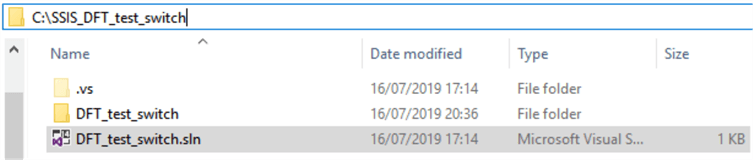

Create the folder C:\ SSIS_DFT_test_switch and unzip SSIS_DFT_test_switch.zip to this folder (see Fig.9).

Fig.10. The unzipped ETL components.

Open Visual Studio, find and open the solution file C:\SSIS_DF_test_switch\SSIS_DF_test_switch.sln.

Expand the solution view, find the package DF_test_switch.dtsx and execute it. The results should look as shown below (Fig.11).

Fig 11. The executed package and the final look of the data in the log and configuration/control table.

Next Steps

- Check out my prior tip: How to Stop a SSIS Package Execution from Code

About the author

Aleksejs Kozmins is a Data Warehousing and Business Intelligence professional, focused on efficient SQL Server Integration Services processes.

Aleksejs Kozmins is a Data Warehousing and Business Intelligence professional, focused on efficient SQL Server Integration Services processes.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2019-11-28