By: Alejandro Cobar | Updated: 2020-03-17 | Comments (15) | Related: > TSQL

Problem

Sometimes in large SQL Server OLTP systems, tables can grow very big over time. When that time comes, queries against such tables might begin to suffer performance issues because those tables were never designed to host that volume of data. Even with the correct indexes, the performance might not be as good as expected and you might be forced to give some extra thought purging some old data.

Solution

In this tip I’m going to present a few T-SQL scripts that can help you copy information to a historical table (in case historical information is required in the future, but not in the principal table), and set into motion a loop that can delete records in batches (specified by you) using a determined start date and end date.

During the process, you can use another T-SQL script (provided in this solution) to constantly monitor the progress of such deletion. Perhaps you need to know how many time it takes to delete a day of information or simply how much time it takes to delete X amount of records, and the overall progress.

Code Explained

TThe process will be handled through a stored procedure, so it can fit any case you might have.

- I will use tempdb as my chosen database to work with through the entire demonstration.

- I have created a test table called "big_table" and have populated it with 500,000 rows distributed across 5 days.

HHere’s how you are going to call the stored procedure:

EXECUTE [dbo].[Batch_Delete] @startDate = '2015-01-01' ,@endDate = '2015-01-06' ,@dbName = 'tempdb' ,@schemaName = 'dbo' ,@tableName = 'big_table' ,@dateFieldName = 'created_date' ,@saveToHistoryTable = 1 ,@batch = 1000

The names of the parameters are pretty much self-explanatory, but here’s their purpose:

- @startDate: The start date from when to start working with the records.

- @endDate: A non-inclusive date to limit when the records will be taken into account. In my example it means that I’m just going to be working with records from >= ‘2015-01-01’ and date < ‘2015-01-06’.

- @dbName: The name of the database that hosts the table that you want to

work with. Take into account that in this same database the historic and metrics

tables are created.

- IfIf you’d like to have these 2 tables in separate databases, a few tweaks would have to be made to the stored procedure and an additional parameter would have to be passed to specify the name of the other database.

- @schemaName: The name of the schema of the table.

- @tableName: The name of the table to be processed.

- @d@dateFieldName: The name of the column that contains the timestamp fields to work with. Remember that this logic is written towards a date-based approach; if you want a different one you’d have to adjust the stored procedure to your particular case.

- @saveToHistoryTable: If 1, then an identical empty table is created based on @tableName, and the name "_historic" is added to it to distinguish it. If 0, then no data movement will be performed during the execution of the script (use it very carefully).

ThThe stored procedure contains a set of initial validations to make sure that you have entered the correct information to proceed.

Here are all the validations considered:

- If @startDate is equal or greater than @endDate then notify the user.

- If the @dbName isn’t specified or it is left blank then notify the user.

- If the @schemaName isn’t specified or it is left blank then notify the user.

- If the @tableName isn’t specified or it is left blank then notify the user.

- If the @dateFieldName isn’t specified or it is left blank then notify the user.

- If the table targeted by @dbName.@schemaName.@tableName doesn’t exist, then notify the user.

- If the table exists but the field @dateFieldName doesn’t, then notify the user.

- IfIf the parameter @saveToHistoryTable is set to 0 (being 1 the default), a warning will be shown to the user.

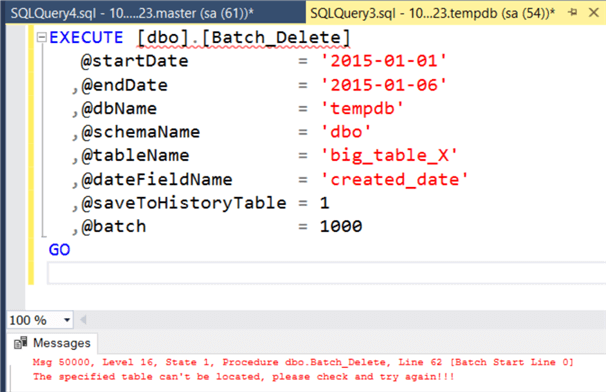

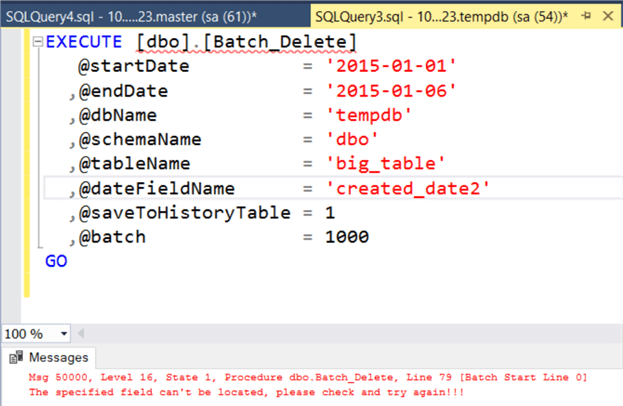

Below are some screenshots as an example.

In this example, the table "big_table_X" does not exists, so we get an error.

In this example, the column "create_date2" does not exists, so we get an error.

TST-SQL Code for Stored Procedure Creation

At a high-level, this is what the stored procedure does:

- If the stored procedure already exists, delete the stored procedure and create it from scratch.

- All the set validations described above are performed initially and the execution stops if any of them fails.

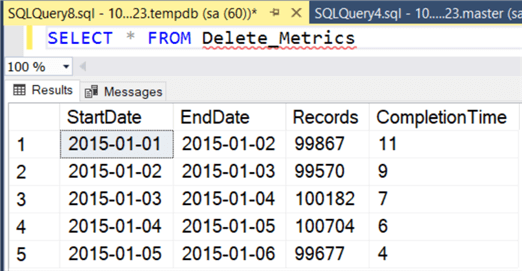

- If all the validations pass, then create the "Delete_Metrics" table to keep

track of how many times (in seconds) it takes each day to be wiped out from

the main table.

- A non-clustered index is placed on the StartDate and EndDate, in case you end up with a lot of records and want to speed up your lookups. If you think there are not that many, then you can consider removing this.

- If the @saveToHistoryTable is set to 1, then all the records that will be affected are copied to a newly created table that has the exact same structure as the original table (without constraints and indexes), and will have the name "_historic" added to it.

- After all the records have been saved to the history table, a while loop that goes from @startDate to @endDate-1 will kick in and the processing will take place inside a transaction. Why? Because if anything goes wrong at any given time then it has a chance to rollback.

- Per day, the deletes will take place by batches specified by the parameter @batch, so use it and test it carefully.

- After all the records for a given day have been deleted, then the respective record is inserted in the Delete_Metrics table, to show how many seconds it took to delete X amount of records.

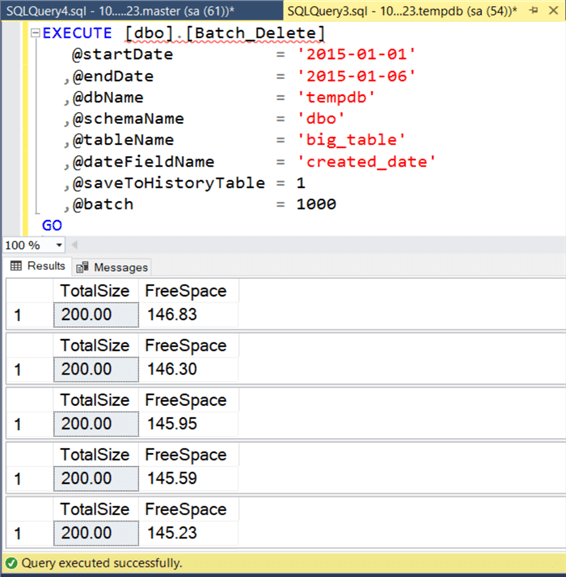

- As a bonus, I included a piece of code that constant displays (after each batch of records is deleted) the current size of the transaction log (in MB) of the database where the activity is taking place, along with the free space in the file (in MB).

With all this information, not only can you create a baseline of delete operations for daily records, but you also can know the impact this will have on the transaction log file.

Here is the entire script.

IF EXISTS (

SELECT type_desc, type

FROM sys.procedures WITH(NOLOCK)

WHERE NAME = 'Batch_Delete'

AND type = 'P'

)

DROP PROCEDURE Batch_Delete

GO

CREATE PROCEDURE Batch_Delete

@startDate DATE,

@endDate DATE,

@dbName VARCHAR(64) = NULL,

@schemaName VARCHAR(64) = NULL,

@tableName VARCHAR(64) = NULL,

@dateFieldName VARCHAR(64) = NULL,

@saveToHistoryTable BIT = 1,

@batch INT = 1000

AS

SET NOCOUNT ON

DECLARE @tableExists BIT = 0

DECLARE @fieldExists BIT = 0

DECLARE @sqlCommand NVARCHAR(2048)

IF(@startDate > @endDate OR @startDate = @endDate)

BEGIN

RAISERROR('startDate can''t be higher or equal than endDate!!!', 16, -1)

RETURN

END

IF(@dbName IS NULL OR TRIM(@dbname) = '')

BEGIN

RAISERROR('You must specify the source database where the table is hosted!!!', 16, -1)

RETURN

END

IF(@schemaName IS NULL OR TRIM(@schemaName) = '')

BEGIN

RAISERROR('You must specify the schema of the table!!!', 16, -1)

RETURN

END

IF(@tableName IS NULL OR TRIM(@tableName) = '')

BEGIN

RAISERROR('You must specify the name of the table!!!', 16, -1)

RETURN

END

IF(@dateFieldName IS NULL OR TRIM(@dateFieldName) = '')

BEGIN

RAISERROR('You must specify the name of the column that contains the dates for the lookups!!!', 16, -1)

RETURN

END

DECLARE @e AS TABLE([objectID] BIGINT)

SET @sqlCommand = '

DECLARE @objectID BIGINT = 0

SELECT @objectID = OBJECT_ID ('+CHAR(39)+'['+@dbname+'].['+@schemaName+'].['+@tableName+']'+CHAR(39)+',''U'')

SELECT ISNULL(@objectID,-1)

'

INSERT INTO @e

EXEC sp_executesql @sqlCommand

SET @tableExists = (SELECT CASE [objectID] WHEN -1 THEN 0 ELSE 1 END FROM @e)

DELETE FROM @e

IF(@tableExists <> 1)

BEGIN

RAISERROR('The specified table can''t be located, please check and try again!!!', 16, -1)

RETURN

END

DECLARE @f AS TABLE([size] SMALLINT)

SET @sqlCommand = '

DECLARE @colSize SMALLINT = 0

SELECT @colSize = COL_LENGTH ('+CHAR(39)+'['+@dbname+'].['+@schemaName+'].['+@tableName+']'+CHAR(39)+','+CHAR(39)+@dateFieldName+CHAR(39)+')

SELECT ISNULL(@colSize,-1)

'

INSERT INTO @f

EXEC sp_executesql @sqlCommand

SET @fieldExists = (SELECT CASE [size] WHEN -1 THEN 0 ELSE 1 END FROM @f)

DELETE FROM @f

IF(@fieldExists = 0)

BEGIN

RAISERROR('The specified field can''t be located, please check and try again!!!', 16, -1)

RETURN

END

IF(@saveToHistoryTable = 0)

PRINT 'Be aware that you have invoked the execution of this SP with historical data transfer turned off!!!'

-- Per Day logic

DECLARE @currentDate DATE

DECLARE @startTime DATETIME

DECLARE @endTime DATETIME

DECLARE @rows INT

DECLARE @totalRows INT

DECLARE @deletedRows INT

SET @currentDate = @startDate

SET @sqlCommand = '

USE '+'['+@dbname+']

'

EXEC(@sqlCommand)

IF OBJECT_ID ('Delete_Metrics','U') IS NULL

BEGIN

CREATE TABLE Delete_Metrics(

StartDate DATE NOT NULL,

EndDate DATE NOT NULL,

Records INT NOT NULL,

CompletionTime INT NOT NULL

)

CREATE NONCLUSTERED INDEX IX_StartDate ON Delete_Metrics(StartDate)

CREATE NONCLUSTERED INDEX IX_EndDate ON Delete_Metrics(EndDate)

END

IF(@saveToHistoryTable = 1)

BEGIN

DECLARE @h AS TABLE([rows] INT)

SET @sqlCommand = '

SET NOCOUNT ON

IF OBJECT_ID ('+CHAR(39)+'['+@dbname+'].['+@schemaName+'].['+@tableName+'_historic]'+CHAR(39)+',''U'') IS NULL

BEGIN

SELECT TOP 0 * INTO ['+@dbname+'].['+@schemaName+'].['+@tableName+'_historic] FROM ['+@dbname+'].['+@schemaName+'].['+@tableName+']

END

INSERT INTO ['+@dbname+'].['+@schemaName+'].['+@tableName+'_historic]

SELECT * FROM ['+@dbname+'].['+@schemaName+'].['+@tableName+'] WHERE ['+@dateFieldName+'] >= '+CHAR(39)+CONVERT(VARCHAR(20),@startDate)+CHAR(39)+' AND ['+@dateFieldName+'] < '+CHAR(39)+CONVERT(VARCHAR(20),@endDate)+CHAR(39)+'

SELECT @@ROWCOUNT

'

INSERT INTO @h

EXEC sp_executesql @sqlCommand

SET @totalRows = (SELECT [rows] FROM @h)

DELETE FROM @h

IF(@totalRows > 0)

RAISERROR ('#Finished transferring records to historic table#', 0, 1) WITH NOWAIT

END

WHILE(@currentDate < @endDate)

BEGIN

BEGIN TRANSACTION

BEGIN TRY

DECLARE @t AS TABLE([rows] INT)

SET @sqlCommand = '

DECLARE @tempTotalRows INT = 0

SELECT @tempTotalRows = COUNT(*) FROM ['+@dbName+'].['+@schemaName+'].['+@tableName+'] WHERE ['+@dateFieldName+'] >= '+CHAR(39)+CONVERT(VARCHAR(20),@currentDate)+CHAR(39)+' AND ['+@dateFieldName+'] < DATEADD(DAY,1,'+CHAR(39)+CONVERT(VARCHAR(20),@currentDate)+CHAR(39)+')

SELECT @tempTotalRows

'

INSERT INTO @t

EXEC sp_executesql @sqlCommand

SET @totalRows = (SELECT [rows] FROM @t)

DELETE FROM @t

SET @deletedRows = 0

SET @startTime = GETDATE()

DECLARE @d AS TABLE([rows] INT)

WHILE @deletedRows < @totalRows

BEGIN

SET @sqlCommand = '

DELETE TOP ('+CONVERT(VARCHAR(16),@batch)+')

FROM ['+@dbName+'].['+@schemaName+'].['+@tableName+'] WHERE ['+@dateFieldName+'] >= '+CHAR(39)+CONVERT(VARCHAR(20),@currentDate)+CHAR(39)+' AND ['+@dateFieldName+'] < DATEADD(DAY,1,'+CHAR(39)+CONVERT(VARCHAR(20),@currentDate)+CHAR(39)+')

SELECT @@ROWCOUNT

'

INSERT INTO @d

EXEC sp_executesql @sqlCommand

SET @deletedRows += (SELECT [rows] FROM @d)

DELETE FROM @d

SELECT l.total_size AS TotalSize,f.free_space AS FreeSpace

FROM(

SELECT CONVERT(DECIMAL(10,2),(total_log_size_in_bytes - used_log_space_in_bytes)/1024.0/1024.0) AS [free_space]

FROM sys.dm_db_log_space_usage

)AS f,

(

SELECT CONVERT(DECIMAL(10,2),size*8.0/1024.0) AS [total_size]

FROM sys.database_files

WHERE type_desc = 'LOG'

)AS l

END

IF(@deletedRows > 0)

BEGIN

DECLARE @stringDate VARCHAR(10) = CONVERT(VARCHAR(10),@currentDate)

RAISERROR('Finished deleting records for date: %s',0,1,@stringDate) WITH NOWAIT

INSERT INTO Delete_Metrics VALUES(@currentDate, DATEADD(DAY,1,@currentDate),@deletedRows,DATEDIFF(SECOND,@startTime,GETDATE()))

END

SET @currentDate = DATEADD(DAY,1,@currentDate)

COMMIT TRANSACTION

END TRY

BEGIN CATCH

ROLLBACK TRANSACTION

SELECT ERROR_MESSAGE() AS ErrorMessage;

END CATCH

END

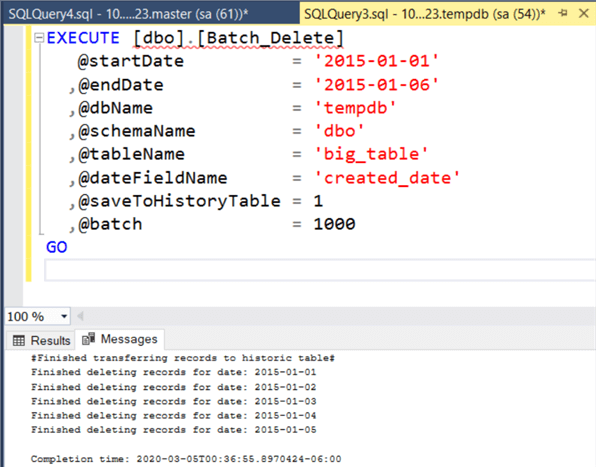

Running the Delete Stored Procedure

Here are some screenshots of what you can expect upon a successful execution:

Check Progress of Delete Processing

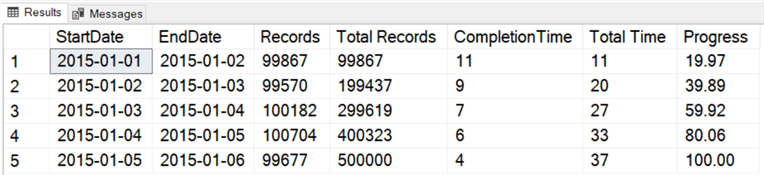

- This T-SQL code can be used to constantly check the progress of the rows deleted.

- You can see how much time each individual day took to get deleted and the total time the entire process has consumed so far. In the very last column, you can see a column named "Progress" which is the % of records deleted that are already copied to the historic table (make sure to put the correct table name for your case).

- Keep in mind that this query will work in instances using SQL Server 2012 and above, due to the usage of the SUM(X) OVER(Y) function.

Here is the query you can use to get information about the delete process:

SELECT StartDate, EndDate, Records, [Total Records] = SUM(Records) OVER (ORDER BY StartDate), CompletionTime, [Total Time] = SUM(CompletionTime) OVER (ORDER BY StartDate), CONVERT(DECIMAL(10,2),(SUM(Records) OVER (ORDER BY StartDate) / (SELECT CONVERT(DECIMAL(10,2),COUNT(*)) FROM big_table_historic) ) * 100) AS 'Progress' FROM Delete_Metrics

Here’s a screenshot of what the result set looks:

Next Steps

- YoYou should consider that if you delete a large amount of records, the respective indexes and statistics may need to be rebuilt.

- Depending on the volume of information, you might have to schedule an eventual shrink of the files to reclaim disk space.

- If you need to address a problem using this approach, make sure to run tests in development and test to get an idea of how long the process takes and the possible impact.li>

- FeFeel free to customize the stored procedure in any way that you like, as long as it is useful for your particular use case.

- Download the T-SQL code for this tip.

About the author

Alejandro Cobar is an MCP, SQL Server DBA and Developer with 10+ years of experience working in a variety of environments.

Alejandro Cobar is an MCP, SQL Server DBA and Developer with 10+ years of experience working in a variety of environments.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2020-03-17