By: Fikrat Azizov | Updated: 2019-11-28 | Comments (6) | Related: > Azure Data Factory

Problem

In a previous post (Lookup activity), we discussed Lookup activity to read the content of the database tables or files. ADF also has another type of activity: Get Metadata activity, which allows reading metadata of its sources. We are going to explore the capabilities of this activity, in this post.

Solution

The Get Metadata activity allows reading metadata information of its sources.

The list of attributes returned by this activity is dependent on its source type, some attributes are available only for file-based sources, others available for database tables and there are few attributes applicable for both types. Here is the full list of attributes, borrowed from Microsoft's site:

| Attribute name | Data source type | Description |

|---|---|---|

| itemName | File storages | Name of the file or folder. |

| itemType | File storages | Type of the file or folder. The output value is File Folder. |

| size | File storages | Size of the file in bytes. Applicable to file only. |

| created | File storages | Created date/time of the file or folder. |

| lastModified | File storages | Last modified date/time of the file or folder. |

| childItems | File storages | List of sub-folders and files inside the given folder. Applicable to the folder object only. The output value is a list of name and type of each child item. |

| contentMD5 | File storages | MD5 of the file. Applicable to file only. |

| structure | File and database systems | Data structure inside the file or relational database table. The output value is a list of column name and column type. |

| columnCount | File and database systems | The number of columns inside the file or relational table. |

| exists | File and database systems | Whether a file/folder/table exists or not. Note if "exists" is specified in the GetaMetadata field list, the activity will not fail even when the item (file/folder/table) does not exist; instead, it returns exists: false in the output. |

Please note that the childItems attribute from this list is applicable to folders only and is designed to provide list of files and folders nested within the source folder.

The Metadata activity can read from Microsoft's on-premises and cloud database systems, like Microsoft SQL Server, Azure SQL database, etc. As to the file systems, it can read from most of the on-premises and cloud storages on Azure, please see here a list of all sources.

The data obtained by Get Metadata activity can be used by subsequent iterative activities, to perform copy or transformation activities on a dynamic basis.

Creating Get Metadata activity

To demonstrate Get Metadata activity at work, I will create a data flow with the following logic:

- Read the list of the files available in the source folder, using Get Metadata activity and pass this data to ForEach activity

- Within the ForEach activity, read the properties of each file, using another Get Metadata activity and pass it to conditional activity, to determine if the file has been modified within the last 7 days

- Copy each recently changed file into the destination database.

Here are the steps to create this data flow:

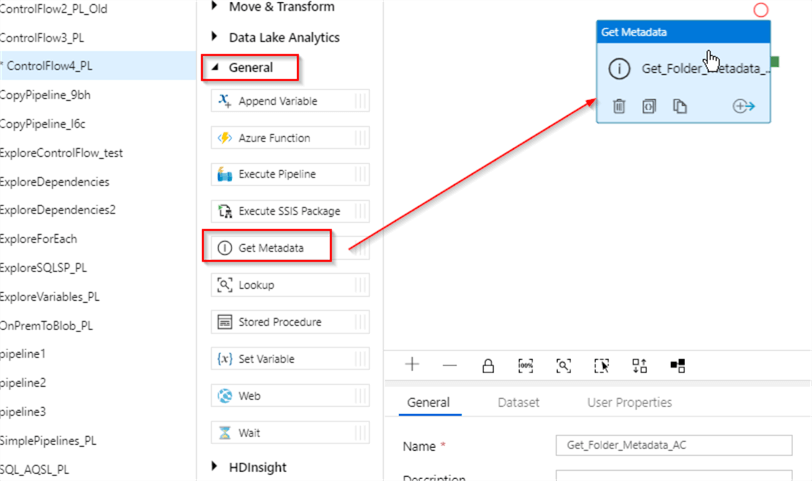

Create new pipeline and drag-drop a Get Metadata activity from the General group (I have named it as Get_Folder_Metadata_AC) to its design surface. This activity will read names of all files in its source container:

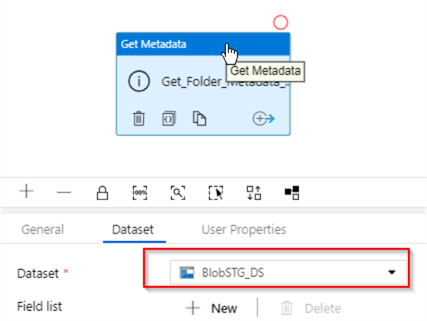

Switch to Dataset tab and select BlobSTG_DS dataset we created in one of the earlier posts (see Transfer On-Premises Files to Azure Blob Storage for more details). This dataset points to csvfiles blob container:

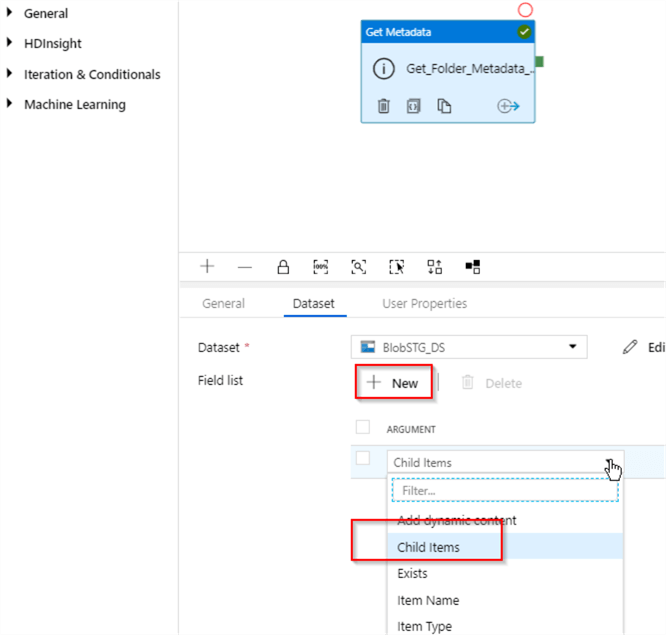

Next, click the '+New' button to add metadata field and select Child Items from the dropdown list-this field will produce names of the files in the csvfiles container:

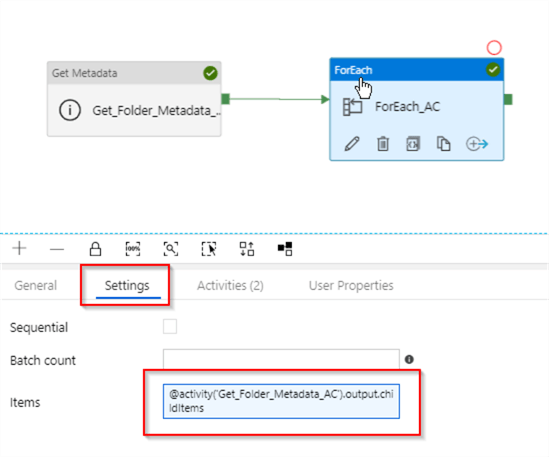

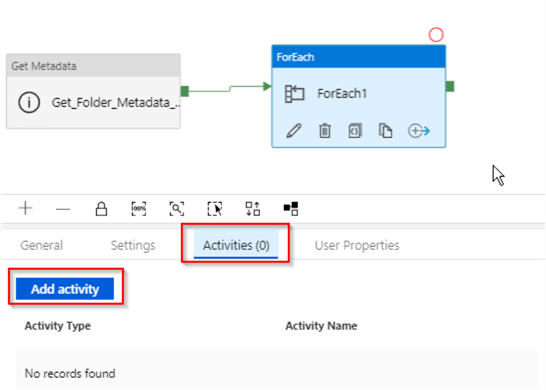

Next, let's add ForEach activity to our pipeline (I've named it as ForEach_AC), link it to the Success end of the Get_Folder_Metadata_ACactivity and add the following expression to its Items text box: @activity('Get_Folder_Metadata_AC').output.childItems. This expression will extract childItems values from the output of the Get_Folder_Metadata_AC activity:

Next, let us switch to Activities tab and click the Add activity button to start adding child tasks to the ForEach activity:

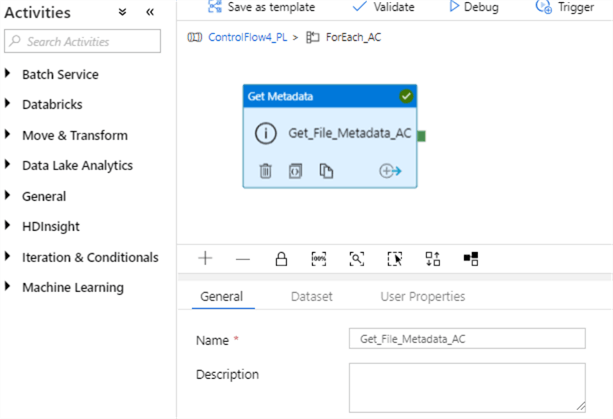

The first activity within the ForEach loop is going to be Get Metadata activity (I've named it as Get_File_Metadata_AC), which is going to read its input file's modified date/time attribute:

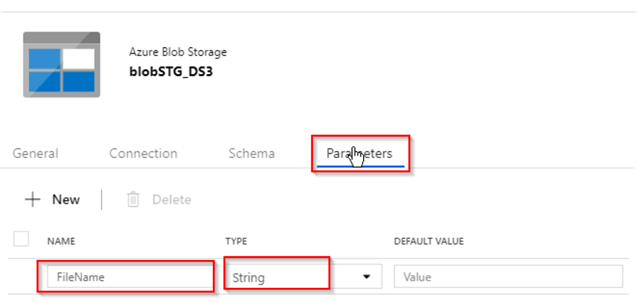

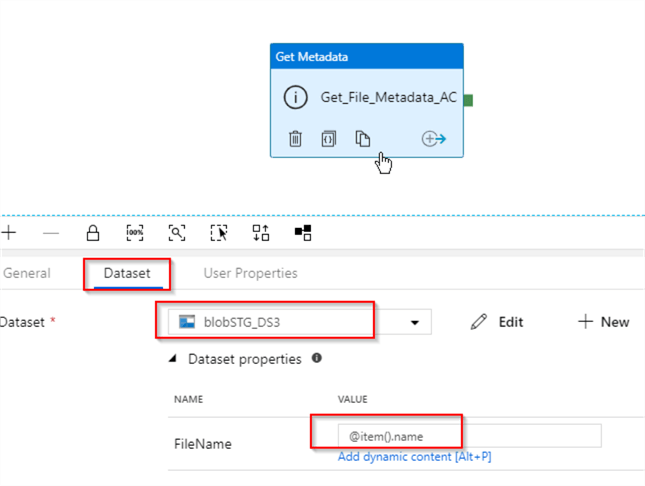

Let us switch to the Dataset tab to specify dataset details. Because this activity is going to receive its file name from the parent activity, its source needs to be a parameterized dataset. I have created a parameterized blob storage dataset (I have named it as blobSTG_DS3) with the FileName parameter (see Transfer On-Premises Files to Azure SQL Database for details on how to create parameterized dataset). Here is the screenshot with the details of parameter configuration for this dataset:

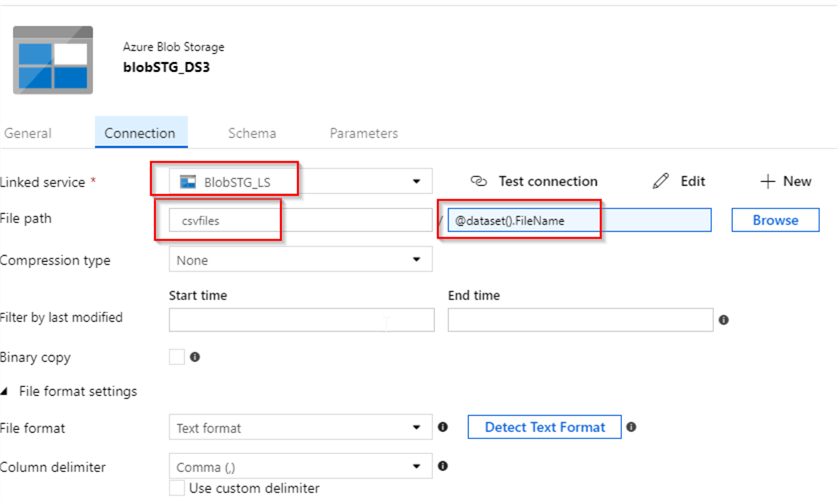

Next, we need to assign an expression @dataset.FileName to this dataset's filename property, so that it reads the file name from dataset's FileName parameter. Here is the screenshot of the dataset's Connection tab settings:

Next, let's return to Get_File_Metadata_AC activity, select dataset BlobSTG_DS3 dataset we just created and enter an expression @item().name into its FileName parameter text box. This expression is going to pass the next file name value from ForEach activity's item collection to the BlobSTG_DS3 dataset:

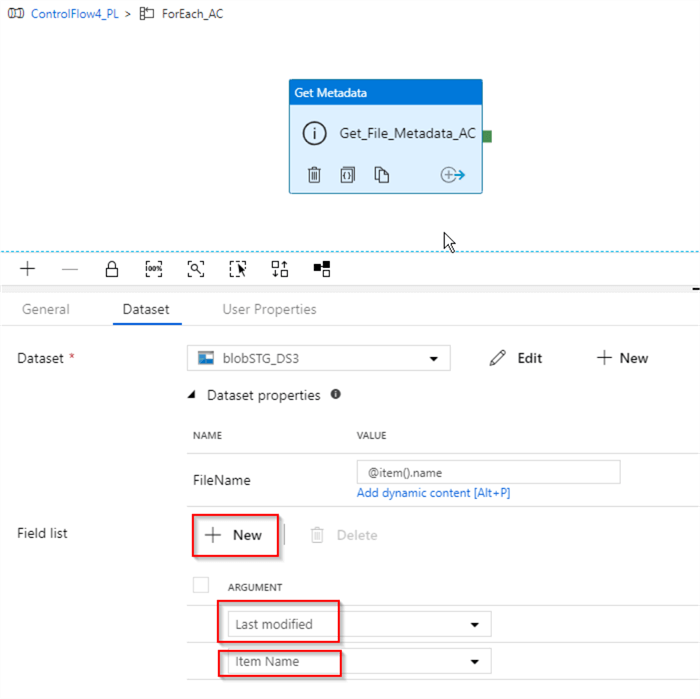

Next, let's add the fields Last modified and Item Name fields, using the New button- these fields will be required for the subsequent activities:

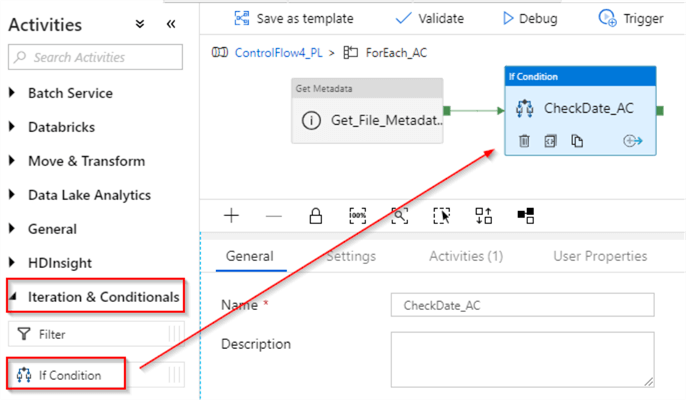

Next, let's add If Condition activity (I've named it as CheckDate_AC) and link it to the Success end of the Get_File_Metadata_AC activity:

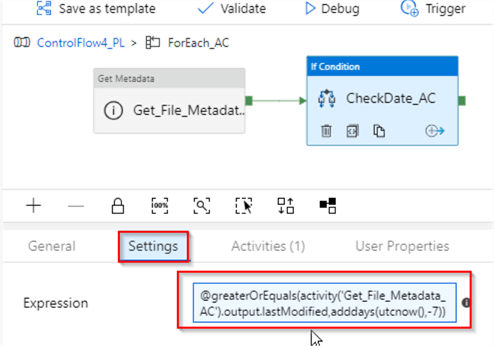

Let's switch to the Settings tab and enter the following expression: @greaterOrEquals(activity('Get_File_Metadata_AC').output.lastModified,adddays(utcnow(),-7)). This expression will check whether or not the Last modified field obtained from the previous activity falls within last 7 days period:

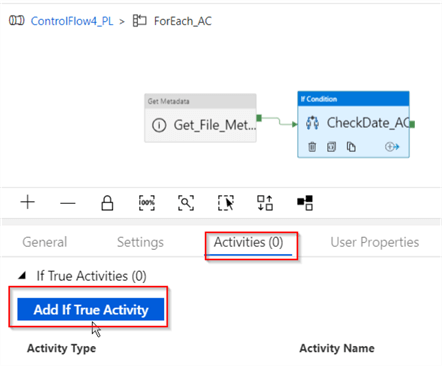

Next, switch to Activities tab and click Add If True Activity button, to start building tasks which will be executed when the condition evaluates to true:

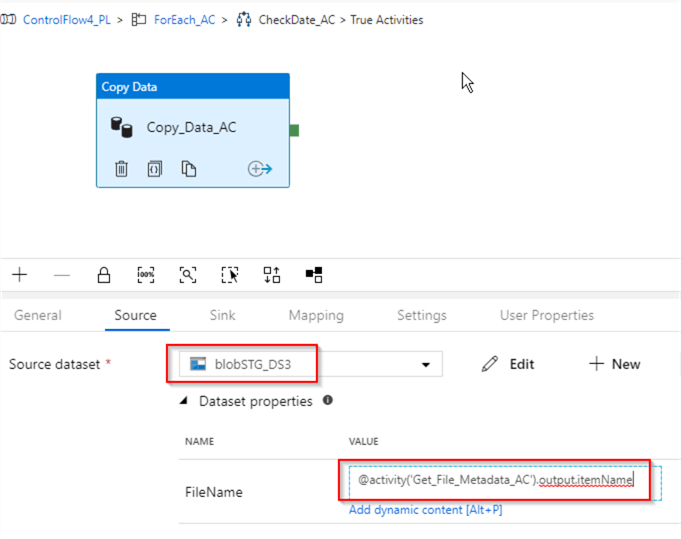

Within child activities window, add a Copy activity (I've named it as Copy_Data_AC), select BlobSTG_DS3 dataset as its source and assign an expression @activity('Get_File_Metadata_AC').output.itemName to its FileName parameter. This expression will ensure that next file name, extracted by Get_File_Metadata_AC activity is passed as the input file name for copy activity. Here's the screenshot:

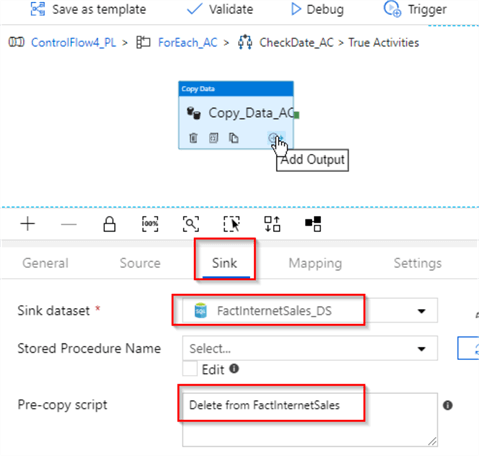

Next, switch to the Sink tab, select FactInternetSales_DS dataset we created earlier and enter following purge query for destination table - Delete from FactInternetSales. This will ensure that this activity doesn't fail with duplicate key errors, in case the rows we're transferring already exist in the destination table:

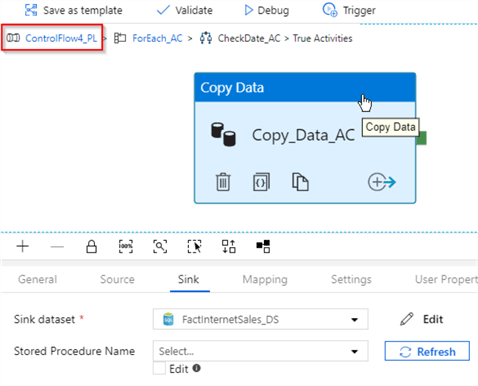

Finally, let's click the navigation link at the top of the screen and return to the parent pipeline's design screen:

Now that we have completed building data flow, we can test it. I will execute this pipeline twice to test the following scenarios:

- The first execution will be done with older files in the source container

- The second execution will be done with a newly modified file added to the source folder.

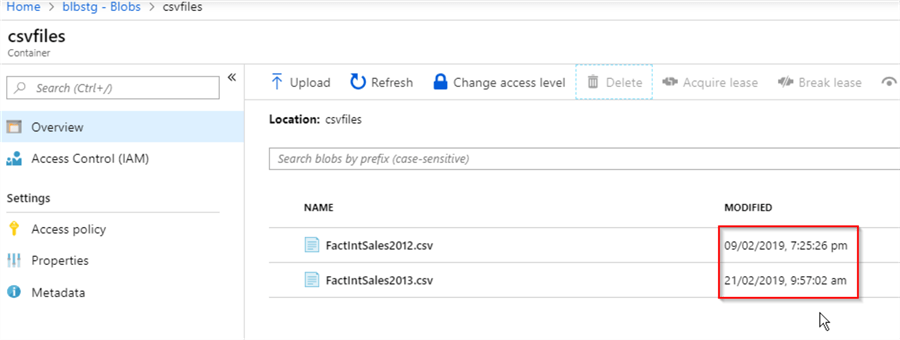

Let us open the blob storage page and ensure that all the files existing in its csvfiles container are dated more than 7 days from the execution date:

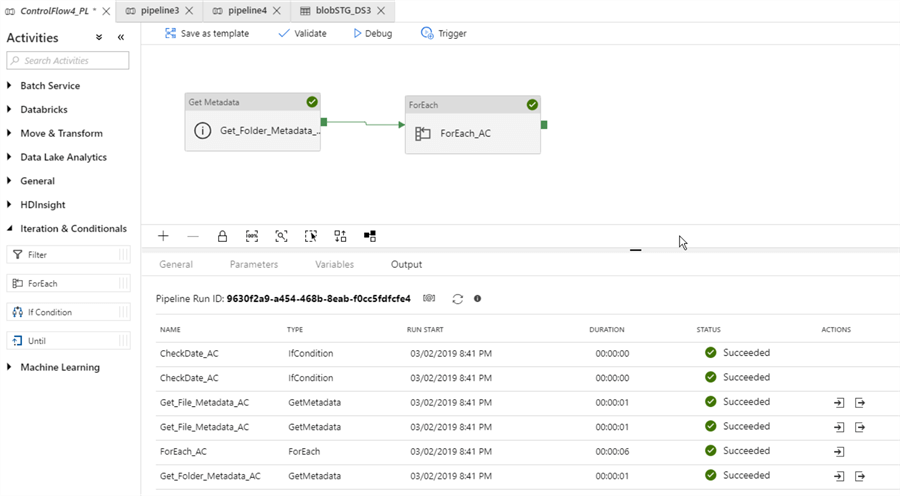

Let us start the pipeline in the debug mode and examine execution logs in the Output window:

As you can see from the logs, all the activities, except the copy activity has executed successfully. The copy activity did not run, because the files in the source container are older than 7 days.

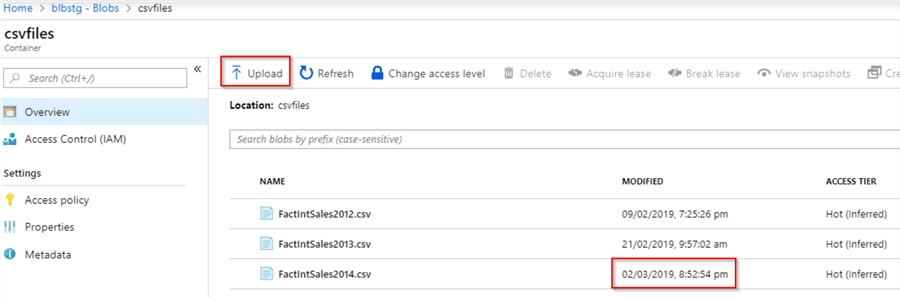

Next, I will upload a new file, using Upload button from Azure portal's blob storage page:

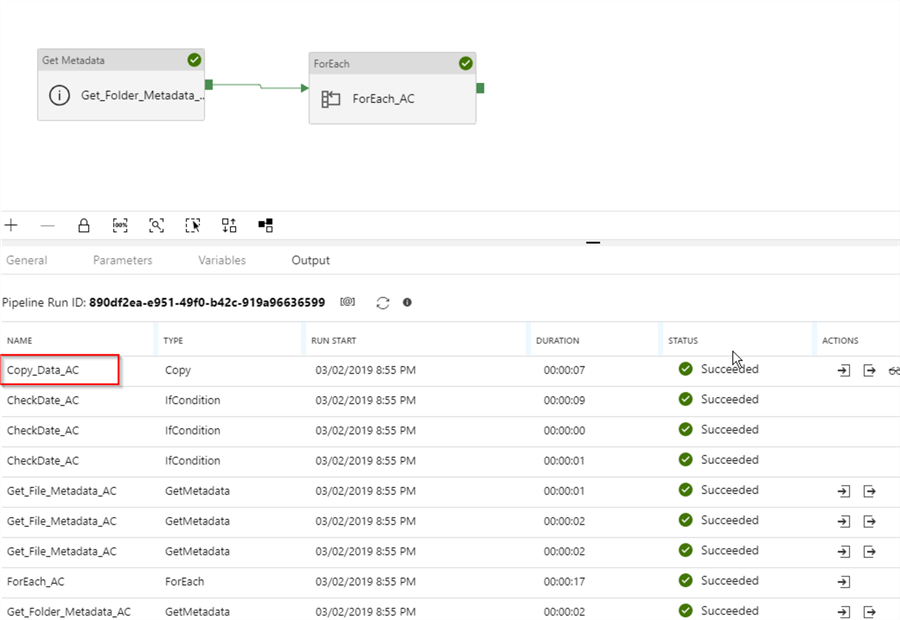

Let us execute the pipeline in a debug mode again and examine execution logs. As you can see from the logs, this execution included the copy activity as well, which is what we expected:

As usual, we will need to publish the changes, to ensure that they are permanent.

I have included JSON scripts for this pipeline here, for your reference.

Next Steps

- Read: Azure Data Factory ForEach Activity Example

- Read: Transfer On-Premises Files to Azure SQL Database

- Read: Azure Data Factory If Condition Activity

- Read: Get metadata activity in Azure Data Factory

About the author

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2019-11-28