By: Fikrat Azizov | Updated: 2019-11-29 | Comments (1) | Related: > Azure Data Factory

Problem

In previous posts we discussed a few Azure Data Factory (ADF) activities that have similar functionality to SQL Server Integration Services (SSIS) tasks. However, we also learned that in some cases SSIS components are more powerful and flexible than their ADF counterparts. Fortunately, ADF allows running SSIS packages in an Azure environment, which we are going to discuss in this article.

Solution

SSIS Integration Runtime

The ADF pipelines we built to this point have been managed by Azure Integration Runtime services. In contrast, deploying SSIS packages to Azure requires provisioning of SSIS Integration Runtime.

Just like the on-premises version of Integration Services, SSIS IR service needs a catalog database to host SSIS packages and store the execution related data. The catalog database for SSIS IR can be deployed to Azure SQL Server or Azure SQL Managed Instance.

Creating SSIS IR services involves a number of steps, well described here and I would recommend you go ahead and provision IR services, before proceeding further.

When provisioning SSIS IR, you will need to make following decisions:

- Determine Azure SQL server where catalog database will be deployed. The SSIS IR deployment wizard provisions the catalog database and names it as SSISDB, by default.

- Determine the authentication method used to connect to the catalog database (this could be either SQL or Azure Active Directory authentication)

- Whether or not SSIS IR needs to be join to the virtual networks. Joining to virtual networks is required, when SSIS packages need to access on-premises data sources (you can read more about this configuration here).

I have already provisioned a simple SSIS IR, named SsisIR, which I am going to use for deploying my SSIS package:

SSIS package configuration

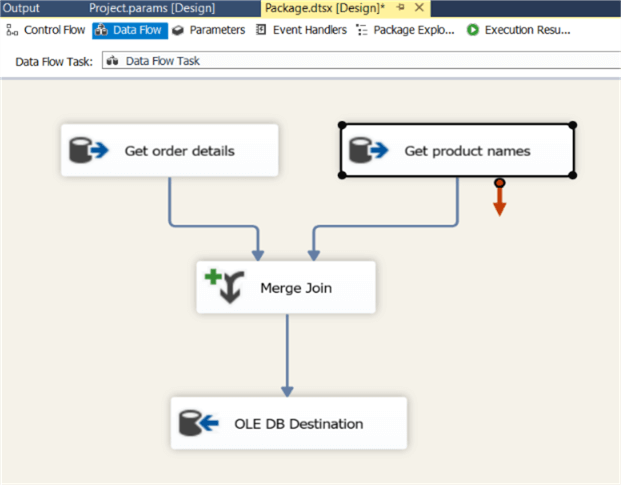

I’ve built an SSIS package with a single Data Flow task and following logic:

- Read sales order details from the SalesLT.SalesOrderDetail table in SrcDb Azure SQL Database

- Read the product names from SalesLT.Product table in DstDb Azure SQL Database

- Join above mentioned two streams, using a Merge Join component. This join will produce a dataset with an extra product name column

- Write the results into target SalesLT.SalesOrderDetail table in DstDb database

Here is the screenshot of the Data Flow task configuration:

We need to add an extra column to the destination table to allocate product names, so use the following script in the context of DstDb, to add the ProductName column:

ALTER TABLE [SalesLT].[SalesOrderDetail] ADD [ProductName] nvarchar(50)

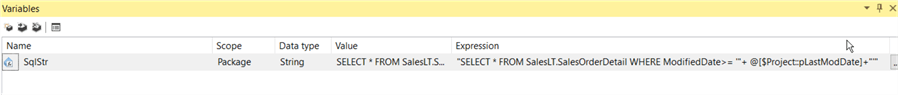

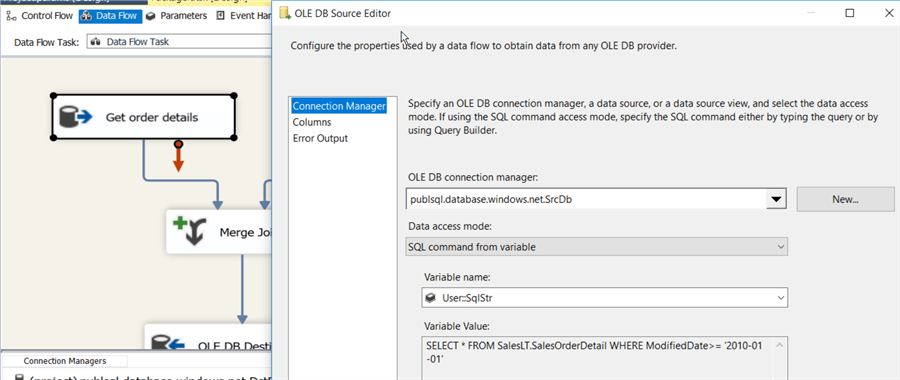

My SSIS project includes a string parameter pLastModDate, which will be populated by the ADF pipeline. I have also added a package variable SqlStr, which contains a dynamic expression, based on the pLastModDate parameter. This expression will ensure that source data includes only sales order lines with modified date, greater than the input parameter value.

The SqlStr variable will serve as a source variable for the Get order details component’s data source:

For those who want to see package details, I have included this SSIS project here to download.

SSIS package deployment with Azure Data Factory

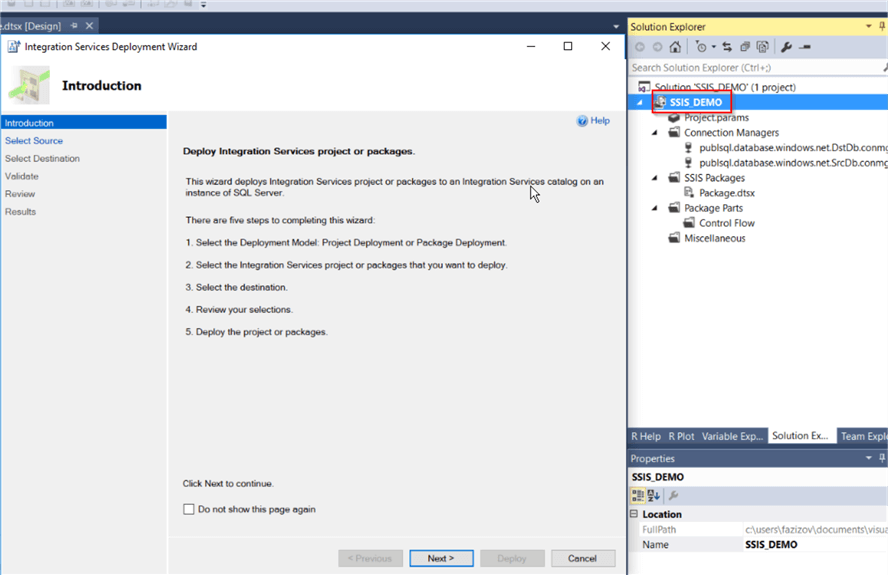

If you already provisioned the SSIS IR, you can proceed with the following steps to deploy the SSIS package to Azure:

In the Visual Studio IDE, right click on SSIS project name and select the Deploy command; this will open the project deployment dialogue window:

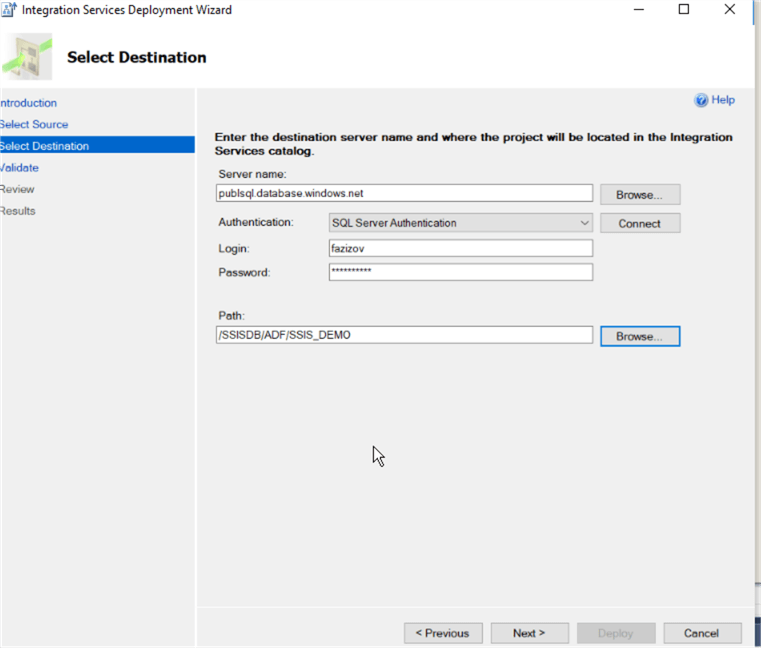

Next, enter the Azure SQL server name and folder, where the SSIS project will be deployed and provide the required credentials:

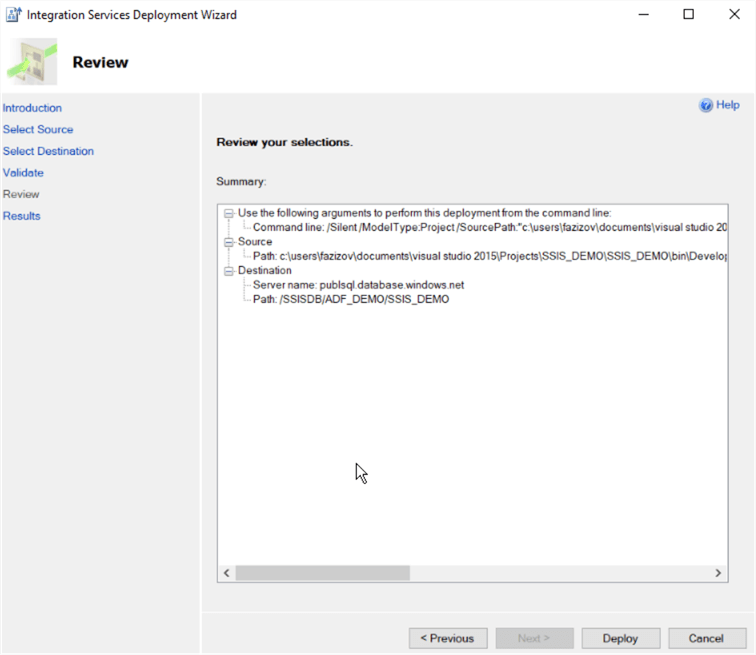

Finally, review all of the settings and confirm:

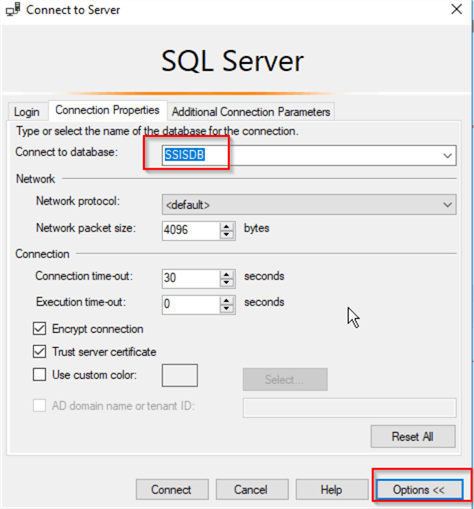

Once SSIS deployment completes, you can connect to SSISDB on Azure SQL Server and validate the results. Please note that you will need to specify SSISDB as a database name in your connection details to be able to see the packages deployed to SSIS catalog, as in the following screenshot:

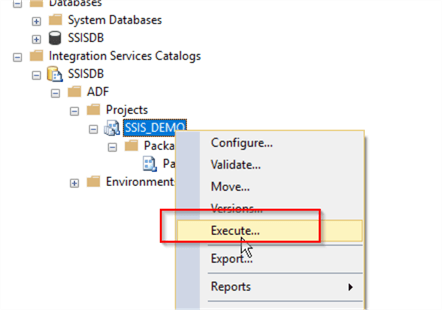

If you need to execute the SSIS package manually, you can do that from SSMS directly, using an execute command:

Execute SSIS package activity from Azure Data Factory

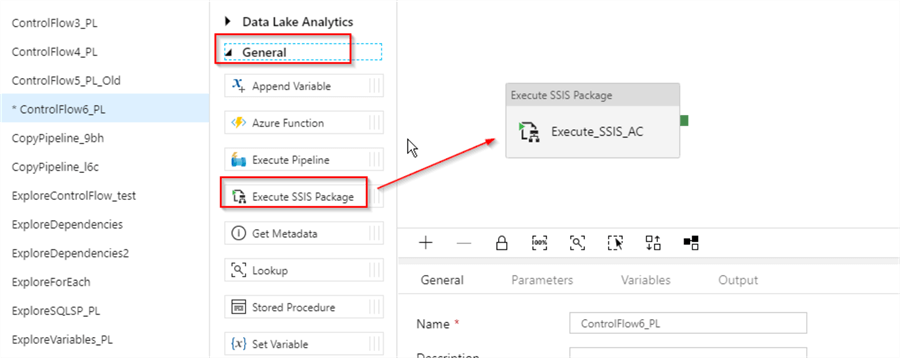

I have created a new pipeline named ControlFlow6_PL, to illustrate how an Execute SSIS package activity works. Let's open that pipeline and follow the below steps to configure Execute SSIS package activity:

Drag and drop Execute SSIS Package activity to the pipeline design surface and name it as Execute_SSIS_AC:

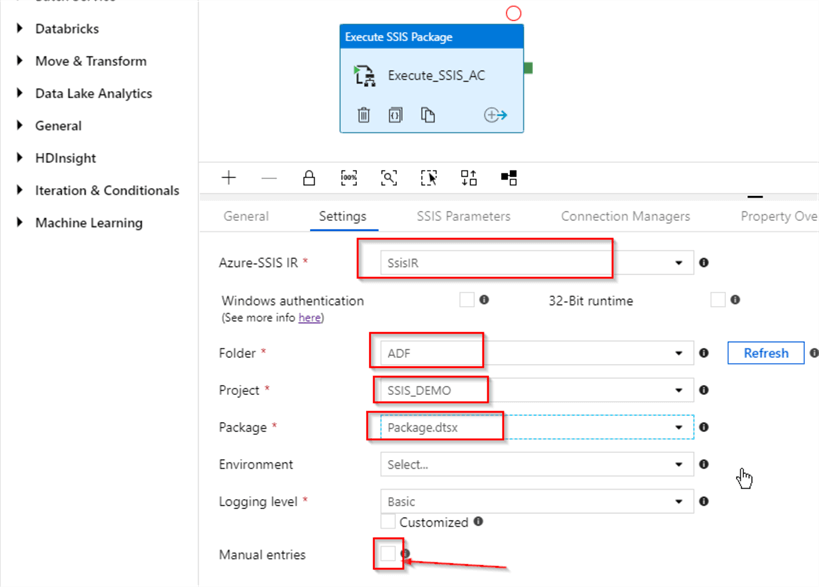

Switch to the Settings tab, select SSIS-IR from Azure SSIS IR drop-down list. Next, if SSIS IR is running and the Manual entries checkbox is clear, select the catalog folder, project and package names from the respective drop-down lists. If SSIS IR is not running or Manual entries check box is set, you would need to enter the package path manually. Please note this screen also allows you configuring the package logging level:

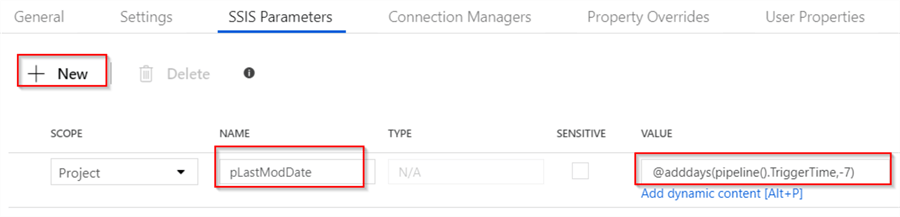

Switch to SSIS Parameters tab, add a new parameter, name it as pLastModDate and put an expression @adddays(pipeline().TriggerTime,-7) in its Value text box. This configuration will ensure that we’re filtering out rows with modified dates falling in the last seven days:

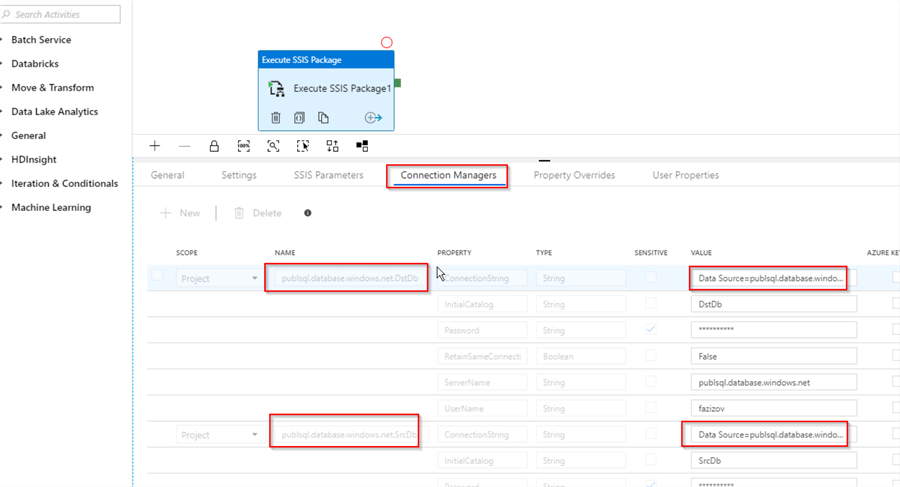

Switch to Connection Managers tab and ensure that the source and destination connection strings are correct:

Execute and monitor the pipeline in Azure Data Factory

Before we test the pipeline, let's purge all rows in the destination table, this will help us avoid possible duplicate key errors. Please execute the below script in the context of the DstDb database:

TRUNCATE TABLE [SalesLT].[SalesOrderDetail]

Next, execute the below script in the context of SrcDb database, to ensure that recently modified rows exist in the source table:

UPDATE [SalesLT].[SalesOrderDetail] SET ModifiedDate=getDate() WHERE SalesOrderID<=71784

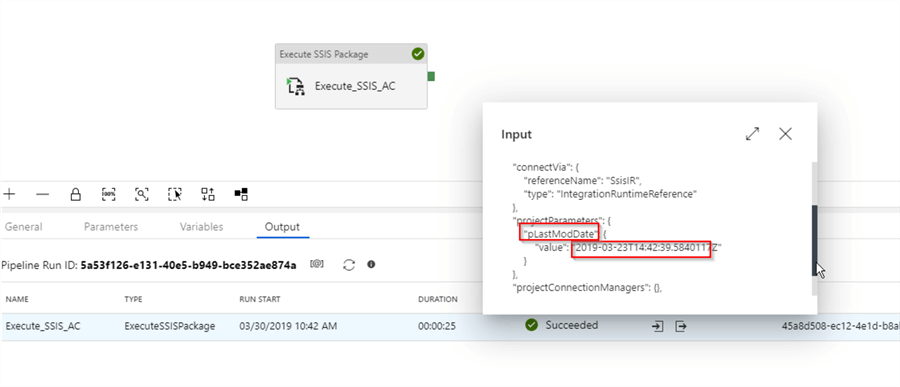

Finally, kick-off this pipeline in a debug mode and examine input parameter passed to the activity, using the Input button from the Output window:

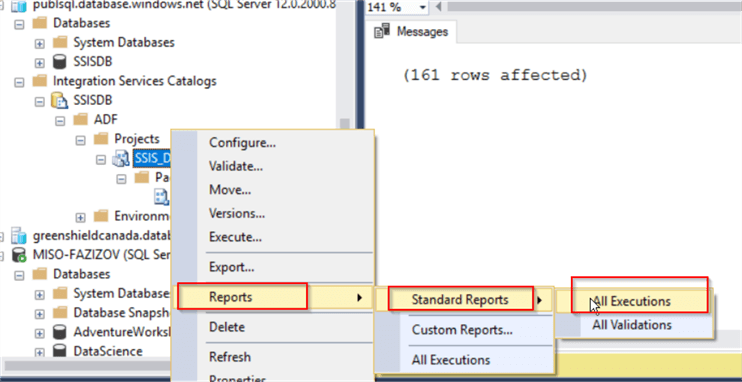

We can also inspect the SSIS package execution logs from SQL Server Management Studio (SSMS), by right clicking on the project name and using Reports/Standard Reports/all Executions command:

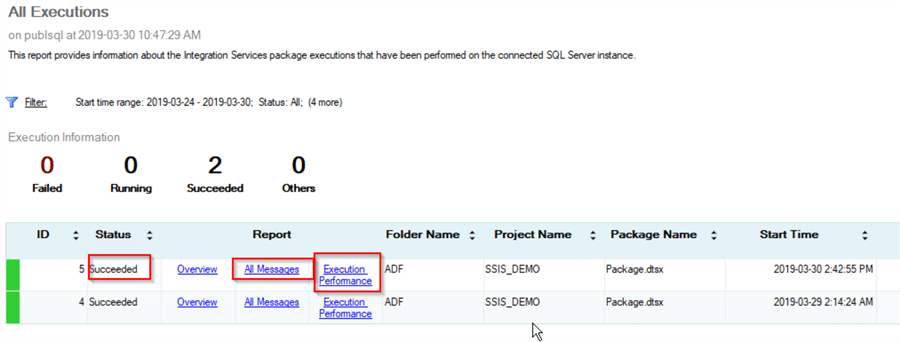

Just like with on-premises SSIS server’s catalog report, you can inspect execution status, detailed information/warning/error logs recorded during the package execution and examine performance dashboard, using this report (see this tip for more details on SSIS catalog reports):

Finally, once you are satisfied with the results, publish the pipeline, to ensure that the changes are preserved.

Also, because SSIS IR service are charged on an hourly basis, make sure that you stop your service, whenever you don’t need it.

Here is the JSON script for this pipeline, for your reference:

{

"name": "ControlFlow6_PL",

"properties": {

"activities": [

{

"name": "Execute_SSIS_AC",

"type": "ExecuteSSISPackage",

"policy": {

"timeout": "7.00:00:00",

"retry": 0,

"retryIntervalInSeconds": 30,

"secureOutput": false,

"secureInput": false

},

"typeProperties": {

"packageLocation": {

"packagePath": "ADF/SSIS_DEMO/Package.dtsx"

},

"loggingLevel": "Basic",

"environmentPath": null,

"connectVia": {

"referenceName": "SsisIR",

"type": "IntegrationRuntimeReference"

},

"projectParameters": {

"pLastModDate": {

"value": "@adddays(pipeline().TriggerTime,-7)"

}

}

}

}

]

},

"type": "Microsoft.DataFactory/factories/pipelines"

}

Next Steps

- Read: Configure an Azure SQL Server Integration Services Integration Runtime

- Read: Run an SSIS package with the Execute SSIS Package activity in Azure Data Factory

- Read: Join an Azure-SSIS integration runtime to a virtual network

About the author

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.

Fikrat Azizov has been working with SQL Server since 2002 and has earned two MCSE certifications. He’s currently working as a Solutions Architect at Slalom Canada.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2019-11-29