By: Svetlana Golovko | Updated: 2020-01-22 | Comments | Related: > Analysis Services Administration

Problem

We need to migrate our SQL Server Analysis Services (SSAS) instances from SQL Server 2012 to SQL Server 2017. What are the steps? What are the potential issues? Is there a difference for multidimensional and tabular models upgrade?

Solution

In this tip we will provide the steps for the SSAS migration using a backup/restore method and using a project deployment method (for the tabular models). Then we will outline the steps you may need to perform after the databases upgrade.

In this tip, we won't cover steps for the SSAS installation. Note, that we also won't cover PowerPivot SSAS Mode.

You have to make sure that the new SSAS 2017 instances are ready and that they are in the same mode as your old SSAS 2012 instances (Tabular or Multidimensional) before you start the databases migration. Make sure you selected the right mode during the installation:

Having the same instance name for the new 2017 instances … will help … for example..

Migrate SSAS Databases Using the Backup/Restore Method

The high-level steps for the SSAS upgrade as per Microsoft's article are:

- Backup the databases

- Restore the databases

- Process the databases

- Run databases consistency checks (DBCC for SSAS tabular and multidimensional databases)

- Test dependent applications

For the backup/restore method we will use a couple of dynamic scripts that are very useful if you have multiple SSAS databases per instance. These dynamic scripts will be generated based on SSAS Dynamic Management Views (DMVs).

Backup SSAS Databases

Connect with SQL Server Management Studio (SSMS) to your source SQL Server 2012 SSAS Instance. Click "Connect" in the Object Explorer, select "Analysis Services…", enter the SSAS instance name and click the "Connect" button:

Right-click on one of the databases name's and click "Back Up…". Select the backup options including the location of the backup file. Click "Script". Then select "Script Action to New Query Window":

Here is an example of the script that will be generated:

<Backup xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">

<Object>

<DatabaseID>_DemoSSAS_Tabular</DatabaseID>

</Object>

<File>C:\Temp\_DemoSSAS_Tabular.abf</File>

<ApplyCompression>false</ApplyCompression>

</Backup>

Save this script for your future migration if you have only one database on your SSAS instance.

If you have multiple databases, you can generate a single script to backup all your SSAS databases with a dynamic query. This query will run against DBSCHEMA_CATALOGS schema rowset. Note, that the script to query schema rowsets or SSAS Dynamic Management Views should run as an MDX script in SSMS:

SELECT '

<Backup xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">

<Object>

<DatabaseID>' + [CATALOG_NAME] + '</DatabaseID>

</Object>

<File>C:\temp\' + [CATALOG_NAME] + '.abf</File>

<AllowOverwrite>true</AllowOverwrite>

</Backup>' FROM $SYSTEM.DBSCHEMA_CATALOGS

Copy the script's output.

Insert at the top of the script "Batch" command. Make sure that Transaction attribute is set to "0" here:

<Batch Transaction="0" xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">

If the Transaction attribute is set to "1" you will get the following error:

<return xmlns="urn:schemas-microsoft-com:xml-analysis">

<results xmlns="http://schemas.microsoft.com/analysisservices/2003/xmla-multipleresults">

<root xmlns="urn:schemas-microsoft-com:xml-analysis:empty">

<Exception xmlns="urn:schemas-microsoft-com:xml-analysis:exception" />

<Messages xmlns="urn:schemas-microsoft-com:xml-analysis:exception">

<Error ErrorCode="3239968806" Description="Backup and restore errors: The Backup, Restore or Synchronize command cannot be executed within a user initiated transaction or in transactional batch." Source="Microsoft SQL Server 2012 Analysis Services" HelpFile="" />

</Messages>

</root>

</results>

</return>

Append the closing tag at the end of the backup script:

</Batch>

The final script to backup all databases will look like this:

<Batch Transaction="0" xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">

<Backup xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">

<Object>

<DatabaseID>BasicDataMining</DatabaseID>

</Object>

<File>C:\temp\SSASDB1.abf</File>

<AllowOverwrite>true</AllowOverwrite>

</Backup>

<Backup xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">

<Object>

<DatabaseID>SSASDB2</DatabaseID>

</Object>

<File>C:\temp\DMAddinsDB.abf</File>

<AllowOverwrite>true</AllowOverwrite>

</Backup>

</Batch>

Save the final script.

Make sure that you run the script as XMLA script when you run it later. Right-click in SSMS on the SSAS instance, click "New Query…", select "XMLA":

Read more about Analysis Services Scripting Language here.

Here is a quick reference to other SSAS languages.

Restore SSAS Databases

The SSAS database restore steps could be found here.

We will generate the restore script for multiple databases similar to the Backup dynamic script:

SELECT ' <Restore xmlns="http://schemas.microsoft.com/analysisservices/2003/engine"> <File>C:\temp\SSAS\' + [CATALOG_NAME] + '.abf</File> <DatabaseName>' + [CATALOG_NAME] + '</DatabaseName> </Restore> ' FROM $SYSTEM.DBSCHEMA_CATALOGS

You may need to add other parameters to the script if you use, for example, backup encryption.

Add "Batch" tags before the output and after as we did for the backup script. The final restore script will look something like this:

<Batch Transaction="0" xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">

<Restore xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">

<File>C:\temp\SSASDB1.abf</File>

<DatabaseName>SsasDb1</DatabaseName>

</Restore>

<Restore xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">

<File>C:\temp\SSASDB2.abf</File>

<DatabaseName>SsasDb2</DatabaseName>

</Restore>

<Restore xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">

<File>C:\temp\SSASDB3.abf</File>

<DatabaseName>SsasDb3</DatabaseName>

</Restore>

</Batch>

There are some potential issues with SSAS restore related to the permissions that you need to be aware of. Check this article from Microsoft for the details.

Post-Migration Steps

The high-level post-migration steps are:

- Update models' compatibility mode if required. Check here which operations require that the source databases share the same compatibility level (for the multidimensional databases).

- Process all databases

- Run DBCC for all SSAS databases

Updating Multidimensional Databases Compatibility Level

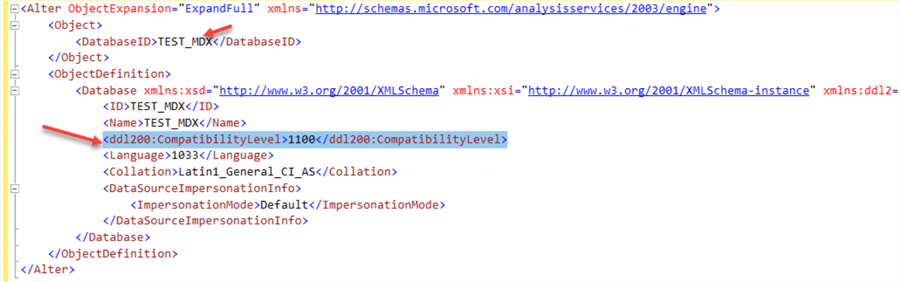

You don't need to do anything if you are migrating multidimensional databases from SSAS 2012 or higher. They will all have the compatibility level of 1100 after the databases restore.

Run this MDX query after databases migration to check the compatibility levels:

SELECT [Catalog_Name], Compatibility_level FROM $SYSTEM.DBSCHEMA_CATALOGS

An exception will be if a database was migrated from SSAS 2008. Then the compatibility level will be 1050:

To update the compatibility level of a multidimensional database from 1050 to 1100 in SSMS follow the steps from this article:

1. Before raising the compatibility level, backup the database in case you want to reverse your changes later.

2. Using SQL Server Management Studio, connect to the SQL Server 2017Analysis Services server that hosts the database.

3. Right-click the database name, point to Script Database as, point to ALTER to, and then select New Query Editor Window. An XMLA representation of the database will open in a new window.

4. Copy the following XML element:

<ddl200:CompatibilityLevel>1100</ddl200:CompatibilityLevel>

5. Paste it after the </Annotations> closing element and before the <Language> element. The XML should look similar to the following example:

</Annotations> <ddl200:CompatibilityLevel>1100</ddl200:CompatibilityLevel> <Language>1033</Language>

6. Save the file.

7. To run the script, click Execute on the Query menu or press F5.

Here is an example of the ALTER command for our TEST_MDX database (note that the "Annotations" tag is not present here):

Check the compatibility level again after executing the "ALTER" script above:

SELECT [Catalog_Name], Compatibility_level FROM $SYSTEM.DBSCHEMA_CATALOGS WHERE [Catalog_Name] = 'TEST_MDX'

Updating Tabular Databases Compatibility Level

This Microsoft article provides details about tabular compatibility levels, the supportability of these levels and high-level steps to upgrade the compatibility levels.

The compatibility level that you are going to have in SSAS 2012 most likely will be 1103 (it could be 1100 as well). These levels are deprecated in SSAS 2017 and it's recommended to update the databases to the latest level available (1400 in SSAS 2017).

We will still have SSAS 2012 levels after we restore tabular databases from SSAS 2012 backups:

Note that if you are not planning to keep the old compatibility level then you can skip the restore step for the tabular databases migration. We have restored the databases to demonstrate the restore process and to check the databases' properties after the restore.

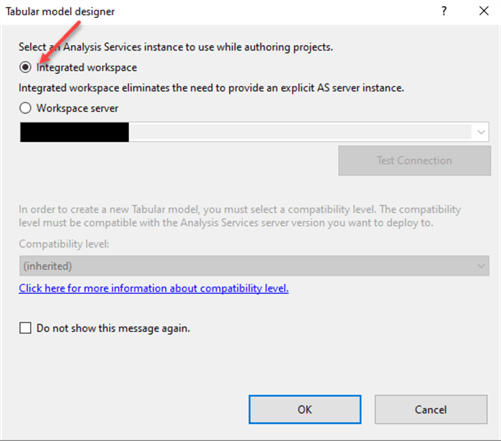

This tip describes how to change the compatibility level for the new and existing Tabular Projects using SQL Server Data Tools (SSDT).

But how can you do this if you don't have any existing SSAS projects or can't access them?

Here are the steps:

- Run Visual Studio or SSDT as Administrator

- Click "File", "New", "Project…"

- Select "Import from Server (Tabular)":

- Select the Workspace:

- Click "OK" and enter the connection information (SSAS instance name and the database name):

- Click "OK". The project is created now.

- Double click on the model file to open it.

- Review the model's properties and note the compatibility level (1103):

- Select "SQL Server 2017 / Azure Analysis Services (1400)" from the drop-down list and confirm compatibility level update:

- Note, that you may not see the "1400" compatibility level if you have an older version of SSDT or Visual Studio. This article has release notes for the SSDT. You will need to use SSDT version 17.0 or later for Visual Studio 2015.

- Validate the compatibility level after the project is reloaded:

- Right-click on the solution name and select properties. Update the name of the Deployment Server to the new SSAS 2017 instance where we want to migrate our tabular database. Click "OK" to save the changes:

- On the Analysis Services toolbar click "Existing Connections" icon:

- Validate or update the connections.

- Right-click on the Solution name and click "Deploy". Validate that deployment is successful:

- Connect to the target SSAS 2017 instance with SSMS and review the deployed tabular database's compatibility level either by checking the database's properties:

- Or by running the query that we used before against the DBSCHEMA_CATALOGS schema rowset:

- Note, that the deployment may fail for different reasons (permissions, syntax error, the edition used, features deprecation, etc.).

Process Databases

All models must be processed before they could be used. Queries for the unprocessed objects will not be resolved and may break applications that use the models.

The processing for the Tabular projects will occur during their deployment to the new SSAS instance if you deploy the projects from Visual Studio or SSDT. The multidimensional databases will be processed as well if you use the deployment method.

Read more about multidimensional models processing here and about tabular models here.

If you use backup/restore method for the SSAS databases migration, then you can use a query below to generate a single script for all databases processing (run the scrip below as the MDX script):

SELECT '<Process xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">

<Type>ProcessFull</Type>

<Object>

<DatabaseID>' + [CATALOG_NAME] + '</DatabaseID>

</Object>

</Process>

' FROM $SYSTEM.DBSCHEMA_CATALOGS

Modify the output. Add "Batch" tags before the output and after as we did for the backup script:

Run the final script as the XMLA script when you are ready to process the databases.

Note that for the newer compatibility levels you will get the following error during tabular databases processing:

The multidimensional databases will be processed fine regardless of the compatibility level.

Here are the compatibility modes of our tabular databases:

Let's process the database with compatibility level 1400 (SSAS 2017) in SSMS and generate the script for the processing:

The generated script will be in JSON format and will be using "Refresh" TMSL (Tabular Model Scripting Language) command:

{

"refresh": {

"type": "full",

"objects": [

{

"database": "TabularProjectImport_Upgrade"

}

]

}

}

The TMLS is used for the tabular databases starting with compatibility level 1200 (SSAS 2016).

If we try to use JSON script above for the processing of the databases with a legacy compatibility level, you will get another error:

An unexpected exception occurred.

Here is the final dynamic query for generating processing script the tabular databases with compatibility modes lower than 1200:

SELECT '<Process xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">

<Type>ProcessFull</Type>

<Object>

<DatabaseID>' + [CATALOG_NAME] + '</DatabaseID>

</Object>

</Process>'

FROM $SYSTEM.DBSCHEMA_CATALOGS

WHERE COMPATIBILITY_LEVEL <=1103

Wrap it up in the "Batch" tags and run as a single script.

Here is the final dynamic query for generating processing script the tabular databases with compatibility modes starting 1200:

SELECT '{"refresh": {"type": "full",

"objects": [{"database": "' + [CATALOG_NAME] + '"}]

}}'

FROM $SYSTEM.DBSCHEMA_CATALOGS

WHERE COMPATIBILITY_LEVEL >1103

You will need to run the output script line by line (separately for each database). The Refresh JSON command can only run for one database at a time:

{"refresh": {"type": "full", "objects": [{"database": "TabularProject_2016"}] }}

{"refresh": {"type": "full", "objects": [{"database": "TabularProjectImport_Upgrade"}] }}

Run Database Consistency Checks (DBCC)

The last step before the testing of your applications is running DBCC for the SSAS databases.

Note, that the databases must be in the processed state in order to run the DBCC. Also, as per Microsoft's article:

For Tabular databases, consistency checks performed by DBCC are equivalent to the built-in validation that occurs automatically every time you reload, synchronize, or restore a database. In contrast, consistency checks for Multidimensional databases happen only when you run DBCC on demand.

The keyword here is "equivalent". If you trace these operations with SQL Server Profiler or xEvent sessions in SSMS you won't see the DBCC command executed explicitly.

In our DBCC examples we will use dynamic queries against DBSCHEMA_CATALOGS schema rowset (similar to the queries for the backups/restore/process databases above).

Here is the dynamic query to generate the DBCC script for each multidimensional as well as tabular 1100 and 1103 database:

SELECT '<DBCC xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">

<Object>

<DatabaseID>' + [CATALOG_NAME] + '</DatabaseID>

</Object>

</DBCC>' FROM $SYSTEM.DBSCHEMA_CATALOGS WHERE COMPATIBILITY_LEVEL < 1200

Here is the dynamic query to generate the DBCC for the tabular databases at the 1200 and higher compatibility level (note the difference in the namespace):

SELECT '<DBCC xmlns="http://schemas.microsoft.com/analysisservices/2014/engine">

<DatabaseID>' + [CATALOG_NAME] + '</DatabaseID>

</DBCC>' FROM $SYSTEM.DBSCHEMA_CATALOGS WHERE COMPATIBILITY_LEVEL >= 1200

Refer to this post if you want to make the query even more generic/dynamic. This could be done by using SQL Server linked server to SSAS (use "CASE" for the COMPATIBILITY_LEVEL column for "Set @XMLA=").

Note, that you need to run DBCC commands one by one for each database. Batch mode is not supported, and you will get the following error if you try to run the output as a batch:

Here is an example of the DBCC commands generated for the databases with current and older compatibility levels:

<DBCC xmlns="http://schemas.microsoft.com/analysisservices/2014/engine">

<DatabaseID>TabularProjectImport_Upgrade</DatabaseID>

<TableName>Fact_Scenario</TableName>

</DBCC>

<DBCC xmlns="http://schemas.microsoft.com/analysisservices/2003/engine">

<Object>

<DatabaseID>TEST_SSAS_TAB</DatabaseID>

</Object>

</DBCC>

Other Post-Migration Steps

Some other post-migration steps may include:

- Data Sources and impersonation accounts check for each database (to make sure that connections are valid):

SELECT [Name], ConnectionString, Account from [AFE].$SYSTEM.TMSCHEMA_DATA_SOURCES

- Replacing Drillthrough Actions with Detail Rows Expression.

Conclusion

At this point, the SSAS databases are migrated, upgraded and validated. The next step is testing dependent processes and applications' functionality.

Please note, that examples in this tip are provided to demonstrate simple steps for the databases migrations and to highlight the differences in the upgrade steps for the different compatibility levels. We used SSMS to run all the commands, but you can use different tools (for example, Analysis Services PowerShell cmdlets, ASCMD). Some of these tools offer better automation capabilities.

Read more about available tools in the Tools and Approaches for Processing (Analysis Services) article from Microsoft.

Next Steps

- Read other tips related to the SSAS Administration here.

- Read about "How to automate SSAS tabular model processing in SQL Server 2016" here.

- Read this tip about "Using ASCMD to run command line scripts for SQL Server Analysis Services".

- Read Part 1 and Part 2 tips about Automating SQL Server Analysis Services Tasks with PowerShell.

- Read more about TMSL here.

- This article is about comparing tabular and multidimensional solutions.

- Read about "What's New in Analysis Services Tabular 2017" here.

About the author

Svetlana Golovko is a DBA with 13 years of the IT experience (including SQL Server and Oracle) with main focus on performance.

Svetlana Golovko is a DBA with 13 years of the IT experience (including SQL Server and Oracle) with main focus on performance. This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2020-01-22