By: John Miner | Updated: 2020-01-29

Problem

I hope you noticed the similarity of this article's title to a famous novel, "A Tale of Two Cities" by Charles Dickens. This novel is Dickens's best-known work of historical fiction, with over 200 million copies sold, and it is regularly cited as one of the best-selling novels of all time. It was required reading in one of my English classes during high school.

The reason behind the name of this article is the fact that Azure has two hubs that can be used for capturing messages (events). Both Azure IoT Hub and Azure Event Hubs are cloud services that can receive telemetry (messages) and process and/or store that data for business insights. These services support the ingestion of large amounts of data with low latency and high reliability, but they are designed for different purposes.

IoT Hub was designed for connecting devices to the internet. Unlike Event Hubs, its communication can be bi-directional. This cloud-to-device communication opens the doorway to many different scenarios: a device can be updated with new configuration settings or perform actions upon request, and intelligent processing (machine learning) can be updated when using an Azure IoT Edge device. Last but not least, IoT Hub provides a framework for device provisioning, device identity, and data transfer.

Event Hubs was designed as a big data streaming service capable of processing millions of messages per second. Its partitioned consumer model can be used to scale out the processing of streaming data. Azure big data services such as Databricks, Stream Analytics, and HDInsight can read and process the data from the hub, and Azure Data Lake Storage (ADLS) can serve as a destination for captured events. Unlike IoT Hub, identity is handled with shared access signatures, and fewer protocols are supported (AMQP and HTTPS, plus a Kafka endpoint on the Standard tier).

Today’s focus will be on deploying and configuring the Azure Event Hub service. This service is less complex and will work fine for an IoT prototype. How can we accomplish this task?

Solution

The Azure Portal is the go-to place for one-time deployments. For repeatable tasks, I suggest using either code or ARM templates. In this article, I will explore how to deploy and configure the Azure Event Hub service using the Azure Portal.
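For the repeatable route, the namespace and hub could be described in an ARM template instead of clicked together in the portal. The sketch below builds such a template as a Python dictionary and serializes it to JSON. The resource types `Microsoft.EventHub/namespaces` and `Microsoft.EventHub/namespaces/eventhubs` are real ARM types, but the API version, the property names, and the default values (Standard tier, auto-inflate up to 20 units, 4 partitions, 7 days of retention) are assumptions for this proof of concept; check them against the current template reference before deploying anything.

```python
import json


def build_event_hub_template(namespace: str, hub: str, location: str = "eastus2",
                             partitions: int = 4, retention_days: int = 7,
                             max_throughput_units: int = 20) -> dict:
    """Return an ARM template (as a dict) for one namespace and one event hub.

    Assumption: API version 2017-04-01 and the property names below; verify
    them against the Microsoft.EventHub template reference before use.
    """
    api_version = "2017-04-01"
    namespace_resource = {
        "type": "Microsoft.EventHub/namespaces",
        "apiVersion": api_version,
        "name": namespace,
        "location": location,
        # Standard tier starting at 1 throughput unit.
        "sku": {"name": "Standard", "tier": "Standard", "capacity": 1},
        "properties": {
            # Auto-inflate lets Azure raise throughput units up to this maximum.
            "isAutoInflateEnabled": True,
            "maximumThroughputUnits": max_throughput_units,
        },
    }
    hub_resource = {
        "type": "Microsoft.EventHub/namespaces/eventhubs",
        "apiVersion": api_version,
        # Child resources are named "<namespace>/<hub>".
        "name": f"{namespace}/{hub}",
        "dependsOn": [f"[resourceId('Microsoft.EventHub/namespaces', '{namespace}')]"],
        "properties": {
            "partitionCount": partitions,
            "messageRetentionInDays": retention_days,
        },
    }
    return {
        "$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "resources": [namespace_resource, hub_resource],
    }


template = build_event_hub_template("ehns4tips2020", "ehub4tips2020-soda")
print(json.dumps(template, indent=2))
```

The printed JSON could then be deployed with the Azure CLI or PowerShell; keeping it in source control is what makes the deployment repeatable.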

Business Problem

Our boss has asked us to investigate connecting the machines owned by Collegiate Vending, Inc. to the cloud to capture soda product sales. The ultimate goal is to save the messages in an Azure SQL database for analysis and reporting. Our fictitious company has a contract to distribute Coca-Cola products and vending machines to various educational institutions in the United States of America.

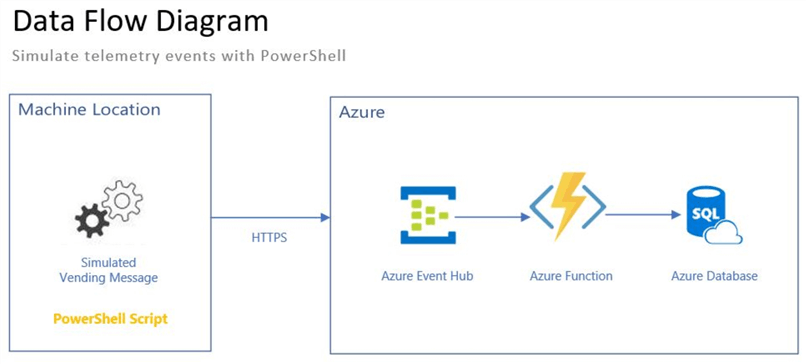

In a prior article, we created a PowerShell script that writes messages to an Azure Event Hub over HTTPS. The diagram below depicts the data flow in our system.

Today, our job is to create and configure an Azure Event Hub service for the test program that sends simulated soda machine telemetry to the hub. We need to have an understanding of event hub features before we can successfully deploy a hub.

About Namespaces

The event hub namespace is the logical parent object that can contain one or more event hubs. For database administrators, a namespace is conceptually the same as a logical database server. It is a unique scoping container that can be referenced by a fully qualified domain name (FQDN). Security can be granted at this level; however, I would not recommend it, since the client application would then have access to all hubs in the namespace.

The namespace is deployed as part of event hub creation when using the Azure Portal. There are two types of Event Hubs offerings: a regular (non-clustered) namespace and a dedicated cluster. The fictitious Collegiate Vending company is only processing 200K records per hour. Therefore, the non-clustered deployment has plenty of horsepower to handle this workload.

The usual information is required to deploy the namespace object. Please see the next section for details.

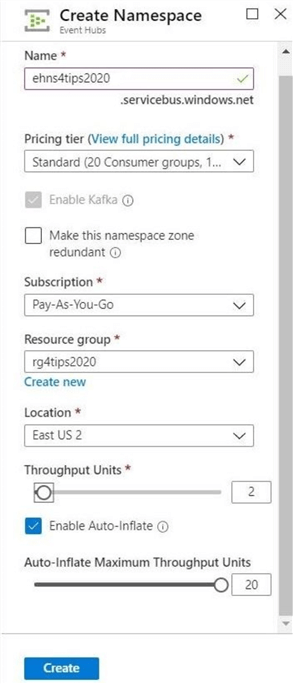

The following information is specific to this service: tier, throughput units, and zone redundancy. The Azure Event Hub service has two tiers: Basic and Standard. The tier determines how many connections and consumer groups the namespace can have. Throughput units define how much data can flow per second: each unit allows up to 1 MB (or 1,000 events) per second of ingress and 2 MB (or 4,096 events) per second of egress. I really like the auto-inflate feature of this service, which raises the number of throughput units as the load grows. I suggest you set the minimum level to 1 and the maximum level to 20. Apache Kafka messaging is automatically enabled on the Standard tier in the current version of the service.
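To put throughput units in context, the sketch below estimates how many units a steady ingress load needs, using the per-unit ingress limit of 1 MB or 1,000 events per second, whichever is reached first. Egress is not modeled. The 1 KB average message size is a hypothetical value, not a measurement from the soda machines, and the result is clamped to the 1 to 20 auto-inflate range suggested above.

```python
import math

# Per-throughput-unit ingress limits (assumed from the Event Hubs quota documentation).
INGRESS_BYTES_PER_TU = 1024 * 1024   # 1 MB per second
INGRESS_EVENTS_PER_TU = 1000         # 1,000 events per second


def required_throughput_units(events_per_hour: float, avg_event_bytes: int,
                              minimum: int = 1, maximum: int = 20) -> int:
    """Estimate throughput units for a steady ingress load, clamped to [minimum, maximum]."""
    events_per_sec = events_per_hour / 3600
    bytes_per_sec = events_per_sec * avg_event_bytes
    # Whichever limit is hit first (bytes or event count) drives the unit count.
    needed = max(math.ceil(bytes_per_sec / INGRESS_BYTES_PER_TU),
                 math.ceil(events_per_sec / INGRESS_EVENTS_PER_TU))
    return min(max(needed, minimum), maximum)


# 200K soda sales per hour at a hypothetical 1 KB per message.
print(required_throughput_units(200_000, 1024))  # 1
```

At roughly 56 events per second, the soda workload sits far below a single unit, which supports the point that the non-clustered deployment has plenty of headroom.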

There is one important thing to remember: some of these settings, such as zone redundancy, cannot be changed after deployment. Thus, choose wisely when creating this scoping container.

Deploy Namespace

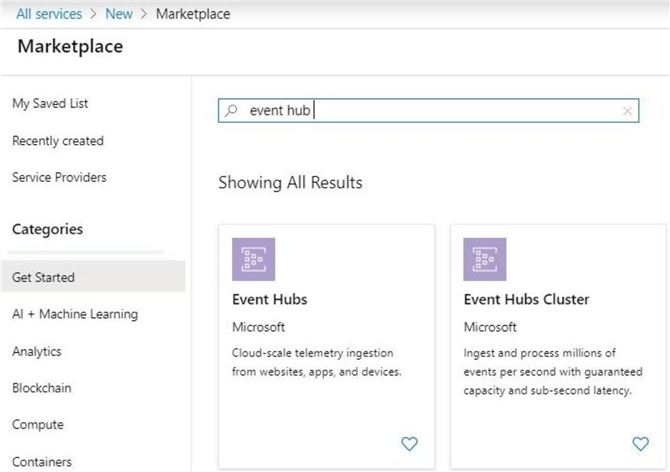

Now that we are informed about the choices for the namespace, let's deploy the service for our company. A search of the Azure Marketplace brings up two offerings for Event Hubs. Choose the non-clustered installation option.

The typical overview page for a service is shown below. Links to documentation and pricing are informative for the first use. Choose the create button to continue the deployment.

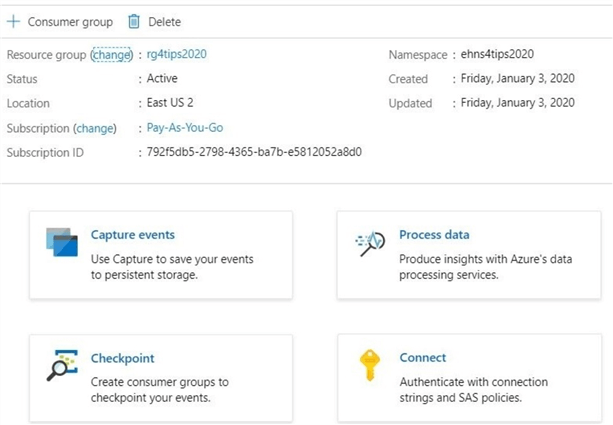

The image below shows the deployment of a namespace called ehns4tips2020. Note that the FQDN ends with the servicebus.windows.net domain, which suggests that Azure Service Bus is the foundation of this service. The subscription (pay-as-you-go), resource group (rg4tips2020), and location (East US 2) are entered to complete the basic information required by all Azure objects. Click the Create button to move on to the next screen.
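Because the namespace name becomes part of a public DNS name, it must follow naming rules. The sketch below checks a name against the basic rules as I understand them from the Microsoft documentation (6 to 50 characters; letters, numbers, and hyphens only; starts with a letter; ends with a letter or number) and then builds the FQDN. The function names are my own, and global uniqueness can only be confirmed by Azure at deployment time.

```python
import re

# Assumed naming rules: 6-50 characters, letters/digits/hyphens,
# must start with a letter and end with a letter or digit.
_NAMESPACE_PATTERN = re.compile(r"[A-Za-z][A-Za-z0-9-]{4,48}[A-Za-z0-9]")


def is_valid_namespace_name(name: str) -> bool:
    """Return True if the whole name matches the assumed Event Hubs namespace rules."""
    return _NAMESPACE_PATTERN.fullmatch(name) is not None


def namespace_fqdn(name: str) -> str:
    """Build the fully qualified domain name for an Event Hubs namespace."""
    if not is_valid_namespace_name(name):
        raise ValueError(f"invalid namespace name: {name!r}")
    return f"{name}.servicebus.windows.net"


print(namespace_fqdn("ehns4tips2020"))  # ehns4tips2020.servicebus.windows.net
```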

We will need more background information about Event Hubs to finish the deployment selections.

About Event Hubs

Publishers write events to the hub and subscribers read events from the hub. Publishers connect to the hub via the HTTPS or AMQP protocol, and the choice depends on the usage scenario. AMQP requires a persistent bidirectional socket, which has a higher network cost when the session is initialized. On the other hand, HTTPS incurs additional SSL/TLS overhead for every request. I may look at AMQP in a future article so that a performance comparison can be made. Messages can be sent singly or in batches. Regardless of the sending method, the size limit for a single event or a whole batch is 1 MB on the Standard tier (256 KB on the Basic tier).
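Since single and batched sends share the same size ceiling, a sender has to split its messages into batches that stay under the limit. The sketch below greedily packs JSON-encoded messages into batches of at most 1 MB. It is a simplification: the real client libraries also count per-event protocol overhead, so a production sender should rely on the SDK's own batch object rather than this raw byte count. The message fields are hypothetical.

```python
import json
from typing import Iterable, List

MAX_BATCH_BYTES = 1024 * 1024  # assumed 1 MB limit (Standard tier)


def make_batches(messages: Iterable[dict], limit: int = MAX_BATCH_BYTES) -> List[List[bytes]]:
    """Greedily group JSON-encoded messages into batches no larger than `limit` bytes.

    Protocol overhead is ignored here; real SDK batches reserve extra room.
    """
    batches: List[List[bytes]] = []
    current: List[bytes] = []
    current_size = 0
    for message in messages:
        body = json.dumps(message).encode("utf-8")
        if len(body) > limit:
            raise ValueError("a single message exceeds the size limit")
        # Start a new batch when this message would overflow the current one.
        if current and current_size + len(body) > limit:
            batches.append(current)
            current, current_size = [], 0
        current.append(body)
        current_size += len(body)
    if current:
        batches.append(current)
    return batches


# Hypothetical soda sales from one machine.
sales = [{"machine_id": 1, "product": "Coke", "qty": 1} for _ in range(3)]
print(len(make_batches(sales)))  # 1
```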

The number of partitions and retention period are key properties of an event hub. Why are these numbers important?

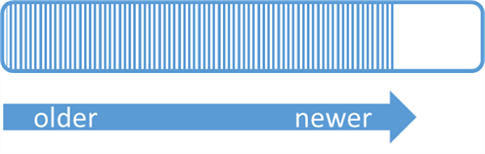

The above image shows the conceptual view of a single partition. A partition is a sliding queue: new messages are appended at one end, and messages drop off the other end once they are older than the retention period. The retention period for an event hub can be between 1 and 7 days.
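The sliding behavior of a partition can be mimicked with a small in-memory model. In the sketch below, each event receives an increasing sequence number as it is appended, and events older than the retention period fall off the old end when the partition is trimmed. The `Partition` class is hypothetical and only illustrates the idea; it is not how the service actually stores data.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List, Tuple

SECONDS_PER_DAY = 86_400


@dataclass
class Partition:
    """Toy model of one partition: an append-only log with time-based retention."""
    retention_days: int = 1
    _log: Deque[Tuple[int, float, str]] = field(default_factory=deque, init=False)
    _next_sequence: int = field(default=0, init=False)

    def append(self, body: str, enqueued_at: float) -> int:
        """Append an event at the new end of the log and return its sequence number."""
        seq = self._next_sequence
        self._log.append((seq, enqueued_at, body))
        self._next_sequence += 1
        return seq

    def trim(self, now: float) -> None:
        """Drop events older than the retention period from the old end of the log."""
        cutoff = now - self.retention_days * SECONDS_PER_DAY
        while self._log and self._log[0][1] < cutoff:
            self._log.popleft()

    def read_from(self, sequence: int) -> List[str]:
        """Return the bodies of retained events at or after a sequence number."""
        return [body for seq, _, body in self._log if seq >= sequence]


p = Partition(retention_days=1)
p.append("sale-1", enqueued_at=0)
p.append("sale-2", enqueued_at=SECONDS_PER_DAY)
p.trim(now=SECONDS_PER_DAY + 10)
print(p.read_from(0))  # ['sale-2']
```

Because a reader asks for "everything from sequence N onward", different readers can start at different positions within the retained window.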

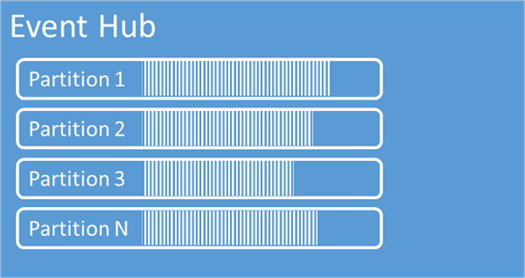

Each event hub can have multiple partitions; the partition count ranges from 1 to 32. It is a best practice to have a one-to-one relationship between throughput units and partitions. This helps ensure that enough resources are available for writing to and reading from each partition.

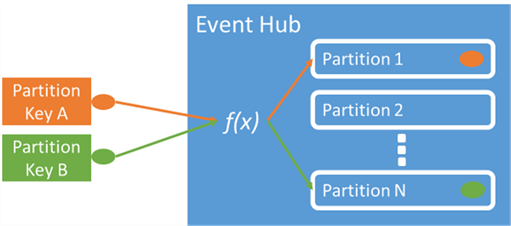

When writing to an event hub that has multiple partitions without specifying a partition key, the messages are spread across the N partitions. Therefore, the order of the events is not preserved across the hub. If a partition key is specified, then all messages with the same key are written to the same partition, and their order is guaranteed within that partition. The image below shows how this mapping takes place.
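The mapping rule can be sketched in a few lines. Without a key, the hypothetical router below rotates round-robin across partitions; with a key, it hashes the key so that the same key always lands on the same partition. The service uses its own internal hash function, so the CRC-32 used here is only a stand-in, chosen because it is stable across runs (Python's built-in `hash()` is randomized per process). The partition a real event lands on will differ, and the machine ids are hypothetical.

```python
import itertools
import zlib
from typing import Optional


class PartitionRouter:
    """Illustrative router: round-robin without a key, hash-based with a key."""

    def __init__(self, partition_count: int) -> None:
        self.partition_count = partition_count
        self._round_robin = itertools.cycle(range(partition_count))

    def route(self, partition_key: Optional[str] = None) -> int:
        if partition_key is None:
            # No key: spread events across partitions, so overall order is not preserved.
            return next(self._round_robin)
        # Key: stand-in stable hash (CRC-32), not the service's real algorithm.
        return zlib.crc32(partition_key.encode("utf-8")) % self.partition_count


router = PartitionRouter(partition_count=4)
print([router.route() for _ in range(5)])                         # [0, 1, 2, 3, 0]
print(router.route("machine-17") == router.route("machine-17"))  # True
```

Using the machine id as the partition key would keep each soda machine's sales in order, at the cost of possibly uneven partitions if some machines are much busier than others.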

Deploy Event Hub

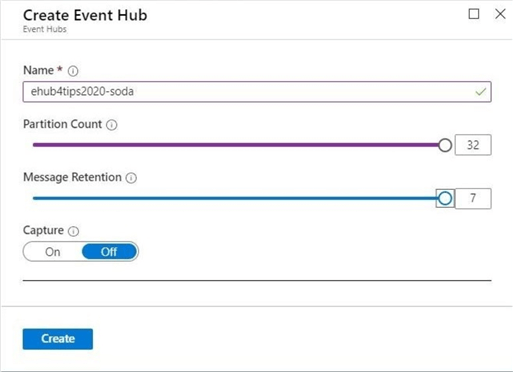

The Create Event Hub dialog box allows the user to pick the partition count and message retention period. I chose the maximum values in the window shown below.

You can set the partition count and message retention to 1 for this proof of concept. If you are using the deployment template from the Portal, choose OK to build both the namespace and the hub at the same time. The message retention and capture options can be changed after deployment, but the partition count is static for the lifetime of the object.
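One way to keep these rules straight is to validate a planned configuration before deploying it. The sketch below encodes the ranges described in this article (1 to 32 partitions, 1 to 7 days of retention) and reports which planned changes would force the hub to be re-created. The class and method names are hypothetical.

```python
from dataclasses import dataclass
from typing import ClassVar, List, Tuple


@dataclass(frozen=True)
class EventHubSettings:
    """Planned settings for one event hub, checked against the article's limits."""
    name: str
    partition_count: int = 1
    retention_days: int = 1

    # Partition count is fixed for the life of the hub; retention can be changed later.
    IMMUTABLE: ClassVar[Tuple[str, ...]] = ("partition_count",)

    def validate(self) -> None:
        if not 1 <= self.partition_count <= 32:
            raise ValueError("partition count must be between 1 and 32")
        if not 1 <= self.retention_days <= 7:
            raise ValueError("retention must be between 1 and 7 days")

    def changes_requiring_recreate(self, new: "EventHubSettings") -> List[str]:
        """List settings that differ and can only be changed by re-creating the hub."""
        return [f for f in self.IMMUTABLE if getattr(self, f) != getattr(new, f)]


poc = EventHubSettings("ehub4tips2020-soda", partition_count=1, retention_days=1)
poc.validate()
bigger = EventHubSettings("ehub4tips2020-soda", partition_count=4, retention_days=7)
print(poc.changes_requiring_recreate(bigger))  # ['partition_count']
```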

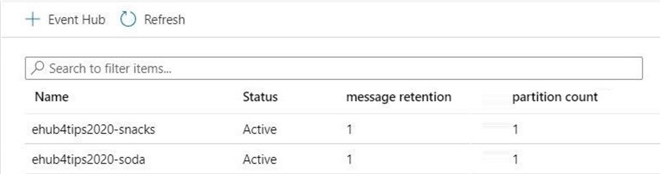

Since the Collegiate Vending company is thinking of branching out into snack machines, we want to deploy an additional event hub called ehub4tips2020-snacks. Just choose the "+ Event Hub" button on the overview page of the namespace to start the process.

The above image shows two hubs that are associated with our namespace. The next section gives an overview of the screens (blades) dedicated to managing the namespace.

Namespace Management

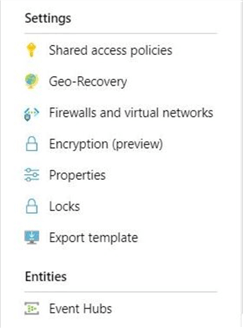

The Azure portal is where management of the namespace can be done via a graphical user interface (GUI). I am going to break the menu into three parts (images) that can be easily commented on. Not all options are required in most use cases.

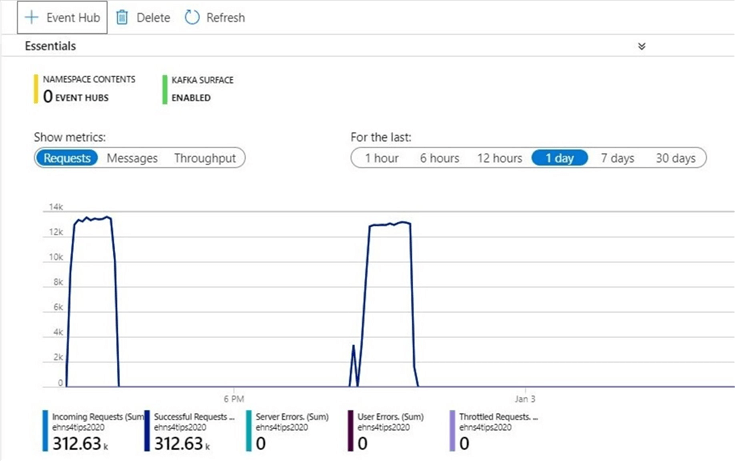

The overview menu option is the most popular destination. It brings up the current use of the namespace capacity. During the holiday break, I was doing a lot of testing of a Python program connecting to the event hub using AMQP. The results of those tests are seen in the chart below.

The access control (IAM) menu option allows for the assignment of Azure rights to users and groups. The typical rights of owner, contributor and reader can be assigned to the appropriate user or group.

The Tags menu option is useful when companies want to charge back departments for their use of the Azure subscription. Many companies add department, application, component, and cost center as descriptive tags to deployed Azure objects.

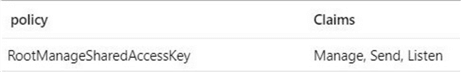

The shared access policies blade is where namespace-level security can be assigned. Be careful when handling the RootManageSharedAccessKey policy, since it has full control over the namespace and all of its event hubs.
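One way to catch an over-privileged connection string before it ships is to inspect it. Event Hubs connection strings are semicolon-separated key=value pairs (Endpoint, SharedAccessKeyName, SharedAccessKey, and, for hub-level policies, EntityPath). The sketch below parses one and flags a namespace-scoped string or the root manage policy. The key value is a placeholder, and the warning rules are only my suggestion.

```python
from typing import Dict, List


def parse_connection_string(conn_str: str) -> Dict[str, str]:
    """Split 'Key=Value;Key=Value' pairs; values (such as base64 keys) may contain '='."""
    parts: Dict[str, str] = {}
    for segment in conn_str.strip().split(";"):
        if segment:
            key, _, value = segment.partition("=")
            parts[key] = value
    return parts


def security_warnings(conn_str: str) -> List[str]:
    """Return warnings for connection strings that grant more than a single hub."""
    parts = parse_connection_string(conn_str)
    warnings = []
    if parts.get("SharedAccessKeyName") == "RootManageSharedAccessKey":
        warnings.append("uses the namespace root manage policy")
    if "EntityPath" not in parts:
        warnings.append("namespace-level policy: grants access to every hub")
    return warnings


root = ("Endpoint=sb://ehns4tips2020.servicebus.windows.net/;"
        "SharedAccessKeyName=RootManageSharedAccessKey;SharedAccessKey=<placeholder>")
print(security_warnings(root))
```

A string scoped to a single hub policy (one that includes EntityPath) produces no warnings, which is the shape you would want to hand to the soda machine application.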

One might be tempted to open the firewall menu option and add your client internet protocol (IP) address. This would be a serious mistake. Enabling this feature blocks connectivity from all other Azure services. Thus, if you want Azure Functions or Azure Stream Analytics to interact with the hub, I would not enable this setting. I have heard that a future feature on the Microsoft roadmap will make this setting behave more like Azure SQL Database, which has an "Allow Azure services" check box. I do not know when that will come out.

The event hubs menu option is a common place to be since it allows you to fine tune settings of the event hub.

The last section of the namespace menu contains options for alerts and metrics. Unless you are already using a holistic approach to monitoring the health of your Azure systems, enabling alerts on failures or high capacity is a good idea. Microsoft Operations Management Suite (OMS) is one such holistic approach to monitoring. See the documentation for details.

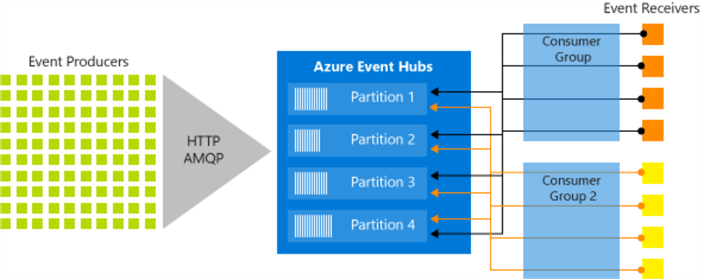

Consumer Groups

The publisher/subscriber mechanism of Event Hubs is enabled through consumer groups. A consumer group is a view (state, position, or offset) of an entire event hub. Consumer groups enable multiple consuming applications to each have a separate view of the event stream, and to read the stream independently at their own pace and with their own offsets. We will talk about offsets in a future article.
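The independence of consumer groups can be illustrated with a tiny model: every group keeps its own offset per partition, so one application reading ahead does not move another application's position. In the sketch below, `$Default` and `soda-app` mirror group names used in this article, while the `ConsumerGroups` class and the events are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


class ConsumerGroups:
    """Toy model: each consumer group tracks its own offset for each partition."""

    def __init__(self, partitions: Dict[int, List[str]]) -> None:
        self.partitions = partitions
        # offsets[group][partition] -> index of the next event to read
        self.offsets: Dict[str, Dict[int, int]] = defaultdict(lambda: defaultdict(int))

    def read(self, group: str, partition: int, max_events: int = 10) -> List[str]:
        start = self.offsets[group][partition]
        events = self.partitions[partition][start:start + max_events]
        # Advance only this group's position; other groups are unaffected.
        self.offsets[group][partition] = start + len(events)
        return events


hub = ConsumerGroups({0: ["sale-1", "sale-2", "sale-3"]})
print(hub.read("soda-app", 0, max_events=2))  # ['sale-1', 'sale-2']
print(hub.read("$Default", 0))                # ['sale-1', 'sale-2', 'sale-3']
print(hub.read("soda-app", 0))                # ['sale-3']
```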

The image below shows two consumer groups with access to the four partitions. In event processing, each application is considered a consumer. Every event hub always has a default consumer group named $Default.

Going back to our proof of concept, we might want to have one consumer group for each of our Azure Function applications. If we want to persist all messages to storage, we could create another consumer group for that effort.

Event Hub Management

The Azure portal is where management of the event hubs can be done via a graphical user interface (GUI). The overview menu option displays metrics about requests, messages, and throughput at the bottom of the page. These numbers should match the namespace metrics if only one event hub is deployed; in general, the sum of the event hub metrics should equal the container (namespace) metrics.

At the top of the page, the four most common menu actions are shown as large push buttons.

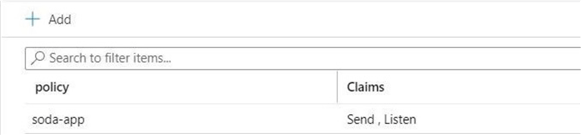

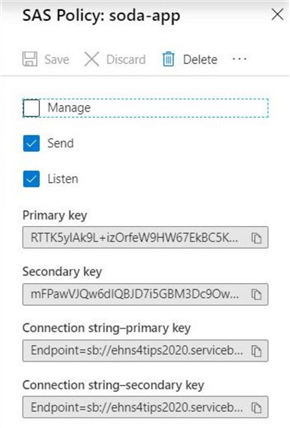

The connect menu brings the user to a screen that can be used to define an access policy. Each policy can be granted manage, send, and listen rights. The image below shows a policy for our soda-app, which has been given send and listen (write and read) rights.

Double-clicking the policy after it is defined brings up the SAS policy details. There are two different keys (primary and secondary) that can be used to connect to the event hub service, which allows one key to be regenerated while the other remains in use. While some services have different endpoints, this service lists the same endpoint twice.
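The keys shown on this screen are used to sign shared access signature (SAS) tokens. The sketch below follows the token format described in Microsoft's SAS documentation: an HMAC-SHA256 signature over the URL-encoded resource URI and an expiry time, signed with the policy key. The policy name soda-app and the hub URI come from this article, the key is a placeholder, and the `now` parameter exists only to make the example deterministic.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse
from typing import Optional


def generate_sas_token(resource_uri: str, policy_name: str, policy_key: str,
                       ttl_seconds: int = 3600, now: Optional[float] = None) -> str:
    """Build a SharedAccessSignature token for an Event Hubs resource URI."""
    issued = time.time() if now is None else now
    expiry = str(int(issued + ttl_seconds))
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    # Sign "<url-encoded uri>\n<expiry>" with the policy key.
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    digest = hmac.new(policy_key.encode("utf-8"), string_to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote_plus(base64.b64encode(digest).decode("ascii"))
    return (f"SharedAccessSignature sr={encoded_uri}&sig={signature}"
            f"&se={expiry}&skn={policy_name}")


token = generate_sas_token(
    "https://ehns4tips2020.servicebus.windows.net/ehub4tips2020-soda",
    "soda-app", "<placeholder-key>", now=1_580_000_000)
print(token)
```

Either the primary or the secondary key can sign the token, which is what makes rotating one key at a time possible.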

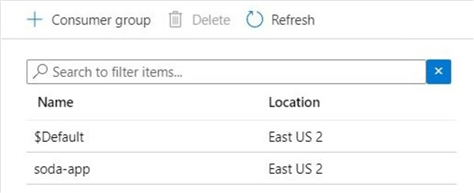

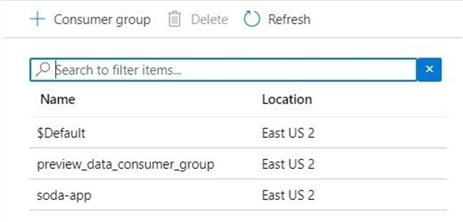

The checkpoint menu brings the user to a screen that can be used to define a consumer group. There can be at most 5 concurrent readers for a given consumer group and partition at one time. However, each hub can have up to 20 different groups. This feature allows the hub to scale to a variety of consumers. The image below shows the definition of the soda-app consumer group.
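These limits are easy to exceed when several applications share a hub. The sketch below checks a planned layout (for each consumer group, the number of readers per partition) against the figures quoted above: at most 20 consumer groups per hub and at most 5 concurrent readers per partition within a group. The limits are parameters because they depend on the tier, and the plan itself is hypothetical.

```python
from typing import Dict, List

Plan = Dict[str, Dict[int, int]]  # consumer group -> partition id -> reader count


def check_reader_plan(plan: Plan, max_groups: int = 20,
                      max_readers_per_partition: int = 5) -> List[str]:
    """Return a list of limit violations for a planned reader layout."""
    problems = []
    if len(plan) > max_groups:
        problems.append(f"{len(plan)} consumer groups exceeds the limit of {max_groups}")
    for group, partitions in plan.items():
        for partition, readers in partitions.items():
            if readers > max_readers_per_partition:
                problems.append(f"group {group!r}, partition {partition}: "
                                f"{readers} readers exceeds {max_readers_per_partition}")
    return problems


plan = {"$Default": {0: 1, 1: 1}, "soda-app": {0: 6, 1: 2}}
print(check_reader_plan(plan))
```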

The process data menu brings the user to a poorly designed web page. One of the core principles of user interface design is not to show a screen unless it is meaningful. Since this page only transfers the user to the query explorer, it could be eliminated from the design.

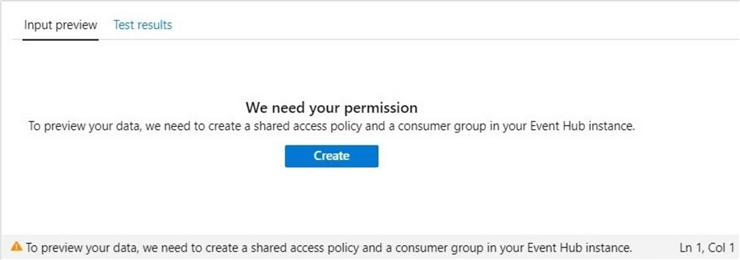

The query explorer is where we can write Stream Analytics queries to peek at the data. To enable the query explorer, the portal asks us to create a shared access policy and a consumer group. Click the Create button to complete this action.

The checkpoint menu can be used to verify that the new consumer group has been added to the event hub. The image below shows the new consumer group.

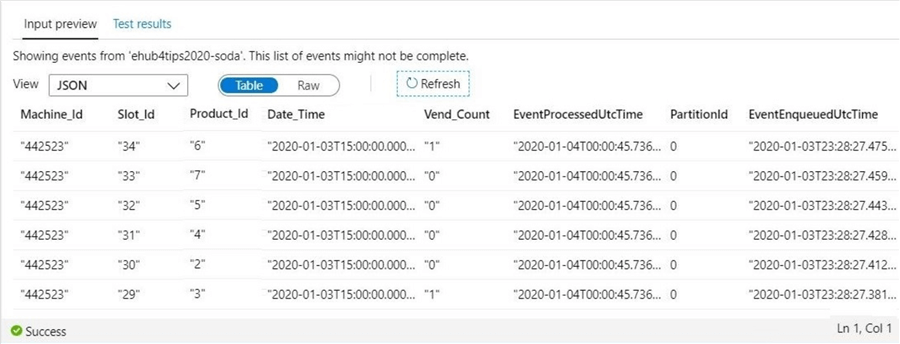

The Input preview tab shows a handful of records. If you are paying attention, you might catch the important notice "This list of events might not be complete".

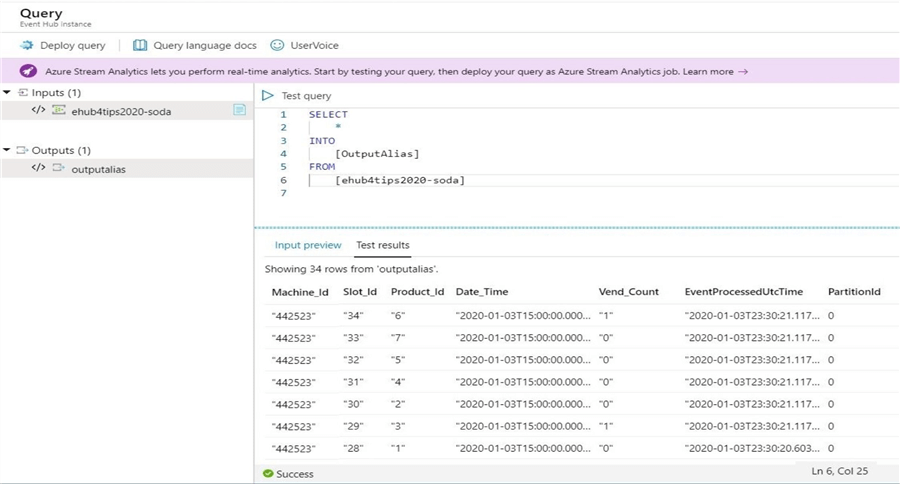

Okay, all looks good if the preview displays the top N rows from the event hub. But what happens if I run a test query? The default query selects everything from the event hub named [ehub4tips2020-soda] and returns the results as [OutputAlias]. Unfortunately, the result is disappointing: only 34 rows are displayed. See the image below for details.

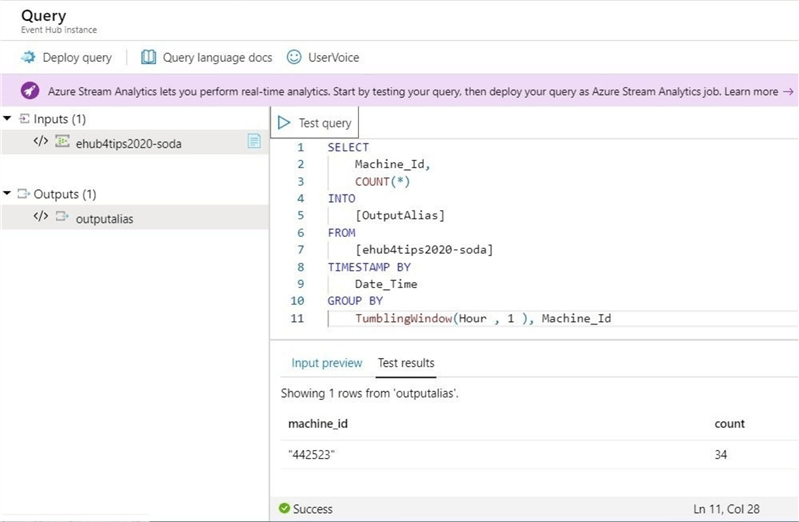

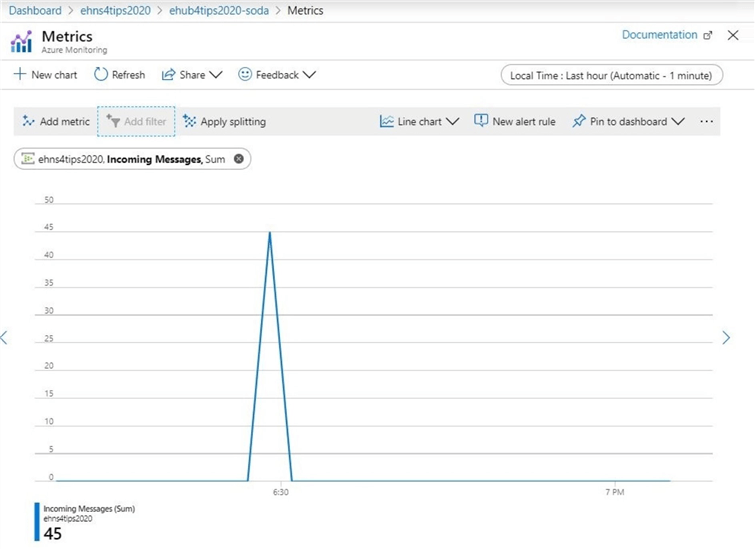

Without introducing the Stream Analytics query language, I am going to supply a query that groups by machine id over either a tumbling or a sliding window of 4 hours. That means we should see all 45 messages (events) that were sent to the event hub. Please see the result below.
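The semantics of a tumbling window can be shown without Stream Analytics syntax: each event falls into exactly one fixed, non-overlapping 4-hour bucket, and counts are grouped by machine id within each bucket. The Python sketch below models only that grouping, over a hypothetical list of timezone-aware events rather than the hub itself; it does not reproduce how Stream Analytics aligns its windows.

```python
from collections import Counter
from datetime import datetime, timezone
from typing import Iterable, Tuple

WINDOW_SECONDS = 4 * 3600  # 4-hour tumbling window


def tumbling_counts(events: Iterable[Tuple[str, datetime]]) -> Counter:
    """Count events per (machine_id, window_start) for fixed 4-hour windows.

    Timestamps are expected to be timezone-aware.
    """
    counts: Counter = Counter()
    for machine_id, ts in events:
        epoch = int(ts.timestamp())
        # Tumbling windows do not overlap: floor the timestamp to its window start.
        window_start = epoch - epoch % WINDOW_SECONDS
        counts[(machine_id, datetime.fromtimestamp(window_start, tz=timezone.utc))] += 1
    return counts


# Hypothetical events: 45 sales from two machines in the same window.
t0 = datetime(2020, 1, 10, 18, 25, tzinfo=timezone.utc)
events = [("machine-1", t0)] * 30 + [("machine-2", t0)] * 15
result = tumbling_counts(events)
print(sum(result.values()))  # 45
```

Summing the counts across all groups is a quick way to cross-check a windowed query against the total number of messages the hub reports.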

Again, we are getting the wrong result. This is where the event hub's request and message metrics are useful as a cross-check. The image below shows 45 messages being received just before 6:30 PM.

One can only conclude that the test query uses only a small subset of the data as input. This is good to know if you are counting on accurate numbers.

The terms data, messages, and telemetry are often used interchangeably when dealing with event hubs. Real-time data processing has been studied by computer scientists for a while. The Lambda architecture has two consumption lanes: speed and batch. Reading from the event hub in real time is the speed lane. The next menu option covers the batch lane.

The capture events menu allows events to be stored in either Azure Blob Storage or Azure Data Lake Storage. In a future article, I will talk about how to consume messages from Avro files in Azure Storage using Azure Data Lake, and I will cover the details behind this screen in depth then.

A capture window is defined by a time period and a file size. For instance, our sample window is 10 minutes or 100 MB. When either limit is reached, the current file is closed and a new file is opened. Unfortunately, the directory and/or file name has to use a fixed pattern. However, the data will be ready for batch consumption.
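The either-or rule for closing a capture file can be modeled directly. The hypothetical roller below receives (timestamp, size) pairs and starts a new file when 10 minutes have elapsed since the current file was opened or when the next event would push the file past 100 MB. It is a simplified illustration of the rule described above, not the service's exact algorithm; for example, it only checks the time limit when an event arrives.

```python
from dataclasses import dataclass, field
from typing import List, Optional

WINDOW_SECONDS = 10 * 60          # 10-minute time window
WINDOW_BYTES = 100 * 1024 * 1024  # 100 MB size window


@dataclass
class CaptureRoller:
    """Simplified capture model: close the file on whichever limit comes first."""
    files: List[int] = field(default_factory=list)  # sizes of closed files, in bytes
    _start: Optional[float] = field(default=None, init=False)
    _size: int = field(default=0, init=False)

    def add(self, timestamp: float, size: int) -> None:
        time_up = self._start is not None and timestamp - self._start >= WINDOW_SECONDS
        too_big = self._size + size > WINDOW_BYTES
        if self._start is not None and (time_up or too_big):
            self.files.append(self._size)   # close the current file
            self._size, self._start = 0, None
        if self._start is None:
            self._start = timestamp         # open a new file
        self._size += size


roller = CaptureRoller()
mb = 1024 * 1024
for second in range(0, 1500, 60):  # one 1 MB event per minute for 25 minutes
    roller.add(second, mb)
print([size // mb for size in roller.files])  # [10, 10]
```

With a trickle of small events the time limit closes each file; with a burst of large events the size limit takes over.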

Summary

Today, we explored how to deploy and configure both a namespace and an event hub for streaming data. It is important to choose certain parameters, such as the partition count, correctly at the start of a project, since changing them requires re-creating the objects.

We briefly covered how to read from an event hub partition. Events in a partition are kept for the configured retention period (N days), which means that readers can start from various offsets when reading the hub. In the future, I will show how to use Python to read from and write to the hub. I am choosing this language since many edge devices run Linux, and adding Python to this operating system is very simple.

The partitioned consumer model allows an application to read from or write to a particular partition. Thus, scale can be achieved through parallelism. If you have multiple applications using the same event hub, it is wise to create a separate consumer group for each application.

If we were using Event Hubs for many IoT devices, security could become unwieldy with separate policies or shared access keys for each device. That is why Microsoft suggests IoT Hub for this use case. Event Hubs has a major role to play when it comes to streaming big data. Stay tuned for more articles on how to consume this data.

Next Steps

- Write and read event hub messages with Python

- Trigger an Azure Function to store event hub messages

- Filtering or aggregating event hub data with Stream Analytics

- Capturing events to Azure Storage for batch processing

- Use Azure Data Factory to batch load telemetry data

About the author

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.

This author pledges the content of this article is based on professional experience and not AI generated.
