By: Alejandro Cobar | Updated: 2020-02-26 | Comments (1) | Related: > Restore

Problem

As SQL Server DBAs, we can all agree that backups are essential and must be managed properly so that we can be able to recover from any disaster scenario. However, there are some cases where having the backup file is simply just not enough because you might not know how much disk space you actually require for the .mdf (and any secondary files) and .ldf files until they are successfully restored. There might be also a scenario where you have the backup file in a remote location and you don’t have access anymore to the database server where the database data files reside, so you just can’t log in there and see the current size of the datafiles.

Solution

In this tip I’m going to present a set of T-SQL scripts that you can use to keep track of very useful information for full backups, along with the actual files size that these actually represent. The main idea is that you capture this information on a regularly basis (through jobs/scripts automation, or whatever method you prefer) so that you can query it and have this information available and as up to date as possible. Also, I’m covering the scenario where you have the backup file, but don’t have access to the source SQL Server instance to figure out any additional information of the database that the backup file contains.

To exemplify a particular case where this script might be handy, I’m going to create an empty new database called "LargeDB", that will have a primary .mdf file (90GB) and an .ldf file (10GB). I will also present 3 different approaches that can help you see this information, but from different "angles" so that you can pick the one you like the most.

Option 1 - List SQL Server database files sizes and backup size

With this option, you focus on the data from the databases themselves and add the size of their latest full backup to the mix (if any).

High-level explanation of the code:

- I create temp table #FreeSpace to store the amount of free space available in each database. That piece of information comes from the subtraction of the entire size of the database (found in the sys.master_files table) and the amount of space used by the database (returned by the FILEPROPERTY function).

- Since the FILEPROPERTY function returns information only from the current database that you are currently connected to, that’s why I’ve encapsulated this logic inside an sp_MSforeachdb command so that I’m able to store the information of all the databases into the #FreeSpace table for later use.

- After the execution of the sp_MSforeachdb stored procedure, I build the main query through the usage of the tables: sys.databases, sys.master_files and a subquery from the msdb.dbo.backupset (just to be able to capture the backup size of the latest full backup, if any).

- In the end, I drop the #FreeSpace table just to leave things clean.

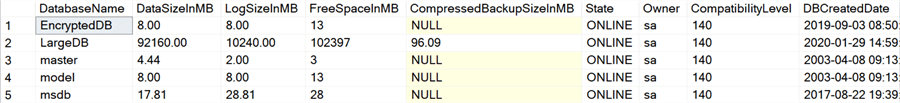

The end result looks like this:

As you can immediately see, the "LargeDB" database’s backup file is 96MB but the size of the data file is 90GB and the log file is 10GB. So, a backup of 96MB can turn into 100GB of database without you being aware of it, up until now that you have this piece of code that can raise your awareness of such important detail.

Option 1 - T-SQL script

IF OBJECT_ID('tempdb..#FreeSpace') IS NOT NULL DROP TABLE #FreeSpace

CREATE TABLE #FreeSpace([database] VARCHAR(64) NOT NULL,amount INT NOT NULL)

DECLARE @sqlCommand varchar(2048)

SELECT @sqlCommand = 'USE [?]

DECLARE @freeSpace INT

SELECT @freeSpace = SUM(size/128 -(FILEPROPERTY(name, ''SpaceUsed'')/128)) FROM sys.master_files

INSERT INTO #FreeSpace VALUES(''?'', @freeSpace)

'

EXEC sp_MSforeachdb @sqlCommand

SELECT DISTINCT

d.name AS 'DatabaseName',

(SELECT CONVERT( DECIMAL(10,2),SUM(size)*8.0/1024)

FROM sys.master_files

WHERE type_desc = 'ROWS'

AND database_id = mf.database_id

GROUP BY database_id) AS 'DataSizeInMB',

(SELECT CONVERT(DECIMAL(10,2),SUM(size)*8.0/1024)

FROM sys.master_files

WHERE type_desc = 'LOG'

AND database_id = mf.database_id

GROUP BY database_id) AS 'LogSizeInMB',

(SELECT amount

FROM #FreeSpace

WHERE [database] = d.name) AS 'FreeSpaceInMB',

CONVERT(DECIMAL(10,2),b.compressed_backup_size/1024.0/1024.0) AS CompressedBackupSizeInMB,

d.state_desc AS 'State',

suser_sname(d.owner_sid) AS 'Owner',

d.compatibility_level AS 'CompatibilityLevel',

d.create_date AS 'DBCreatedDate'

FROM sys.databases d

JOIN sys.master_files mf ON d.database_id = mf.database_id

LEFT JOIN (

SELECT bs.compressed_backup_size,bs.database_name

FROM msdb.dbo.backupset bs

WHERE bs.backup_set_id IN (SELECT backup_set_id FROM msdb.dbo.backupset WHERE backup_start_date = (SELECT MAX(backup_start_date) FROM msdb.dbo.backupset WHERE database_name = bs.database_name))

) AS b ON b.database_name = d.name

WHERE d.name NOT IN ('tempdb')

ORDER BY d.name

DROP TABLE #FreeSpace

Option 2 - List SQL Server database files sizes and backup size

This option approaches the problem from the backup’s information angle.

High-level explanation of the code:

- The goal of the CTE called MostRecentBackups is precisely that, to build a result set that contains the most recent full backups logged in the msdb, with the respective date when that backup actually finished.

- With that information, we can now build a new CTE called BackupsWithSize so that we can add the rest of the backup’s information like: size of the backup, how many days ago did that last full backup occurred, the path where that backup is located, etc.

- After building the result set that contains the backups information, I simply adhere the information of the size of the data file and the log file to the final result set, which looks like this:

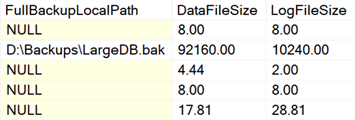

I’ve split it in 2 screenshots due to the wideness of the result set.

As you can see, this result set is centered around relevant information for the full backups, but it is being complemented with the actual data size that the backup file really represents. So, for the "LargeDB" database, we are seeing the exact same 90GB for the data file and 10GB for the log file (the same as with the option 1).

Option 2 - T-SQL Script

WITH

MostRecentBackups

AS(

SELECT

database_name AS [Database],

MAX(bus.backup_finish_date) AS LastBackupTime,

CASE bus.type

WHEN 'D' THEN 'Full'

END AS Type

FROM msdb.dbo.backupset bus

WHERE bus.type <> 'F'

GROUP BY bus.database_name,bus.type

),

BackupsWithSize

AS(

SELECT

mrb.*,

(SELECT TOP 1 CONVERT(DECIMAL(10,2), b.compressed_backup_size/1024/1024) AS backup_size FROM msdb.dbo.backupset b WHERE [Database] = b.database_name AND LastBackupTime = b.backup_finish_date) AS [Backup Size],

(SELECT TOP 1 DATEDIFF(s, b.backup_start_date, b.backup_finish_date) FROM msdb.dbo.backupset b WHERE [Database] = b.database_name AND LastBackupTime = b.backup_finish_date) AS [Seconds],

(SELECT TOP 1 b.media_set_id FROM msdb.dbo.backupset b WHERE [Database] = b.database_name AND LastBackupTime = b.backup_finish_date) AS media_set_id

FROM MostRecentBackups mrb

)

SELECT

d.name AS [Database],

d.state_desc AS State,

bf.LastBackupTime AS [LastFull],

DATEDIFF(DAY,bf.LastBackupTime,GETDATE()) AS [TimeSinceLastFullInDays],

bf.[Backup Size] AS [FullBackupSizeInMB],

bf.Seconds AS [FullBackupSecondsToComplete],

CASE WHEN DATEDIFF(DAY,bf.LastBackupTime,GETDATE()) > 14 THEN NULL ELSE (SELECT TOP 1 bmf.physical_device_name FROM msdb.dbo.backupmediafamily bmf WHERE bmf.media_set_id = bf.media_set_id AND bmf.device_type = 2) END AS [FullBackupLocalPath],

(SELECT CONVERT(DECIMAL(10,2),SUM(size)*8.0/1024) AS size FROM sys.master_files WHERE type = 0 AND d.name = DB_NAME(database_id)) AS DataFileSize,

(SELECT CONVERT(DECIMAL(10,2),SUM(size)*8.0/1024) AS size FROM sys.master_files WHERE type = 1 AND d.name = DB_NAME(database_id)) AS LogFileSize

FROM sys.databases d

LEFT JOIN BackupsWithSize bf ON (d.name = bf.[Database] AND (bf.Type = 'Full' OR bf.Type IS NULL))

WHERE d.name <> 'tempdb' AND d.source_database_id IS NULL

ORDER BY d.name

Option 3 - Get Size of SQL Server Database Files from Backup File

This option approaches the problem from the backup file alone, meaning that you only have the backup file and absolutely nothing else.

High-level explanation of the code:

- We are going to approach the problem by using the command "RESTORE FILELISTONLY", so that we can extract the size information directly from the file.

- The "RESTORE FILELISTONLY" command returns a result set with the information we want, but to be able to store this inside a temporal table and work with the data, we are going to execute the command, but as a parameter of an EXEC call.

- There is 1 important detail to keep in mind:

- Starting from SQL Server 2016, Microsoft added 1 additional column to the result set returned by the FILELISTONLY command (SnapshotUrl), so I decided to create the definition of the temporal table depending on the SQL Server version the script will be executed at. That way the end user won’t experience any fuzzy errors due to columns mismatch in the definition of the table.

Option 3 - TSQL Script

-- enter the path and file name of the backup

DECLARE @filename nvarchar(500) = 'C:\Temp\test.bak'

IF NOT EXISTS (SELECT * FROM dbo.sysobjects where id = object_id(N'RestoreFilelistOnly') and OBJECTPROPERTY(id, N'IsTable') = 1)

BEGIN

SET NOCOUNT ON;

DECLARE @sqlCommand NVARCHAR(2048);

IF(

(SELECT

CASE

WHEN CONVERT(VARCHAR(128), SERVERPROPERTY ('PRODUCTVERSION')) LIKE '8%' THEN 0

WHEN CONVERT(VARCHAR(128), SERVERPROPERTY ('PRODUCTVERSION')) LIKE '9%' THEN 0

WHEN CONVERT(VARCHAR(128), SERVERPROPERTY ('PRODUCTVERSION')) LIKE '10.0%' THEN 0

WHEN CONVERT(VARCHAR(128), SERVERPROPERTY ('PRODUCTVERSION')) LIKE '10.5%' THEN 0

WHEN CONVERT(VARCHAR(128), SERVERPROPERTY ('PRODUCTVERSION')) LIKE '11%' THEN 0

WHEN CONVERT(VARCHAR(128), SERVERPROPERTY ('PRODUCTVERSION')) LIKE '12%' THEN 0

WHEN CONVERT(VARCHAR(128), SERVERPROPERTY ('PRODUCTVERSION')) LIKE '13%' THEN 1

WHEN CONVERT(VARCHAR(128), SERVERPROPERTY ('PRODUCTVERSION')) LIKE '14%' THEN 1

WHEN CONVERT(VARCHAR(128), SERVERPROPERTY ('PRODUCTVERSION')) LIKE '15%' THEN 1

ELSE 1

END

) = 0

)

SET @sqlCommand = '

CREATE TABLE ##RestoreFilelistOnly (

[LogicalName] NVARCHAR(128),

[PhysicalName] NVARCHAR(260),

[Type] CHAR(1),

[FileGroupName] NVARCHAR(128),

[Size] NUMERIC(20,0),

[MaxSize] NUMERIC(20,0),

[FileID] BIGINT,

[CreateLSN] NUMERIC(25,0),

[DropLSN] NUMERIC(25,0),

[UniqueID] UNIQUEIDENTIFIER,

[ReadOnlyLSN] NUMERIC(25,0),

[ReadWriteLSN] NUMERIC(25,0),

[BackupSizeInBytes] BIGINT,

[SourceBlockSize] INT,

[FileGroupID] INT,

[LogGroupGUID] UNIQUEIDENTIFIER,

[DifferentialBaseLSN] NUMERIC(25,0),

[DifferentialBaseGUID] UNIQUEIDENTIFIER,

[IsReadOnly] BIT,

[IsPresent] BIT,

[TDEThumbprint] VARBINARY(32)

)'

ELSE

SET @sqlCommand = '

CREATE TABLE ##RestoreFilelistOnly (

[LogicalName] NVARCHAR(128),

[PhysicalName] NVARCHAR(260),

[Type] CHAR(1),

[FileGroupName] NVARCHAR(128),

[Size] NUMERIC(20,0),

[MaxSize] NUMERIC(20,0),

[FileID] BIGINT,

[CreateLSN] NUMERIC(25,0),

[DropLSN] NUMERIC(25,0),

[UniqueID] UNIQUEIDENTIFIER,

[ReadOnlyLSN] NUMERIC(25,0),

[ReadWriteLSN] NUMERIC(25,0),

[BackupSizeInBytes] BIGINT,

[SourceBlockSize] INT,

[FileGroupID] INT,

[LogGroupGUID] UNIQUEIDENTIFIER,

[DifferentialBaseLSN] NUMERIC(25,0),

[DifferentialBaseGUID] UNIQUEIDENTIFIER,

[IsReadOnly] BIT,

[IsPresent] BIT,

[TDEThumbprint] VARBINARY(32),

[SnapshotUrl] NVARCHAR(360)

)'

EXEC sp_executesql @sqlCommand;

INSERT INTO ##RestoreFilelistOnly EXEC('RESTORE FILELISTONLY FROM DISK = ''' + @filename + '''')

SELECT PhysicalName, CONVERT(DECIMAL(10,3),(Size/1024/1024)) as FileSizeMB, CONVERT(DECIMAL(10,3),(BackupSizeInBytes/1024/1024)) as BackupSizeMB

FROM ##RestoreFilelistOnly

DROP TABLE ##RestoreFilelistOnly

END

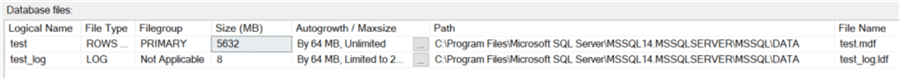

I have created an empty database called test, with an .mdf file of 5.5GB and an .ldf of 8MB. After executing a full backup to test the above script, here’s its output:

As you can see, the information matches exactly with the current size of my test database:

Next Steps

- It probably is a very good idea to choose option 1 or 2 and schedule it in a job and store the results in a specific table (for later analysis).

- If you take a quick peek at some of my articles, you will see that I like to capture different result sets for a bunch of instances, through PowerShell automation, so I think this would be a good fit to capture the results either from option 1 or 2, for all the instances under your care, and save it in a centralized database for post-analysis.

- Option 3 aims to address the scenario where you have only the backup file and know absolutely nothing about the database, and don’t have access to the source SQL Server instance to figure out any additional information.

- Now that you have this information, next time you try to restore a full backup that "seems small" but failed due to a lack of available disk space, you will have the knowledge to know exactly why that happened and how much space you really need to succeed.

- Also, it would be useful to run the code in option 3 before you do a restore on another server. The backup file might look small, but the restored database might be much larger so it would be useful to know this before you begin the restore process to ensure you have plenty of free disk space.

About the author

Alejandro Cobar is an MCP, SQL Server DBA and Developer with 10+ years of experience working in a variety of environments.

Alejandro Cobar is an MCP, SQL Server DBA and Developer with 10+ years of experience working in a variety of environments.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2020-02-26