By: John Miner | Updated: 2020-09-04 | Comments | Related: > Azure

Problem

Many companies are moving new and existing workloads to the Azure cloud. Larger companies have been traditionally purchasing commercial off the self (COTS) database software due to the fact that someone from Microsoft or Oracle will provide support when an unexpected situation occurs. Smaller companies have been using open source databases and operating systems to reduce the reoccurring software costs. However, the added maintenance hours of such systems eventually add up to money elsewhere. The current trend in the market is to take advantage of a cloud provider's Platform as a Service (PaaS) database to reduce the time to deploy and maintain such databases.

How can we deploy a MySQL database in the Azure Cloud?

Solution

Microsoft announced the general availability of Azure Database for MySQL in April of 2018. Please see this blog post for the details. The delivery of this service was made possible by leveraging existing innovations in Azure. The MySQL service is managed using the fabric and the infrastructure that Microsoft's Azure SQL Database service has been built on since 2010. Of course, the service has all the bells and whistles including built-in high availability, a 99.99% availability SLA, elastic scaling for performance, and industry leading security and compliance.

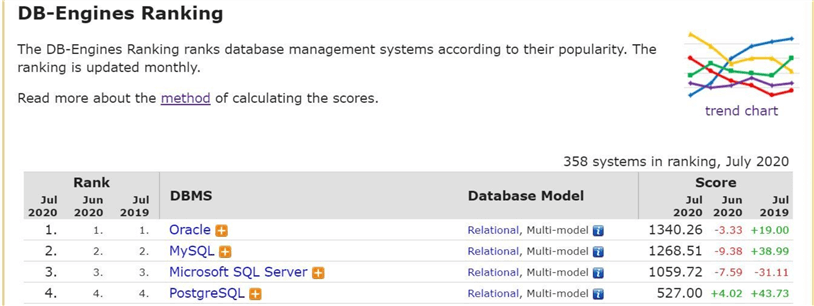

One might question Microsoft's foray into the open source database market, but it makes perfect sense given the chart below from DB-Engines. This means that Microsoft has 3 out of the 4 top databases as a Platform as a Service (PaaS) offering. That is called market share.

Business Problem

Our boss has asked us to investigate the Azure Database for MySQL server. We have several on premises databases with medium workloads. The code within the databases complies with the ANSI standard of the structure query language (SQL). Therefore, migrating the databases to the cloud might not require major work.

It is best to choose a simple problem that can be solved on our local laptop and in the cloud. This will to create a performance comparison between various services. This proof of concept will use the math database schema which calculates prime numbers from 1 to N. In our case, we want to know how many primes numbers exist between 1 and 5 million. We want to execute 20 asynchronous jobs to accomplish this task as fast as possible.

The rest of the article will show you how to deploy an Azure Database for MySQL, install tools to manage the database, restore an existing sample database and create the new math database. Comparing this PaaS service to other services will tell us how fast it is.

Azure Portal deployment

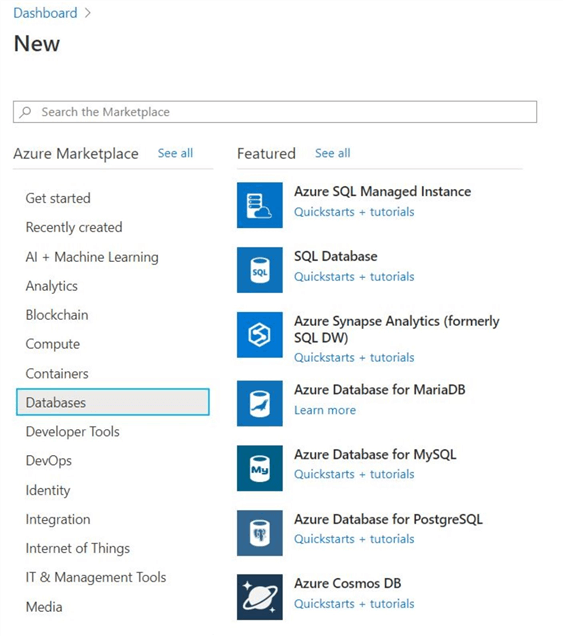

I am going to assume you are familiar with deploying objects from the Azure Portal. Please select the create a resource option from the main menu. If we search the Azure Marketplace for databases, we get the following list. Three open source databases are shown in the image below: MySQL, MariaDB and PostgreSQL. Please choose MySQL to continue.

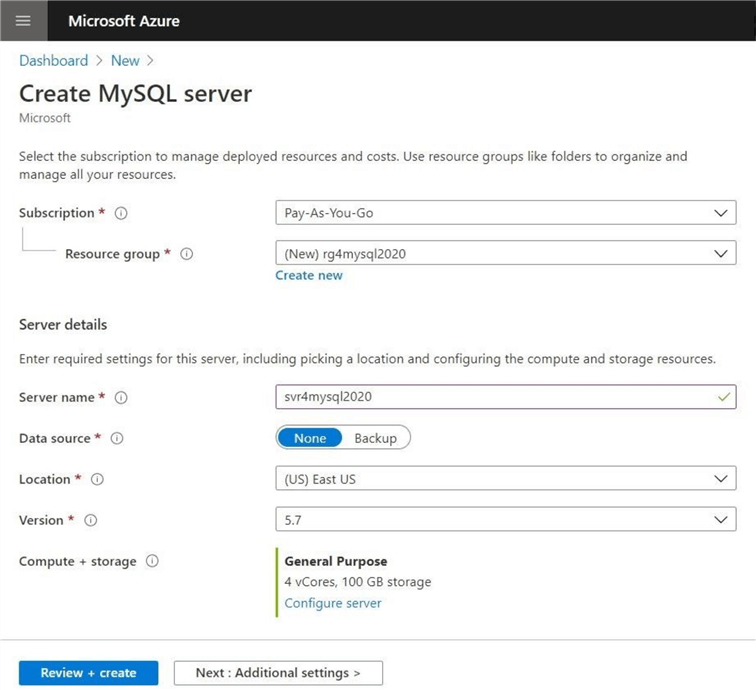

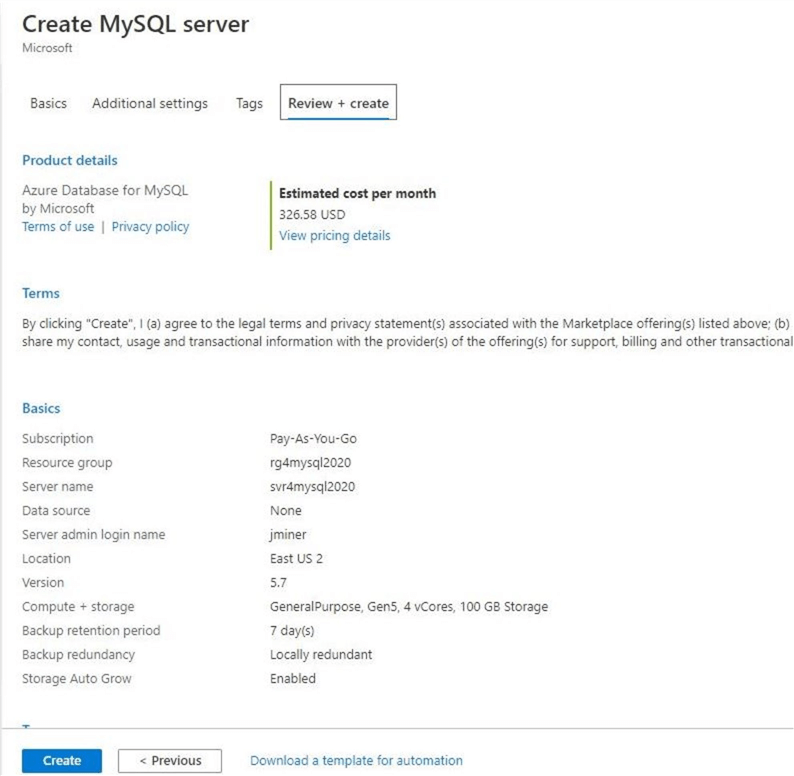

I am choosing to create a new resource group named rg4mysql2020 to house my database. The name of the database server will be called svr4mysql2020, and it will be in the East US data center. The data source option allows you to create a server from a backup or create a blank server (none). Please take the default choice. If your open source application software is certified for a certain release number, please use the dropdown to make the change at this time. The compute and storage option allows you to configure the resources available to the server.

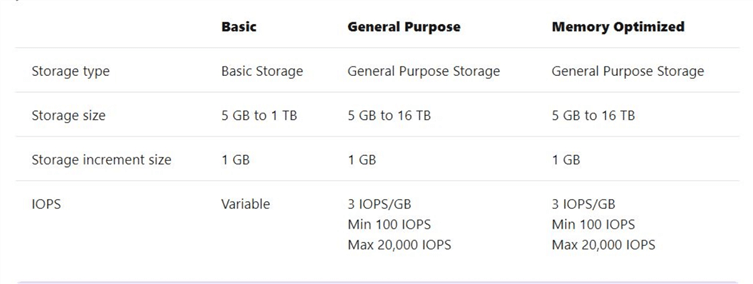

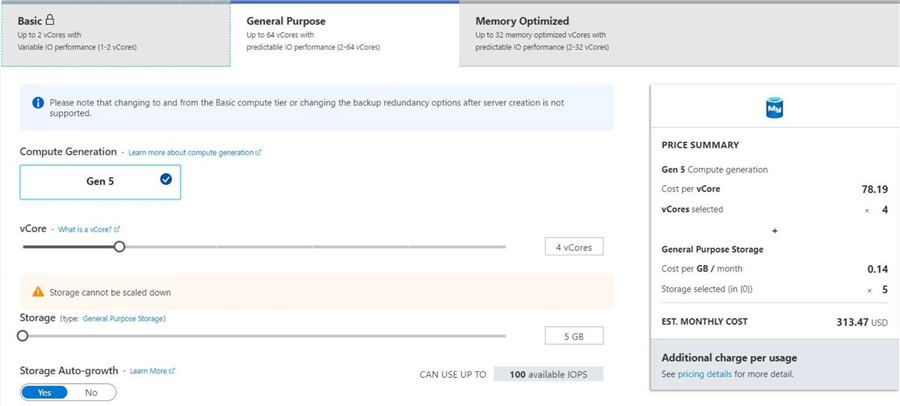

Just like other databases, there are three different tiers that can be selected: basic, general purpose and memory optimized. The table below shows the difference in storage size and IOPS for each tier.

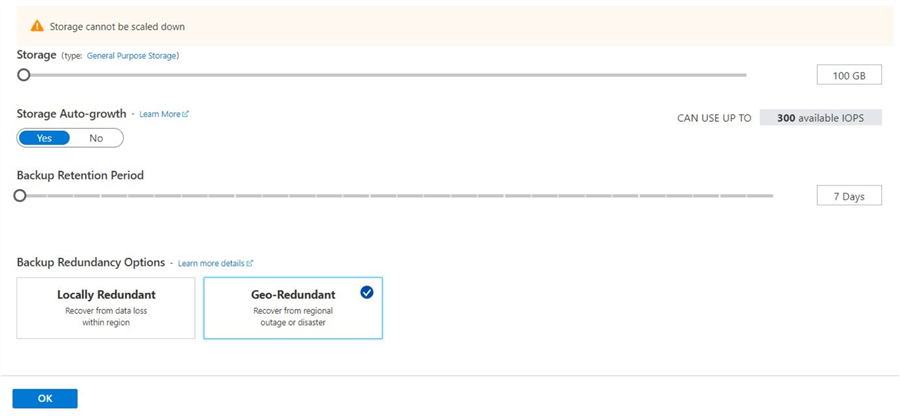

There is a direct relationship between storage size and IOPS. The larger the storage, the more IOPS are allocated to the database server. Unlike Azure SQL database, storage is pre-allocated. Once storage is scaled up, it can't be scaled down. The default retention period is set to 7 days. I suggest you adjust this setting to the maximum value of 35 days for any production grade server.

The last step in any Azure object deploy is reviewing the selections chosen by the user. We are also presented with an estimated monthly cost for this server which is $327.

To recap, the deploy of an Azure Database for MySQL is very easy to do in the portal. Just double check the selections before pushing the create button. Make sure you record the admin login name and password for later use.

Installing the client tools

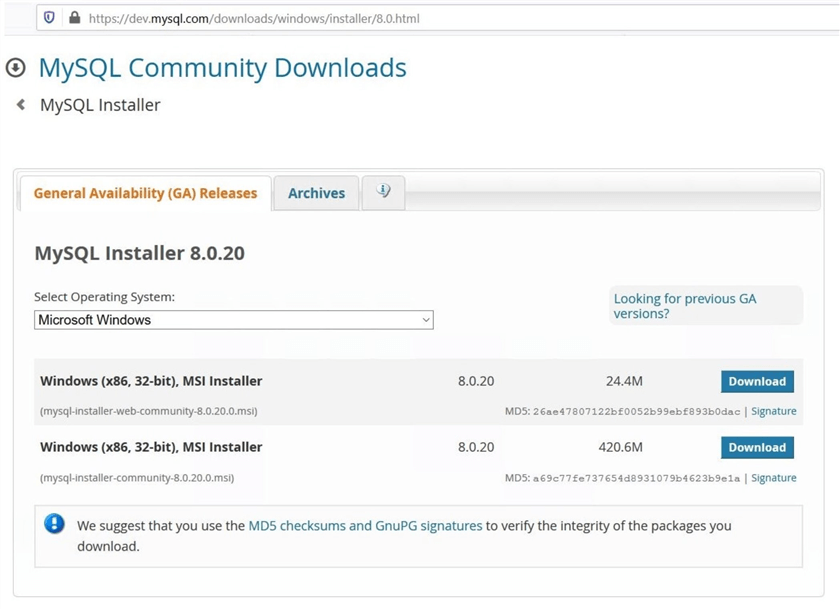

The MySQL Community downloads webpage contain many different installers. Please select the installer for windows using the correct hyperlink. If you did not already know, Oracle owns the MySQL brand. There is a commercial version of the product that is available for a fee. I choose to download the full install for the free community edition.

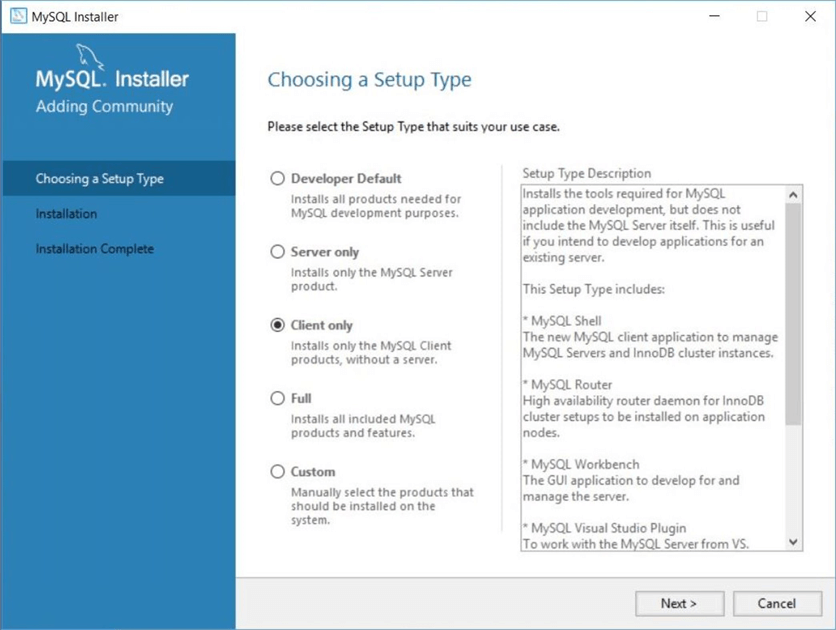

Please double click the installer to start the process. Since our MySQL server is in the Azure cloud, we just want to download the client tools only. Click the next button to continue.

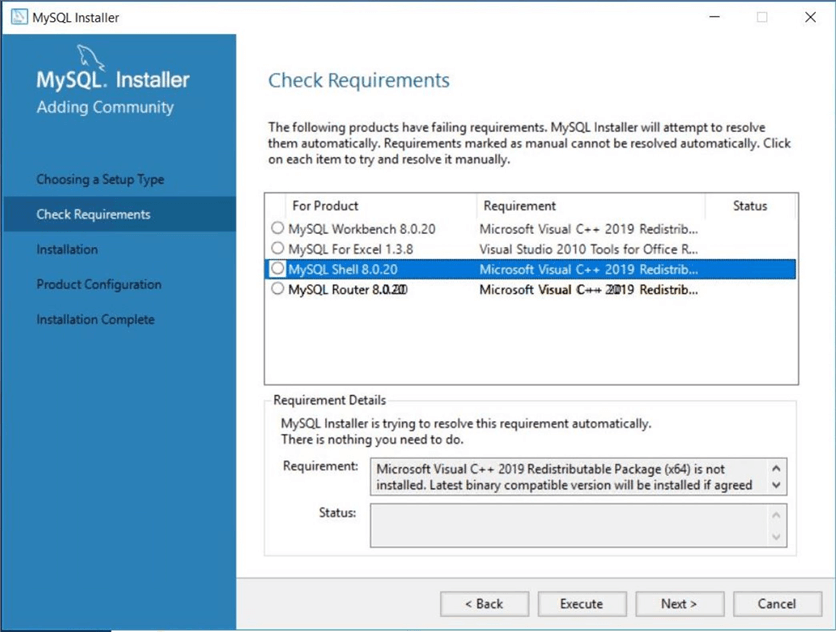

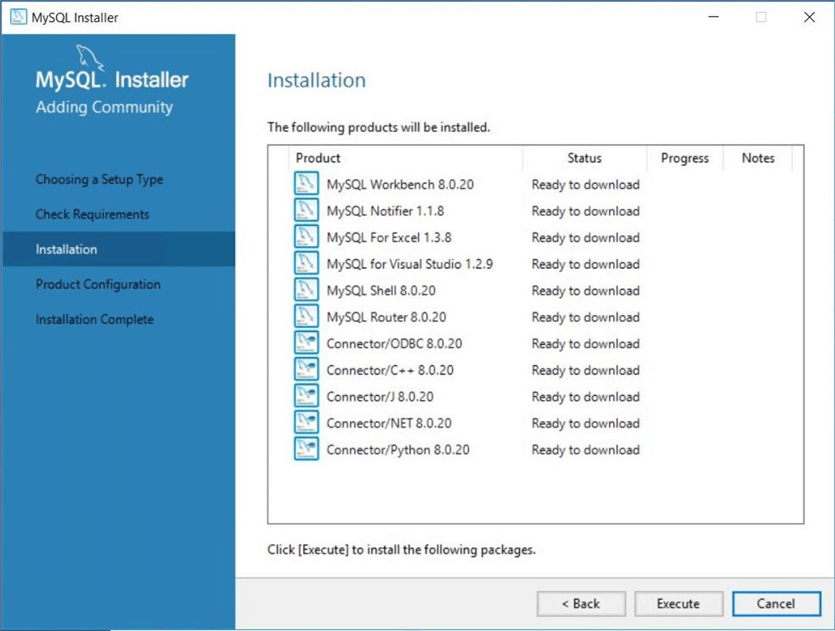

The MySQL Workbench is the equivalent of SQL Server Management Studio. Each of these products will be installed as a single group. Any missing dependencies such as a runtime engine will be installed as a prerequisite. Click the execute button to start the process.

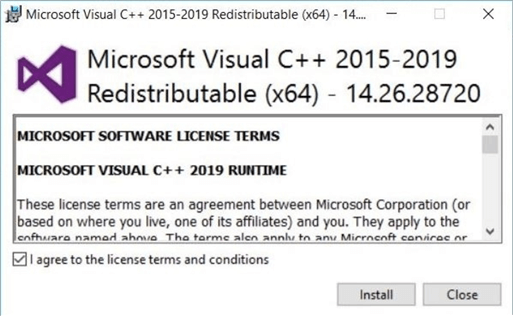

The Visual C++ runtime was missing from my Windows 10 virtual machine that I deployed to the Azure cloud. The image below shows that this prerequisite is being installed now.

Finally, click the execute button after all requirements have been satisfied. The MySQL Workbench should show up in the start up menu. We will be configuring this tool in the next section to work with our cloud database server.

Configuring the workbench

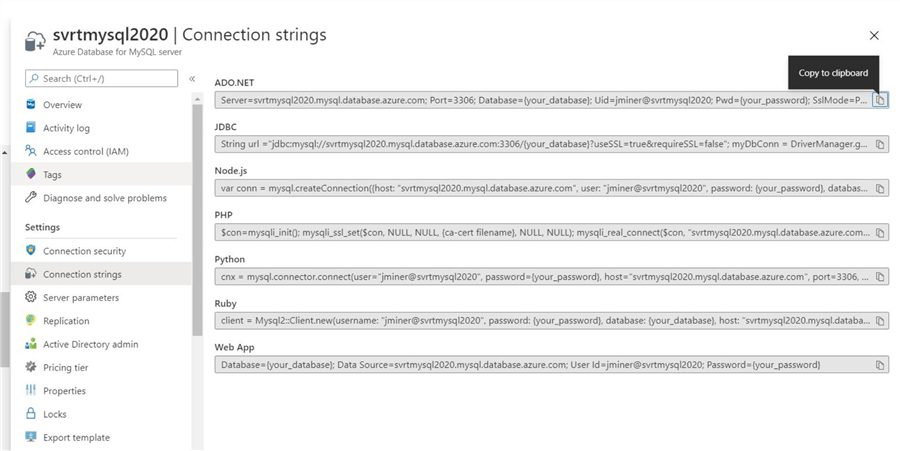

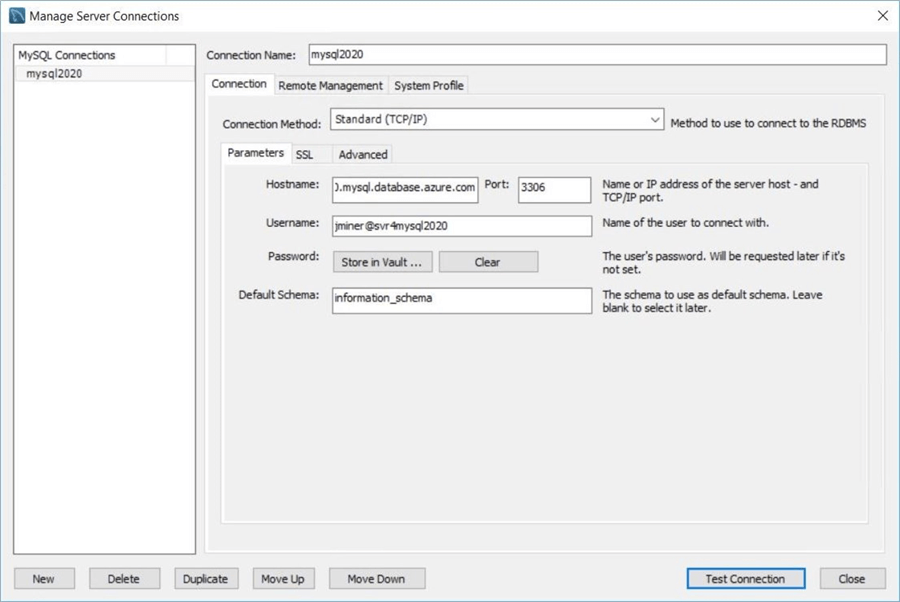

The Azure Database for MySQL uses a unique fully qualified name and port number. The easiest way to get this information is to copy it from the connection strings screen in the Azure Portal. I usually paste this into my favorite editor, notepad++ to parse out the required information.

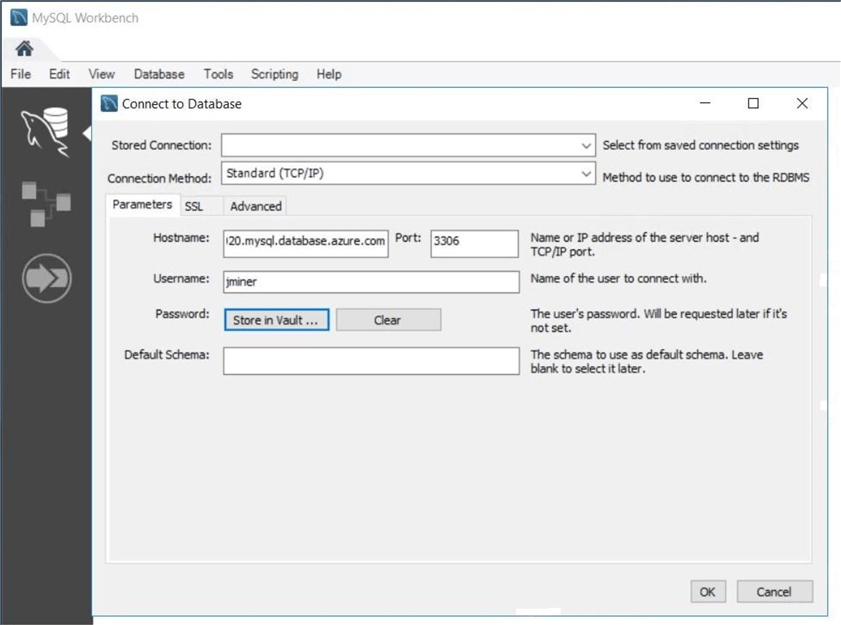

Now launch the MySQL Workbench application and choose the connect to database menu option. Enter the host name, port, username and password.

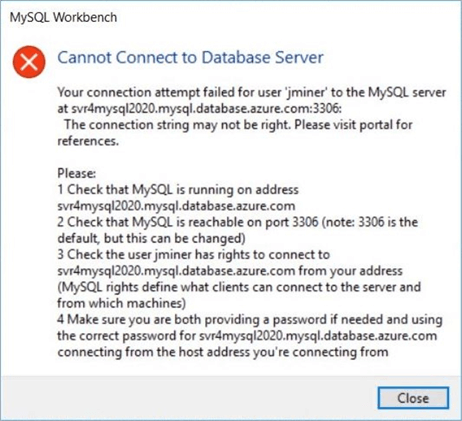

The first error will show up now after clicking okay. The Azure environment is always secure with a firewall rule. Did we add a rule for this service so that my laptop can access the database server?

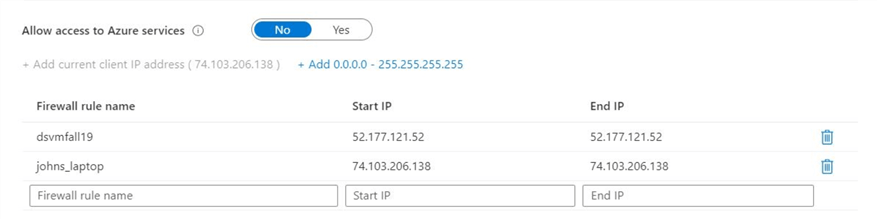

Under the connection security menu in the Azure portal, add any firewall rules for computers that might use this database server. I am going to add two computers to the service at this time. Please see the image below for details.

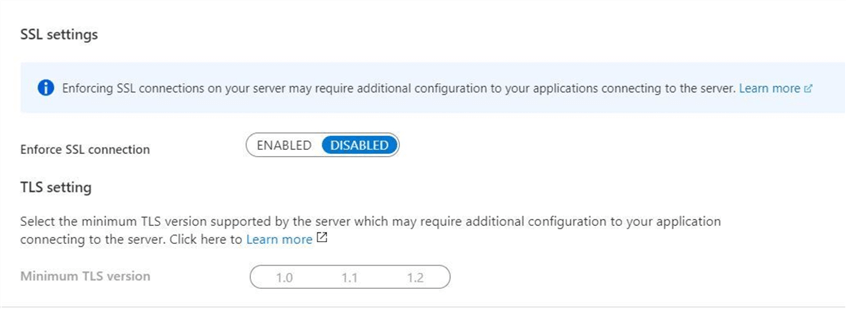

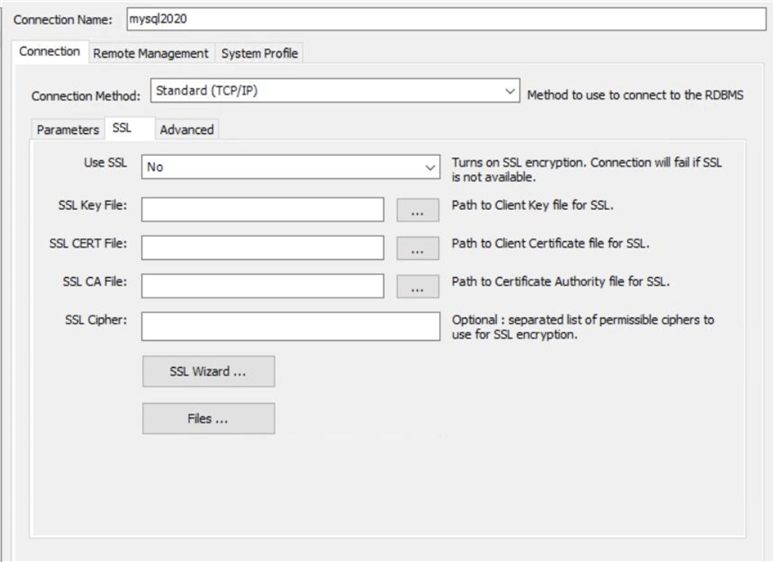

The next error that will occur when you try to complete the connection is that fact that SSL is not setup for the MySQL Workbench application. The quickest way to fix this problem is to temporarily allow non SSL connections to exist. We will fix this issue later by configuring SSL for the Workbench application.

The last error that you will encounter on your first connection to the database server is the format of the username. Unlike SQL Server, the name needs to be post fixed with the @ symbol followed by the server name. The image below shows the final connection configuration before a successful connection is made.

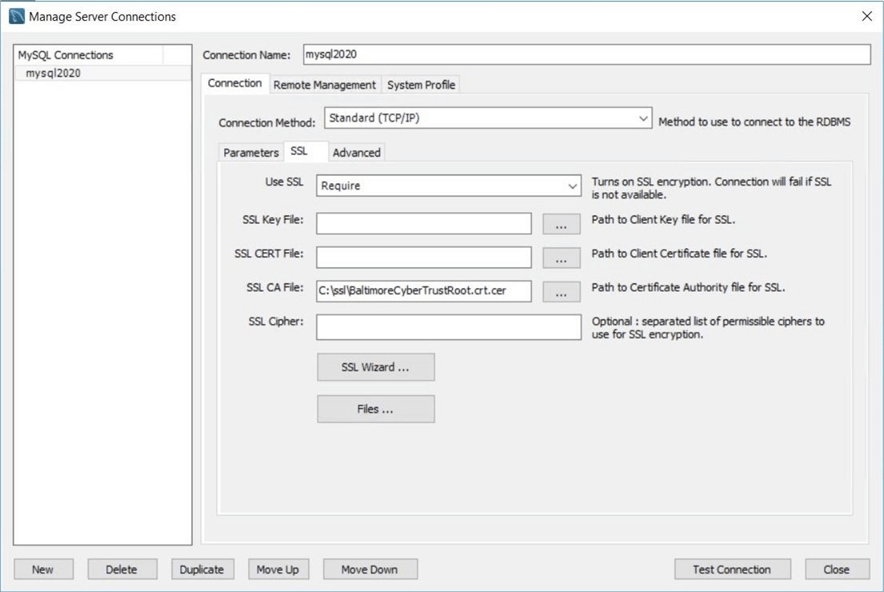

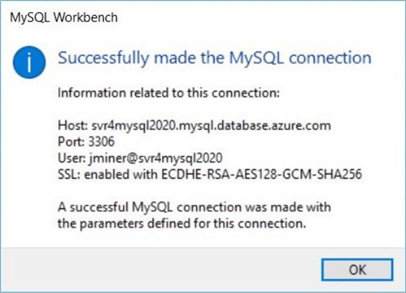

If you hit the Test Connection button at this time, a success message will show. However, we are not using security to connect to the database. Let's enable SSL right now. Please change the option from NO to REQUIRE security using SSL.

You will need to download the certificate authority file for Azure for MySQL at this time. The details are in the online documentation that Microsoft provides. I choose to store my certificate in a directory under the C drive.

The image below shows a successful connection to the database service using SSL. I suggest you go back to the connection security screen in the Azure portal and enforce the use of SSL for connections at this time. The next task is to deploy the math schema to our newly built database server.

Deploying the Math database

The math schema has been our baseline for testing performance of different database services in the cloud. However, there are several changes that need to be made for this code to work with MySQL. I will be highlighting code snippets to point out the most important differences that were found.

First, I suggest you start using the semicolons to end all statements. Also, a carriage return (CR) is considered default delimiter or GO command in the Workbench editor. Second, the user who creates the database is not automatically give rights to the database. The GRANT statement seen below gives the administrative account rights to the math database for the jminer admin account.

-- Delete existing database DROP DATABASE IF EXISTS MATH; -- Add new database? CREATE DATABASE IF NOT EXISTS MATH; -- Grant access GRANT ALL PRIVILEGES ON MATH.* TO 'jminer'@'%';

Third, the MySQL engine supports many different storage engines. The Azure service only supports the INNODB and MEMORY engines. Please note, a restart of the MEMORY engine will result in an empty table. Thus, there is no durability of the data. Please see this Microsoft article for details. My favorite engine is the BLACKHOLE in which nothing escapes this storage format! Any SELECT statement always returns an empty result set. The code below uses the ENGINE clause of the CREATE TABLE statement to store data to disk.

-- Delete existing table DROP TABLE IF EXISTS MATH.TBL_PRIMES; -- Add new table CREATE TABLE MATH.TBL_PRIMES ( MY_VALUE BIGINT NOT NULL, MY_DIVISION BIGINT NOT NULL, MY_TIME DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP, PRIMARY KEY CLUSTERED (MY_VALUE ASC) ) ENGINE = INNODB;

So far, the syntax changes have not been that bad. That is about to change. When dealing with multi line code in a stored procedure, we will be introducing a new concept not in T-SQL. Since a carriage return is considered end of statement, we must change the delimiter so that the query parser does not try to evaluate the first line of the stored procedure as the whole program. The DELIMITER statement allows the developer to use another set of characters to signal end of statement. Also, the DEFINER clause of the CREATE PROCEDURE statement indicates who owns the stored procedure. The use of local variables does not require the name to be prefixed with a @ character. However, session level variables should be defined using that notation. Certain control constructs are defined differently in MySQL as shown below. Please take a look at the WHILE construct which is different than T-SQL. Finally, variable assignments can have a colon before the equals sign. The purpose of this article is not to teach the MySQL programming language. However, you should be able to point your developers to the online documentation for MySQL. After defining the procedure, reset the DELIMITER back to a semi-colon.

-- Delete existing procedure

DROP PROCEDURE IF EXISTS MATH.SP_STORE_PRIMES;

-- Set block statement (chars)

DELIMITER //

-- Create the stored procedure from scratch

CREATE DEFINER = 'jminer'@'%' PROCEDURE MATH.SP_STORE_PRIMES (IN VAR_ALPHA BIGINT, IN VAR_OMEGA BIGINT)

BEGIN

-- DECLARE VARIABLES

DECLARE VAR_CNT1 BIGINT;

DECLARE VAR_RET1 INT;

-- SET VARIABLES

SET VAR_RET1 := 0;

SET VAR_CNT1 := VAR_ALPHA;

-- CHECK EACH NUMBER FOR PRIMENESS

WHILE (VAR_CNT1 <= VAR_OMEGA)

DO

-- ARE WE PRIME?

CALL MATH.SP_IS_PRIME (VAR_CNT1, VAR_RET1);

-- FOUND A PRIME

IF (VAR_RET1 = 1) THEN

INSERT INTO MATH.TBL_PRIMES (MY_VALUE, MY_DIVISION)

VALUES (VAR_CNT1, SQRT(VAR_CNT1));

END IF;

-- INCREMENT COUNTER

SET VAR_CNT1 := VAR_CNT1 + 1;

END WHILE;

END //

// Reset block statement (char)

DELIMITER ;

Enclosed is the updated schema for the MATH database to work correctly on MySQL. Open a new query window, paste in the code as text and execute. This should create a new MATH database. Just like Oracle, MySQL considers a database and a schema as the same object. Thus, a given server can have multiple schemas (databases). The information_schema is supplied for users who do not want to learn the base system tables. This same schema also exists in SQL Server. The code below can be used to store primes numbers from 2 to 100K. Paste the code in a new query window and execute the code.

/* Test routine - sp_store_primes() */ SET @VAR_START := 2; SET @VAR_END := 100000; CALL MATH.SP_STORE_PRIMES (@VAR_START, @VAR_END);

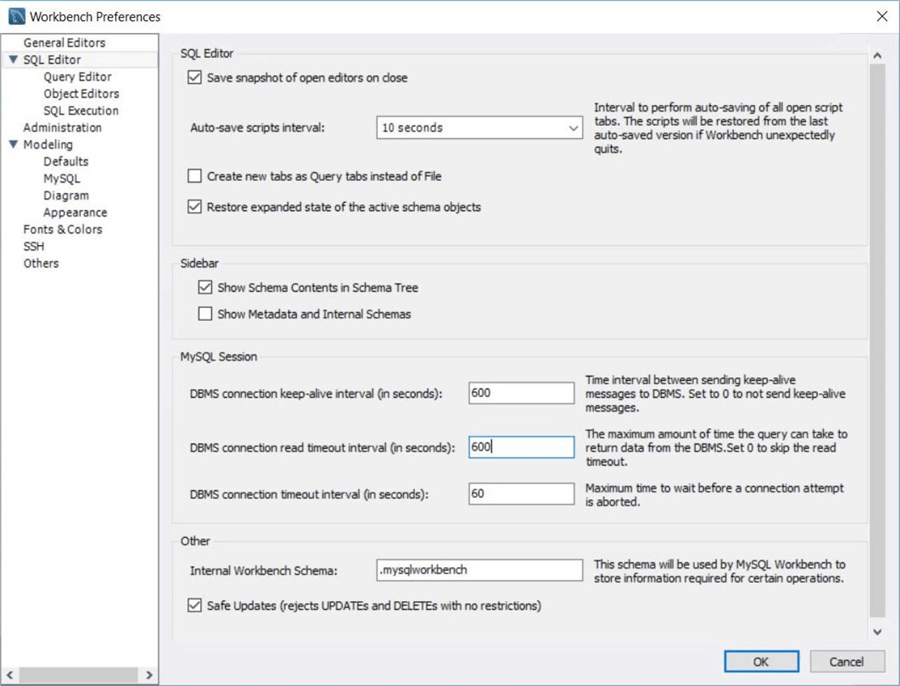

I encountered a query timeout when I executed this code. This error will show up as a lost connection. Find the Workbench preferences menu and increase the timeout to 10 minutes or 600 seconds.

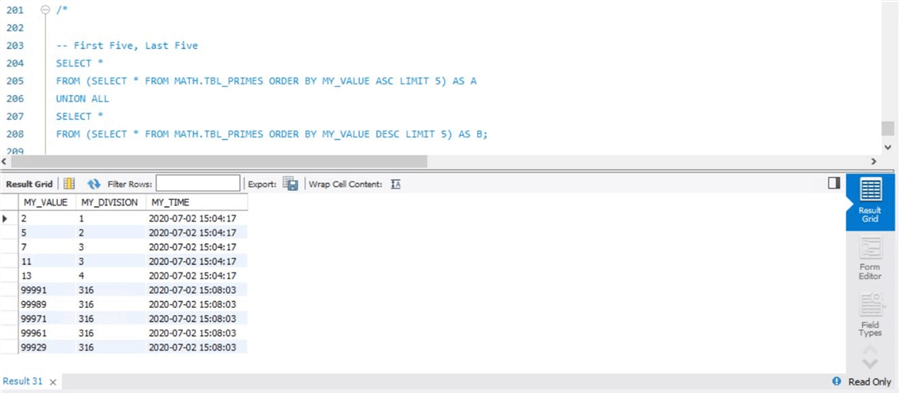

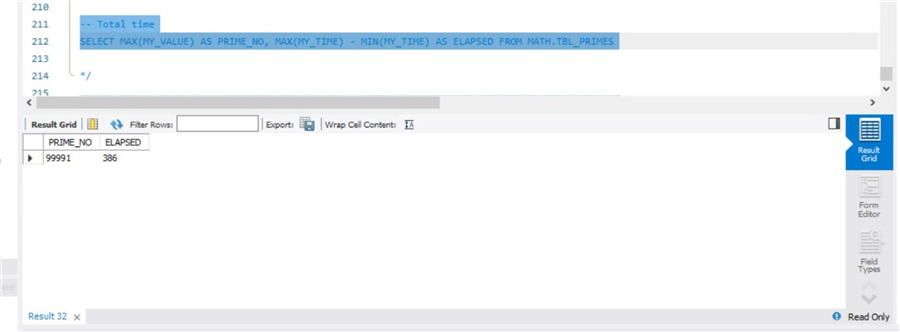

The code below shows the top 5 and bottom 5 primes numbers found in our search. A UNION ALL operator can be used to join two query results in SQL Server. However, in MySQL a derived table needs to be used before unioning the result sets. Also, the TOP (N) clause is supported in TSQL. But in MySQL, the LIMIT clause can be used to reduce the final result set.

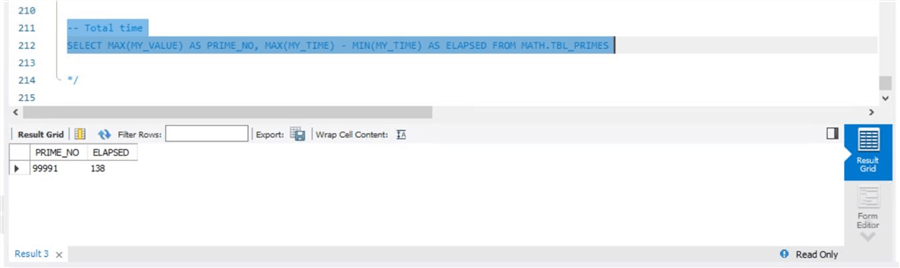

The image below shows the elapsed time for MySQL to calculate the prime numbers between 2 and 100 K. It took over 6 minutes to accomplish this task. That is quite slow. We will work on improving these numbers in the next section.

In a nutshell, databases that are migrated from other database systems will need a lot of work when it comes to compiled code. Standard ANSI constructs such as CREATE TABLE and CREATE VIEW will have little syntax changes. One thing I do not like about the community edition of the Workbench is the fact that only one connection can run at a time. Thus, if you are running a query in one window, you can't execute any commands until that command finishes.

Increasing Storage Size

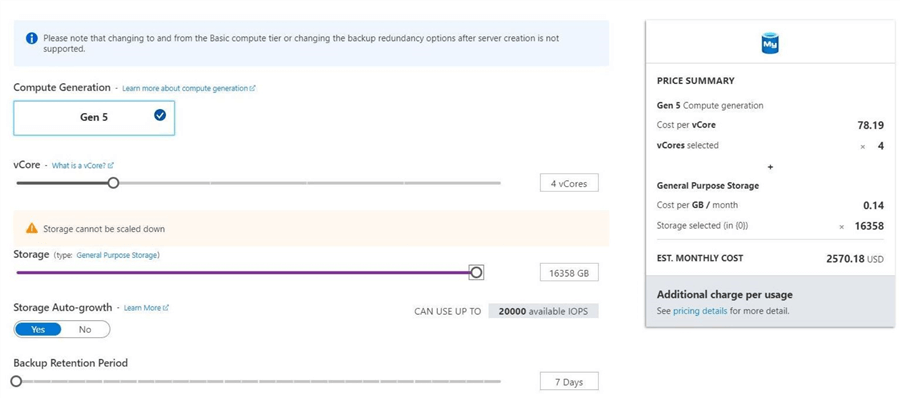

In a previous section, it was noted there was a direct correlation between storage space and IOPS. We will max out the disk space in attempt to decrease the execution time. The default storage space is 5 GB with the performance of 100 IOPS. Let's change that configuration now.

If we specify a 16 TB disk during deployment, the total cost increases from $313 to $2570. However, the number of IOPS increases to 20K. I ran disk speed utility on my 3-year-old laptop that has an SSD drive. The laptop has IOPS between 9K and 5K depending on whether a read or write action is being performed.

If we deploy the math database schema and execute the same search for prime numbers, we receive the following results shown in the image below. The execute time went from 386 to 138 seconds which is a 64.25% decrease in time. However, the cost went from $313 to $2570 which is an increase in cost of 721%.

It has been experimentally proven that larger disks reduce the total time to process a workload. This is due to the fact that more IOPS are available for these larger disks. As an end user, you need to decide if the increase in cost is worth the decrease in time spent on a problem.

Importing a database

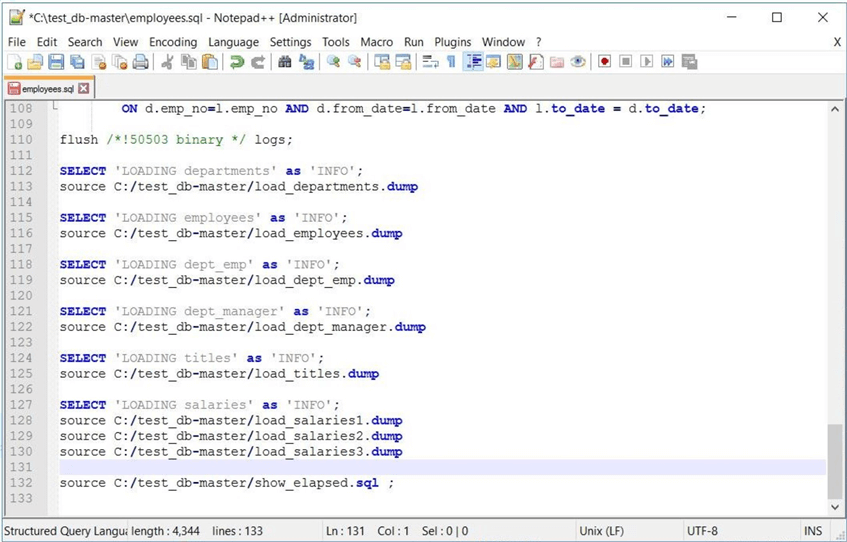

The Employees sample database is used by students learning the MySQL database. The original SQL dump can be found on GitHub. The modified SQL dump file is enclosed. Since the Azure service only supports two engines, the default SQL script had to be changed to use the INNODB engine. Also, the last few lines of the file had to be changed match the location of the scripts. Please note that MySQL was initially developed for Linux and will expect forward instead of back slashes for directory paths. The image below shows changes being made to the SQL dump file.

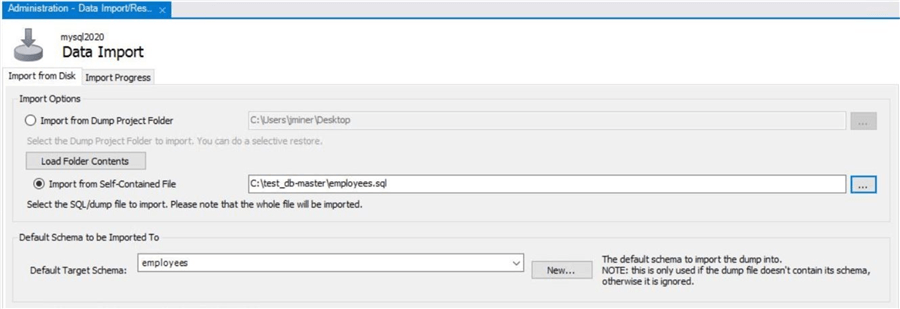

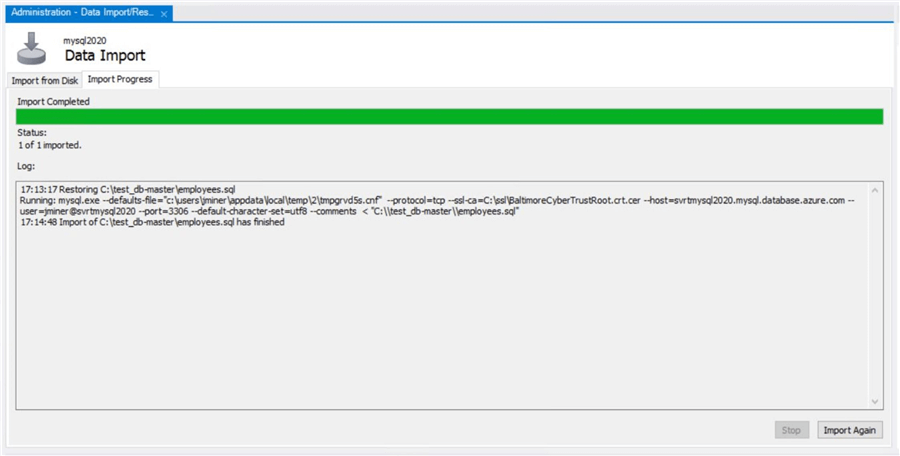

Now, please start the data import process using the Workbench application. Search for the "employees.sql" file in the "c:\test_db-master\" directory. Start the import process now.

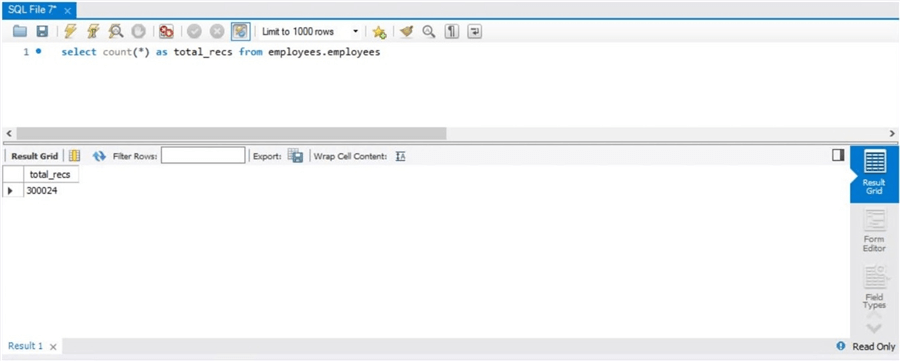

The output window should show the status of the import. Upon completion, we can get a record count from one of the tables in the database (schema) by executing a query.

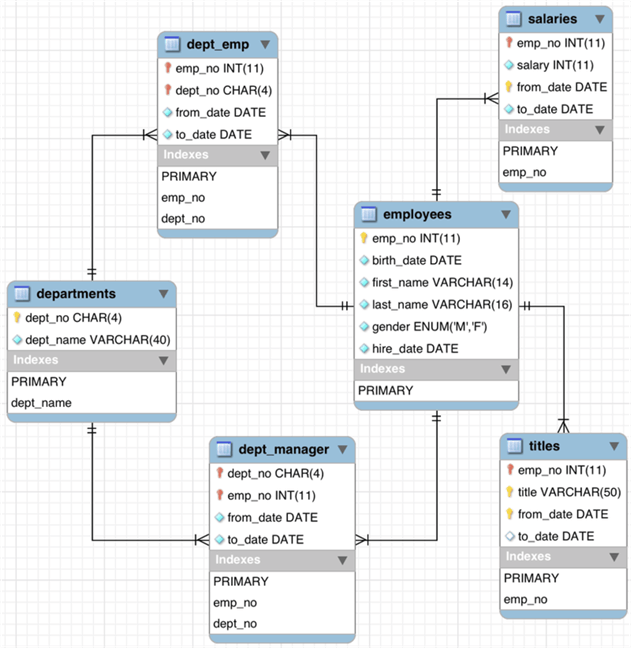

If you look around for the employees sample database on the web site, you will find the entity relationship diagram (ERD) for the schema. There are 6 simple tables in this sample database.

I chose to get the record count from the main employee table. This table contains a little over 300K records.

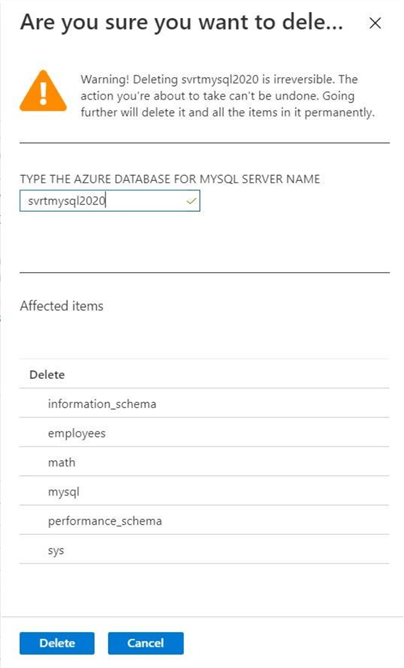

If you are curious, perform a mock delete of the Azure Database for MySQL service. A full list of database schemas will be shown in the Azure portal. These are the standard (system) schemas that are deployed with the database server.

In summary, database SQL dumps can be used to transfer databases to and from on-premises.

Database Bakeoff

The batch file that was used to create 20 different asynchronous calls to the Azure SQL database in the past needs to be modified for Azure database for MySQL. In the Workbench directory, there should be the mysql command line utility. Just supply the correct values for host, user and password. Use the DOS pipe command to redirect the SQL text file as input.

REM

REM Calculate primes numbers <= 5 M asynchronously.

REM

CD "C:\Program Files\MySQL\MySQL Workbench 8.0 CE"

FOR /L %%A IN (1,1,20) DO (

start cmd /c "mysql --host=svrtmysql2020.mysql.database.azure.com --user=jminer@svrtmysql2020 --password=7mEJu77tbgqR5x9S < c:\primes\calculate-prime-numbers.sql"

)

The MySQL language does not support the OUPUT clause. Therefore, a new design had to be created. If we use an auto increment column, the LAST_INSERT_ID function can be used to calculate the search range. The code below searches for primes numbers in blocks of 250K.

--

-- Insert control card record.

-- Use id to determine search range.

-- Call sp to find prime numbers.

--

INSERT INTO MATH.TBL_CONTROL_CARD (MY_COMMENT) VALUES ('Look for prime numbers.');

SET @VAR_START := (LAST_INSERT_ID() - 1) * 250000;

SET @VAR_END := @VAR_START + 250000 - 1;

CALL MATH.SP_STORE_PRIMES (@VAR_START, @VAR_END);

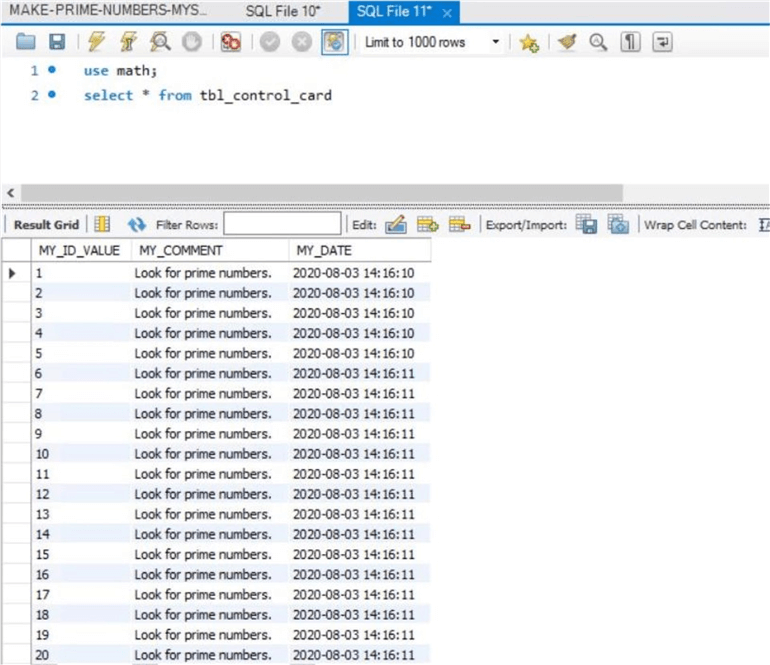

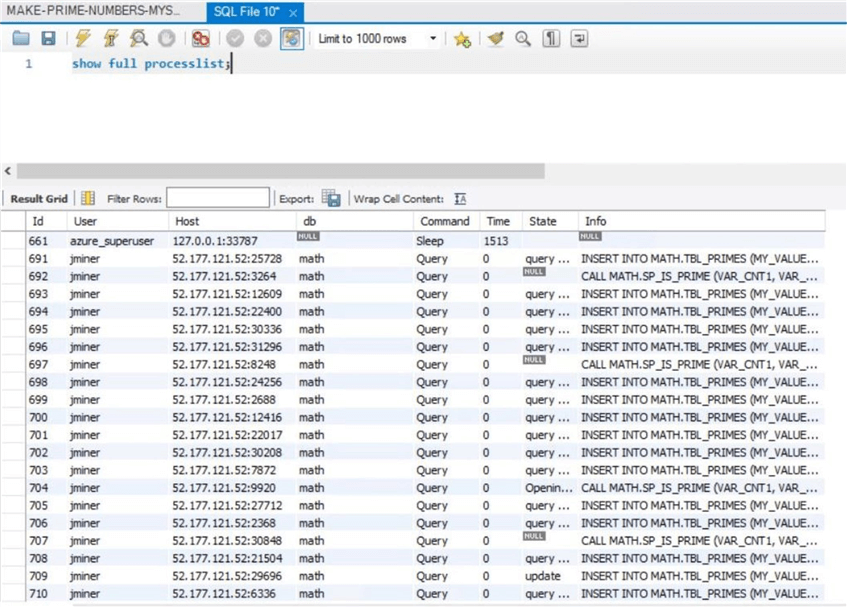

The image below shows all twenty threads have written to the control card table.

The show full process list command is similar in nature to sp_who2. At most, four sessions will be running at a time since we only have four virtual cores. The running sessions are shown with the information field like "CALL MATH_SP_IS_PRIME".

I bumped up the fixed storage to 500 GB which equates to 1500 IOPS. This is a little more than GENERAL purpose but less than BUSINESS CRITICAL tiers for Azure SQL Database. Please see the limits article for details. I executed the batch program for a math database schema that uses the INNODB storage engine and recorded the results for the first test. Next, I dropped the math database and recreated the database schema using the MEMORY engine. Last but not least, I executed the batch program and record the results for the second test. In the next section, we will see how the service compares to previous tests that have been performed with SQL Server.

Bakeoff Results

Most cloud services are using the fifth generation Intel processors are based on the Haswell architecture. Each database deployed with a GEN5 v-core is hyper-threaded, has a lower memory to core ratio, uses a non-volatile memory express controller for solid state drives, and has network acceleration. The total number of cores is capped at 2, 64, and 32 depending on the selected tier (basic, general purpose or memory optimized).

The table below shows a summary of PERFORMANCE testing comparing SQL Server vs MySQL in various configurations. I am purposely leaving out the BUSINESS CRITICAL tier since the price point is a lot more expensive than the other services. Again, our use case for the database is a light to medium workload with 500 GB or less of data. The top half results were executed in the past. The bottom half results were discovered for this article.

| Test No | Description | Configuration | Compute (4 cores) | Time (secs) |

|---|---|---|---|---|

| 1 | Local Laptop | WIN 10 / SQL DB 16 | GEN6 | 235 |

| 2 | Provisioned General Purpose | AZURE SQL DB | GEN5 | 426 |

| 3 | Serverless General Purpose | AZURE SQL DB | GEN5 | 437 |

| 4 | Provisioned General Purpose | AZURE SQL MI | GEN5 | 387 |

| 5 | Provisioned General Purpose | AZURE MYSQL – INNODB | GEN5 | 1260 |

| 6 | Provisioned General Purpose | AZURE MYSQL – MEMORY | GEN5 | 1515 |

| 7 | Provisioned Memory Optimized | AZURE MYSQL – INNODB | GEN5 | 1314 |

| 8 | Provisioned Memory Optimized | AZURE MYSQL – MEMORY | GEN5 | 1597 |

Many projects sponsored by the business line are on a tight budget. Therefore, presenting different options to the business line allows for an informed decision. Most clients will be curious about how price correlates to performance. The chart below shows some ESTIMATED PRICING pulled from the Azure pricing calculator.

| Test No | Description | Configuration | Compute (4 cores) | Cost ($) |

|---|---|---|---|---|

| 1 | Local Laptop | WIN 10 / SQL DB 16 | GEN6 | $1200 / once |

| 2 | Provisioned General Purpose | AZURE SQL DB | GEN5 | $794 / month |

| 3 | Serverless General Purpose | AZURE SQL DB | GEN5 | $160 / month |

| 4 | Provisioned General Purpose | AZURE SQL MI | GEN5 | $791 / month |

| 5 | Provisioned General Purpose | AZURE MYSQL – INNODB | GEN5 | $436 / month |

| 6 | Provisioned General Purpose | AZURE MYSQL – MEMORY | GEN5 | $436 / month |

| 7 | Provisioned Memory Optimized | AZURE MYSQL – INNODB | GEN5 | $543 / month |

| 8 | Provisioned Memory Optimized | AZURE MYSQL – MEMORY | GEN5 | $543 / month |

It is surprising to note that the general purpose tier has better performance timings the memory optimized tier. Furthermore, the INNODB engine calculated prime numbers faster than the MEMORY engine. This discovery does not make sense since only the schema is durable in this offering. I can only assume that these results are related to the code base for the COMMUNITY edition of the database server. I hope that a faster code base exists for the PRODUCTION edition of the database server. However, this will not help the current version of the Azure Service. To summarize, I would try different workloads and v-Core configurations to see if these results are constant regardless of the situation.

Summary

Microsoft has taken a bold step by adding open sources databases to their service offering. This is good news for consultants that work on a variety of projects. Many older HADOOP projects used MySQL to store configuration information related to batch processing. Some companies are moving the map reduce jobs written in Java to Azure Databricks using Scala. Having this database service reduces the time to market and eliminates the need for another virtual machine – Infrastructure as a Service (IaaS).

The cost of Azure Database for MySQL is priced at the bottom of the list for provisioned servers. Given the performance results, I would always choose the General Purpose tier of the Azure Database for MySQL service. When comparing the MySQL to SQL Server database service, there is estimated increase of 245 to 300 percent in performance with an increase of 46 to 82 percent in cost per month.

It is nice to see that there are several open source databases are offered as a service in Azure. This may reduce the time to migrate an application from on premises to in cloud. Please check the performance of service for your workload to make sure the performance meets the expectations of the business users. I cannot wait to look at the PostgreSQL offering. This database engine has been slowly gaining market share. I am curious how this server stacks up to the others in terms of performance. If you like this article, please stay tuned for my next open source exploration.

Next Steps

- Investigating the Azure database service for PostgreSQL

- Investigating the Azure database service for MariaDB

- Deploying the new open source databases with PowerShell cmdlets

- Check out these other Azure articles

About the author

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2020-09-04