By: Koen Verbeeck | Updated: 2020-09-15 | Comments | Related: > Azure Integration Services

Problem

We have an Integration Services project deployed to Azure Data Factory, where we use an Azure-SSIS Integration Runtime to run the packages. Is it possible to execute packages from a command line on a local machine? We would for example use an on-premises scheduler to schedule the execution.

Solution

You can migrate your Integration Services (SSIS) project to the Azure cloud by using an Azure-SSIS Integration Runtime, which is configured and maintained in Azure Data Factory (ADF). Typically, you schedule and execute your SSIS packages using an ADF pipeline, but sometimes there’s the need to start the execution of a package from an on-premises server (to be clear, the actual execution is still in Azure). For example, when you have a hybrid scenario where you combine on-premises and cloud ETL components, but you only want one scheduler.

In an on-premises scenario, you use the utility DTEXEC to execute SSIS packages from the command line (or from any tool able to start an executable). For the cloud, a new version of DTEXEC was created: the Azure-enabled DTEXEC. Although not explicitly mentioned in the documentation, it seems you can only use the Azure-enabled DTEXEC for packages in the package deployment model. You can find more information about this deployment model in Azure in the tip Migrate a Package Deployment Integration Services Project to Azure.

How to use Azure-enabled DTEXEC

Configuration

The regular DTEXEC utility comes with the installation of SQL Server or the Integration Services service. For the Azure-enabled version, we need one of the later versions of SSMS installed on the machine (version 18.3 or later). Before we can use the DTEXEC, we need to specify how it can connect to the various components in Azure.

In SSMS, go to Tools > Migrate to Azure > Configure Azure-enabled DTExec.

In the configuration menu, there are several items that need to be provided.

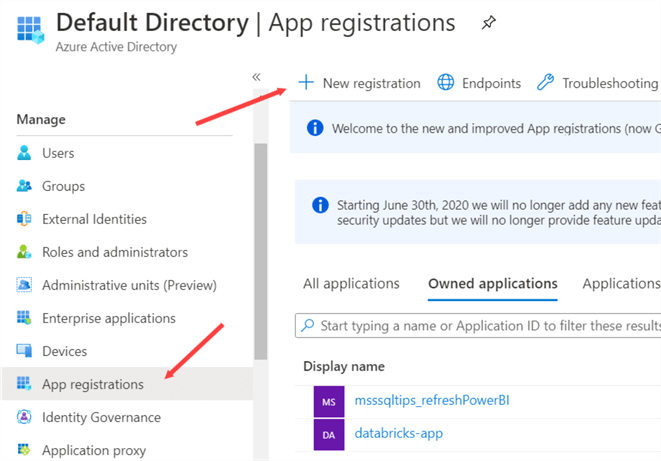

The first section, Azure AD Authentication, needs information for an Azure AD App that will create pipelines in ADF on your behalf. Let’s create such an app. In the Azure Portal, go to Azure Active Directory. Go to App Registrations and create a new registration.

Give the app a name, choose the supported account types (the default – single tenant – is fine) and specify a redirect URL. You can enter any valid URL.

To be able to authenticate to the app, a client secret (also known as authentication key) must be created. Go to the Certificates & Secrets section and click on New client secret to create one.

You can specify a description and choose when it expires:

The client secret is created. Be sure to copy the value, as we need it later on.

Next, we need to give this app registration the necessary permissions to create pipelines in ADF. Still in the Azure Portal, navigate to Azure Data Factory and to the access control section. Go the Role assignments and add a new one.

Assign the app to the contributor role:

You should see the app listed in the list of role assignments:

We can now provide the necessary values for the Azure-enabled DTEXEC config. You can find the app ID and the tenant ID in the overview page of the app registration we created earlier:

For the Azure-SSIS IR, you need to fill in the name of your Azure Data Factory, the name of the Integration Runtime (using Azure Files as the method for storing packages, which is needed for the package deployment model), the resource group, the ID of your subscription (which can be found in the Azure Portal) and the length of the hash which is appended to the name of each automatically created pipeline.

If you want SSIS to log, you can provide a UNC path and the user credentials needed to access this path. In the example, the logs are written to the same Azure Files storage account. We also need to specify the user credentials needed to access the location of the SSIS package. You will also need to specify a logging level, which are the same as the logging levels used in the SSIS catalog (even though we’re not using the project deployment model).

The actual location is provided as an execution parameter when the DTEXEC utility is executed. In this case, the user credentials for the SSIS package location and the log location are the same. The tip Migrate a Package Deployment Integration Services Project to Azure goes into more detail how you can configure the package and log location for the package deployment model.

Executing a Package

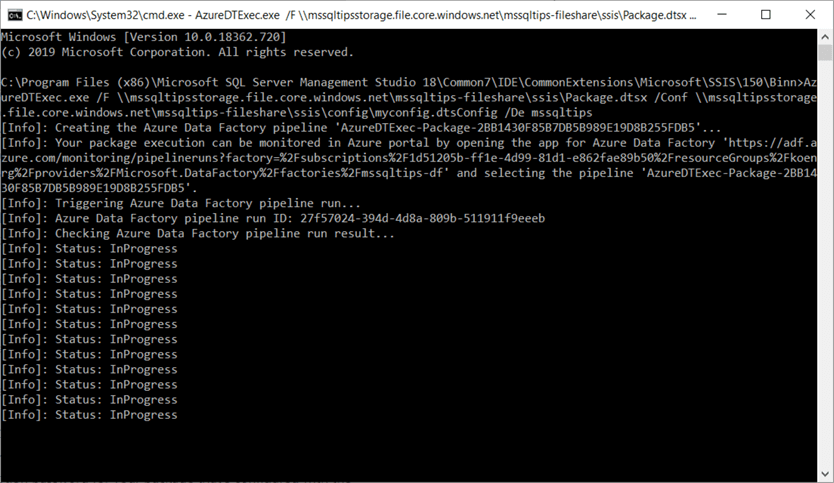

Once the utility is configured in SSMS, it can be used to execute packages in the Azure-SSIS IR (make sure it is running). The utility itself can be found in the folder C:\Program Files (x86)\Microsoft SQL Server Management Studio 18\Common7\IDE\CommonExtensions\Microsoft\SSIS\150\Binn (this might change in the future if SSMS get upgraded).

In the same folder, you can also find the config file which we created in the previous section trough the SSMS user interface. Start up the command prompt, and enter the following command to execute an SSIS package:

AzureDTExec.exe /F \\mystorage\myfolder\myPackage.dtsx /Conf \\mystore\myfolder\myconfig.dtsConfig /De mypassword

Specifying a config file or a password is optional and depends on how you created your package.

Once the package has finished, you will be presented with the execution status, the path of the log file, the runtime duration and a bit of extra information.

In the Azure storage account, we can verify the logging has been created:

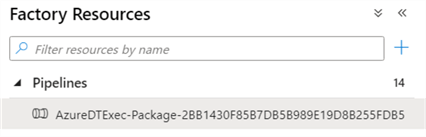

In Azure Data Factory, we can see a new pipeline has been created (the name has a hash suffix of 32 characters, as was specified in the configuration):

In the pipeline, an Execute SSIS package activity is used. It would be possible to schedule this newly created pipeline for further use, but this is discouraged as parameters are used and these might change in the future:

Conclusion

In this tip we showed you how you can configure the Azure-enabled DTEXEC and how you can use it to execute an SSIS package in an Azure-SSIS Integration Runtime. This utility can be used by any on-premises scheduling tool for the scheduling of your SSIS packages.

Next Steps

- To get up to speed with SSIS in Azure, you can go through the following tips:

- For more Azure tips, check out this overview.

- You can learn more about SSIS in this SSIS tutorial and from these SSIS tips.

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2020-09-15