By: Ray Barley | Updated: 2020-11-16 | Comments | Related: > SQL Server Agent

Problem

There are many aspects to consider when designing and building a data mart. Since we have been building data marts for many years, there are plenty of best practices that we can implement.

In this tip I am going to walk through building a data mart that performs an incremental load where only the data that has changed since the last run is loaded. I will also highlight my ideas on best practices along the way.

Solution

In my earlier tip Monitor SQL Agent Job Activity with Power BI, I demonstrated a simple approach to gathering data from the SQL Server Agent tables, populating a data mart, and rendering the data with a Power BI report. In this tip I will take the data mart to the next level by implementing an incremental Extract, Transform and Load (ETL) process. The earlier tip did a simple truncate and load on the data in the data mart. It is quick and easy. However, often what you really need is an incremental ETL process where you periodically update the data mart with just the data that has changed since the last update.

At a high level, I will walk through the following steps:

- Review my ideas on best practices for implementing an incremental ETL process.

- Review the SQL Server Agent tables which contain the source data for the data mart.

- Review the dimensional model for the data mart to be populated from the SQL Server Agent tables.

- Provide an overview of the SQL Agent jobs that perform the ETL operations for the data mart.

- Provide a detailed walk through of the SQL Server Integration Services (SSIS) packages that are executed by the SQL Agent jobs to perform the periodic ETL process for the data mart.

- Review a simple demo of the output from the SQL Agent jobs.

Best Practices

Over the years I have worked on many ETL projects. In this section I am going to provide a high-level list of the best practices that I use on projects.

Incremental Load

- Build ETL processes that only load changes since the last time the process ran.

- If possible, use built-in capabilities like Change Tracking or Change Data Capture to identify the rows that have changed since the last run rather than writing custom code to figure this out.

- Where built-in capabilities to determine what changed do not exist, query the data in the data mart to determine the range of data for the incremental load; e.g. query the destination table to get the latest loaded details and use that to determine where to begin extracting from the source data. Make sure you have the appropriate indexes to make the queries efficient.

- Use the SSIS Data Flow to extract data from source systems and persist to staging tables in the destination database. This handles tables in the same databases, different databases and even different database servers.

- Determine whether the data from the source table represents an insert, update or delete to the destination table.

- Use primary or unique keys in the destination tables to allow inserting new rows where the row does not exist based on a LEFT JOIN between the staging table and destination table.

- Use stored procedures to transform data in a staging table and update the destination table, e.g. dimension or fact tables. This also helps with testing and debugging; you can easily test and debug a stored procedure outside of the ETL process. You can use the sys.dm_exec_procedure_stats DMV to get aggregate performance statistics for cached stored procedures.

- Use the SSIS OLE DB Source component with a SELECT statement that specifies the list of columns needed. Specify filter criteria (i.e. WHERE clause) to retrieve the rows inserted or changed since the last run.

- Tag data extracted so its source can be identified when updated into fact and dimension tables.

SSIS Packages

- Use Visual Studio or SQL Server Data Tools and take advantage of the GUI tool to develop, test and debug SSIS packages.

- Use the project deployment model and deploy projects to the Integration Services Catalog which provides detailed logging of SSIS package execution, error logging, and the ability to use Environments to specify parameter values at runtime.

- Use project parameters for values that are used in most if not all SSIS packages in the project, e.g. the connection manager server name property which can change when deploying to the development, test and production environments.

- Create environments in the Integration Services Catalog and specify the values for project parameters.

- Use package parameters for values that need to be specified at runtime for a particular SSIS package.

SQL Agent Jobs

- Think of job steps as the bullet list of tasks that need to be performed.

- Execute SSIS packages in job steps. SSIS packages make it easy to add logic to job steps as the need arises.

- Specify the SSIS project parameter values in a job step by selecting an environment from the Integration Services Catalog which makes it easy to change the values as necessary.

- Specify the SSIS package parameters in the job step which makes it easy to change the values as necessary.

- Create job state and persist to a table as the first step. Job state includes the data values that are determined at runtime and referenced by other steps in the job. In the earlier tip How to Maintain State in a SQL Server Agent Job, I provided an alternative approach where I used JSON to persist the job state.

- Retrieve the job state in every step where it is needed.

- Design the steps so that when an error occurs, you resolve the error and restart the job at the point of failure. The step should be able to retrieve the job state and either restart from the beginning or pick up where it left off.

- Mark the job state that the job has completed.

- Schedule SQL Agent jobs to run at specific times.

SQL Server Agent Tables

SQL Server Agent provides many tables in the msdb database that can be queried to retrieve current and historical data about SQL Server Agent jobs. You can get the full details on the available tables here. For our purposes, we will be using the following tables:

- sysjobs stores data about each SQL Agent job

- sysjobsteps stores data for each step in a SQL Agent job

- sysjobactivity stores data about current SQL Agent job activity and job status

- sysjobhistory stores data about SQL Agent jobs and job steps that have executed

- syssessions stores data each time the SQL Agent service is started

Dimensional Model

In this section I will walk through the data mart dimensional model broken down into the following usage scenarios:

- Dimensions

- Job History

- Active Jobs

1 - Dimensions

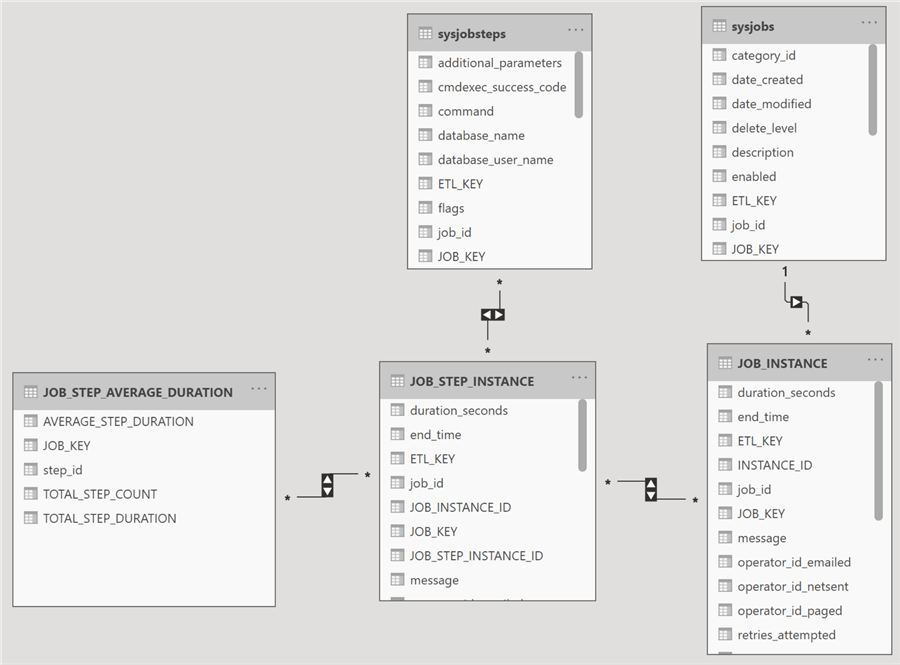

The following diagram shows the dimensions in the model:

The main points are:

- The above tables are in the dbo schema of the SQL_AGENT_DATA_MART database

- The sysjobs table is populated from the sysjobs table in the msdb database

- The sysjobsteps table is populated from the sysjobsteps table in the msdb database

- The JOB_CURRENT table identifies the current dimension in the sysjobs table

I chose to use the sysjobs and sysjobsteps table names because they are so well known. Both tables are implemented in the data mart as type 2 slowly changing dimensions which means they have the rows that represent the state of the jobs and job steps over time. Whenever a change is made to the sysjobs or sysjobsteps tables in the msdb database, new rows are inserted into the dimension tables and the existing rows remain unchanged allowing us to retain the history of the job and job steps.

The JOB_CURRENT table always contains the job_id of the current row along with the JOB_KEY surrogate key value. The JOB_KEY is used by the fact tables to join to the dimensions.

2 - Job History

When a SQL Agent job executes, the data we need to extract and load into fact tables primarily comes from the sysjobhistory table in the msdb database. Whenever a job or job step completes, a row is inserted into the sysjobhistory table with the pertinent details. The sysjobhistory table has many columns, but at this point I will just highlight the following:

- The run_status column can have several different values. It represents the result of the job or job step. The only one we care about is 1 which indicates success.

- The step_id column gets the value of the step_id from the sysjobsteps table for a job step completion or 0 for a job completion.

The following diagram shows the data mart tables related to the SQL Agent job history:

The following are the main points:

- The sysjobs and sysjobsteps tables are dimension tables; they were described in the previous section.

- All the above tables are joined by the JOB_KEY which is a surrogate key value.

- JOB_STEP_AVERAGE_DURATION is the main table in the above diagram. It stores the historical value of the average duration in seconds for a job step.

- JOB_STEP_INSTANCE contains the details from the SQL Agent sysjobhistory table in the msdb database for every time the job step has run.

- JOB_INSTANCE contains the details from the SQL Agent sysjobhistory table in the msdb database for every time the job has run.

As noted in the previous section the dimension tables are implemented as type 2 slowly changing dimensions. The JOB_INSTANCE and JOB_STEP_INSTANCE fact tables are joined to the “version” of the dimension tables that existed at the time the SQL Agent job ran.

3 - Active Jobs

The final part of the data mart has the details on the SQL Agent jobs that are currently running as shown in the following diagram:

The following are the main points for the above diagram:

- The ACTIVE_JOBS table is populated primarily from the sysjobactivity table in the msdb database.

- The ACTIVE_JOBS_REFRESH table logs the refresh date and time when the PROCESS SQL AGENT DATA MART ACTIVITY SQL Agent job (details in the next section) runs to retrieve the data on the SQL Agent jobs currently running.

- The JOB_CURRENT table identifies the JOB_KEY value for the current sysjobs dimension row based on the job_id and joins to the JOB_STEP_AVERAGE_DURATION table via the JOB_KEY.

- The JOB_STEP_AVERAGE_DURATION table is used to aggregate the sum of the average duration of the steps remaining to be completed in a currently running SQL Agent job.

SQL Server Agent Jobs

SQL Agent jobs are a good choice for performing a periodic ETL process. The following SQL Agent jobs are used in this implementation:

- PROCESS SQL AGENT DATA MART DIMENSIONS

- PROCESS SQL AGENT DATA MART HISTORY

- PROCESS SQL AGENT DATA MART ACTIVITY

Each SQL Agent job updates a part of the data mart that was discussed above.

I will provide a high-level overview of each job and walk through the interesting points in the SQL Server Integration Services (SSIS) packages that are executed in the job.

1 - PROCESS SQL AGENT DATA MART DIMENSIONS

The PROCESS SQL AGENT DATA MART DIMENSIONS job performs an incremental load of the Dimension tables in the data mart. The idea is to update the dimensions with any changes made to SQL Agent jobs since the last time this job was run. The assumption is that SQL Agent jobs do not change often, and when you do make changes you can run this job to update the data mart. It is also assumed that you can run this job before the next time the jobs you changed will run.

The job contains the following steps:

The following are the main points about the job steps:

- CREATE JOB STATE executes the BEGIN-ETL-DIMENSION-JOB SSIS package which creates a new row in the ETL_DIMENSION_LOG table. The row contains the ETL_KEY value for the job execution. The ETL_KEY will be stored in every row loaded.

- STAGE SQL AGENT DIMENSIONS executes the STAGE-SQL-AGENT-Dimensions SSIS package which extracts all rows from the SQL Agent sysjobs and sysjobsteps tables into staging tables.

- UPDATE SQL AGENT DIMENSIONS executes the UPDATE-SQL-AGENT-Dimensions SSIS package which performs an incremental load from the staging tables to the dimension tables.

- CLOSE JOB STATE executes the END-ETL-DIMENSION-LOG SSIS package which marks the ETL_DIMENSION_LOG table row complete.

I will walk through the details of the STAGE-SQL-AGENT-Dimensions and UPDATE-SQL-AGENT-Dimensions SSIS packages.

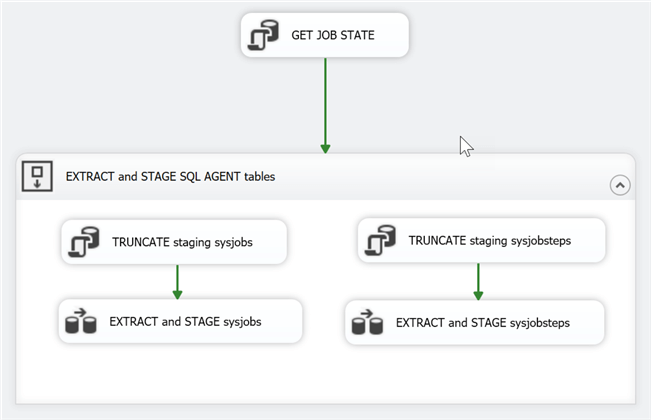

STAGE-SQL-AGENT-Dimensions

The STAGE-SQL-AGENT-Dimensions SSIS package extracts the data from the sysjobs and sysjobsteps SQL Agent tables in the msdb database and loads into staging tables. For the safe of simplicity, testing, and debugging, all rows are extracted from these tables. Normally you would choose to only extract the changes, but in this case, I’m making an exception which will be detailed below.

The following is the Control Flow for the package:

The following are the main points for the Control Flow:

- The Control Flow is simple by design. If an error were to occur, you simply rerun the SSIS package from the beginning.

- GET JOB STATE retrieves the row from the ETL_DIMENSION_LOG table for this job execution and stores the ETL_KEY column in an SSIS variable.

- The SSIS package implements the truncate and load pattern; truncate the staging tables and load them from the SQL Agent job tables.

- The loads are performed in a Data Flow which is a good choice because it easily accommodates the scenario where when the source tables and destination tables are in different databases as well as different SQL Server instances.

- While my preference is to use stored procedures in SSIS packages, I like stored procedures to only use tables in a single database so that I have the flexibility to move databases between different SQL Server instances and just change a configuration parameter.

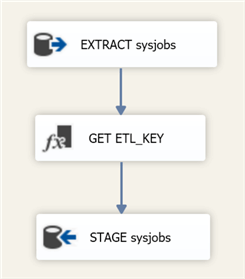

The following is the EXTRACT and STAGE sysjobs Data Flow:

The main points are:

- EXTRACT sysjobs is an OLE DB Source component. I choose SQL command for the Data access mode and supply the SELECT statement with the column list I need instead of SELECT * to get all columns.

- GET ETL_KEY is a Derived Column transform that retrieves the ETL_KEY variable and adds it to the Data Flow.

- STAGE sysjobs is an OLE DB Destination component that inserts every from that passes through the Data Flow into the staging.sysjobs table in the SQL_AGENT_DATA_MART database.

The EXTRACT and STAGE sysjobsteps Data Flow follows the same pattern so I will skip the details.

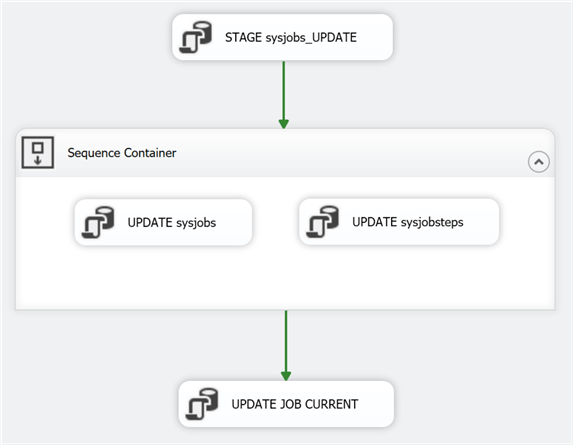

UPDATE-SQL-AGENT-Dimensions

The UPDATE-SQL-AGENT-Dimensions SSIS package performs an incremental load of the sysjobs and sysjobsteps dimension tables in the data mart from the staging tables. The following is the control flow for the package:

The main points for the control flow are:

- Each task in the Control Flow is an Execute SQL Task that executes a stored procedure that is named based on the task name.

- STAGE sysjobs_UPDATE compares the staging.sysjobs table to the dbo.sysjobs dimension table, determines any staging rows that are new or have changed, and writes the details to the staging.sysjobs_UPDATE table.

- UPDATE sysjobs updates the sysjobs dimension table based on the details in the staging.sysjobs_UPDATE table.

- UPDATE sysjobsteps updates the sysjobsteps dimension table based on the details in the staging.sysjobs_UPDATE table.

- UPDATE JOB CURRENT updates the JOB_CURRENT table with the “current” version of the row for each job in the sysjobs dimension table.

I will walk through the pertinent details for each of the stored procedures.

STAGE_sysjobs_UPDATE

The following is the STAGE_sysjobs_UPDATE stored procedure:

CREATE PROCEDURE [staging].[STAGE_sysjobs_UPDATE] AS BEGIN TRUNCATE TABLE [staging].[sysjobs_UPDATE]; ;WITH CTE_sysjobs AS( SELECT s.[ETL_KEY] , s.[STAGING_KEY] , CASE WHEN d.[job_id] IS NULL THEN 'I' -- new job WHEN d.[job_id] IS NOT NULL AND s.[date_modified] > d.[date_modified] THEN 'U' END [Action] , d.[JOB_KEY] , s.[job_id] FROM [staging].[sysjobs] s LEFT JOIN[dbo].[vJOB_CURRENT] d ON d.[job_id] = s.[job_id] LEFT JOIN [dbo].[JOB_EXCLUDE] x ON x.[job_id] = s.[job_id] WHERE x.[name] IS NULL ) INSERT [staging].[sysjobs_UPDATE]( [ETL_KEY] , [STAGING_KEY] , [ACTION] , [job_id] , [JOB_KEY] , [NEW_JOB_KEY] ) SELECT [ETL_KEY] , [STAGING_KEY] , [ACTION] , [job_id] , [JOB_KEY] -- NULL if insert , NEXT VALUE FOR [dbo].[JOB_KEY] FROM CTE_sysjobs WHERE [Action] IN('I', 'U'); END

The main points for the above stored procedure are:

- Truncate the [staging].[sysjobs_UPDATE] table.

- The CTE_sysjobs common table expression selects the rows to be processed from the sysjobs staging table.

- Use the LEFT JOIN to the JOB_CURRENT table to determine if the staging job_id exists; i.e. is it a new job or a possible update to an existing job?

- If the row in staging is a new job, then the [Action] will be set to ‘I’ to indicate an INSERT will be performed.

- If the row in staging is not a new job, compare the [date_modified] to determine whether the job has changed, and set the [Action] to ‘U’ indicating an UPDATE will be performed if the job has changed.

- Use the LEFT JOIN to the JOB_EXCLUDE table to see if we have marked this job as excluded from processing.

- Get the rows from the CTE_sysjobs common table expression where an INSERT or UPDATE is required.

- Get the value for the JOB_KEY (surrogate key) from the JOB_KEY sequence.

- Insert the rows into the sysjobs_UPDATE staging table.

Remember that we are implementing the type 2 slowly changing dimension pattern for the sysjobs and sysjobsteps dimension tables. The handling of the sysjobs dimension follows the normal pattern where you check for a new or updated row based on some business logic applied to the staging and dimension tables. In this case we compare the [date_modified]. I will provide the details for the sysjobsteps dimension in the UPDATE_sysjobsteps later in this section.

UPDATE_sysjobs

The following is the T-SQL code for the body of the UPDATE_sysjobs stored procedure which updates the sysjobs dimension:

CREATE PROCEDURE [dbo].[UPDATE_sysjobs] AS BEGIN INSERT [dbo].[sysjobs]( [JOB_KEY] , [job_id] , <remaining column list goes here> , [ETL_KEY] , [STAGING_KEY] ) SELECT u.[NEW_JOB_KEY] , j.[job_id] , <remaining column list goes here> , j.[ETL_KEY] , j.[STAGING_KEY] FROM [staging].[sysjobs_UPDATE] u JOIN [staging].[sysjobs] j ON j.[job_id] = u.[job_id] LEFT JOIN [dbo].[sysjobs] d ON d.[JOB_KEY] = u.[NEW_JOB_KEY] WHERE d.JOB_KEY IS NULL; END

The main points for the above stored procedure are:

- Retrieve the rows from the sysjobs_UPDATE staging table (it only includes rows for new jobs or changed jobs).

- The ETL_KEY originally comes from the job state.

- The STAGING_KEY is an IDENTITY which simply numbers the rows in the staging table.

- Join to the sysjobs staging table to retrieve all the columns for the job.

- Use a LEFT JOIN to the sysjobs dimension table to exclude any row that has already been inserted into the sysjobs dimension. This simple step allows for the possibility that if the stored procedure were run again, no error would be raised. The rows already inserted would be excluded.

UPDATE_sysjobsteps

The following is the stored procedure which updates the sysjobsteps dimension:

CREATE PROCEDURE [dbo].[UPDATE_sysjobsteps] AS BEGIN INSERT [dbo].[sysjobsteps]( [JOB_KEY] , [job_id] , [step_id] , [step_name] , <remaining column list goes here> , [ETL_KEY] , [STAGING_KEY] ) SELECT u.[NEW_JOB_KEY] , s.[job_id] , s.[step_id] , s.[step_name] , <remaining column list goes here> , s.[ETL_KEY] , s.[STAGING_KEY] FROM [staging].[sysjobs_UPDATE] u JOIN [staging].[sysjobsteps] s ON s.[job_id] = u.[job_id] LEFT JOIN [dbo].[sysjobsteps] d ON d.[JOB_KEY] = u.[NEW_JOB_KEY] AND d.[step_id] = s.[step_id] WHERE d.[JOB_KEY] IS NULL; END

The handling of the sysjobsteps dimension does not follow the normal pattern. The sysjobs and sysjobsteps tables have a parent-child relationship. The sysjobsteps table in the msdb database does not provide any column that we can easily use to determine a new or changed row. The approach taken is that all rows from the sysjobsteps staging table are inserted into the sysjobsteps dimension when the sysjobs_UPDATE staging table identifies a job as new or updated.

While I could implement business logic to determine what changed in the sysjobsteps table, the complexity of doing so is not worth it. The approach taken is the simple one and it handles new and updated job steps easily.

UPDATE_JOB_CURRENT

The following is the stored procedure which updates the JOB_CURRENT dimension:

CREATE PROCEDURE [dbo].[UPDATE_JOB_CURRENT] AS BEGIN ;WITH CTE_JOB_VERSION AS( SELECT [JOB_KEY] , [job_id] , ROW_NUMBER() OVER( PARTITION BY [job_id] ORDER BY [job_id], [date_modified] DESC ) AS [ROW_NUMBER] FROM [dbo].[sysjobs] ) , CTE_CURRENT_JOB AS( SELECT [JOB_KEY] , [job_id] FROM CTE_JOB_VERSION WHERE [ROW_NUMBER] = 1 ) , CTE_JOB_STEP_COUNT AS( SELECT s.[JOB_KEY] , COUNT(*)AS [JOB_STEP_COUNT] FROM [dbo].[sysjobsteps] s JOIN CTE_CURRENT_JOB c ON c.[JOB_KEY] = s.[JOB_KEY] GROUP BY s.[JOB_KEY] ) INSERT [dbo].[JOB_CURRENT]( [JOB_KEY] , [job_id] , [JOB_STEP_COUNT] ) SELECT j.[JOB_KEY] , j.[job_id] , ISNULL(n.[JOB_STEP_COUNT], 0) FROM CTE_CURRENT_JOB j LEFT JOIN CTE_JOB_STEP_COUNT n ON n.JOB_KEY = j.JOB_KEY LEFT JOIN [dbo].[JOB_CURRENT] c ON c.[JOB_KEY] = j.[JOB_KEY] WHERE c.[JOB_KEY] IS NULL; ;WITH CTE_OLD_JOBS AS( SELECT [JOB_KEY] , [job_id] , ROW_NUMBER() OVER( PARTITION BY [job_id] ORDER BY [job_id], [JOB_KEY] DESC ) AS [ROW_NUMBER] FROM [dbo].[JOB_CURRENT] ) DELETE c FROM [dbo].[JOB_CURRENT] c JOIN CTE_OLD_JOBS x ON x.[JOB_KEY] = c.[JOB_KEY] WHERE x.[ROW_NUMBER] > 1; END

The main points for the above stored procedure are:

- The purpose of the stored procedure is to keep the latest or current version of each job in the JOB_CURRENT table. The table is used in the PROCESS SQL AGENT DATA MART HISTORY job to get the JOB_KEY surrogate key value for the fact tables.

- The CTE_ JOB_VERSION common table expression gets the JOB_KEY and job_id columns for the rows in the sysjobs dimension and assigns a row number to identify the latest row for each job based on the [date_modified] column sorted in descending order.

- The CTE_JOB_CURRENT common table expression retrieves the JOB_KEY and job_id columns for the current job.

- The CTE_JOB_STEP_COUNT common table expression gets the number of steps in the current job.

- The new current job(s) are inserted into the JOB_CURRENT table. New current jobs are identified by a LEFT JOIN to the JOB_CURRENT table where the JOB_KEY does not exist.

- The CTE_OLD_JOBS common table expression identifies any old versions of jobs so that they can be deleted from the JOB_CURRENT table.

2 - PROCESS SQL AGENT DATA MART HISTORY

The PROCESS SQL AGENT DATA MART HISTORY job performs an incremental load of the Job History tables in the data mart. The Job History tables are the fact tables in the dimensional model.

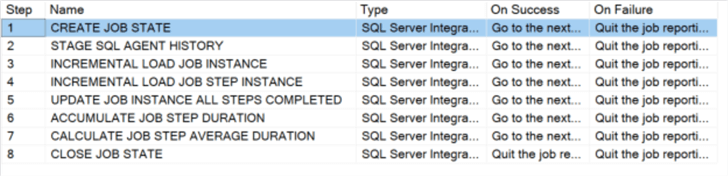

The job contains the following steps:

The following are the main points about the job steps:

- CREATE JOB STATE executes the BEGIN-ETL-HISTORY-JOB SSIS package.

- STAGE SQL AGENT HISTORY extracts the rows from the SQL Agent sysjobhistory table that have been inserted since the last time the job was run and inserts into a staging table.

- INCREMENTAL LOAD JOB INSTANCE loads the JOB_INSTANCE fact table with the job executions that have completed successfully since the last time the job was run.

- INCREMENTAL LOAD JOB STEP INSTANCE loads the JOB_STEP_INSTANCE fact table with the job step executions that have completed successfully since the last time the job was run.

- UPDATE JOB INSTANCE ALL STEPS COMPLETED loads the JOB_INSTANCE_ALL_STEPS_COMPLETED fact table with job executions where all steps in the job completed successfully.

- ACCUMULATE JOB STEP DURATION loads the JOB_STEP_DURATION fact table with the duration (in seconds) for job steps that completed successful.

- CALCULATE JOB STEP AVERAGE DURATION updates the JOB_STEP_AVERAGE_DURATION fact table based on the number of executions and duration of job steps in the JOB_STEP_DURATION fact table.

- CLOSE JOB STATE updates the job state to indicate that the job ended. Only successful job executions update the job state.

BEGIN-ETL-HISTORY-JOB

The BEGIN_ETL_HISTORY-JOB SSIS package creates the job state by executing the following stored procedure:

CREATE PROCEDURE [dbo].[CREATE_ETL_HISTORY_LOG] @P_ETL_KEY INT OUTPUT AS BEGIN DECLARE @BEGIN_INSTANCE_ID INT , @END_INSTANCE_ID INT; SELECT @BEGIN_INSTANCE_ID = MAX([INSTANCE_ID]) + 1 FROM [dbo].[JOB_INSTANCE]; SELECT @END_INSTANCE_ID = MAX([instance_id]) FROM msdb.dbo.sysjobhistory WHERE [run_status] = 1; INSERT [dbo].[ETL_HISTORY_LOG]( [BEGIN_INSTANCE_ID] , [END_INSTANCE_ID] ) VALUES( COALESCE(@BEGIN_INSTANCE_ID, 0) , COALESCE(@END_INSTANCE_ID, 0) ); SET @P_ETL_KEY = SCOPE_IDENTITY(); END

The main points for the above stored procedure are:

- Create the job state and insert into the ETL_HISTORY_LOG table.

- Get the range of [instance_id] values that have been inserted into the SQL Agent sysjobhistory table in the msdb database since the last time the job was run.

- The [BEGIN_INSTANCE_ID] is the MAX [INSTANCE_ID] from the JOB_INSTANCE table plus 1.

- The [END_INSTANCE_ID] is the MAX [instance_id] from the SQL Agent sysjobhistory table in the msdb database. I am only retrieving the rows that completed successfully (run_instance = 1).

- Insert the job state into the ETL_HISTORY_LOG table.

- The @P_ETL_KEY output parameter is assigned the IDENTITY value from the insert into the ETL_HISTORY_LOG table. Every row loaded into the fact tables will have this value in its [ETL_KEY] column to identify the job run that loaded the row.

Note that the job state can be referenced in any of the steps that follow in the job. It is especially useful to persist the job state in a table so that it can be referenced in the steps that follow in the job as well as if the job fails. When a step fails, you resolve the issue and restart the job at the point of failure. The job state can be retrieved and this allows the job step to run again with the values in the job state.

STAGE-SQL-AGENT-HISTORY

The STAGE-SQL-AGENT-HISTORY SSIS package truncates the sysjobhistory staging table and loads it with the rows from the SQL Agent sysjobhistory table in the msdb database that have been inserted since the last time the job was run.

The following is the Control Flow for the SSIS package:

GET JOB STATE is an Execute SQL Task that executes the following stored procedure:

CREATE PROCEDURE [dbo].[GET_ETL_HISTORY_LOG] @P_ETL_KEY INT OUTPUT , @P_BEGIN_INSTANCE_ID INT OUTPUT , @P_END_INSTANCE_ID INT OUTPUT AS BEGIN DECLARE @ETL_KEY INT , @BEGIN_INSTANCE_ID INT , @END_INSTANCE_ID INT; SELECT @ETL_KEY = MAX([ETL_KEY]) FROM [dbo].[ETL_HISTORY_LOG]; SELECT @BEGIN_INSTANCE_ID = [BEGIN_INSTANCE_ID] , @END_INSTANCE_ID = [END_INSTANCE_ID] FROM [dbo].[ETL_HISTORY_LOG] WHERE [ETL_KEY] = @ETL_KEY; SET @P_ETL_KEY = @ETL_KEY; SET @P_BEGIN_INSTANCE_ID = @BEGIN_INSTANCE_ID; SET @P_END_INSTANCE_ID = @END_INSTANCE_ID; END

The main points for the above stored procedure are:

- The job state is stored in the [ETL_HISTORY_LOG] table.

- Each time the job is run a new row is inserted into the table by the CREATE JOB STATE step of the job.

- The current job state is the row with the MAX [ETL_KEY] value in the table.

- The job state is returned to the caller via the output parameters.

- The output parameter values are stored in SSIS variables of the same name.

- When an error is raised in an SSIS package and the SSIS package is restarted, the stored procedure retrieves the job state allowing the package to start from the beginning with the same values as when the package was initially run. The job state could be updated which would allow the package to pick up where it left off in the event of a package restart. I did not do any updates to the job state.

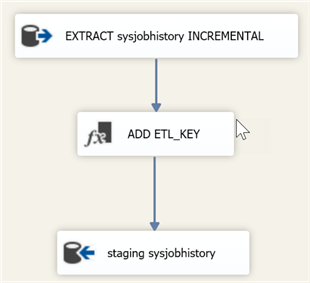

STAGE sysjobhistory is a Data Flow as shown below:

EXTRACT sysjobhistory INCREMENTAL is an OLE DB Source component that executes the following SQL statement:

SELECT [instance_id] , [job_id] , [step_id] , [step_name] , [sql_message_id] , [sql_severity] , [message] , [run_status] , [run_date] , [run_time] , [run_duration] , [operator_id_emailed] , [operator_id_netsent] , [operator_id_paged] , [retries_attempted] , [server] , msdb.dbo.agent_datetime(run_date, run_time) AS [start_time] , DATEADD( second , [run_duration] / 10000 * 3600 + -- convert hours to seconds ([run_duration] % 10000) / 100 * 60 +-- convert minutes to seconds ([run_duration] % 10000) % 100 -- get seconds , msdb.dbo.agent_datetime(run_date, run_time)) [end_time] , [run_duration] / 10000 * 3600 + -- convert hours to seconds ([run_duration] % 10000) / 100 * 60 + -- convert minutes to seconds ([run_duration] % 10000) % 100 -- get seconds AS [duration_seconds] FROM [dbo].[sysjobhistory] WHERE [instance_id] BETWEEN ? AND ? AND [run_status] = 1;

The following are the main points:

- The SELECT statement specifies the column list rather than a table name or SELECT *.

- The [step_id] column has a value of 0 for a job outcome and the step_id of a job (i.e. > 0) for a job step outcome.

- The agent_datetime function is used to convert the run_date and run_time into [start_time] which is a DATETIME type. Run_date is an INT with the value formatted as YYYYMMDD. Run_time is an INT with the value formatted as HHMMSS. It is the time that the job or job step started.

- The agent_datetime function is also used to return the [end_time] which is a DATETIME type.

- The duration_seconds calculation returns the number of seconds that the job or job step ran. [run_duration[ is an INT formatted as HHMMSS.

- The WHERE clause ‘?’ parameter placeholder values are replaced with the SSIS variables BEGIN_INSTANCE_ID and END_INSTANCE_ID.

- The WHERE clause [run_status] = 1 limits the rows return to successful job and job step executions.

ADD ETL KEY is a Derived Column Transform that adds the ETL_KEY variable value to the Data Flow.

Staging sysjobhistory is an OLE DB Destination component that inserts the rows from the Data Flow into the sysjobhistory staging table.

INCREMENTAL-LOAD-JOB-INSTANCE

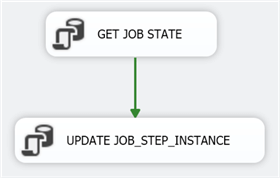

The INCREMENTAL-LOAD-JOB-INSTANCE SSIS packages loads the JOB_INSTANCE fact table with data for jobs that have executed successfully since the last time the job was run. The following is the Control Flow:

GET JOB STATE retrieves the job state and was described above in the STAGE-SQL-AGENT-HISTORY SSIS package section.

UPDATE JOB_INSTANCE executes the following stored procedure:

CREATE PROCEDURE [dbo].[UPDATE_JOB_INSTANCE] AS BEGIN INSERT [dbo].[JOB_INSTANCE]( [JOB_KEY] , [INSTANCE_ID] , [job_id] , [step_id] , <remaining column list goes here> , [ETL_KEY] , [STAGING_KEY] ) SELECT c.JOB_KEY ,[instance_id] , h.[job_id] , [step_id] , <remaining column list goes here> , [ETL_KEY] , [STAGING_KEY] FROM [staging].[sysjobhistory] h JOIN [dbo].[JOB_CURRENT] c ON c.[job_id] = h.[job_id] LEFT JOIN [dbo].[JOB_EXCLUDE] x ON x.[job_id] = h.[job_id] WHERE h.[step_id] = 0 -- only get job outcome rows AND x.[name] IS NULL; END

The main points for the above stored procedure are:

- Select the job outcome rows ([step_id] = 0) from the sysjobhistory staging table.

- JOIN to the JOB_CURRENT table to get the [JOB_KEY] surrogate key value for the current version of the job.

- LEFT JOIN to the JOB_EXCLUDE table to exclude any jobs you do not want to track.

- Insert the rows into the JOB_INSTANCE table.

INCREMENTAL-LOAD-JOB-STEP-INSTANCE

The INCREMENTAL-LOAD-JOB-STEP-INSTANCE SSIS package loads the JOB_STEP_INSTANCE fact table with data for job steps that have executed successfully since the last time the job was run. The following is the Control Flow:

GET JOB STATE retrieves the job state and was described above in the STAGE-SQL-AGENT-HISTORY SSIS package section.

UPDATE JOB_STEP_INSTANCE executes the following stored procedure:

CREATE PROCEDURE [dbo].[UPDATE_JOB_STEP_INSTANCE] AS BEGIN INSERT [dbo].[JOB_STEP_INSTANCE]( [JOB_KEY] , [JOB_INSTANCE_ID] , [JOB_STEP_INSTANCE_ID] , [job_id] , [step_id] , <remaining column list goes here> , [ETL_KEY] , [STAGING_KEY] ) SELECT c.JOB_KEY ,j.[INSTANCE_ID] -- JOB_INSTANCE ,h.[instance_id] -- JOB_STEP_INSTANCE_ID , h.[job_id] , h.[step_id] , <remaining column list goes here> , h.[ETL_KEY] , h.[STAGING_KEY] FROM [staging].[sysjobhistory] h JOIN [dbo].[JOB_CURRENT] c ON c.[job_id] = h.[job_id] JOIN [dbo].[JOB_INSTANCE] j ON j.[JOB_KEY] = c.[JOB_KEY] LEFT JOIN [dbo].[JOB_EXCLUDE] x ON x.[job_id] = h.[job_id] WHERE h.[step_id] > 0 AND h.[start_time] BETWEEN j.[start_time] AND j.[end_time] AND x.[name] IS NULL; END

The main points for the above stored procedure are:

- Select the job step outcome rows ([step_id] > 0) from the sysjobhistory staging table.

- JOIN to the JOB_CURRENT table to get the [JOB_KEY] surrogate key value for the current version of the job.

- JOIN to the JOB_INSTANCE table to get the [start_time] and [end_time] for the job execution. This allows for associating the job step with the correct job instance.

- LEFT JOIN to the JOB_EXCLUDE table to exclude any jobs that you do not want to track.

- Insert the rows into the JOB_STEP_INSTANCE table.

UPDATE-JOB-INSTANCE-ALL-STEPS-COMPLETED

The UPDATE-JOB-INSTANCE-ALL-STEPS-COMPLETED SSIS package populates the JOB_INSTANCE_ALL_STEPS_COMPLETED table with the combination of [JOB_KEY] and [JOB_INSTANCE_ID] values for JOB_INSTANCES where all job steps completed successfully. My initial thought for this table was to use it to exclude JOB_STEP_INSTANCE rows from the average duration calculation where the job did not complete all steps successfully. I thought jobs that fail and get restarted (possibly multiple times) might skew the average duration. However, I decided not to do that. I left the code in place because I may have a use for this table in the future.

The following is the package Control Flow:

GET JOB STATE retrieves the job state and was described above in the STAGE-SQL-AGENT-HISTORY SSIS package section.

UPDATE JOB_INSTANCE_ALL_STEPS_COMPLETED executes the following stored procedure:

CREATE PROCEDURE [dbo].[UPDATE_JOB_INSTANCE_ALL_STEPS_COMPLETED] @BEGIN_JOB_INSTANCE INT , @END_JOB_INSTANCE INT AS BEGIN ; WITH CTE_JOB_STEPS_COMPLETED AS( SELECT [JOB_INSTANCE_ID] , [JOB_KEY] , COUNT(*)AS [STEPS_COMPLETED] FROM [dbo].[JOB_STEP_INSTANCE] j WHERE [JOB_INSTANCE_ID] BETWEEN @BEGIN_JOB_INSTANCE AND @END_JOB_INSTANCE GROUP BY [JOB_INSTANCE_ID], [JOB_KEY] ) , CTE_DISTINCT_JOBS AS( SELECT DISTINCT [JOB_KEY] FROM CTE_JOB_STEPS_COMPLETED ) , CTE_JOB_STEPS_COUNT AS( SELECT s.[JOB_KEY] , COUNT(*)AS [STEP_COUNT] FROM [dbo].[sysjobsteps] s JOIN CTE_DISTINCT_JOBS j ON j.[JOB_KEY] = s.[JOB_KEY] GROUP BY s.[JOB_KEY] ) INSERT [dbo].[JOB_INSTANCE_ALL_STEPS_COMPLETED]( [JOB_KEY] , [INSTANCE_ID] ) SELECT c.[JOB_KEY] , c.[JOB_INSTANCE_ID] FROM CTE_JOB_STEPS_COMPLETED c JOIN CTE_JOB_STEPS_COUNT s ON s.[JOB_KEY] = c.[JOB_KEY] WHERE c.[STEPS_COMPLETED] = s.[STEP_COUNT]; END

The following are the main points for the stored procedure:

- The stored procedure parameters are assigned values from the GET JOB STATE step.

- The CTE_JOB_STEPS_COMPLETED common table expression gets the JOB_STEP_INSTANCE rows based on the stored procedure parameters and the number of steps completed for each JOB_INSTANCE.

- The CTE_DISTINCT_JOBS common table expression gets the distinct JOB_KEY values.

- The CTE_JOB_STEPS_COUNT common table expression gets the number of steps in the jobs.

- The CTE_JOB_STEPS_COMPLETED and CTE_JOB_STEPS_COUNT common table expressions are joined together and inserted into the JOB_INSTANCE_ALL_STEPS_COMPLETED table where the number of steps completed in the job equal the number of steps in the job.

ACCUMULATE-JOB-STEP-DURATION

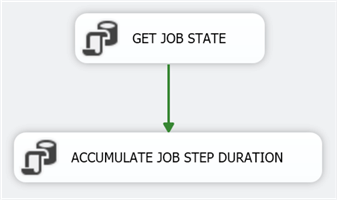

The ACCUMULATE-JOB-STEP-DURATION SSIS package inserts rows into the JOB_STEP_DURATION table which is used to calculate the average duration of the job step. The following in the Control Flow:

GET JOB STATE retrieves the job state and was described above in the STAGE-SQL-AGENT-HISTORY SSIS package section.

ACCUMULATE JOB STEP DURATION executes the following stored procedure:

CREATE PROCEDURE [dbo].[ACCUMULATE_JOB_STEP_DURATION] @BEGIN_JOB_INSTANCE INT , @END_JOB_INSTANCE INT AS BEGIN ;WITH CTE_JOB_STEPS AS( SELECT j.[JOB_KEY] , j.[JOB_INSTANCE_ID] , j.[step_id] , j.[duration_seconds] FROM [dbo].[JOB_STEP_INSTANCE] j WHERE j.[JOB_INSTANCE_ID] BETWEEN @BEGIN_JOB_INSTANCE AND @END_JOB_INSTANCE ) , CTE_SUM_STEPS AS( SELECT [JOB_KEY] , [step_id] , COUNT(*) AS [JOB_STEP_COUNT] , SUM([duration_seconds])AS [JOB_STEP_TOTAL_SECONDS] FROM CTE_JOB_STEPS GROUP BY [JOB_KEY], [step_id] ) INSERT [dbo].[JOB_STEP_DURATION]( [JOB_KEY] , [step_id] , [JOB_STEP_COUNT] , [JOB_STEP_TOTAL_SECONDS] ) SELECT [JOB_KEY] , [step_id] , [JOB_STEP_COUNT] , [JOB_STEP_TOTAL_SECONDS] FROM CTE_SUM_STEPS; END

The following are the main points for the stored procedure:

- The stored procedure parameters are supplied from the Job State.

- The CTE_JOB_STEPS common table expression retrieves the range of rows from the JOB_STEP_INSTANCE table based on the stored procedure parameters. The rows represent job steps that have completed successfully.

- The CTE_SUM_STEPS common table expression calculates the count and total duration by job and job step.

- Insert the results from CTE_SUM_STEPS into the JOB_STEP_DURATION table.

CALCULATE-JOB-STEP-AVERAGE-DURATION

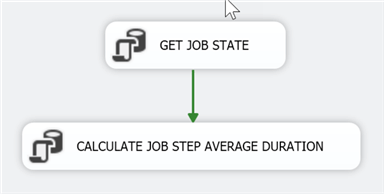

This last step in the PROCESS SQL AGENT DATA MART HISTORY SQL Agent job recalculates the average duration of the job steps completed since the last time the job was run. The following is the Control Flow:

GET JOB STATE retrieves the job state and was described above in the STAGE-SQL-AGENT-HISTORY SSIS package section.

CALCULATE JOB STEP AVERAGE DURATION executes the following stored procedure:

CREATE PROCEDURE [dbo].[CALCULATE_JOB_STEP_AVERAGE_DURATION] AS BEGIN TRUNCATE TABLE [dbo].[JOB_STEP_AVERAGE_DURATION]; ;WITH CTE_SUM_DURATION AS( SELECT [JOB_KEY] , [step_id] , SUM([JOB_STEP_COUNT]) [TOTAL_STEP_COUNT] , SUM([JOB_STEP_TOTAL_SECONDS])[TOTAL_STEP_DURATION] FROM [dbo].[JOB_STEP_DURATION] GROUP BY [JOB_KEY], [step_id] ) INSERT [dbo].[JOB_STEP_AVERAGE_DURATION]( [JOB_KEY] , [step_id] , [TOTAL_STEP_COUNT] , [TOTAL_STEP_DURATION] , [AVERAGE_STEP_DURATION] ) SELECT [JOB_KEY] , [step_id] , [TOTAL_STEP_COUNT] , [TOTAL_STEP_DURATION] , IIF(ISNULL([TOTAL_STEP_COUNT], 0) > 0 , [TOTAL_STEP_DURATION] / [TOTAL_STEP_COUNT] , NULL ) AS [AVERAGE_STEP_DURATION] FROM CTE_SUM_DURATION END

The following are the main points for the above stored procedure:

- The CTE_JOBS common table expression gets the distinct list of JOB_KEY values for the job steps that completed successfully since the last time the job was run.

- The CTE_SUM_DURATION common table expression gets the sum of job steps and job step duration for the JOB_KEY values.

- THE CTE_AVG_DURATION common table expression computes the average duration for the job steps that have completed successfully since the last time the job was run. The entire history is used to calculate the average duration. The results are written to the JOB_STEP_AVERAGE_DURATION_UPDATE table.

- The JOB_STEP_AVERAGE_DURATION table is updated from the JOB_STEP_AVERAGE_DURATION_UPDATE table.

3 - PROCESS SQL AGENT DATA MART ACTIVITY

The PROCESS SQL AGENT DATA MART ACTIVITY job gets the details on the SQL Agent jobs currently running and populates the Active Jobs tables in the data mart.

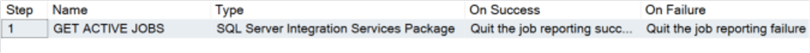

The job contains the following steps:

The following are the main points about the job steps:

- Because there is only one step in this job, I did not include the CREATE JOB STATE and CLOSE JOB STATE steps that appear in the prior SQL Agent jobs. At this point the job has no need for state.

- GET ACTIVE JOBS executes the GET-ACTIVE-JOBS SSIS package.

The GET-ACTIVE-JOBS package only has a single Execute SQL Task that executes the GET_ACTIVE_JOBS stored procedure as shown below:

DECLARE @SQL_AGENT_IS_RUNNING SMALLINT , @SESSION_ID INT , @REFRESH_KEY INT = NEXT VALUE FOR [dbo].[REFRESH_KEY] , @REFRESH_DATE SMALLDATETIME = CONVERT(SMALLDATETIME, GETDATE()); SELECT @SQL_AGENT_IS_RUNNING = IIF([status_desc] = 'Running', 1, 0) FROM sys.dm_server_services WHERE LEFT([servicename], 16) = 'SQL Server Agent'; IF @SQL_AGENT_IS_RUNNING = 0 BEGIN RAISERROR('The SQL Agent Service is not running.', 11, 1); RETURN; END SELECT @SESSION_ID = MAX(session_id) FROM msdb.dbo.syssessions; ;WITH CTE_JOBS_RUNNING AS ( SELECT c.[JOB_KEY] , a.[job_id] , a.[start_execution_date] , a.[last_executed_step_id] , a.[last_executed_step_date] FROM msdb.dbo.sysjobactivity a LEFT JOIN [dbo].[JOB_EXCLUDE] x ON x.[job_id] = a.[job_id] LEFT JOIN [dbo].[JOB_CURRENT] c ON c.[job_id] = a.[job_id] WHERE a.session_id = @SESSION_ID AND a.start_execution_date IS NOT NULL AND stop_execution_date IS NULL AND x.[name] IS NULL ) , CTE_JOB_STEPS_REMAINING_DURATION AS ( SELECT a.[JOB_KEY] , SUM(d.[AVERAGE_STEP_DURATION]) [job_step_average_duration] FROM CTE_JOBS_RUNNING a LEFT JOIN [dbo].[JOB_STEP_AVERAGE_DURATION] d ON d.[JOB_KEY] = a.[JOB_KEY] WHERE d.[step_id] > COALESCE(a.[last_executed_step_id], 0) GROUP BY a.[JOB_KEY] ) INSERT [dbo].[ACTIVE_JOBS] ( [REFRESH_KEY] , [job_id] , [CURRENT_DURATION] , [EXECUTION_COUNT] , [AVERAGE_DURATION] , [ESTIMATED_COMPLETION] ) SELECT @REFRESH_KEY , a.[job_id] , DATEDIFF(second, a.[start_execution_date], GETDATE()) AS [current_duration] , 0 -- TO DO: get the number of times the job has run? , d.[job_step_average_duration] -- based on last step executed , CONVERT( SMALLDATETIME , DATEADD( second , d.[job_step_average_duration] + DATEDIFF(second, a.[start_execution_date], GETDATE()) , a.[start_execution_date] ) ) AS [estimated_completion] FROM CTE_JOBS_RUNNING a LEFT JOIN CTE_JOB_STEPS_REMAINING_DURATION d ON d.[JOB_KEY] = a.[JOB_KEY]; INSERT [dbo].[ACTIVE_JOBS_REFRESH] ( [REFRESH_KEY] , [REFRESH_DATE] ) SELECT @REFRESH_KEY , @REFRESH_DATE;

The following are the main points for the above stored procedure:

- Check that the SQL Agent service is running by querying the sys.dm_server_services dynamic management view (DMV). If the service is not running raise an error and return. You can only test this when the stored procedure is not being executed from a SQL Agent job. Note that you would have to modify this code if you have more than one instance of the SQL Agent service; check the value of the [servicename] column.

- The @REFRESH_KEY variable is set to the next value from the REFRESH_KEY; I will explain how this is used below.

- Get the MAX session_id from the msdb syssessions table. This represents the session_id for the currently running instance of the SQL Agent service.

- The CTE_JOBS_RUNNING common table expression queries the SQL Agent sysjobactivity table to get the currently running jobs. The criteria for a running job are: session_id is for the current SQL Agent service instance, start_execution_date IS NOT NULL, and stop_execution_date IS NULL.

- LEFT JOIN to the JOB_EXCLUDE table to exclude any jobs you do not want to track.

- LEFT JOIN to the JOB_CURRENT table to get the JOB_KEY. A left join is used in case a new job was added but the PROCESS SQL AGENT DATA MART DIMENSIONS job has not been run since adding the job (the job will not be in the dimension tables).

- The CTE_JOB_STEPS_REMAINING_DURATION common table expression calculates the remaining duration for the job based on the current step. There are a couple of quirks here; the [last_executed_step_id] column is NULL until the job completes a step. When you launch a SQL Agent job you can specify the starting step_id. However, I do not see how to determine what step_id was chosen from the sysjobactivity table. Therefore, prior to completing a step, the remaining duration is assuming you started the job at step_id = 1. Once a step completes, the [last_executed_step_id] column will have a value and the remaining duration will include all steps after it. Finally, the time that the current step has been running is not taken into account in calculating the remaining duration.

- The CTE_JOBS_RUNNING and CTE_JOB_STEPS_REMAINING_DURATION common table expressions are joined together and the results are inserted into the ACTIVE_JOBS table.

- The final step in the procedure is to insert a row into the ACTIVE_JOBS_REFRESH table using the value from the @REFRESH_KEY variable and the current date and time.

- While the procedure is running, any query on the ACTIVE_JOBS table should use the filter on the RefreshKey from the MAX RefreshDate in the ACTIVE_JOBS_REFRESH table so that rows being inserted are not considered until all rows have been inserted.

Code Demo

In this section I will go over the steps to review the output from the SQL Agent jobs. For the sake of simplicity, you should follow these steps using a SQL Server instance that does not have any SQL Agent jobs running.

STEP 1: Deploy the sample code by following the instructions in the sample code (link is below in Next Steps).

STEP 2: Run the following SQL Agent jobs to populate the data mart with any existing SQL Agent jobs and their history:

- PROCESS SQL AGENT DATA MART DIMENSIONS

- PROCESS SQL AGENT DATA MART HISTORY

STEP 3: Create a SQL AGENT DATA MART TEST SQL Agent job with a single step named STEP 1 configured as follows:

- Type: Transact-SQL script (T-SQL)

- Database: SQL_AGENT_DATA_MART (or the name of the database you created if different)

- Command as shown below:

EXEC [dbo].[SimulateJobStepDuration] @MINIMUM_MINUTES = 2 , @MAXIMUM_MINUTES = 4;

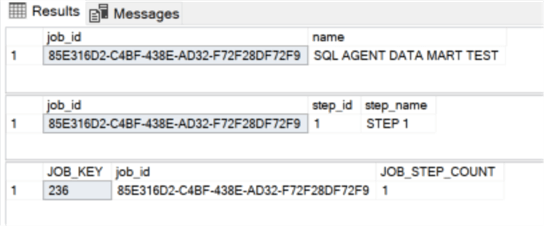

STEP 4: Run the PROCESS SQL AGENT DATA MART DIMENSIONS SQL Agent job. Run the queries as shown below to see the output from the job:

USE SQL_AGENT_DATA_MART GO DECLARE @JOB_KEY INT; SELECT @JOB_KEY = MAX(JOB_KEY) FROM dbo.sysjobs; SELECT job_id , [name] FROM dbo.sysjobs WHERE JOB_KEY = @JOB_KEY; SELECT job_id , step_id , step_name FROM dbo.sysjobsteps WHERE JOB_KEY = @JOB_KEY; SELECT * FROM dbo.JOB_CURRENT WHERE JOB_KEY = @JOB_KEY;

The following is the output from the above query, showing the dimension tables populated:

STEP 5: Run the SQL AGENT DATA MART TEST SQL Agent job.

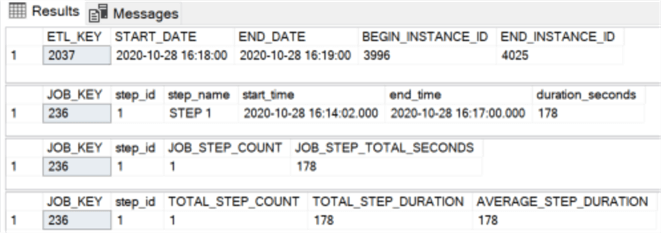

STEP 6: Run the PROCESS SQL AGENT DATA MART HISTORY SQL Agent job. Run the queries as shown below to see the output from the job:

USE [SQL_AGENT_DATA_MART]; GO -- View the job state from the latest run SELECT TOP 1 [ETL_KEY] , [START_DATE] , [END_DATE] , [BEGIN_INSTANCE_ID] , END_INSTANCE_ID FROM [dbo].[ETL_HISTORY_LOG] ORDER BY [ETL_KEY] DESC; DECLARE @JOB_KEY INT; SELECT @JOB_KEY = MAX(JOB_KEY) FROM dbo.sysjobs; SELECT JOB_KEY , step_id , step_name , start_time , end_time , duration_seconds FROM dbo.JOB_STEP_INSTANCE WHERE JOB_KEY = @JOB_KEY SELECT * FROM [dbo].[JOB_STEP_DURATION] WHERE JOB_KEY = @JOB_KEY; SELECT * FROM [dbo].[JOB_STEP_AVERAGE_DURATION] WHERE JOB_KEY = @JOB_KEY;

The following is the output from the above query, showing the fact tables populated:

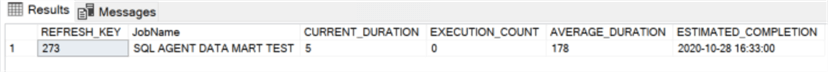

STEP 7: Run the PROCESS SQL AGENT DATA MART HISTORY SQL Agent job. After starting the job, run the Run the PROCESS SQL AGENT DATA MART ACTIVITY job. Run the following query to see the active job:

USE [SQL_AGENT_DATA_MART]; GO SELECT* FROM[dbo].[vACTIVE_JOBS];

The following is the output from the above query, showing the details on the active job:

The above output shows the active job, how long it has been running (CURRENT_DURATION), the average duration, and the estimated completion time based on when the job started and its average duration.

Next Steps

- You can deploy the sample code in this tip to a database on a SQL Server instance where you are running some SQL Server Agent jobs.

- Follow the detailed instructions in the sample code download for deploying the code.

- Leverage the techniques presented in this tip to implement ETL processes that do incremental loads instead of following the truncate and load pattern.

- Take a look at other tips on SQL Server Agent.

About the author

Ray Barley is a Principal Architect at IT Resource Partners and a MSSQLTips.com BI Expert.

Ray Barley is a Principal Architect at IT Resource Partners and a MSSQLTips.com BI Expert.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2020-11-16