By: Ron L'Esteve | Updated: 2021-02-25 | Comments | Related: > Azure Synapse Analytics

Problem

The desire to get near real-time insights on data stored in a transactional system such as Cosmos DB has been a long-standing goal and need for many organizations. Azure Synapse Link for Azure Cosmos DB is a cloud-native hybrid transactional and analytical processing (HTAP) capability that allows users to run near real-time analytics over operational data in Azure Cosmos DB. Data Engineers, Business Analysts and Data Scientists now have the ability to use Spark or SQL pools to get near real-time insights into their data without impacting the performance of their transactional workloads in Cosmos DB. How can we get started with Azure Synapse Link for Cosmos DB?

Solution

There are numerous advantages to Azure Synapse Link for Azure Cosmos DB including reduced complexity since a near real-time analytical store either reduces or eliminates the need for complex E-T-L or change feed job processes. Additionally, there will be little to no impact on operational workloads since the analytical workloads are rendered independently of the transactional workloads and does not consume the provisioned operational throughput. Additionally, it is optimized for large scale analytics workloads by leveraging the power of Spark and SQL on-demand pools which makes it cost effective due to the highly elastic Azure Synapse Analytics compute engines. With a column oriented analytical store for workloads on operational data including aggregations and more, along with decoupled performance for analytical workloads, Azure Synapse Link for Azure Cosmos DB enables and empowers self-service, near real-time insights on transactional data.

In this article, we will learn how to 1) create a basic Azure Cosmos DB Account enabled for Analytical Storage, 2) create a Cosmos DB linked service in Azure Synapse Analytics, and 3) Aggregate and Query Cosmos DB data with Spark from a Synapse Workspace notebook.

Create an Azure Cosmos DB Account

The first step to this process would be to create an Azure Cosmos DB Account from the Azure Portal.

Ensure that the account details are configured as desired and create the Azure Cosmos DB Account.

Enable Azure Synapse Link

Once the Cosmos DB Account is created, we will need to enable the Azure Synapse Link which by default is set to ‘Off'. This can be achieved by clicking on the Azure Synapse Link feature and Enabling Azure Synapse Link.

Once Azure Synapse Link is enabled, the Status will be changed to On.

Create a Cosmos DB Container and Database

Now that we have an Azure Cosmos DB account that is enabled for Azure Synapse Link, we can create a database and container. To get started, the Quick Start section within Cosmos DB allows for an easier experience of choosing a platform, creating a database and container and then getting started with a Cosmos DB notebook to run the code to import data.

For the purposes of my demo, I have chosen to use the Python platform, however, notice the additional wide range of platform options to choose from.

Let's begin by creating a database and container through the UI.

We'll need to configure the Database name, throughput, container name, partition key, and finally it important to remember to switch on the Analytical Store.

Import Data into Azure Cosmos DB

Now that we have created and configured the details for the database and container, let's create a new notebook to import data into the Cosmos database container.

Let's start by reading the database and container that we created in the previous step. Be sure to check out Azure Cosmos DB Python examples for additional API reference commands that could be used within the notebook code.

Also read Configure and use Azure Synapse Link for Azure Cosmos DB (preview) for more detail on additional code snippets including how to define and update the analytical store time to live with the analytical_storage_ttl command.

import azure.cosmos

from azure.cosmos.partition_key import PartitionKey

database = cosmos_client.get_database_client('RetailDemo')

print('Database RetailDemo Read')

container = database.get_container_client('WebsiteData')

print('Container WebsiteData Read')

Once the data is read, can update the throughput of the container to allow for a quicker upload by using the following code.

old_throughput = container.read_offer().offer_throughput

new_throughput = container.replace_throughput(1000).offer_throughput

print("Container WebsiteData's throughput updated from {} RU/s to {} RU/s".format(old_throughput, new_throughput))

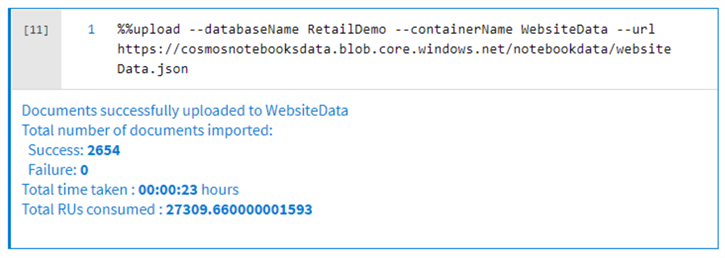

Next, we can use the %%upload magic function to insert items into the container.

%%upload --databaseName RetailDemo --containerName WebsiteData --url https://cosmosnotebooksdata.blob.core.windows.net/notebookdata/websiteData.json

The throughput of the container can be lowered once the data load is complete.

lowered_throughput = container.replace_throughput(400).offer_throughput

print("Container WebsiteData's throughput lowered from {} RU/s to {} RU/s".format(new_throughput, lowered_throughput))

Create a Cosmos DB Linked Service in Azure Synapse Analytics

Now that we have data in the Cosmos DB database container, its time to create a linked service within the Azure Synapse Analytics workspace by following the steps illustrated here:

Remember to select Azure Cosmos DB (SQL API) since that is the configuration of the Azure Cosmos DB API. Note that there is also an option for a Mongo DB API.

Fill in the required connection configuration details and create the new linked service.

Load and Query the Data Using Synapse Spark

Now that we have created a new linked service to the Cosmos DB from Azure Synapse Analytics, let's follow the steps illustrated below to create a new notebook and load the data to a data frame.

I wanted to illustrate the visual differences between an analytical storage enabled container versus one that isn't enabled for analytical storage. Basically, the contained that is enabled for analytical storage will have an additional three lines which represent the columnar storage of the analytical workloads.

Now that we have created a linked service, lets run the following code which will be auto created when we load the data to a dataframe from the previous step. Prior to running the code, remember to create a Synapse Spark pool and attach it to the notebook.

# Read from Cosmos DB analytical store into a Spark DataFrame and display 10 rows from the DataFrame

# To select a preferred list of regions in a multi-region Cosmos DB account, add .option("spark.cosmos.preferredRegions", "<Region1>,<Region2>")

df = spark.read .format("cosmos.olap") .option("spark.synapse.linkedService", "LS_CosmosDb_RetailDemo") .option("spark.cosmos.container", "WebsiteData") .load()

display(df.limit(10))

As we can see from the illustration below, the code ran successfully and used 2 executors and 8 cores for the job. Note that this can be customized to fit your desired workloads. Additionally, the job details can be viewed in the Spark UI.

Below is a preview of the top ten records from the data frame.

Next, lets run an aggregation on the data set to get a sum of the Price column and then display the dataframe.

from pyspark.sql.functions import * df = df.agg(sum(col('Price'))) df.show()

As we can see from the successfully completed job run, we were able to successfully aggregate the price column on the operational Cosmos DB data without having to leverage any custom built E-T-L processes, which demonstrates the capability of leveraging this Azure Synapse Link for Cosmos DB feature for a futuristic approach to empowering self-service data users to have the power to gain insights into their data at near real-time speeds.

Next Steps

- For more information on Azure Synapse Link for Cosmos DB, read: What is Azure Synapse Link for Azure Cosmos DB (Preview)?

- For more information on Azure Cosmos DB, read: Welcome to Azure Cosmos DB.

- For more information on Azure Cosmos DB Analytics Store, read: What is Azure Cosmos DB Analytical Store (Preview)?

About the author

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master�s in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tec

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master�s in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tecThis author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2021-02-25