By: Nai Biao Zhou | Updated: 2021-03-11

Problem

Time series forecasting has been widely employed in organizational activities. With forecasting techniques, a business can make predictions and provide background information for decision-making (Moore et al., 2018). Management may ask IT professionals to study time series and produce forecasts. Developing time series analysis and forecasting skills helps IT professionals tackle these kinds of requests at work.

Solution

A time series, by definition, is a collection of data obtained by observing a response variable (usually denoted by y) over time (William & Sincich, 2012). Many forecasting techniques are available to predict future values of a time series. The best forecasting technique is not always the most complicated one. In certain situations, simple methods may work better than more complicated ones. In this article, we explore four simple time series forecasting methods: the mean method, the naïve method, the seasonal naïve method, and the simple moving average method.

We start with two simple forecasting methods: the mean and naïve methods. People may have already applied these two methods in daily life, even though their mathematical background did not include a study of time series and forecasting.

Let us look at a real-world situation where we can apply these two time series models. Edgewood Solutions started MSSQLTips.com in 2006. From then on, expert authors join the MSSQLTips.com team every year (MSSQLTips, 2021). The Table 1 dataset presents the numbers of newly joined team members recorded every year. In this article, we always assume time series data is exactly evenly spaced. The CTO at Edgewood Solutions, Jeremy Kadlec, wants to forecast the number of new members in 2021.

Applying no knowledge of time series analysis, we can use the mean (or average) of these numbers to predict the yearly number of new MSSQLTips.com team members. When adopting this algorithm, we weight all prior observations equally to forecast the future values of the time series. We get the mean value through this calculation:

$$\bar{y} = \frac{4 + 8 + 12 + \cdots + 15 + 11}{15} = \frac{221}{15} \approx 14.7$$

We can then predict that the MSSQLTips.com team welcomes 15 new members in the year 2021. This way of making a prediction is called the mean (or average) method, which uses the mean (or average) of the historical data to predict all future values. The mean method is suitable for data that fluctuates around a constant mean. Mathematically, we can write this forecasting method in this form, where $\hat{y}_t$ denotes the point forecast at the time point t:

$$\hat{y}_t = \frac{1}{t-1}\sum_{i=1}^{t-1} y_i$$

Another simple forecasting method is the naive method. Convenience store owners may like to use this method. For example, if the ice cream sales were $205 yesterday, they forecast the sales will be $205 today. They use yesterday’s sales to predict today’s sales. Similarly, they can use today’s sales to predict tomorrow’s sales. The naive method uses only the most recent observation as future values of the time series. We can use the following mathematical expression to represent the method:

$$\hat{y}_t = y_{t-1}$$

where $\hat{y}$ denotes a point forecast, $y$ denotes an observed value, and the subscripts t and (t-1) denote two adjacent time points.

We now apply this method to forecast the number of new members in 2021. Since there were 11 new members in 2020, we predict that 11 experts will join the team in 2021. As the name implies, the naïve method is simple. It reflects the most recent changes, but it does not filter out any random noise.
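
The two calculations above can be reproduced in a few lines of base R. This is only a minimal sketch: the variable names are illustrative, and the values are the yearly counts from 2006 to 2020 used throughout this article.

```r
# Yearly numbers of new team members, 2006 to 2020
new_members <- c(4, 8, 12, 14, 22, 27, 14, 17, 17, 10, 12, 16, 22, 15, 11)

# Mean method: every future value is forecast by the average of all observations
mean_forecast <- mean(new_members)

# Naive method: every future value is forecast by the most recent observation
naive_forecast <- new_members[length(new_members)]

round(mean_forecast)  # forecast for 2021 using the mean method
naive_forecast        # forecast for 2021 using the naive method
```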

The mean method makes use of every piece of historical information equally to produce forecasts. At the other extreme, the naïve method takes only the most recent observation. The simple moving average method, which sits between these two extremes, uses several of the most recent observations to generate a forecast (Stevenson, 2012). For example, given the numbers of newly joined members in the years 2017, 2018, and 2019, we compute the three-period moving average forecast for the year 2020:

$$\hat{y}_{2020} = \frac{16 + 22 + 15}{3} \approx 17.7$$

At the end of 2020, the actual value, which is 11, becomes available. Then, we can compute the moving average forecast for the year 2021:

$$\hat{y}_{2021} = \frac{22 + 15 + 11}{3} = 16$$

The calculation averages only recent observations and then moves along to the next time period; therefore, we call these computed averages one-sided moving averages, not weighted averages. This method can handle step changes or gradual changes in the level of the series. However, it works best for a time series that fluctuates around an average (Stevenson, 2012). Another primary way of using the moving average method is to average the time series values over adjacent periods and obtain two-sided moving averages. We concentrate on the one-sided moving averages in this article.
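
As a rough illustration of the one-sided (trailing) calculation, the base R sketch below averages the last three observations that are available at the time each forecast is made. The helper name `trailing_ma_forecast` is hypothetical and not part of any package.

```r
# Yearly numbers of new team members, 2006 to 2020
new_members <- c(4, 8, 12, 14, 22, 27, 14, 17, 17, 10, 12, 16, 22, 15, 11)

# Hypothetical helper: forecast the next period as the mean of the last n observations
trailing_ma_forecast <- function(x, n) mean(tail(x, n))

# Forecast for 2020 uses the data available at the end of 2019 (2017, 2018, 2019)
fc_2020 <- trailing_ma_forecast(head(new_members, -1), n = 3)

# Forecast for 2021 uses the data available at the end of 2020 (2018, 2019, 2020)
fc_2021 <- trailing_ma_forecast(new_members, n = 3)

c(fc_2020 = fc_2020, fc_2021 = fc_2021)
```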

We have used three forecasting methods to predict the number of new team members in 2021 and obtained three different forecasts: 15, 11, and 16. When the actual number becomes available at the end of 2021, it is likely that none of them will be exactly correct, which can lead to skepticism about forecasting. In reality, people often criticize a forecast for being incorrect. A misunderstanding of forecasting is one of the most fundamental causes of this skepticism (Goodwin, 2018). With an understanding of some forecasting techniques, we can produce forecasts and interpret them correctly.

To further explain these forecasting methods, the rest of the article is organized as follows. Section 1 explores the characteristics of a time series and performs time series analysis. The section also uses some R commands to visualize time series and decompose a time series into three components for closer study. Section 2 covers some common features of various forecasting techniques, applies four forecasting methods, and presents measures to evaluate forecast accuracy. Each method has a corresponding R function. The section also demonstrates how to use an R function to test residuals.

The author tested R code with Microsoft R Open 4.0.2 on Windows 10 Home 10.0 <X64>. For the sake of demonstration, the sample code uses some time series from the "fpp2" open source packages. Several forecasting packages are capable of performing time-series data analysis. This article uses the "forecast" package, a rock-solid framework for time series forecasting (lm, 2020). The article also uses the function "sma()" in the "smooth" package to compute moving averages.

1 – Trend-Seasonal Analysis

Trend-seasonal analysis is one of the most important methods for analyzing time series in business. This method estimates the four basic components of a time series: secular trend (T), cyclical effect (C), seasonal variation (S), and residual effect (R). Since the primary goal of time series analysis is to create forecasts (Siegel, 2012), the first step in time series analysis is to carefully examine a plot of the data for validation (Shumway & Stoffer, 2016).

1.1 Visualizing Time Series

Visualization helps discover the underlying behavior of a time series and detect time series anomalies. We usually see several distinct patterns in a time series. Based on these patterns, we can then select appropriate analysis methods. Many tools, for example, R, Python, and Excel are capable of visualizing time series. We often present time series data in a time series plot, which plots each observation against the time at which we measured (Moore et al., 2018).

When performing time series analysis in R, we can store a time series as a time series object (i.e., a ts object). For example, we use the following R commands to store the data shown in Table 1. When the time intervals are less than one year, for example, "monthly," we should use the "frequency" argument in the function "ts". For monthly time series, we set frequency = 12, while for quarterly time series, we set frequency = 4 (Coghlan, 2018). The "autoplot()" command automatically produces an appropriate plot based on the first argument. When we pass a ts object to the command, the command produces a time series plot.

library(forecast)

y <- ts(c(4,8,12,14,22,27,14,17,17,10,12,16,22,15,11), start=2006)

autoplot(y, xlab = "Year", ylab = "Number of Newly Joined Members")
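
To make the role of the "frequency" argument more concrete, here is a small base R sketch. The series values are made up purely for illustration and do not come from the article's data sets.

```r
# Illustrative values only; not taken from the article's data sets
monthly   <- ts(1:24, frequency = 12, start = c(2019, 1))  # 24 months from January 2019
quarterly <- ts(1:8,  frequency = 4,  start = c(2019, 1))  # 8 quarters from Q1 2019

frequency(monthly)   # number of observations per year
end(monthly)         # year and period of the last observation
cycle(quarterly)     # position of each observation within its year

# window() extracts a subset of a series, here the observations from 2020 onward
monthly_2020 <- window(monthly, start = c(2020, 1))
```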

Figure 1 shows the yearly number of new members from 2006 to 2020. The time series plot indicates that MSSQLTips.com started with four authors. From 2006 to 2011, the number of newly joined members increased every year, and the number reached its first peak in 2011. The number dropped suddenly in 2012. Then, the number went up and down and reached a low point in 2015. The number in 2011 seems to be an outlier, and the other numbers fluctuate around the average.

Figure 1 The yearly numbers of new team members observed from 2006 to 2020

The time series presented in Table 1 is collected on an annual basis. There was no chance to model a within-year pattern from the observed values recorded once a year; therefore, the plot did not demonstrate seasonality. Seasonality is a characteristic of the time series in which data present predictable and somewhat regular changes that repeat every calendar year. The "fpp2" package contains a monthly Australian electricity demand series (Hyndman & Athanasopoulos, 2018). We use the following R code to plot the time series. It is worth noting that the function "window()" extracts a subset of the time series.

library(fpp2)

aelec <- window(elec, start=1980)

autoplot(aelec, xlab ="Year", ylab = "GWh")

Figure 2 illustrates the monthly Australian electricity demand from 1980 to 1995. In the time series plot, we observe an increasing trend in demand as well as a seasonal pattern. At the start of each year, the demand was at a low point. Then the demand rose. The demand might fall in April, but it usually reached a peak in July. After July, the demand decreased. For the rest of the year, the demand might fluctuate, but it reached the next low point at the end of the year. This pattern repeats every year from 1980 to 1995.

Figure 2 Monthly Australian electricity demand from 1980–1995

In Figure 2, the values fluctuate around the trend, and the magnitude of the seasonal pattern stays nearly within a band of constant width. In practice, we may find that the magnitude of the seasonal pattern increases over time, as shown in Figure 3: the variability spreads out as the trend increases. We executed the following R commands to produce the figure:

library(fpp2)

autoplot(a10, xlab="Year", ylab="$ million")

Figure 3 Monthly sales of antidiabetic drugs in Australia

1.2 Components of a Time Series

We have seen several time series plots and observed some patterns in time series. These patterns comprise one or several components combined to yield the time series data (Gerbing, 2016). We can analyze each component separately and then combine each component's predictions to produce forecasts (Hanke & Wichern, 2014). The approach of estimating four fundamental components of a time series is called trend-seasonal analysis. Here are the definitions of four components:

(1) Secular trend (T): A long-term increase or decrease in the data. The form of the trend pattern may be linear or non-linear.

(2) Cyclical effect (C): The gradual up-and-down fluctuation (sometimes called business cycles) against the secular trend where the fluctuations are not of a fixed frequency. For most business and economic data, the cycle in the cyclic component is over one year.

(3) Seasonal variation (S): The fluctuations that recur during specific portions of the year (e.g., monthly or quarterly). In the business and financial world, we usually measure the seasonal component in quarters.

(4) Residual effect (R): Residual variations that remain after the secular, cyclical, and seasonal components have been removed. The residual effect is purely random.

In practice, the cyclical variation does not always follow any definite trend; therefore, cycles are often challenging to identify. Many people confuse the cyclic component with the seasonal component. We can examine the frequency to distinguish them. If the frequency is unchanging and associated with some aspect of the calendar, then the pattern is seasonal. The cyclic component rarely has a fixed frequency.

Moreover, the magnitude of cycles appears to be more variable than the magnitude of seasonal patterns. The average length of cycles in a time series is longer than the seasonal patterns (Hyndman & Athanasopoulos, 2018). It is not easy to deal with the cyclic component of a time series. For the sake of simplicity, we combine the trend and cycle into a single trend-cycle component (or simply call it the trend component). Then, we concentrate on only three components, T, S, and R (Hyndman & Athanasopoulos, 2018). We visualize these components in Section 1.3.

1.3 The Additive vs. The Multiplicative Model

The trend (T), cyclic (C), seasonal (S), and residual (R) components combine to form the pattern underlying the time series. To keep things relatively simple, we combined the cyclic component into the trend component. Therefore, we consider only three components, T, S, and R. To describe the mathematical relationship between the components and the original series, we have two widely used models: additive and multiplicative. The additive model treats the time series values as a sum of these components:

$$y_t = T_t + S_t + R_t$$

On the other hand, the multiplicative model treats the time series values as the product of these components:

$$y_t = T_t \times S_t \times R_t$$

The additive model expresses the S and R components as a fixed number of units above or below the underlying trend at a time point. In contrast, the multiplicative model expresses the S and R components as percentages above or below the underlying trend at a time point. For the additive model, the variations of the S and R components change little over time. The time series illustrated in Figure 2 shows this kind of behavior. However, the series in Figure 3, which follows the multiplicative model, reveals a distinct pattern in which the S and R components increase when the T component increases. We can write the multiplicative model in the form of an additive model by taking natural logarithms, so that $\ln y_t = \ln T_t + \ln S_t + \ln R_t$:

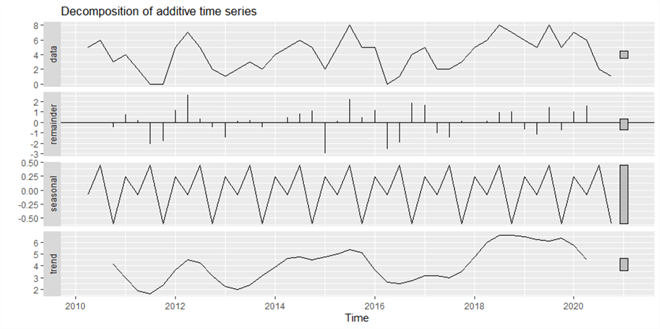
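
The logarithm relationship can be checked numerically. The sketch below is an illustration under assumptions: it uses "AirPassengers" from R's built-in "datasets" package as a stand-in for a series whose seasonal swings grow with the trend, rather than the series shown in Figure 3.

```r
# AirPassengers is a stand-in series, used only because it ships with base R
y <- AirPassengers

# Decompose the logged series additively: log(y) = T + S + R
log_comp <- decompose(log(y), type = "additive")

# Exponentiating each additive part turns the sum back into a product
rebuilt <- exp(log_comp$trend) * exp(log_comp$seasonal) * exp(log_comp$random)

# The trend is NA at both ends (centred moving average), so compare the rest
ok <- !is.na(rebuilt)
all.equal(as.numeric(rebuilt[ok]), as.numeric(y[ok]))
```

If the comparison returns TRUE, the product of the back-transformed components reproduces the original values wherever the trend is defined.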

The function "decompose()", which belongs to R's built-in "stats" package, decomposes a seasonal time series into the trend, seasonal and residual components. We use the following R code to decompose the time series shown in Figure 2. The output of the R code, shown in Figure 4, illustrates the three components. Then we can obtain forecasts by imposing the forecasts produced from the seasonal component on the forecasts from the trend component.

library(fpp2)

library(forecast)

aelec <- window(elec, start=1980)

aelecComp <- decompose(aelec,type=c("additive"))

autoplot(aelecComp)

Figure 4 The decomposition of the additive time series

Note that the "type" argument used in the function specifies the type of the model. The time series illustrated in Figure 3 is a multiplicative model. We use the following R code to decompose the time series. Figure 5 shows the three components, T, S, and R. We also can access the estimated values of the T, S, and R components through variables "a10Comp$trend," "a10Comp$seasonal" and "a10Comp$random," respectively.

library(fpp2)

library(forecast)

a10Comp <- decompose(a10,type=c("multiplicative"))

autoplot(a10Comp)

Figure 5 The decomposition of the multiplicative time series

2 – Forecasts Based on Time Series

A forecast is a prediction of a future value of a time series (Moore et al., 2018). Most people are familiar with the weather forecast which meteorologists prepare for us. In daily business activities, we perform forecasting in many situations, such as budgeting and inventory management. Even though we have scientific methods, forecasting is not an exact science, but is equally art and science. Not only should we create sophisticated mathematical models, but we should apply experience, judgment, and intuition to choose the right models. No single model works all the time. We should continuously evaluate different models and always select appropriate ones.

2.1 Exploring Characteristics of Forecasting

Knowing no other factors that may influence a time series, we assume we can estimate future values of the series from the past values. However, many people say that time series forecasting is always wrong because a forecast does not precisely hit the number it predicts. This skepticism comes from several causes, but perhaps the most fundamental is a misunderstanding of the forecast. We should know some characteristics about forecasting, and then we can interpret forecasts correctly. Even though forecasting techniques vary widely, Stevenson summarized several forecasting characteristics in common (Stevenson, 2012):

(1) Forecasting techniques generally assume that the trend, cyclic, and seasonal components are stable, and past patterns will continue.

(2) Forecast errors are unavoidable because time series contain random errors.

(3) A forecast usually represents an estimate of average. Forecasts for a group are more accurate than forecasts for individuals in the group.

(4) Longer-range forecasts tend to be less accurate than short-range forecasts because the former contains more uncertainties than the latter.

2.2 Simple Forecasting Methods for Time Series

The time series differs from cross-sectional data in that the sequence of observations in a time series is critical. The historical data in the time series can provide clues about the future. In this section, we explore these forecasting methods:

- The mean method

- The naïve method

- The seasonal naïve method

- The simple moving average method

These forecasting techniques are easy to understand and use. Business users understand the limitations of these techniques, adjust forecasts accordingly, and apply them in the appropriate circumstances. Not surprisingly, simple forecasting techniques enjoy widespread popularity, while sophisticated techniques lack trust (Stevenson, 2012). As Droke mentioned: "Nothing distills the essence of supply and demand like the chart. And nothing distills the chart quite like the moving average" (Droke, 2001).

2.2.1 Preparing Data

Aaron Bertrand is a passionate technologist with rich experience in SQL Server Technologies. He joined the MSSQLTips.com team in 2010. From then on, he has been writing articles for MSSQLTips.com. As of this writing, he has contributed 177 articles. Table 2 shows his quarterly article contributions from 2010 to 2020.

In practice, we may have different kinds of time series data. For example, in some circumstances, time series are only approximately evenly spaced. Sometimes, even though time series are evenly spaced, some values are missing. For simplicity, in this article, we concentrate on analyzing evenly spaced time series with no missing values. We use the following R code to construct a time series object and plot the time series. Figure 6 illustrates the time series plot, which does not reveal an apparent seasonality.

library(forecast)

contributions <- c(5,6,3,4,2,0,0,5,7,5,2,1,2,3,2,4,5,6,5,2,5,8,5,5,0,1,4,5,2,2,3,5,6,8,7,6,5,8,5,7,6,2,1)

contributions.ts <- ts(contributions, frequency = 4, start = c(2010,2))

autoplot(contributions.ts,xlab = "Year", ylab = "Number of Tips")

Figure 6 Aaron’s quarterly article contributions from 2010 to 2020

We do not forecast noise; therefore, we should remove noise and detect the stable components. As we mentioned in Section 1.2, we concentrate on these three components: T, S, and R. In order to identify these components, we can invoke the function "decompose()" in R. The following code demonstrates a way of decomposing series, accessing these components, and plotting these components.

contributions.comp <- decompose(contributions.ts)

contributions.comp$trend      # Access the trend component

contributions.comp$seasonal   # Access the seasonal component

contributions.comp$random     # Access the residual component

autoplot(contributions.comp)

Figure 7 illustrates the three components. At any time point, a data value in the time series is the three components' sum. A remainder (i.e., residual) above the mid-line indicates that a data value is larger than the sum of T and S components. If a remainder is below the mid-line, we can conclude that the data value is smaller than the sum of T and S components. Even though the plot illustrates an S component, the S component's magnitude is much smaller than the T component. Therefore, we say that the seasonality is weak. Although the T component moves up and down as time goes on, it exhibits a slightly upward trend.
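
The statement that each data value is the sum of the three components can be verified with base R alone. The following is a minimal sketch; the names `comp`, `rebuilt`, and `ok` are illustrative.

```r
contributions <- c(5,6,3,4,2,0,0,5,7,5,2,1,2,3,2,4,5,6,5,2,5,8,5,5,0,1,4,5,
                   2,2,3,5,6,8,7,6,5,8,5,7,6,2,1)
contributions.ts <- ts(contributions, frequency = 4, start = c(2010, 2))

comp <- decompose(contributions.ts)  # additive model by default

# Sum of the three components; NA where the centred moving-average trend is undefined
rebuilt <- comp$trend + comp$seasonal + comp$random
ok <- !is.na(rebuilt)
all.equal(as.numeric(rebuilt[ok]), as.numeric(contributions.ts[ok]))

# Compare the size of the seasonal swing with the level of the trend
range(comp$seasonal)
range(comp$trend, na.rm = TRUE)
```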

Figure 7 The time series decomposition into T, S and R components.

2.2.2 The Mean Method

Assuming every piece of data in a time series is equally useful to predict all future values, we use the average of the time series to represent the forecasts. This method works best when a time series does not contain significant T and S components, such as the time series shown in Figure 7. The forecasts using this method are stable, but they may not recognize all the patterns. We can use the following formula to implement this method (Hyndman & Athanasopoulos, 2018):

$$\hat{y}_{T+h|T} = \bar{y} = \frac{y_1 + \cdots + y_T}{T}$$

where $\hat{y}_{T+h|T}$ denotes the estimate of $y_{T+h}$ based on the values $y_1, \ldots, y_T$, and $h$ is the forecast horizon.

Averaging numeric values is a simple calculation. We can compute the mean by hand or with tools such as Excel. Substituting the values in Table 2 into the formula, we find the mean value, i.e., 4.069767. The number of contributions is always an integer, and we recommend that a forecast use the same numeric format as the historical data. Therefore, using the mean method, loosely speaking, we predict Aaron will write four articles every quarter in 2021.

The mean method presents us with a single number (i.e., point forecast). A point forecast, usually the mean of the probability distribution, represents an estimate of the average number of contributions if the period, such as the first quarter in 2021, repeats many times over. However, we can only see one instance of the first quarter in 2021; therefore, we never know the real average. To make the forecasting more meaningful, we should know other statistical information about the forecasts, such as prediction intervals.

A prediction interval is an interval estimate for an individual future observation. A 95% prediction interval indicates that, if a future event repeats, the chance that a specific characteristic measured from the event falls within the prediction interval is 95%. The function "meanf()" in the "forecast" package can return forecasts and prediction intervals. The following R statements produce the forecasting model, plot the forecasts, and print the model summary.

library(forecast)

contributions <- c(5,6,3,4,2,0,0,5,7,5,2,1,2,3,2,4,5,6,5,2,5,8,5,5,0,1,4,5,2,2,3,5,6,8,7,6,5,8,5,7,6,2,1)

contributions.ts <- ts(contributions, frequency = 4, start = c(2010,2))

contributions.fc <- meanf(contributions.ts, h=4)   # Use meanf() to forecast quarterly contributions in 2021

autoplot(contributions.fc,xlab = "Year", ylab = "Contributions")   # Plot the forecasts

summary(contributions.fc)   # Summarize the forecasts

Figure 8 illustrates the forecasts of the number of articles Aaron will write in 2021. The short horizontal blue line on the right represents the point forecasts for the year 2021. From the plot, we can also find 80% and 95% prediction intervals. The 95% prediction interval contains the true future value with a probability of 95%, while the chance that the 80% prediction interval contains the true value is 80%. The 95% prediction interval is wider than the 80% prediction interval.

Figure 8 Predict number of Aaron’s contributions using the mean method

The "summary()" function produces the model information, error measures, point forecasts, an 80% prediction interval for the forecasts, and a 95% prediction interval, shown as follows. We observe some impossible values in the intervals since the number of articles should be non-negative. These prediction intervals do not seem trustworthy. For the sake of simplicity, we can adjust the intervals by removing all impossible values.

The output shows that, loosely speaking, Aaron will write four articles every quarter in 2021, with a 95% prediction interval of [0, 9]. Even though we do not know the true value, we are 95% confident that the true value is between 0 and 9. The mean method does not use the ordering information in the time series; therefore, all point forecasts have the same value and all intervals have the same width.

Forecast method: Mean

Model Information:

$mu

[1] 4.069767

$mu.se

[1] 0.3464667

$sd

[1] 2.271934

$bootstrap

[1] FALSE

$call

meanf(y = contributions.ts, h = 4)

attr(,"class")

[1] "meanf"

Error measures:

ME RMSE MAE MPE MAPE MASE ACF1

Training set -1.239117e-16 2.245361 1.925365 -Inf Inf 0.736169 0.5011576

Forecasts:

Point Forecast Lo 80 Hi 80 Lo 95 Hi 95

2021 Q1 4.069767 1.07743 7.062105 -0.5681876 8.707722

2021 Q2 4.069767 1.07743 7.062105 -0.5681876 8.707722

2021 Q3 4.069767 1.07743 7.062105 -0.5681876 8.707722

2021 Q4 4.069767 1.07743 7.062105 -0.5681876 8.707722
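
The intervals in this output can be approximated by hand. The sketch below is not the package's source code; it assumes the interval has the form mean ± t-quantile × s × sqrt(1 + 1/n), which reproduces the numbers printed above. The helper name `half_width` is hypothetical.

```r
contributions <- c(5,6,3,4,2,0,0,5,7,5,2,1,2,3,2,4,5,6,5,2,5,8,5,5,0,1,4,5,
                   2,2,3,5,6,8,7,6,5,8,5,7,6,2,1)
n <- length(contributions)
point_fc <- mean(contributions)   # point forecast, identical for every horizon
s <- sd(contributions)            # sample standard deviation

# Assumed interval form: mean +/- t quantile * s * sqrt(1 + 1/n)
half_width <- function(level) qt(0.5 + level / 2, df = n - 1) * s * sqrt(1 + 1 / n)

pi_80 <- point_fc + c(-1, 1) * half_width(0.80)
pi_95 <- point_fc + c(-1, 1) * half_width(0.95)

# Truncate at zero because the number of articles cannot be negative
pmax(pi_95, 0)
```

Because neither the mean nor the standard deviation depends on the horizon, the interval has the same width for all four quarters.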

>

2.2.3 The Naïve Method

The naïve method, one of the simplest forecasting methods, does not consider the effects of T and S components. When using this method, the forecast for a given period is the value of the previous period. The naïve method seems to work better with data reported on a daily or weekly basis or in situations that show no T or S components (Black, 2013). This method provides a useful benchmark for other forecasting methods.

We use the following equation to represent the naïve method mathematically (Hyndman & Athanasopoulos, 2018):

$$\hat{y}_{T+h|T} = y_T$$

where $\hat{y}_{T+h|T}$ denotes the estimate of $y_{T+h}$ based on the data $y_1, \ldots, y_T$, and $h$ is the forecast horizon.

According to the equation, given h = 4, we predict Aaron will contribute one article in every quarter of 2021. This simple calculation only gives point forecasts. To find the prediction intervals, we use the function "naive()" in the "forecast" package. We use the following R script to visualize the forecasts and print the prediction intervals.

library(forecast)

contributions <- c(5,6,3,4,2,0,0,5,7,5,2,1,2,3,2,4,5,6,5,2,5,8,5,5,0,1,4,5,2,2,3,5,6,8,7,6,5,8,5,7,6,2,1)

contributions.ts <- ts(contributions, frequency = 4, start = c(2010,2))

contributions.fc <- naive(contributions.ts, h=4)   # Use naive() to forecast contributions in 2021

autoplot(contributions.fc)   # Plot the forecasts

summary(contributions.fc)   # Summarize the forecasts

Figure 9 illustrates the forecasts of the number of articles Aaron will write in 2021. The blue line on the right shows the point forecasts for the year 2021. The 80% prediction interval is the dark shaded region, and the 95% prediction interval is the light shaded region. We observe that the longer-range forecasts have wider prediction intervals. When the prediction interval is wider, the forecasts carry more uncertainty, and the predicted values may become less meaningful.

Figure 9 Predict number of contributions using the naive method

The output of the "summary()" function, shown as follows, contains the model information, error measures, point forecasts, 80% prediction intervals for the forecasts, and 95% prediction intervals. By removing all impossible values in the intervals, Aaron’s forecasted number of articles in the first quarter of 2021 is about one, with a 95% prediction interval [0, 5]. That is to say, we are 95% confident that the true number is between 0 and 5.

Forecast method: Naive method

Model Information:

Call: naive(y = contributions.ts, h = 4)

Residual sd: 2.2394

Error measures:

ME RMSE MAE MPE MAPE MASE ACF1

Training set -0.0952381 2.21467 1.857143 -Inf Inf 0.710084 -0.02135027

Forecasts:

Point Forecast Lo 80 Hi 80 Lo 95 Hi 95

2021 Q1 1 -1.838213 3.838213 -3.340673 5.340673

2021 Q2 1 -3.013840 5.013840 -5.138638 7.138638

2021 Q3 1 -3.915930 5.915930 -6.518266 8.518266

2021 Q4 1 -4.676427 6.676427 -7.681346 9.681346
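
The widening of the intervals with the horizon can be reproduced by hand. This sketch assumes a normal-theory interval whose standard error grows with sqrt(h), with the one-step error scale taken as the root mean square of the first differences; under these assumptions it matches the 95% limits printed above.

```r
contributions <- c(5,6,3,4,2,0,0,5,7,5,2,1,2,3,2,4,5,6,5,2,5,8,5,5,0,1,4,5,
                   2,2,3,5,6,8,7,6,5,8,5,7,6,2,1)
h <- 1:4

# Naive point forecast: the last observed value, repeated for every horizon
point_fc <- rep(contributions[length(contributions)], length(h))

# One-step residuals of the naive method are the first differences
res <- diff(contributions)
sigma <- sqrt(mean(res^2))

# Assumed interval form: point forecast +/- z * sigma * sqrt(h)
z <- qnorm(0.975)
lower_95 <- point_fc - z * sigma * sqrt(h)
upper_95 <- point_fc + z * sigma * sqrt(h)

round(cbind(h, lower_95, upper_95), 3)
```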

>

2.2.4 The Seasonal Naïve method

We can make several adaptations to the naïve method. When we have highly seasonal data, the forecast for the current season is the actual value of the last season. For example, if the time series for Aaron’s contributions were seasonal, the forecast for the first quarter of 2021 should be the number of articles he wrote in the first quarter of 2020, i.e., 7. This variation of the naïve method is called the seasonal naïve method. We use data from Academy’s tutorial (Academy, 2013) to show the way of using the seasonal naïve method. We present the data in Table 3.

The time series plot shown in Figure 10 demonstrates the high seasonality and an upward trend as well. Other forecasting methods, for example, the method presented in Academy’s lecture (Academy, 2013), may do better than the seasonal naïve method. However, complex methods do not guarantee accuracy. If we can use a more straightforward method and get the same accuracy level, we prefer the simpler one.

When we have quarterly data, the forecast for the first quarter of a year is equal to the observed value for the first quarter of the previous year. Applying the same rule to the other quarters gives their forecasts. The car sales in quarters 1, 2, 3, and 4 of the year 2020 are 6.3, 5.9, 8.0, and 8.4, respectively. Therefore, the forecasts for quarters 1, 2, 3, and 4 in 2021 are 6.3, 5.9, 8.0, and 8.4, respectively. Hyndman and Athanasopoulos give a mathematical expression to represent this method:

$$\hat{y}_{T+h|T} = y_{T+h-m(k+1)}$$

where m is the seasonal period, and k is the integer part of (h−1)/m.
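
The index arithmetic in this expression is easy to try out in base R. The function name `snaive_point` is a hypothetical helper written for this illustration; it only returns point forecasts and is not the package function.

```r
# Quarterly car sales from 2017 Q1 to 2020 Q4 (in million dollars)
sales <- c(4.8,4.1,6.0,6.5,5.8,5.2,6.8,7.4,6.0,5.6,7.5,7.8,6.3,5.9,8.0,8.4)
m <- 4   # seasonal period for quarterly data

# Hypothetical helper implementing y[T + h - m(k + 1)], with k = integer part of (h - 1) / m
snaive_point <- function(y, m, h) {
  k <- (h - 1) %/% m
  y[length(y) + h - m * (k + 1)]
}

# Forecasts for the eight quarters of 2021 and 2022
fc <- snaive_point(sales, m, h = 1:8)
fc
```

For horizons beyond one year, the formula keeps reusing the last observed year, so the 2022 forecasts repeat the 2021 forecasts.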

The "forecast" package provides the function "snaive()" to build a forecast model. We use the following R statements to produce the forecasting model, plot the forecasts, and print out a summary of the model.

library(forecast)

sales <- c(4.8,4.1,6.0,6.5,5.8,5.2,6.8,7.4,6.0,5.6,7.5,7.8,6.3,5.9,8.0,8.4)

sales.ts <- ts(sales,frequency=4, start=c(2017,1))

autoplot(sales.ts,xlab = "Year", ylab = "Sales (in million dollars)")

sales.fc <- snaive(sales.ts, h=4)   # Use snaive() to forecast sales in 2021

autoplot(sales.fc)   # Plot the forecasts

summary(sales.fc)   # Summarize the forecasts

Figure 11 shows the forecasts for car sales in the four quarters of 2021. The blue line represents the point forecasts, and the shaded regions illustrate the prediction regions. We find that the 80% prediction intervals lie inside the 95% prediction intervals. The seasonal naïve method uses the value from the first quarter of 2020 to predict the first quarter of 2021. The prediction for the second quarter of 2021 uses the second quarter of 2020, and so on. Nevertheless, this method does not recognize the upward trend in the time series.

Figure 11 Predict car sales using the seasonal naïve method

The output of the "summary()" function, shown as follows, contains the model information, error measures, point forecasts, 80% prediction intervals for the forecasts, and 95% prediction intervals. In the first quarter of 2021, the point forecast is 6.3 million dollars, and the 95% prediction interval is [5.0, 7.6]. The prediction intervals express the uncertainty in the forecasts.

Forecast method: Seasonal naive method

Model Information:

Call: snaive(y = sales.ts, h = 4)

Residual sd: 0.2985

Error measures:

ME RMSE MAE MPE MAPE MASE ACF1

Training set 0.6 0.6645801 0.6 9.208278 9.208278 1 0.4693878

Forecasts:

Point Forecast Lo 80 Hi 80 Lo 95 Hi 95

2021 Q1 6.3 5.448306 7.151694 4.997447 7.602553

2021 Q2 5.9 5.048306 6.751694 4.597447 7.202553

2021 Q3 8.0 7.148306 8.851694 6.697447 9.302553

2021 Q4 8.4 7.548306 9.251694 7.097447 9.702553

>

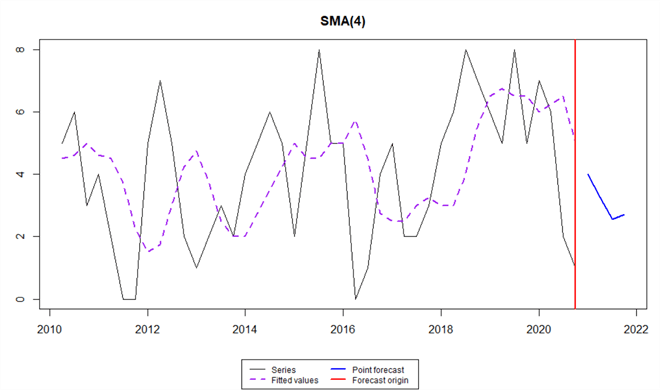

2.2.5 The Simple Moving Average Method

The simple moving average method is, by far, the most widely used (Pring, 2014). We compute an average by adding up a set of data and dividing the sum by the number of items in the set. To "move" the average, we remove the first item in the set, append a new item, and then average the set. When we compute moving averages for a time series, the averages form a new time series. The new time series is smoother than the original because the calculation process removes the rapid fluctuations. The moving average method works best when the time series is trending.

There are two major ways of using moving averages: one-sided moving averages and two-sided moving averages (Hyndman, 2010). We explore the one-sided moving averages in this article. Given a time point, we average a subset that comprises its most recent actual data values. The average is the forecast of the time point. When the time point moves forward, we get one-sided moving averages (or trailing moving averages). The description of the method looks more complicated than it is. If we use N to denote the length of the subset, we can use this equation to compute the moving averages:
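With $y_t$ denoting the observed value in period $t$ and $\hat{y}_{t+1}$ the forecast for period $t+1$ (this notation is assumed here), the one-sided moving average of length $N$ can be written as:

$$\hat{y}_{t+1} = \frac{y_t + y_{t-1} + \cdots + y_{t-N+1}}{N} = \frac{1}{N}\sum_{i=0}^{N-1} y_{t-i}$$

Only values observed up to period $t$ appear on the right-hand side, which is what makes the average one-sided.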

For the sake of simplicity, the calculation for N = 4 should look like the following equation:
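Taking $y_t$ as the observed value in period $t$ and $\hat{y}_{t+1}$ as the forecast for the next period (assumed notation), the four-point case reads:

$$\hat{y}_{t+1} = \frac{y_t + y_{t-1} + y_{t-2} + y_{t-3}}{4}$$

For instance, if the first four observations are 5, 6, 3, and 4, the forecast for period 5 is $(5 + 6 + 3 + 4)/4 = 4.50$.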

Table 4 contains true values in 43 periods. We apply this method to these values. For the sake of demonstration, we select N = 4, which means we can only start the calculation after we have collected four periods’ data. We calculate the first moving average forecast (i.e., 4.50) for period 5. When the true value (i.e., 2) in period 5 is available, we use it to compute the forecast for period 6, i.e., 3.75. We repeat the process until we find the forecast (i.e., 4.00) for period 44. Therefore, the forecast for the number of contributions in the first quarter of 2021 is four.
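The walk-through above can be sketched in a few lines of base R. The vector below is the same series of 43 values used in the R scripts in this article; the variable names are ours and only illustrative.

```r
# Trailing (one-sided) moving-average forecasts with N = 4, in base R.
contributions <- c(5,6,3,4,2,0,0,5,7,5,2,1,2,3,2,4,5,6,5,2,5,8,
                   5,5,0,1,4,5,2,2,3,5,6,8,7,6,5,8,5,7,6,2,1)
N <- 4
n <- length(contributions)

# The forecast for period t is the mean of the N observations before t,
# so the first forecast is for period N + 1 and the last for period n + 1.
periods <- (N + 1):(n + 1)
sma_forecast <- sapply(periods,
                       function(t) mean(contributions[(t - N):(t - 1)]))
names(sma_forecast) <- periods

# Forecasts for periods 5, 6, and 44
print(sma_forecast[c("5", "6", "44")])
```

The three printed values are 4.50, 3.75, and 4.00, matching the figures quoted in the text.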

This exercise selected four data points; therefore, these averages are called four-point moving averages. When the number of data points, i.e., the subset’s length, is small, the forecast is volatile because each new observation changes the average noticeably. When the length is large, the forecast is stable but reacts slowly to changes in the series. As a rule of thumb, we typically select 1, 2, 3, 4, or 5 data points for a non-seasonal time series. We use the length of an annual cycle as the subset’s length when analyzing seasonal time series, for example, selecting four data points for quarterly data and 12 data points for monthly data (William & Sincich, 2012). Forecast accuracy metrics help select the number of data points. We pick one metric we trust and find out which N gives us better accuracy.
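One way to compare candidate lengths is to compute a one-step-ahead accuracy measure for each N. The sketch below is only an illustration in base R: it uses the RMSE as the metric, and the helper name sma_rmse and its argument first are hypothetical. Every candidate is scored on the same periods so that the comparison is fair.

```r
# Compare moving-average lengths N = 1..5 by one-step-ahead RMSE.
contributions <- c(5,6,3,4,2,0,0,5,7,5,2,1,2,3,2,4,5,6,5,2,5,8,
                   5,5,0,1,4,5,2,2,3,5,6,8,7,6,5,8,5,7,6,2,1)

# Hypothetical helper: RMSE of trailing moving-average forecasts of length N.
# 'first' is the first period scored; it must exceed the largest N compared
# so that every candidate has enough history at each scored period.
sma_rmse <- function(x, N, first = 6) {
  periods <- first:length(x)
  forecasts <- sapply(periods, function(t) mean(x[(t - N):(t - 1)]))
  sqrt(mean((x[periods] - forecasts)^2))
}

candidates <- 1:5
rmse_by_n <- sapply(candidates, function(N) sma_rmse(contributions, N))
names(rmse_by_n) <- candidates

print(round(rmse_by_n, 3))
# Length with the smallest RMSE on this series
print(candidates[which.min(rmse_by_n)])
```

The length chosen this way depends on the series and on the metric, so the result is best treated as a starting point rather than a rule.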

The "smooth" package in R provides the function "sma" to compute the simple moving average (Svetunkov, 2021). We use the following R statements to produce the model, print out a summary of the model, and print the forecasts and prediction intervals.

library(smooth)
contributions <- c(5,6,3,4,2,0,0,5,7,5,2,1,2,3,2,4,5,6,5,2,5,8,5,5,0,1,4,5,2,2,3,5,6,8,7,6,5,8,5,7,6,2,1)
contributions.ts <- ts(contributions, frequency = 4, start = c(2010,2))
# Use sma() to forecast number of Aaron's contributions in 2021
contributions.fc <- sma(contributions.ts, order=4, h=4, silent=FALSE)
# Print model summary
summary(contributions.fc)
# Print the forecasts
fc <- forecast(contributions.fc)
print(fc)

Figure 12 presents the forecasts of the number of articles Aaron will write in 2021. The solid blue line represents the point forecasts for the four quarters in 2021. The plot of the moving averages (i.e., the fitted values) is smoother than the original series. When we increase the value of N, the plot of the moving average becomes flatter. The plot does not illustrate prediction intervals, but we can find the lower bound and upper bound from fc$lower and fc$upper, respectively.

Figure 12 Predicting number of contributions using the simple moving average method

The output of the function "summary()", shown as follows, contains the model information and accuracy measures. The function "forecast()" gives point forecasts and 95% prediction intervals. Because the number of articles cannot be negative, we discard the impossible values below zero. Aaron’s forecasted number of articles in the first quarter of 2021 is then about four, with a 95% prediction interval [0, 9].

> # Print model summary

> summary(contributions.fc)

Time elapsed: 0.07 seconds

Model estimated: SMA(4)

Initial values were produced using backcasting.

Loss function type: MSE; Loss function value: 6.194

Error standard deviation: 2.5488

Sample size: 43

Number of estimated parameters: 2

Number of degrees of freedom: 41

Information criteria:

AIC AICc BIC BICc

204.4430 204.7430 207.9654 208.5296

> # Print the forecasts

> fc <- forecast(contributions.fc)

> print(fc)

Point forecast Lower bound (2.5%) Upper bound (97.5%)

2021 Q1 4.000000 -1.147330 9.147330

2021 Q2 3.250000 -2.055746 8.555746

2021 Q3 2.562500 -2.981717 8.106717

2021 Q4 2.703125 -3.194431 8.600681

2022 Q1 3.128906 -3.281871 9.539683

2022 Q2 2.911133 -3.762586 9.584852

2022 Q3 2.826416 -4.139641 9.792473

2022 Q4 2.892395 -4.381565 10.166355

2023 Q1 2.939713 -4.635420 10.514845

2023 Q2 2.892414 -4.946159 10.730987

>
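The interval [0, 9] quoted for the first quarter comes from adjusting the raw bounds in this output. A minimal base R sketch of that adjustment follows, with the 2021 values copied from the printed forecasts. The rounding to whole articles and the column names are our assumptions.

```r
# Point forecasts and 95% bounds for 2021, copied from the forecast() output.
fc_table <- data.frame(
  quarter = c("2021 Q1", "2021 Q2", "2021 Q3", "2021 Q4"),
  point   = c(4.000000, 3.250000, 2.562500, 2.703125),
  lower   = c(-1.147330, -2.055746, -2.981717, -3.194431),
  upper   = c(9.147330, 8.555746, 8.106717, 8.600681)
)

# Assumption: article counts are non-negative whole numbers, so negative
# lower bounds are clipped at zero and bounds are rounded to whole articles.
fc_table$lower_adj <- pmax(round(fc_table$lower), 0)
fc_table$upper_adj <- round(fc_table$upper)

print(fc_table[, c("quarter", "point", "lower_adj", "upper_adj")])
```

For 2021 Q1 this yields the interval [0, 9] reported in the text.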

We have implemented three methods to forecast how many articles Aaron will write for MSSQLTips.com in each quarter of 2021. Table 5 shows the forecasts produced by the three simple forecasting methods. The RMSE column shows the root-mean-square errors, one of the most meaningful accuracy measures. We introduce the accuracy measures in the next section. Since the 95% prediction intervals are too wide according to the author’s intuition, the forecasts may be accurate but not meaningful. This scenario frequently happens in practice, and we should then look at other techniques. This article, however, concentrates on the four simple forecasting methods.

2.3 Assessing Forecast Accuracy

We used three methods to forecast the numbers of Aaron’s contributions in 2021. To determine which method made a good forecast, we measure forecast accuracies. We can calculate forecast accuracy by analyzing the forecast errors, which are the differences between the actual future values and the corresponding predicted values. The three widely accepted measures are the mean absolute deviation (MAD), the mean absolute percentage error (MAPE), and the root mean squared error (RMSE). Most software packages can automatically calculate the values of these measures.

2.3.1 The Mean Absolute Deviation (MAD)

The mean absolute deviation (MAD) measures forecast accuracy by averaging the absolute values of the forecast errors. Because MAD is easy to understand and calculate, we can use this measure to compare forecasting methods applied to a single time series (Hyndman & Athanasopoulos, 2018). The MAD is in the same units as the original series; therefore, it is not meaningful to compare this measure between forecasts with different units. We can compute the MAD by the following equation:
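With $y_t$ the actual value, $\hat{y}_t$ the forecast at time point $t$, and $n$ the number of forecasts (notation assumed here), the MAD is commonly written as:

$$\mathrm{MAD} = \frac{1}{n}\sum_{t=1}^{n}\left| y_t - \hat{y}_t \right|$$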

Let us compute the MAD of the model produced in Section 2.2.2:
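A minimal base R sketch of this calculation follows. It assumes, as the mean method implies, that the fitted value at every time point is the mean of the whole series; the variable names are illustrative.

```r
# MAD (MAE) of the mean method, computed by hand in base R.
contributions <- c(5,6,3,4,2,0,0,5,7,5,2,1,2,3,2,4,5,6,5,2,5,8,
                   5,5,0,1,4,5,2,2,3,5,6,8,7,6,5,8,5,7,6,2,1)

# Mean method: every fitted value equals the mean of the whole series.
fitted_mean <- mean(contributions)
errors <- contributions - fitted_mean

# Average of the absolute errors
mad_value <- mean(abs(errors))
print(round(mad_value, 6))
```

The printed value is 1.925365.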

MAD has another name, i.e., MAE (the Mean Absolute Error). The "meanf()" function used in Section 2.2.2 obtained a value of 1.925365 for the MAE. The result from the R function agrees with the manual calculation.

2.3.2 The Mean Absolute Percent Error (MAPE)

Different time series may have different units, for example, millions of dollars and gallons. When comparing forecasting methods applied to multiple time series with different units, we can use the mean absolute percentage error (MAPE), which is unit-free. In addition, when the observed values are large in magnitude, forecast errors are easier to interpret as percentages.

To calculate the MAPE, we first find the absolute error at each time point divided by the observed value at this point. We then average these quotients and write the average in percentage. The following expression represents this calculation process:
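Using $y_t$ for the observed value, $\hat{y}_t$ for the forecast, and $n$ for the number of time points (assumed notation), the calculation can be expressed as:

$$\mathrm{MAPE} = \frac{100\%}{n}\sum_{t=1}^{n}\left|\frac{y_t - \hat{y}_t}{y_t}\right|$$

The observed value $y_t$ appears in the denominator, so the measure is undefined whenever an observation equals zero.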

Let us calculate the MAPE to evaluate the model produced by the mean method in Section 2.2.2:
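A minimal base R sketch of this calculation follows, again assuming that the fitted value of the mean method is the mean of the whole series at every time point.

```r
# MAPE of the mean method, computed by hand in base R.
contributions <- c(5,6,3,4,2,0,0,5,7,5,2,1,2,3,2,4,5,6,5,2,5,8,
                   5,5,0,1,4,5,2,2,3,5,6,8,7,6,5,8,5,7,6,2,1)

errors <- contributions - mean(contributions)

# Absolute error divided by the observed value; an observation of zero
# makes the quotient infinite in R.
ape <- abs(errors / contributions)
mape <- 100 * mean(ape)

print(mape)
# Number of zero observations in the series
print(sum(contributions == 0))
```

R evaluates a nonzero number divided by zero as infinite, so the average over all time points is "Inf" as well.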

Because the time series contains values of 0, we cannot compute the MAPE for the forecasts, and the "meanf()" function returns "Inf" in this case. The MAPE has other limitations. For example, if a time series contains very small values, the MAPE can be very large. Kolassa summarized the limitations of the MAPE when he answered a question on the web (Kolassa, 2017).

2.3.3 The Root Mean Squared Error (RMSE)

The root mean squared error (RMSE), one of the most meaningful measures, is the square root of the average of squared errors. We do not want large errors in the forecast; therefore, we want the measure to be sensitive to large errors. When we square the error, a large error results in a larger value, and a small error produces a smaller value. Here is the formula to compute the RMSE:
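With $y_t$ the actual value, $\hat{y}_t$ the forecast, and $n$ the number of forecasts (notation assumed here), the RMSE is commonly written as:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{t=1}^{n}\left( y_t - \hat{y}_t \right)^2}$$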

Let us compute the RMSE to evaluate the model in Section 2.2.2. The value of RMSE computed by the function "meanf()" is 2.245361, which is close to the manual calculation from the following equation.
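A minimal base R sketch of the manual calculation follows. It assumes the fitted value of the mean method is the mean of the whole series and that the squared errors are averaged over all 43 observations.

```r
# RMSE of the mean method, computed by hand in base R.
contributions <- c(5,6,3,4,2,0,0,5,7,5,2,1,2,3,2,4,5,6,5,2,5,8,
                   5,5,0,1,4,5,2,2,3,5,6,8,7,6,5,8,5,7,6,2,1)

errors <- contributions - mean(contributions)

# Square the errors, average them, and take the square root.
rmse <- sqrt(mean(errors^2))
print(round(rmse, 6))
```

The printed value is 2.245361.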

The RMSE is expressed on the scale of the standard deviation of the forecast errors and weights large errors heavily, while the MAD reports the average size of the errors in the units of the original series. The MAPE measures the degree of error in predicting compared with the true value. Each measure tells us a different story about the forecast accuracy. When comparing forecasting methods, a good rule is to choose the method with the smallest RMSE.

2.3.4 Examining the Residuals

We covered four methods to compute point forecasts. However, as Hyndman mentioned, point forecasts can be of almost no value without the accompanying prediction intervals (Hyndman & Athanasopoulos, 2018). Furthermore, when the prediction intervals are wide, for example, the intervals produced in Section 2.2.2, the forecasts may also not be meaningful. To compute a prediction interval at a time point, we consider the forecast at the time point to be a probability distribution. The point forecast is the mean of the distribution. We make the following four standard assumptions (Jost, 2017) for computing prediction intervals:

- Independence: the residuals associated with any two different observations are independent, i.e., the residuals are uncorrelated.

- Unbiasedness: the mean value of the residuals is zero in any thin vertical rectangle in the residual plot. The forecasts are biased if the mean value differs from zero.

- Homoscedasticity: the standard deviation of the errors is the same in any thin rectangle in the residual plot.

- Normality: at any observation, the error component has a normal distribution.

The first two assumptions determine whether a forecasting method uses all information in data to make a prediction. If the residuals do not satisfy the first two assumptions, we can improve the forecasting method. The forecasts might still provide useful information even with residuals that fail the white noise (i.e., a purely random time series) test. The last two assumptions are not restrictive. Moderate departures from these assumptions have less effect on the statistical tests and the confidence interval constructions.

The "forecast" package provides the function "checkresiduals()" to test these assumptions. The function gives the results of a Ljung-Box test, in which a small p-value indicates the data are probably not white noise. Moreover, the function produces a time series plot of the residuals, the corresponding ACF, and a histogram. We invoke this function through the following R script to test the residuals produced from the mean method:

library(forecast)
contributions <- c(5,6,3,4,2,0,0,5,7,5,2,1,2,3,2,4,5,6,5,2,5,8,5,5,0,1,4,5,2,2,3,5,6,8,7,6,5,8,5,7,6,2,1)
contributions.ts <- ts(contributions, frequency = 4, start = c(2010,2))
# Use meanf() to forecast contributions in 2021
contributions.fc <- meanf(contributions.ts, h=4)
# Examining the Residuals
checkresiduals(contributions.fc)

The function prints the result of the Ljung-Box test as follows. The p-value is 0.03228. If we select a significance level of 0.05, we have statistical evidence to reject the null hypothesis that the residuals are independently distributed. When the residuals are not white noise, there is much room for improvement to the forecasting method.

> checkresiduals(contributions.fc)

Ljung-Box test

data: Residuals from Mean

Q* = 15.305, df = 7, p-value = 0.03228

Model df: 1. Total lags used: 8

>
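The same kind of test can be run without the "forecast" package by calling the base R function "Box.test()" on the residuals. The sketch below assumes the residuals of the mean method are the observations minus their overall mean, and it takes the lag (8) and the model degrees of freedom (1) from the output above. We would expect a result comparable to the one printed by "checkresiduals()", although this is not guaranteed for every package version.

```r
# Ljung-Box test on the residuals of the mean method, using base R only.
contributions <- c(5,6,3,4,2,0,0,5,7,5,2,1,2,3,2,4,5,6,5,2,5,8,
                   5,5,0,1,4,5,2,2,3,5,6,8,7,6,5,8,5,7,6,2,1)

# Assumed residuals of the mean method: observation minus overall mean.
res <- contributions - mean(contributions)

# lag = 8 and fitdf = 1 mirror "Total lags used: 8" and "Model df: 1",
# which leaves 8 - 1 = 7 degrees of freedom for the test.
lb <- Box.test(res, lag = 8, type = "Ljung-Box", fitdf = 1)
print(lb)
```

A p-value below the chosen significance level suggests that the residuals are autocorrelated, which is the same reading applied to the "checkresiduals()" output.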

Figure 13 presents the time series plot, the corresponding ACF, and the histogram. The time series plot reveals that the residuals are not random. If one value in the series is greater than the mean, the next value is likely greater than the mean. The ACF plot shows the first spike is outside the blue lines, which means some information in the residuals is useful in forecasting. The histogram has two peaks; therefore, the residuals have a bimodal distribution. According to Professor Hyndman, if residuals do not meet these four assumptions, we can still use the point forecasts. However, we should not take the prediction intervals too seriously (Hyndman, datacamp).

Figure 13 Residual plots produced by the "checkresiduals()" function

Summary

The article just scratched the surface of forecasting techniques, but we hope we have opened some doors for performing time series analysis. We covered four simple forecasting methods: the mean method, the naïve method, the seasonal naïve method, and the simple moving average method. Next, we implemented these methods to forecast time-series data. To compare these methods, we defined some accuracy measures. After examining residual assumptions, we know how to use the forecasts in business activities. We also explored some characteristics of forecasting. Knowing these characteristics helps sweep away skepticism about forecasting.

To practice these methods, we showed a way of using R functions to explore time series data and implement forecasting methods. First, we presented R code for visualizing time series data. We then introduced a function to decompose a time series into three components: trend, seasonal, and residual. Next, we used four R functions to implement the four forecasting methods, respectively, and checked forecast accuracy in the function outputs. Finally, we introduced a function to test the residual assumptions.

Reference

Academy, J. (2013). Excel – Time Series Forecasting – Part 1 of 3. https://youtu.be/gHdYEZA50KE.

Black, K. (2013). Business Statistics: For Contemporary Decision Making (8th Edition). Wiley.

Coghlan, A., (2018). A Little Book of R For Time Series. https://a-little-book-of-r-for-time-series.readthedocs.io/.

Droke, C. (2001). Moving Averages Simplified. Marketplace Books.

Gerbing, D., (2016). Time Series Components. http://web.pdx.edu/~gerbing/515/Resources/ts.pdf.

Goodwin, P., (2018). How to Respond to a Forecasting Sceptic, Foresight: The International Journal of Applied Forecasting, International Institute of Forecasters, issue 48, pages 13-16, Winter.

Hanke, E. J., & Wichern, D. (2014). Business Forecasting (9th Edition). Pearson.

Hyndman, R. J. (datacamp). Fitted values and residuals. https://campus.datacamp.com/courses/forecasting-in-r/benchmark-methods-and-forecast-accuracy?ex=3.

Hyndman, R. J., (2010) Moving Averages. Contribution to the International Encyclopedia of Statistical Science, ed. Miodrag Lovric, Springer. pp.866-869.

Hyndman, R.J., & Athanasopoulos, G. (2018) Forecasting: principles and practice, 2nd edition, OTexts: Melbourne, Australia. https://otexts.com/fpp2/.

Jost, S. (2017). CSC 423: Data Analysis and Regression. http://facweb.cs.depaul.edu/sjost/csc423/.

Kolassa, S. (2017). What are the shortcomings of the Mean Absolute Percentage Error (MAPE)? https://stats.stackexchange.com/questions/299712/what-are-the-shortcomings-of-the-mean-absolute-percentage-error-mape.

Lm, J. (2020). Moving to Tidy Forecasting in R: How to Visualize Time Series Data. https://medium.com/@JoonSF/moving-to-tidy-forecasting-in-r-how-to-visualize-time-series-data-1d0e42aef11a/.

Moore, S. D., McCabe, Y. G., Alwan, C. L., Craig, A. B. & Duckworth, M. W. (2010). The Practice of Statistics for Business and Economics (Third Edition). W. H. Freeman.

MSSQLTips (2021). MSSQLTips Authors. https://www.mssqltips.com/sql-server-mssqltips-authors/

Pring, J. M. (2014). Technical Analysis Explained: The Successful Investor's Guide to Spotting Investment Trends and Turning Points (5th Edition). McGraw-Hill Education.

Siegel, F. A. (2012). Practical Business Statistics (6th Edition). Academic Press.

Shumway, H. R., & Stoffer, S. D. (2016). Time Series Analysis and Its Applications With R Examples (4th Edition). Springer.

Stevenson, J. W. (2012). Operations Management (11th Edition). McGraw-Hill.

Svetunkov, I. (2021). sma() - Simple Moving Average. https://cran.r-project.org/web/packages/smooth/vignettes/sma.html.

William, M., & Sincich, T. (2012). A Second Course in Statistics: Regression Analysis (7th Edition). Prentice Hall.

Next Steps

- The author presented four simple forecasting methods in this article. Because they are simple and easy to understand, people like using them in practice. To learn these methods, we need to practice them with lots of different time series. After gaining much experience, we can build an intuition to determine which method is better for a particular circumstance. However, in some situations, these methods do not work very well. We should then make some improvements or select other techniques. This article concentrated on the trend-seasonal analysis. Another method for analyzing time series is to use the Box–Jenkins ARIMA processes. For further study, the author recommends Professor Hyndman’s book, which is available at https://otexts.com/fpp3/. Rick Dobson also published several articles about time series and forecasting on MSSQLTips.com.

- Check out these data science related tips:

- Basic Concepts of Probability Explained with Examples in SQL Server and R

- Discovering Insights in SQL Server Data with Statistical Hypothesis Testing

- Selecting a Simple Random Sample from a SQL Server Database

- Statistical Parameter Estimation Examples in SQL Server and R

- Using Simple Linear Regression to Make Predictions

- How to Compute Simple Moving Averages with Time Series Data in SQL Server

- Mining Time Series Data by Calculating Moving Averages with T-SQL Code in SQL Server

- Time Series Data Fact and Dimension Tables for SQL Server

- Collecting Time Series Data for Stock Market with SQL Server

- Exponential Moving Average Calculation in SQL Server

- Weighted vs Simple Moving Average with SQL Server T-SQL Code

- Mining Time Series with Exponential Moving Averages in SQL Server

- Introduction to SQL Server Machine Learning Services with Python

About the author

Nai Biao Zhou is a Senior Software Developer with 20+ years of experience in software development, specializing in Data Warehousing, Business Intelligence, Data Mining and solution architecture design.

This author pledges the content of this article is based on professional experience and not AI generated.


Article Last Updated: 2021-03-11