By: Alejandro Cobar | Updated: 2021-04-12 | Comments (6) | Related: 1 | 2 | 3 | More > DBA Best Practices

Problem

As SQL Server DBAs, we need to make sure that the SQL Server instances we support are setup correctly and consistently. One way to do this is to set a baseline of best practices and check each instance. A simple way to run and test for best practice settings is to use PowerShell which can be run against any instance. In this article we look at a set of best practices and a PowerShell script you can use and update to meet your needs.

Solution

As a part of a series of articles, called PowerShell tools for the DBA, I’m presenting a PowerShell tool that can help any SQL Server DBA to perform best practices checks against their instances.

Initial considerations

Here are some assumptions before diving into the details of the script:

- The script heavily uses the Invoke-Sqlcmd cmdlet, that’s part of the SQLPS module. If you don’t have it installed, Google it and it’ll come up right away. The script will also let you know if you don’t have such module installed in your system.

- It would be better if you have control of a test environment where you can test the script first.

- For all the tests to work flawlessly, please make sure to grant "sysadmin" server role to the account that will be executing the operations. However, I strongly suggest that you assign the least required privileges, but that would add an extra layer of complexity to the script which I’m currently not covering.

- This version of the script has only been tested with on-prem deployments of SQL Server (no Azure nor Linux deployments).

- In its current version (1.0), the script performs 10 basic best practices checks against the specified SQL Server instance.

- The items are not displayed in any particular order.

Using the PowerShell Script

General Note: If you run the script from PowerShell ISE, then you will see pop-ups asking you to choose among several options. However, if you run it directly from a PowerShell console, instead of pop-ups you will see the options presented to you in the command line interface (you will see the difference in the screenshots below).

You can run the script a few different ways:

- Either by right clicking on the PowerShell script and selecting "Run with PowerShell" (non-ISE variant)

- Open the PowerShell ISE (ISE variant)

- either copy and paste the script into the PowerShell script pane

- or run the script as follows in the PowerShell command line (I saved the file in the C:\temp folder.)

- if you don't specify the SERVER\INSTANCE it will default to using the local default instance

PS C:\temp> .\Best-Practices.ps1 SERVER\INSTANCE

The script will ask for:

- The type of login you want to use that has the necessary privileges

- Trusted = the connection to the SQL Server instance will be made with the same user executing the PowerShell script (basically, you don't have to specify any credentials, it will assume them based on the context).

- Windows Login = A Windows login has to be provided for the correct authentication.

- SQL Login = A SQL login has to be provided for the correct authentication.

(non-ISE version)

If you choose a type of login that requires you to input credentials, the script will notify you if such credentials fail to connect to the specified instance.

(non-ISE version)

If you specify an instance that either doesn’t exist or there are issues to connect to it, the script will notify you so.

SQL Server Best Practices

With the correct information specified, the script will start to check each best practice one by one and will notify you if each passed or failed. The script shows you all at once, I will present them individually just for demonstration purposes.

Cost Threshold for Parallelism

You probably know by now that the default value of 5 is just not good enough for today's modern hardware. My test instance has the default value, and this is the output.

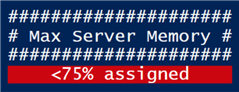

Max Server Memory

Ideally, a value between 75% - 80% of the memory in the server is a good target. However, there will be some cases where this can be treated differently (especially if the server is not a dedicated database server). My test instance is using 2GB of the 4GB on the server.

Lock Pages in Memory

With this setting on, the OS will not touch any pages in memory that the SQL Server process already has allocated. I don’t have it enabled for my test instance.

Instant File Initialization

With this setting on, every time a new database file is either created or more space is allocated to it, this setting allows it to do it quicker because it is not zeroed out in the process (this doesn’t apply for transaction log files). In my instance, I have it enabled because I’m using SQL Server 2017 and the installation wizard allows you to enable it during the installation process (good Microsoft change I guess).

DB Files in C:\ drive

Almost as a rule of thumb, user database files should not be placed on the C:\ drive. In my instance, I have everything on the C:\ drive because that’s the only drive I have in my test VM.

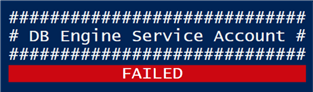

Database Engine Service Account

It is a good practice to run the Database Engine service using a domain service account with less privileges. Sometimes it is a bit tough to achieve due to certain restrictions from 3rd party vendors. In my case I have the default account that SQL Server assigns to a new instance.

SQL Agent Service Account

It is a good practice to run the SQL Agent service using a domain service account with less privileges. Sometimes it is a bit tough to achieve due to certain restrictions from 3rd party vendors. However, this service is not used for the SQL Server Express Edition. In my case I have the default account that SQL Server assigns to a new instance.

Logins with sysadmin privilege

Generally speaking, the more logins with sysadmin privilege there are in an instance, the higher the risk of having it compromised by somebody that is able to get their hands on any of the accounts. There are times where such accounts are required, but that will depend on your own particular case. For this check, I have left the sa account and the System accounts as the ones "acceptable" to have sysadmin privilege.

Dedicated Disk Drives

Generally speaking, it is better to have database files split across a set of isolated storage subsystems, to enhance the performance of SQL Server. A drive for database data files, a drive for transaction log files, a drive just for TempDB, and a drive for backups. In my test instance, I have everything in the exact same drive.

Database files with growth in %

If your database files are set to grow in a percentage, rather than in MB, then you face the risk of having at least one that eventually grows so large that the next increment will not be possible because it will completely fill the disk where it’s hosted (impacting anything else stored in there). These are the databases on my system.

Download the PowerShell Script

- You can download the PowerShell script here.

Next Steps

- If you’d like to check the best practices for multiple instances, you can span multiple PowerShell windows and throw a different instance at each, which can be handy overall.

- My take on this particular tool is to continue to grow it as large as possible to give DBAs a wide range of indicators of how their instances are currently setup, in terms of applied best practices. Therefore, if you have any suggestions or specific checks that would be useful to include, feel free to let me know in the comments below and I will update the script as needed.

About the author

Alejandro Cobar is an MCP, SQL Server DBA and Developer with 10+ years of experience working in a variety of environments.

Alejandro Cobar is an MCP, SQL Server DBA and Developer with 10+ years of experience working in a variety of environments.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2021-04-12