By: Ron L'Esteve | Updated: 2021-05-07 | Comments | Related: > Azure Databricks

Problem

With Azure Databricks 7.2 and above, there is now the capability of Cloning source data to create a copy of an existing Delta table at a specified version by using the CLONE command. What are some of the features, benefits, and use cases of Deep and Shallow clones and how can we get started with using the CLONE command in Azure Databricks?

Solution

Clones have many use cases such as data archiving, reproducing ML datasets, data sharing and more. Additionally, clones can be either deep or shallow and there are a few notable differences between the two. A shallow clone does not copy the data files to clone the target, relies on the metadata as the source, and are cheaper to create. Deep clones will copy the source table data to the target location. From a streaming perspective, deep clones have the capability of stopping any writes to the source Delta table and continuing the writes to the cloned target location. In this tutorial, we will:

- Explore practical examples of both deep and shallow clones in action

- Explore time travel and versioning for cones

- Explore how to read the delta logs with capture deep and shallow cloning metrics.

Shallow Clone in Azure Databricks

In my previous article, Performance Tuning Apache Spark with Z-Ordering and Data Skipping in Azure Databricks, I demonstrated how to use the OPTIMIZE and Z-ORDER commands. Additionally, in this previous article, I created a Hive table called nyctaxi from the databricks-datasets that originally contained 400 files. After OPTIMIZING the table, there were only 10 active files. These are the 10 files that will be used during the cloning process since they are now active in the table. As a recap, here is the image of the OPTIMIZE ZORDER command that was run in this previous article.

To confirm that we have data in the nyctaxi hive table, we can run the following select statement.

%sql SELECT * from nyctaxi

Next, we can create the Shallow Clone using the following script which contains the SHALLOW CLONE command.

%sql CREATE TABLE IF NOT EXISTS nyctaxi_shallow_clone SHALLOW CLONE nyctaxi LOCATION 'abfss://[email protected]/raw/delta/nyctaxi_delta_shallow_clone'

There is also an option to specify the Version with both deep and shallow clones.

CREATE TABLE [IF NOT EXISTS] [db_name.]target_table [SHALLOW | DEEP] CLONE [db_name.]source_table [<time_travel_version>] [LOCATION 'path']

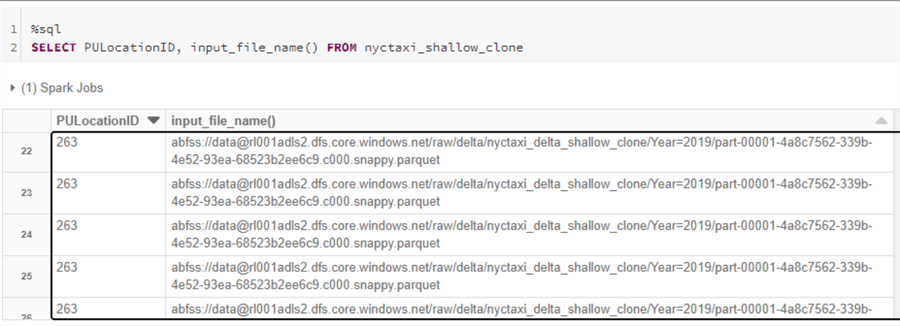

Next, we can run the following select SQL query to query the shallow clone along with its source file.

%sql SELECT *, input_file_name() FROM nyctaxi_shallow_clone

The results for the input_file_name() indicate that the actual source file was not copied which goes back to our definition of shallow clone, which states that it does not copy the data files to clone the target and only relies on the metadata as the source.

Additionally, this meta data / no source data operation is further confirmed since there are no physical files in the nyctaxi_delta_shallow_clone folder.

On the other hand, the delta log will contain files which capture metrics and logs around the creation of the shallow clone.

As we can see, the log indicates a CLONE operation with isShallow = true.

Next, let’s update the shallow clone to see how this operation impacts the logs and persisting of data.

%sql UPDATE nyctaxi_shallow_clone SET PULocationID = 263 WHERE PULocationID = 262

Notice that there were 17 new files that were created in the shallow clone folder since any new files created based on operations on the shallow clone’s folder will persist these files in the shallow clone folder going forward rather than continuing with meta data pointers to the original files. This is valuable since, if the shallow clone needs to be deleted for any reason, it will have no impact to the original files. This is good for short term experiments on a production table without affecting production workloads.

Based on a select with the input_file_name(), we can see the data now points to the shallow clone folder since there has been an update since the shallow clone was created.

Deep Clone in Azure Databricks

Now that we have a basic understanding of shallow clones, let's explore deep clones next. Similar to the script to create shallow clones, we can create a deep clone with the following script.

%sql CREATE TABLE IF NOT EXISTS nyctaxi_deep_clone DEEP CLONE nyctaxi LOCATION 'abfss://[email protected]/raw/delta/nyctaxi_delta_deep_clone'

After running the deep clone creation script, we can see the 10 active files from the original folder being copied to the deep clone folder.

Similar to the shallow clone’s delta log, there is are files created within the delta_log.

Within the json log file, we can once again see that this operation is a CLONE and this time isShallow is set to False.

After running a select on the deep clone’s hive table, we can see from the input_file_name() that the data is persisted in the deep clone folder.

There is one more scenario that is worth testing to demonstrate the capability of time travel which allows us to revert back to a specific version of the original dataset.

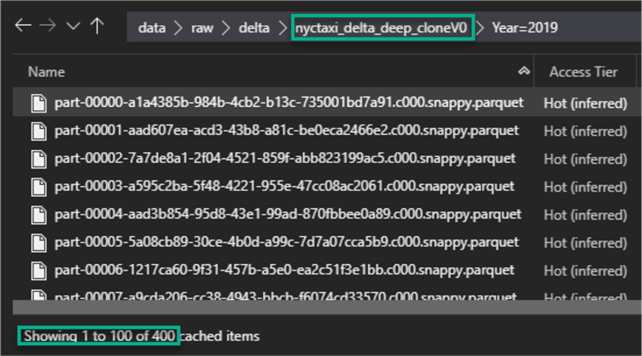

For my scenario, Version 1 contains 10 optimized files and Version 0 contains 400 original files, therefore, let’s specify Version 0 in the following script to create the deep clone.

%sql CREATE TABLE IF NOT EXISTS nyctaxi_deep_cloneV0 DEEP CLONE nyctaxi VERSION AS OF 0 LOCATION 'abfss://[email protected]/raw/delta/nyctaxi_delta_deep_cloneV0'

This time, we can see the expected 400 files in the deep clone V0 folder.

Additionally, we can see the addition of the 400 files in the new delta log file.

Summary

In this article, we explored how to create both deep and shallow clones. It is important to note that since shallow clones reference the original source data, if a VACCUM command is run on the original files, the shallow clones may throw errors. Recreating the clone will repair it. For this scenario, it would be a good idea to create a deep clone instead. Shallow clones are less costly to create since deep clones create copies of both the data and metadata. Finally, cloning is not to be confused with CTAS since it copies both the data and meta data.

Next Steps

- For more information on deep and shallow clones and their features, read Clone a Delta Table.

- Read more about Clone Use Cases for more information on when to use both deep and shallow clones.

- Read more about CLONE (Delta Lake on Databricks).

- Read more about Backing up Full Delta Lake using Delta DEEP CLONE and Dynamic Python/ Spark SQL scripting in Databricks.

About the author

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master�s in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tec

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master�s in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tecThis author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2021-05-07