By: Ron L'Esteve | Updated: 2021-08-24 | Comments (1) | Related: > Azure Databricks

Problem

As Data Engineers, Citizen Data Integrators, and various other Databricks enthusiasts begin to understand the various benefits of Spark as a valuable and scalable compute resource to work with data at scale, they would need to know how to work with this data that is stored in their Azure Data Lake Storage Gen2 (ADLS gen2) containers. Azure Databricks offers the capability of mounting a Data Lake storage account to easily read and write data in your lake. While there are many methods of connecting to your Data Lake for the purposes or reading and writing data, this tutorial will describe how to securely mount and access your ADLS gen2 account from Databricks.

Solution

While there are a few methods of connecting to ADLS gen 2 from Databricks, in this tutorial I will walk through a clear end-to-end process of securely mounting your ADLS gen2 account in Databricks. Towards the end of the article, you will learn how to read data from your mounted ADLS gen2 account within a Databricks notebook.

Getting Started

To proceed with this exercise, you will need to create the following Azure resources in your subscription.

Azure Data Lake Storage Gen2 account: Please create an Azure Data Lake Storage Gen2 account. While creating your Azure Data Lake Storage Gen2 account through the Azure Portal, ensure that you enable hierarchical namespace in the Advanced configuration tab so that your storage account will be optimized for big data analytics workloads and enabled for file-level access control lists (ACLs).

Also remember to create a container in your ADLS gen2 account once your storage account is successfully deployed. For the purposes of this exercise, you’ll also need a folder (e.g.: raw) along with some sample files that you can test reading from your Databricks notebook once you have successfully mounted the ADLS gen2 account in Databricks.

Azure Databricks Workspace (Premium Pricing Tier): Please create an Azure Databricks Workspace. While creating your Databricks Workspace through the Azure portal, ensure that you select the Premium Pricing Tier which will include Role-based access controls. This Premium pricing tier will ensure that you will be able to create an Azure Key Vault-backed Secret Scope in Azure Databricks. Additionally, this tier selection will prevent you from encountering any errors while creating this secret scope in Databricks.

Azure Active Directory App Registration: Please register an application by navigating to Azure Active Directory, clicking +New registration.

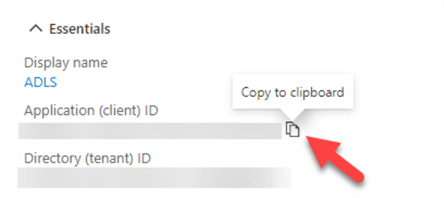

Once your App is registered, navigate to the Overview tab of your newly registered app, and copy the Application (client) ID and Directory (tenant) ID and save them in a Notepad for now. These values will need to be entered and stored as Key Vault secrets in the next step of the process.

To complete the App registration process, you will need one last value, which is the Client Secret. To obtain this value, navigate to the Certificates & secrets tab within your registered app, create a New client secret, copy the value and save it in your Notepad as Client Secret. You will need to add this to the Key Vault secrets in the next step.

Now that your app is registered, you will need to navigate to the Access Control settings of the ADLS gen2 storage account, and give the app role access level Storage Blob Data Contributor rights to the Storage account.

Azure Key Vault: Please create a Key vault in your Azure subscription and add the ClientID, TenantID, and ClientSecret as Secrets. Once created, ensure that these secrets have a Status of Enabled.

Create a Secret Scope

In Azure Databricks, you will need to create an Azure Key Vault-backed secret scope to manage the secrets. A secret scope is collection of secrets identified by a name. Prior to creating this secret scope in Databricks, you will need to copy your Key vault URI and Resource ID from the Properties tab of your Key Vault in Azure portal.

To create this secret scope in Databricks, navigate to

https://<DATABRICKS-INSTANCE>#secrets/createScope and replace <DATABRICKS-INSTANCE> with your own Databricks URL instance.

This URL will take you to the UI where you can create your secret scope. Paste the

Key Vault URI and Resource ID which you copied in the previous step into the respective

DNS Name and Resource ID section.

Finally, by clicking Create you will see that the secret scope will be successfully added. This confirms that you have successfully created a secret scope in Databricks and you are now ready to proceed with mounting your ADLS gen2 account from your Databricks notebook.

Mount Data Lake Storage Gen2

All the steps that you have created in this exercise until now are leading to mounting your ADLS gen2 account within your Databricks notebook. Before you prepare to execute the mounting code, ensure that you have an appropriate cluster up and running in a Python notebook.

Paste the following code into your Python Databricks notebook and replace the adlsAccountName, adlsContainerName, adlsFolderName, and mountpoint with your own ADLS gen2 values. Also ensure that the ClientId, ClientSecret, and TenantId match the secret names that your provided in your Key Vault in Azure portal.

# Python code to mount and access Azure Data Lake Storage Gen2 Account from Azure Databricks with Service Principal and OAuth

# Define the variables used for creating connection strings

adlsAccountName = "adlsg2v001"

adlsContainerName = "data"

adlsFolderName = "raw"

mountPoint = "/mnt/raw"

# Application (Client) ID

applicationId = dbutils.secrets.get(scope="akv-0011",key="ClientId")

# Application (Client) Secret Key

authenticationKey = dbutils.secrets.get(scope="akv-0011",key="ClientSecret")

# Directory (Tenant) ID

tenandId = dbutils.secrets.get(scope="akv-0011",key="TenantId")

endpoint = "https://login.microsoftonline.com/" + tenandId + "/oauth2/token"

source = "abfss://" + adlsContainerName + "@" + adlsAccountName + ".dfs.core.windows.net/" + adlsFolderName

# Connecting using Service Principal secrets and OAuth

configs = {"fs.azure.account.auth.type": "OAuth",

"fs.azure.account.oauth.provider.type": "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",

"fs.azure.account.oauth2.client.id": applicationId,

"fs.azure.account.oauth2.client.secret": authenticationKey,

"fs.azure.account.oauth2.client.endpoint": endpoint}

# Mount ADLS Storage to DBFS only if the directory is not already mounted

if not any(mount.mountPoint == mountPoint for mount in dbutils.fs.mounts()):

dbutils.fs.mount(

source = source,

mount_point = mountPoint,

extra_configs = configs)

Finally, run the code in the notebook and notice the successful completion of the Spark job. Congratulations, your ADLS gen2 storage account has successfully been mounted and you are now ready to work with the data.

Read Data Lake Storage Gen2 from Databricks

In the previous section, you learned how to securely mount an ADLS gen2 storage account from within your Databricks notebook. In this section, you will learn about some commands that your can execute to get more information about your mounts, read data, and finally you will also learn how to unmount your account if needed.

Run the following command to list the content on your mounted store.

dbutils.fs.ls('mnt/raw')

Notice that this dbutils.fs.ls command lists the file info which includes the path, name, and size.

Alternatively, use the %fs magic command to view the same list in tabular format.

#dbutils.fs.ls('mnt/raw')

%fs

ls "mnt/raw"

By running this could, you will notice an error. I deliberately added the commented out #dbutils.fs.ls code to show that if you happen to have comments in the same code block as the %fs command, you will receive an error.

To get around this error, remove any comments in the code block which contains the %fs command.

%fs ls "mnt/raw"

Notice that the same results as the dbutils command are displayed, this time in a well-organized tabular format.

Read the data from the mount point by simply creating a data frame to read the file by using the spark.read command.

df = spark.read.json("/mnt/raw/Customer1.json")

display(df)

For this scenario, we are reading a json file stored in the ADLS gen2 mount point. Notice that the data from the file can be read directly from the mount point.

Run the following command to list the mounts that have been created on this account.

display(dbutils.fs.mounts())

Notice that the mount you created in this exercise is in the list, along with other mount points that have previously been created.

Run the following command to unmount the mounted directory.

# Unmount only if directory is mounted if any(mount.mountPoint == mountPoint for mount in dbutils.fs.mounts()): dbutils.fs.unmount(mountPoint)

Notice that mount /mnt/raw has successfully been unmounted by this command.

Summary

In this article, you learned how to mount and Azure Data Lake Storage Gen2 account to an Azure Databricks notebook by creating and configuring the Azure resources needed for the process. You also learned how to write and execute the script needed to create the mount. Finally, you learned how to read files, list mounts that have been created, and unmount previously mounted directories.

Next Steps

- For more detail on understanding Azure Blob Storage, see Azure Blob storage - Azure Databricks - Workspace | Microsoft Docs

- For more detail about the Databricks File System (DBFS), see Databricks File System (DBFS) - Azure Databricks - Workspace | Microsoft Docs

- For more information on reading and writing from ADLS gen2 with Databricks, see Reading and Writing data in Azure Data Lake Storage Gen 2 with Azure Databricks (mssqltips.com)

About the author

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master�s in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tec

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master�s in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tecThis author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2021-08-24