By: Rick Dobson | Updated: 2021-08-16 | Comments | Related: > Other Database Platforms

Problem

Demonstrate how to transfer data via a .csv file from a SQL Server database to a Pandas dataframe and then subset the dataframe in Python.

Solution

A Pandas dataframe is an in-memory data store that can be manipulated with the Python programming language. The Python programming language is popular with many developers because of its easy programming style and its applicability to a wide variety of use cases. Python is particularly popular among professionals charged with mining a dataset, implementing data science projects, or building web-based HTML solutions.

After creating and populating a Pandas dataframe from a more durable data store, such as a SQL Server table or a results set created by a T-SQL statement, you can readily perform many classic data science applications with Python. Examples include data visualization, modeling, clustering, machine learning, and statistical analyses. When implementing one of these use cases, there is sometimes a need to reconfigure the design of a Pandas dataframe as well as subset the data in it; this tutorial illustrates approaches for achieving these goals.

After reading this tutorial, you will be equipped to create, populate, and subset a Pandas dataframe from a dataset that comes from SQL Server. Selected specific topics covered include:

- Exporting a .csv file for a results set based on a T-SQL query statement.

- Importing a .csv file into a Pandas dataframe. This dataframe serves as a vehicle for acquainting you with dataframe design Issues. For example, the tutorial illustrates how to add a new index and drop a column that is no longer needed.

- Demonstrating how to subset the contents of a dataframe by displaying

- A subset of the dataframe columns or

- A subset of the dataframe rows

Learning about dataframes by creating and populating them

To run the code samples demonstrated in this tutorial, you will need Python and the Pandas library installed on a computer workstation. The Python programming application and the Pandas library are available without charge.

You can download Python from this link. Installation guidance for the Pandas library is available from this link. All the Python and Pandas custom code demonstrated in this tip is available in the download for this tip.

You can use whatever developer tool you wish for examining and modifying the Python script files referenced in this tutorial. However, this tutorial demonstrates the use of IDLE functionality, an integrated developer environment, for reading and editing, and running Python code. IDLE downloads automatically with the installation of Python from the Python.org website.

Exporting a .csv file for a SQL Server results set

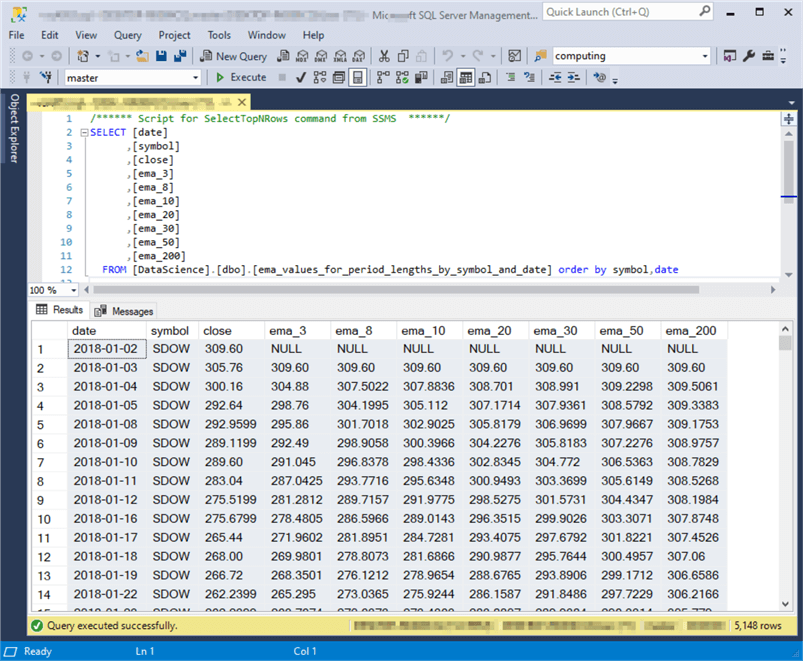

The following screen shot shows a SQL Server Management Studio (SSMS) session containing a simple query and an excerpt from its results set. The full results set contains close prices and exponential moving averages for the close prices based on seven different period lengths (ema_3 through ema_200) for six ticker symbols (SDOW, UDOW, TQQQ, SPXL, SQQQ, SPXS). By right-clicking the rectangle to the top left of the results set and just below the Results tab, you can save the contents of the results set to a .csv file in a destination of your choice. For this demonstration, the results set was saved in a file named ema_values_for_period_length_by_symbol_and_date.csv within the DataScienceSamples directory of the C drive.

Reading a .csv file into a Pandas dataframe

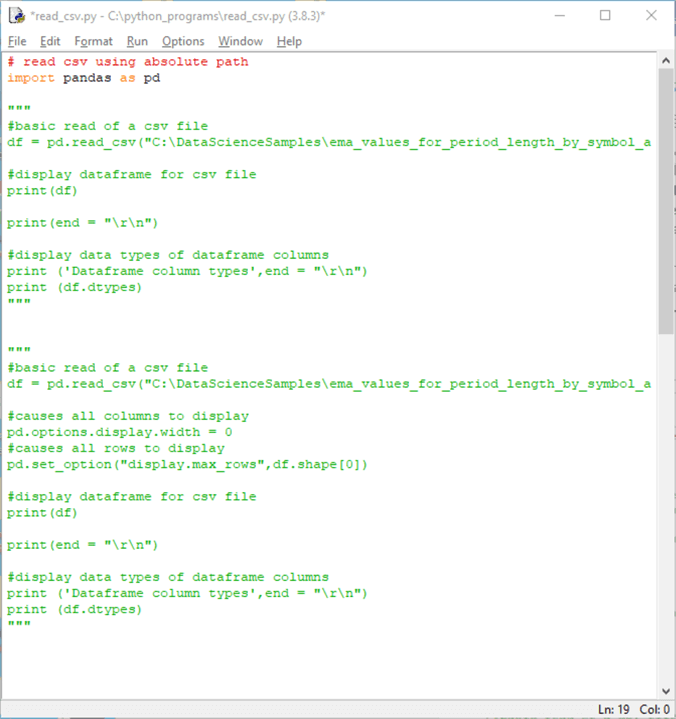

The following screen shot is a listing from the read_csv.py file that shows how to read the .csv file created in the preceding subsection into a Pandas dataframe. The screen shot also illustrates two different ways to display the contents of the dataframe.

- The main section of each approach to displaying the contents of a dataframe resides within block comment markers (“””…”””).

- At the top of the code window is an import statement which references the Pandas library; both approaches for displaying dataframe contents require the import statement.

- The import statement at the top of the code window assigns an alias name of pd to the Pandas library.

- You can run either (or both) of the Python scripts by putting a single line comment marker (#) before both the leading and trailing block comment markers of each script. For example, the leading and trailing block comment marker would change from “”” to #””” for either (or both) of the approaches that you want to run.

- The core statements from either script shows how to invoke the read_csv

function and a print function.

- The read_csv function reads the ema_values_for_period_length_by_symbol_and_date.csv file created and saved from SSMS. The contents of the file are saved in a Pandas dataframe object named df.

- The Python print function displays in the Python Shell window the contents of the df function according to default Python set_options settings.

- Unless you explicitly change the default settings, this results in the display of the first and last five rows along with a subset of the beginning and ending dataframe columns. The display does not by default show all the rows and columns from df.

- The second script includes two lines that can cause a print statement

to display all columns within all rows from a dataframe

- Setting the pd.options.display.width parameter equal to 0 can cause Python to print all characters from each row of your source dataframe.

- Also, assigning df.shape[0] to the display.max.rows parameter of set_options causes all rows to display.

The top Python script in the preceding screen shot generates the following output in the Python Shell window.

- The first row shows NaN values instead of NULL values in the first row for ema_30, ema_50, and ema_200. The read_csv function automatically translates NULL values into NaN values. This conforms to Python conventions for denoting missing numeric values.

- Highlighting has been added to emphasize the native RangeIndex value identifiers for distinct dataframe rows. These values, which appear at the left edge of the dataframe, commence at 0 and extend through one less than the count of dataframe rows (5147 in this example).

- The full set of dataframe column names and their data types appear below

the print function output for the dataframe.

- The first column name is date, and the last column name is ema_200.

- As you can see, the default excerpt for displaying the dataframe excludes columns named ema_3, ema_8, ema_10, and ema_20.

The next two screen shots show excerpts from the output from the second commented script block in the read_csv.py file listing.

The first screen shot is for the top ten dataframe rows.

- The values 0 through 9 are RangeIndex values for the first set of ten rows. These RangeIndex values are not in the .csv file, but they are added by the read_csv function to track the rows in a dataframe.

- All ten columns appear in the output from the column named date through the column named ema_200. This outcome is because of the pd.options.display.width = 0 statement. Recall that the output from the first script omitted columns ema_3, ema_8, ema_10, and ema_20.

The second screen shot is for the bottom four dataframe rows and the column metadata for the dataframe.

- The output confirms again that the RangeIndex values extend through 5147.

- Recall that 5147 is the count of dataframe rows less one.

- Also, this is the same final RangeIndex value from the first commented output script block.

- In other words, the excerpted values appearing in the output from the first commented script block do not affect the actual number of rows transferred from the .csv file to the dataframe.

- The display of column metadata is unaffected by the changes in the second

script block. You can still see the column names and their data types.

- Python stores string values with an object data type. Although the date column values have a format that appears to represent a date value, they are saved as string values because the read_csv function outputs just numeric and string data types to dataframes.

- The data types for close through ema_200 all have a float64 data type. These columns store floating point numeric values with 64 bits of precision.

Configuring a datetime index for a dataframe from a column of date string values

As you can tell from the preceding screen shot, the date column in the df dataframe does not represent datetime values. Instead, date column values are string objects. Therefore, if you want to perform tasks, such as extract date and/or time values from a datetime value, you cannot use the built-in Python functions and properties for working with datetime values. However, you can easily use these functions and properties after converting the date column values to datetime values and saving the converted values as the index for the dataframe. This section shows one way to perform the conversion and indicates its impact on the dataframe metadata.

Here is a Python script to perform the conversion. There are two major parts to the script. First, it illustrates how to reconstruct the dataframe from the .csv file created in the previous section. The initial dataframe is based on the application of the csv_read function for the .csv file. Next, three additional Python statements display the dataframe contents as well as reveal metadata about the dataframe.

- The first statement after the read_csv function displays the contents of the df dataframe with default excerpts, such as the first and last five rows.

- The second statement provides typical metadata about the df dataframe, such as the column names and their data types.

- The third statement shows the name for the df dataframe index as well as some information about the design of the index.

The second major portion of the script commences with a single-line comment about replacing the original index for the dataframe.

- This section begins by applying the to_datetime Pandas method to the string values in the date column that is populated from reading the .csv file. The converted values are assigned to a column named datetime_index.

- Next, the set_index method for the df dataframe is invoked to assign the datetime_index column as the index for the df dataframe.

- After performing this operation, the date column in the df dataframe from the imported .csv file, which is merely a series of string values, is dropped because it is no longer needed.

- The second major portion of the script concludes by printing the values, displaying typical metadata, and displaying routine information about the index for the df dataframe.

Here is an excerpt from the output from the preceding Python script. The excerpt shows the output from the script’s first major portion. Highlighting is added to three areas of the output.

- The shape of the df dataframe is 5148 rows by 10 columns. These shape values appear in square brackets (they are highlighted) below the df dataframe.

- The 10 dataframe columns are comprised of two object columns and eight float64 columns. Note especially that the name of the first column is date. The column names and an index for them are highlighted.

- The index name for the dataframe is RangeIndex.

- Its starting value is 0.

- Successive index values start at 0 and increase by 1 from the preceding index value.

- The index values stop at 5148 so they do not include this row number.

- This information is highlighted at the bottom of the following screen shot.

Here is a screen shot with output from the second major portion of the script. Recall that the second major portion of the script adds a new index to the df dataframe based on the datetime converted date column values from the read_csv version of the df dataframe. Additionally, the second major portion of the script drops the original date column from the df dataframe after using it to create a datetime index.

- The output for this portion of the script has a shape of 5148 rows with just 9, instead of 10, columns. This is because the original date column is dropped in the second portion of the script. There is a column of dates in the df dataframe, but its column header name appears one row below the nine column names comprising the shape of the df dataframe.

- The listing of columns from df.info() does not include the date column – again, this is because the original date column is dropped in the second portion of the script.

- The last segment of the output appearing in the second portion of the script

is for the index values of the df dataframe.

- The name of the index is DatetimeIndex instead of RangeIndex as is the case for the dataframe’s first version. However, the printed output from the index appears with a name of date, which is because a column named date served as the original source for generating the source DatetimeIndex values.

- Each of the 5148 index values has a datetime64[ns] type. This data type can be processed as datetime values in Pandas. Recall that the source date column values are just string values; they look like dates, but they are just strings.

- The row count (or dataframe length) of index values is 5148 – one index value for each row in the dataframe.

How to subset columns and rows from within a dataframe

It can often be useful to extract a subset of columns or rows from a dataframe. This can make it easier to focus on what matters most in a data science project or to exclude columns with an unacceptably high proportion of missing values. Some data science models, such as random forests, call for using multiple sets of columns and rows and then averaging the results across different sets of columns from a source dataframe.

Extracting a subset of the rows in a dataframe can also be useful for data analysis projects. For example, the sample dataset for this tip contains close price and moving averages with different period lengths for six symbols. If you build a model to predict prices for one symbol, will it predict prices validly for another symbol? Are there sets of symbols that exhibit similar price trends over time? By extracting different sets of rows that match different symbols, then you can answer questions like those just posed.

No matter the reason, it can often be useful to form a new dataframe based on a subset of columns and/or rows from a larger source dataframe.

Compiling a row subset based on boolean values

The following screen shot shows the Python script for creating a subset of the rows for the sample dataframe used with this tip. The script file has the name df_filter_query_demo_1.py. The header for the IDLE window in which the Python script displays also indicates the file resides in the python_programs directory of the C drive. The numbered red bullets identify parts of the script.

- The first bullet references the Pandas library.

- The second bullet invokes the read_csv function to import the .csv file created in the first section of this tip.

- The third bullet converts the string values in the date column before setting

the converted column as an index for the df dataframe.

- When a column is set as an index for the dataframe, then the approach silently drops the source column from the dataframe.

- The syntax for converting the string values in the date column to datetime values and making the converted values dataframe index values is slightly more compact than in the preceding section. Just as with T-SQL, Python offers multiple approaches to achieving some tasks.

- Also, you should keep in mind that an index for a pandas dataframe has different performance implications than does an index for a table in SQL Server. A comparison of Python versus SQL Server indexes is beyond the scope of this tip.

- The fourth bullet displays an excerpt from the dataframe created with the preceding steps. The excerpt is based on default set_options parameter settings for displaying a dataframe.

- Dataframe row filtering begins with the fifth bullet.

- The code associated with this bullet creates a new dataframe named df_is_sdow with two columns.

- The first column contains datetime index values from the df dataframe.

- The second column contains boolean values of True or False.

- A boolean column value is True when its symbol value is SDOW.

- Otherwise, the boolean value for an index is False. In other words, a symbol value that is different than SDOW in the df dataframe corresponds to a value of False in the boolean column of the df_is_sdow dataframe.

- The sixth bullet prints out the rows from the df_is_sdow dataframe.

- The seventh bullet prints out the counts for rows with True or False values.

- The syntax for the eighth bullet embeds the df_is_sdow dataframe name in a square selection bracket set ([]) for the df dataframe. The syntax for this expression returns just rows from the df dataframe that correspond to a boolean value of True in the matching row from the df_is_sdow dataframe.

Here is an excerpt of a screen shot of the output from running the preceding script. The excerpt is from the Python shell window. Especially significant blocks of output have numbers in square boxes to the right of output. These numbers correspond to the bullet numbers in the preceding script.

- The first significant block of output corresponds to the print function

from the fourth bullet in the preceding script.

- It uses the default print settings to display the first and last five rows. Also, you can see the first three columns and the last three columns.

- The datetime index values with a name of date appear to the left of the first column (symbol)

- The shape of the df dataframe appears in square brackets below the df dataframe excerpt. The df dataframe has 5148 rows and 9 columns.

- The second major block of output corresponds to the sixth bullet in the

preceding script. This output is for df_is_sdow.

- The df_is_sdow dataframe contains filtered values represented by Boolean values of True and False.

- The filter is based on the symbol value from the df dataframe.

- The boolean value is from

- The filter is based on the symbol value from the df dataframe.

The boolean value in the df_is_sdow is

- True when the corresponding symbol is SDOW in the df dataframe

- False when the corresponding symbol is not SDOW in the df dataframe.

- The third major block of output corresponds to the seventh bullet in the preceding script. The output consists of counts for rows with True and False from the df_is_sdow dataframe. There are 858 rows with True 4290 rows with False.

- The last major block of output corresponds to the eighth bullet in the preceding

script. This output consists of the filtered df dataframe output.

- The filter is based on the rows of the df_is_sdow dataframe.

- The shape of the filtered dataframe indicates 858 rows, which corresponds to the number of True values in the df_is_sdow dataframe.

Selecting a column subset

Selecting a subset of one or more columns from a dataframe is very straightforward. Merely take the original dataframe name, such as df, and follow it with square brackets. Then, include either one column name in quote marks (“column_name”) or a comma separated list of two or more column names where each name is in quote marks. Here is a Python script to illustrate both approaches. There are three major sections to the script.

- The beginning section configures a dataframe to filter.

- The import pandas statement at the top of the script makes a reference to the pandas library.

- Then, additional code focuses on configuring a dataframe to filter.

- The read_csv function reads the .csv file created from SQL Server in the first section of this tip.

- The dataframe created from the output of the read_csv function receives

additional processing such as

- Converting the date column to a datetime index named date. This operation is performed with the help of the inplace parameter for the set_index method of the df dataframe object. Setting the inplace parameter to True eliminates the need to drop the date column after the conversion.

- Assigning a value of 0 to the options.display.width pandas parameter enables printing more dataframe columns on a single row to avoid truncation of output with the print function.

- The beginning section ends by displaying the df dataframe. This is the dataframe that will be filtered to populate another series or dataframe object based on a filtered version of the original dataframe.

- The second section starts with a comment line about displaying a filtered

version of the original dataframe with just one column of values.

- The filtered output from the original dataframe populates just one column

of values in a pandas series object.

- A pandas dataframe is composed of a set of two or more series objects.

- When the output from filtering a dataframe consists of a single column of values, then the output populates a series.

- Otherwise, the filtered output populates a dataframe.

- The assignment statement “series_closes = df[“close”]“ populates the series_closes object with the set of close column values from the df dataframe.

- After displaying the series values, the Python code returns the name of the object returned by the df[“close”] term.

- The filtered output from the original dataframe populates just one column

of values in a pandas series object.

- The third section starts with a comment line about returning multiple columns

of values. The third section of code performs three functions in a way

that is roughly like the code in the second section.

- First, it filters an original dataframe (df) and populates another dataframe (df_syms_closes_and_some_emas) with the filtered output.

- Second, it displays the values in the freshly populated dataframe.

- Third, it displays the object type for the output from the filtering operation.

Here is an image of the output from the preceding script in the Python Shell window.

- The dataframe towards the top of the image is the dataframe to be filtered in one of two ways.

- The dataframe in the middle of the image displays the series output along with the datetime index values from filtering the original dataframe for just one column. Note that the object type is Series.

- The dataframe at the bottom of the image shows the dataframe output from

filtering the original dataframe by more than one column.

- The filtering process returns six columns from the original dataframe. The columns retained by the filtering process are named symbol, close, ema_10, ema_30, ema_50, and ema_200

- The object type returned by the filtering process is another dataframe named df_syms_close_and_some_emas.

Trading date range and shapes for filtered row sets

Instead of creating filtered dataframe row sets based on boolean values as in the “Compiling a row subset based on boolean values” subsection, you can use criteria directly inserted into dataframe column selection criteria brackets. Unlike the dataframe brackets in the prior subsection, these brackets filter rowsets with specified settings. This subsection illustrates two demonstrations on how to achieve this kind of row filtering with one or two distinct criteria.

The script for implementing both demonstrations appears below. The script is divided into three parts by repeated single-line comment markers (##f…###) across the width of the IDLE window.

- The top part prepares a dataframe to facilitate the two demonstrations.

- The first two code segments in this part read a .csv file into a dataframe and then converts a column of strings representing dates into a datetime series that is set as the index for the dataframe.

- Next, the dataframe’s index is used to add two new columns that

are derived from the datetime series serving as an index.

- The first new column has the name Year in the df dataframe.

- The second new column has the name Month in the df dataframe.

- The top part closes by presenting metadata for the dataframe before printing the top and bottom five rows.

- The second part of the script shows the syntax for successively filtering

a rowset based on the df dataframe.

- The syntax for defining a filtered rowset is: (df[df["Year"] == YYYY]) where YYYY are four digits representing a year.

- The YYYY values in this tip represent four years from 2018 through 2021.

- The trading dates for 2018, 2019, and 2020 are for all trading dates throughout each year.

- The trading dates for 2021 are for the trading dates through the end of May.

- Four print functions in the second part of the script display in the Python shell window the rowset for each of the four years.

- The third part of the script shows the syntax for filtering based on a combination

of two date criteria.

- There is just one example created in the final part of the script. The filtered rowset in this part reveals a syntax for creating a filtered rowset based concurrently on two criteria.

- The vertical bar operator (|) performs an “or” operation between two criteria – one for rows from 2021 and the other for rows from 2020.

- The output from both criteria are stored in df_year_21_or_20, and a print function displays the combined filtered outputs in the Python shell window.

The following screen shot shows the metadata along with the first and last five rows for the source dataframe in this subsection.

- There are eleven columns in the dataframe.

- Nine of these columns are from the read_csv function that reads into Python a .csv file exported from SQL Server.

- Two additional columns are derived from the datetimeindex for the new

sample dataframe. The datetimeindex is derived originally from the

date column in the .csv file exported from SQL Server.

- One additional column has the name Year. It has an int64 data type.

- The second additional column has the name Month. It also has an int64 data type.

- The metadata for datetimeindex indicates its data type is datetime64[ns], which is an integer data type with 64 bits of precision that can denote datetime values down to the level of a nanosecond.

- Below the metadata is a df dataframe excerpt displaying the top and bottom

five rows.

- The rows remain sorted in the same order as they were in SQL Server. This order is described in the “Exporting a .csv file for a SQL Server results set” subsection.

- There are a total 5148 rows in the df dataframe. This is confirmed by the first number in the shape brackets below the listing of excerpted df dataframe values.

The following table summarizes key design features and output from the first example.

- Recall that the example is to create four filtered dataframes. Each filtered dataframe contains the rows from one year of rows in the df dataframe.

- The table has five rows – one for each of the four years in the df dataframe followed by a fifth row with summary data for all the years.

- The four filtered dataframes completely segment all the rows in the df dataframe by year.

- The first number in the Shape column denotes the count of rows in the filtered

dataframe for each year.

- The count of trading days per year is not invariant across years because of the days on which market holidays fall as well as other special issues.

- Additionally, the year 2021 only has 612 trading days because the trading data was pulled through the end of May in 2021 – not all the trading days in a year as is true for the dataframes based on 2018, 2019, and 2020.

- The most important point about the rows per filtered dataframe and the rows for the df dataframe is that the sum of the rows (612, 1518, 1512, and 1506) for the filtered dataframes equals the number or rows in the df dataframe (5148).

| Trading Date Range, Trading Date Criteria vs. Rows x Columns in Filtered Rows Shape | ||

|---|---|---|

| Trading Date Range | Trading Date Criteria | Shape [Rows x Columns] |

| 2021 | df["Year"] == 2021 | [612 rows x 11 columns] |

| 2020 | df["Year"] == 2020 | [1518 rows x 11 columns] |

| 2019 | df["Year"] == 2019 | [1512 rows x 11 columns] |

| 2018 | df["Year"] == 2018 | [1506 rows x 11 columns] |

| Jan2018 – May2021 | All trading dates in Trading Date Range | [5148 rows x 11 columns] |

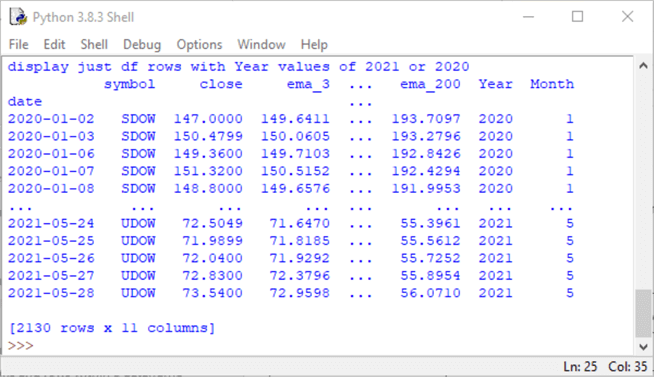

The last screen shot in this tip shows the output for the script that creates a filtered dataframe based on two criteria – one for rows with a date in 2020 and another for rows with a date in 2021. The following screen shot shows the two criteria generate a filtered dataframe with 2130 rows. This outcome is consistent with the sum of the dataframe row counts for 2020 (1518) and 2021(612).

You may be wondering which is the best approach (boolean values or criteria expressions) to creating a filtered dataframe based on the rows in a source dataframe. The answer may be that it depends.

- On the one hand, it takes more lines of code to set up dataframe filtering based on boolean values. This is because a developer must create a dataframe with boolean values before you can attempt to perform filtering.

- The criteria-based approach allows you to specify the criteria and do the filtering in one step.

- The directness of the criteria-based may appeal to those who are comfortable specifying criteria in different dataframe filtering contexts.

- On the other hand, the boolean approach allows you to easily verify your criteria for filtering before you invoke the filtering for the criteria.

Next Steps

This tip’s download file contains six files to help you get a hands-on feel for implementing the Python solutions in this tip.

- A key file is a .csv file with the sample data referenced by the Python script files.

- There are also five Python script files with sample code for all the solutions presented in this tip. Each Python script file has a .py extension.

- You may need to edit the Python script files based on where you save the .csv file. This is because the Python script files have hard code to expect the .csv file in the C:\DataScienceSamples folder.

After verifying the code works as described with the sample data file, you are encouraged to use your own custom data files. This will most likely require changes to the Python scripts in this sample that depend on column names and row values in your custom sample data.

About the author

Rick Dobson is an author and an individual trader. He is also a SQL Server professional with decades of T-SQL experience that includes authoring books, running a national seminar practice, working for businesses on finance and healthcare development projects, and serving as a regular contributor to MSSQLTips.com. He has been growing his Python skills for more than the past half decade -- especially for data visualization and ETL tasks with JSON and CSV files. His most recent professional passions include financial time series data and analyses, AI models, and statistics. He believes the proper application of these skills can help traders and investors to make more profitable decisions.

Rick Dobson is an author and an individual trader. He is also a SQL Server professional with decades of T-SQL experience that includes authoring books, running a national seminar practice, working for businesses on finance and healthcare development projects, and serving as a regular contributor to MSSQLTips.com. He has been growing his Python skills for more than the past half decade -- especially for data visualization and ETL tasks with JSON and CSV files. His most recent professional passions include financial time series data and analyses, AI models, and statistics. He believes the proper application of these skills can help traders and investors to make more profitable decisions.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2021-08-16