By: Koen Verbeeck | Updated: 2021-08-09 | Comments | Related: More > Snowflake

Problem

I'm creating a cloud data warehouse using Snowflake. I need to automate some SQL statements, but Snowflake doesn't have a scheduling tool like SQL Server. Is there a way to schedule SQL statements without using a third party tool?

Solution

Snowflake is a cloud data warehouse offering which is available on multiple cloud platforms, including Azure. To learn more about Snowflake itself, check out this tutorial. Snowflake has introduced the concept of tasks. A task can execute a single SQL statement or call a stored procedure for automation purposes. Keep in mind stored procedures in Snowflake are written in JavaScript.

A task can either be scheduled to run every X minutes, or you can use a cron expression. For more info about cron, check out the tip Scheduling SQL Server Tasks on Linux.

The general task definition syntax looks like this:

CREATE TASK myTask

WAREHOUSE = myWarehouse

[SCHEDULE = x MINUTE | USING CRON cron_expression]

[parameters…]

[AFTER otherTask]

[WHEN Boolean_expression]

AS

<some SQL statement>

Some remarks:

- When using the cron expression, you can also specify a time zone. This would execute the task using the local time zone. You have to be careful around daylight saving time changes, as this may result in the task being executed twice or not at all. You can find more info in the documentation.

- Not all tasks need to be scheduled. Using the AFTER clause, you can configure the task to run after another task. This way, you can create a simple execution tree with dependencies.

- The WHEN clause only supports one single Boolean expression for the moment: SYSTEM$STREAM_HAS_DATA. This is related to a change tracking feature in Snowflake.

With tasks, we can thus create a tree like the following:

The root SQL statement is scheduled, but all the other tasks are dependent on a predecessor task. The rules are simple: a task can have only one parent. This means you cannot create Directed Acyclic Graphs (also called DAGs) as in Airflow for example, where a task can have multiple parents. A task can have a maximum of 100 children and the whole tree is limited to maximum 1,000 tasks.

Loading a Dimension using Snowflake Tasks

Let's illustrate the concept of tasks by loading data into a dimension table. Our tree will look like this:

In the first step, we'll load data from Azure Blob Storage into a staging table. Then we'll update existing rows in the dimensions and finally we'll insert new attributes into the dimension (you could combine the last two steps into a single MERGE statement, but I wanted a slightly bigger tree).

Test Set-up

We can create the staging table and the dimension table with the following DDL statements (make sure there's a STAGING and DBO schema):

CREATE TABLE IF NOT EXISTS STAGING.CustomerStaging( CustomerName VARCHAR(50) NOT NULL, Location VARCHAR(50) NOT NULL, Email VARCHAR(50) NULL ); CREATE TABLE IF NOT EXISTS dbo.DimCustomer( SK_Customer INT IDENTITY(1,1) NOT NULL, CustomerName VARCHAR(50) NOT NULL, Location VARCHAR(50) NULL, Email VARCHAR(50) NULL );

In Azure Blob Storage, there's a CSV file with some sample data. You can download the sample file here.

To be able to load this data, a stage needs to be created. You can follow the steps from the tutorial to create a stage if you haven't created one already.

Creating the Tasks

Use the following script to create the three tasks:

CREATE OR REPLACE TASK dbo.ROOT_COPYINTO

WAREHOUSE=COMPUTE_WH

SCHEDULE='1 MINUTE'

AS

COPY INTO STAGING.CustomerStaging

FROM @MSSQLTIPS_STAGE/tasks

FILE_FORMAT=(TYPE=CSV COMPRESSION=NONE FIELD_DELIMITER=';' SKIP_HEADER=1 TRIM_SPACE=TRUE)

PATTERN ='.*csv'

PURGE = TRUE

ON_ERROR='CONTINUE';

CREATE TASK dbo.CHILD1_UPDATE

WAREHOUSE=COMPUTE_WH

AFTER dbo.ROOT_COPYINTO

AS

UPDATE dbo.DimCustomer d

SET Location = u.Location

,Email = u.Email

FROM STAGING.CustomerStaging u

WHERE u.CustomerName = d.CustomerName

AND ( d.Email IS DISTINCT FROM u.Email

OR d.Location IS DISTINCT FROM u.Location

);

CREATE TASK dbo.GRANDCHILD1_INSERT

WAREHOUSE=COMPUTE_WH

AFTER dbo.CHILD1_UPDATE

AS

INSERT INTO dbo.DimCustomer

(

CustomerName

,Location

,Email

)

SELECT

CustomerName

,Location

,Email

FROM STAGING.CustomerStaging s

WHERE NOT EXISTS (SELECT 1 FROM dbo.DimCustomer c WHERE s.CustomerName = c.CustomerName);

We can take a look at the created tasks with the query SHOW TASKS.

Another method to view the tasks is to use the system function TASK_DEPENDENTS.

select * from table(information_schema.task_dependents(task_name => 'TEST.dbo.ROOT_COPYINTO', recursive => true));

Executing the Tasks

By default, when you create tasks, they are disabled. You can use the command ALTER TASK … RESUME to enable a task.

ALTER TASK dbo.ROOT_COPYINTO RESUME;

It's possible you're greeted with the following error message if security is not set-up correctly:

You can assign the necessary privileges to a role with the following statement (using you have the permissions to manage GRANTS):

GRANT EXECUTE TASK ON ACCOUNT TO ROLE myRole;

After the root task has been enabled, we can see that it will run every minute with the following query:

select * from table(information_schema.task_history()) where schema_name = 'STAGING' order by scheduled_time;

But, only the root task has been executed. All the other tasks are still in the suspended state! Luckily, we can all enable them at the same time, instead of executing the ALTER TASK … RESUME for each task. We can do this with the system function TASK_DEPENDENTS_ENABLE.

SELECT system$task_dependents_enable('STAGING.ROOT_COPYINTO');

We can see all tasks are now enabled and in the "started" state:

After waiting some time, we can see the tasks have been executed:

If you want to take a look at the SQL statements executed by the tasks in the query history, you have to enable the following checkbox:

We can add a new file to the Blob Container and it will be picked up automatically by the tasks. Here's a file with an update to an existing row and a new customer:

You can download the sample file here.

Adding a Task to an Existing Tree

After creating an execution tree with tasks, it's possible to add new tasks to the tree. For example, in our initial set-up we didn't truncate the staging table, which can lead to issues as multiple versions of the same dimension member are loaded into the staging table. Let's add a task to the tree that will truncate the staging table once we've updated our dimension.

CREATE TASK STAGING.GREATGRANDCHILD1_TRUNCATE

WAREHOUSE=COMPUTE_WH

AFTER STAGING.GRANDCHILD1_INSERT

AS

TRUNCATE TABLE staging.CustomerStaging;

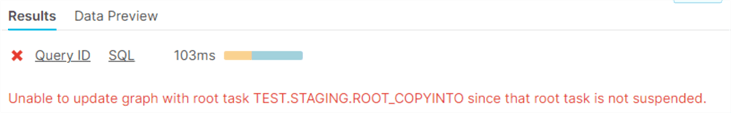

But it's not that easy, Snowflake won't let us update the tree:

It's not possible to update a tree while the root task is enabled. Let's disable it first:

ALTER TASK STAGING.ROOT_COPYINTO SUSPEND;

This will disable the root task, while other tasks remain enabled.

We can now add the extra task and re-enable the tree.

SQL Server Comparison

SQL Server doesn't have the concept of a "task", where a SQL statement can be scheduled or the concept of an execution tree like we discussed in this tip. However, SQL Server provides many powerful (and perhaps even better alternatives): SQL Server Agent Jobs. With a job, you can schedule T-SQL statements (and more than 1 single statements), but you also have the additional capabilities of automatic retries, other types of tasks (SSAS, SSIS, PowerShell …), alerting and so on.

You can also create complex workflows using SSIS packages and schedule those.

Conclusion

Since Snowflake is lacking a tool like SQL Server Agent, tasks are a welcome addition to the Snowflake toolset. Using tasks, you can schedule your SQL statements to build an ELT flow into Snowflake. The concepts are promising, but currently a task can have only one single SQL statement. You can call a stored procedure though, if you like programming in JavaScript.

Next Steps

- You can download the sample files used in this tip here.

- To learn more about Snowflake, check out the tutorial.

- Check out this tip - Getting Started with Snowflake Tasks.

- Some useful documentation about tasks:

- Cancel running tasks with USER_TASK_CANCEL_ONGOING_EXECUTIONS

- You can fetch the name of the task inside the SQL statement (to insert it into a metadata column for example) with the function CURRENT_USER_TASK_NAME

- CREATE and ALTER TASK

- Introduction to Tasks

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2021-08-09