By: Koen Verbeeck | Updated: 2022-01-03 | Comments (5) | Related: 1 | 2 | 3 | > Azure Data Factory

Problem

We're starting a new data warehouse project soon and we've not yet decided on which business intelligence ETL tool to use for our data pipelines. Our team has a lot of experience developing Integration Services (SSIS) packages, but the data warehouse will use a lot of data sources in Azure so Azure Data Factory is also on the table. Which tool is the best option for data integration, orchestration and automation?

Solution

As it usually goes with these types of questions, the answer is "it depends". In this tutorial, we will cover some pro and cons of each tool so you can make an informed decision in the end. For an introduction to both tools, see the following tips and tutorial:

- SQL Server Integration Services SSIS 2016 Tutorial

- What is SSIS?

- What is an SSIS Package

- Azure Data Factory Overview

- Getting Started with Azure Data Factory - Part 1 and Part 2

- What are Data Flows in Azure Data Factory?

Purpose

Both tools are built for reading from data sources, writing and transforming data. This means both can cover a lot of the same use cases. However, SSIS was released in 2005. At that point in time, there was no Azure. SSIS is mainly an on-premises tool and is most suited for on-premises use cases. Microsoft Azure Data Factory (ADF) on the other hand is a cloud-based tool. Its use cases are thus typically situated in the cloud.

SSIS is an ETL tool (extract-transform-load). It is designed to extract data from one or more sources, transform the data in memory - in the data flow - and then write the results to a destination.

ADF on the other hand is more of an ELT tool (extract-load-transform) for data movement. You extract data from one source and then write it to another source. There are possible data transformations during this transfer, such as converting from one file format to another, but these are rather limited. You can roughly compare ADF with a control flow in SSIS.

ADF is typically used as an orchestrator. You load data from one place to another and then you kick off some other process (Azure Functions, Azure Logic Apps, a stored procedure, etc.) that does the actual transformations using another compute engine. That being said, SSIS can also be perfectly used for ELT scenarios: you just load data from one location to another in a data flow task and then you orchestrate SQL statements for example using the Execute SQL Task.

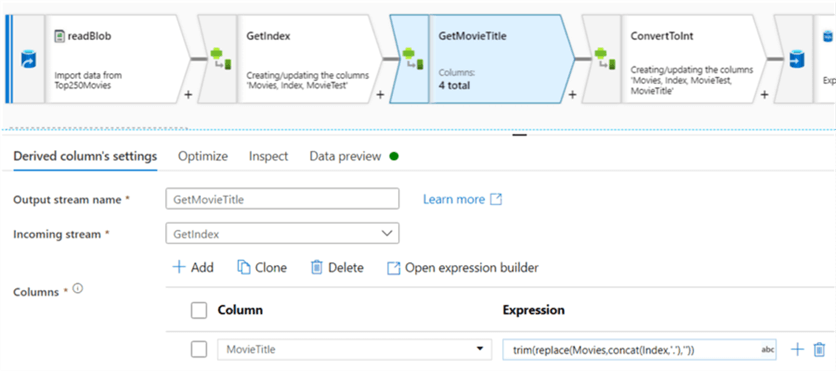

ADF supports ETL scenarios using data flows. You either have the normal data flow (previously called mapping data flow) which uses Azure Databricks behind the scenes and which bears some resemblance with SSIS data flows.

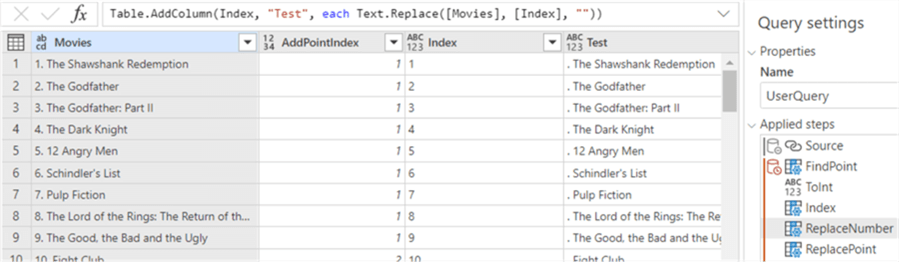

The other type of data flow is the Power Query data flow (previously called the wrangling data flow). This uses the Power Query mashup engine. In contrast with all the other options, here you can see the actual data being transformed while you develop the flow.

The big difference between SSIS and ADF in ETL scenarios is that data flows in ADF are meant for big data scenarios, while SSIS is typically used in smaller to medium data sets. We will come back to this when we talk about performance in the next section.

To conclude this paragraph: SSIS is an on-premises ETL tool which can also be used for ELT. ADF is a cloud tool for building ELT-type data pipelines. Using the data flow features, it's also possible to support ETL use cases in ADF.

Performance

SSIS can be very fast, if designed correctly. Since most transformations (such as data conversion, adding new columns using expressions, splitting the data based on conditions etc.) are done on data in memory buffers, it goes very fast. However, some transformations like sorting need to read all the data in memory first before they start to output any rows. This means that an SSIS package can grind to a stop if you're not careful. You can find some performance best practices in the following tips:

- Improve SSIS data flow buffer performance

- Semi-blocking Transformations in SQL Server Integration Services

If your data flow has no blocking transformations, performance is typically defined by either the read speed of the source or the write speed of the destination. If you use SSIS as an ELT tool, meaning most transformations are not done in SSIS itself but rather in a database engine for example, SSIS doesn't have much impact. SSIS can then be run on a lightweight server. The number of CPU cores will determine how much tasks SSIS can run in parallel:

#tasks in parallel = #cores + 2

SSIS is a great orchestrator, since it allows you to run SQL statements in parallel, which is not possible in a stored procedure or in a SQL Server Agent job.

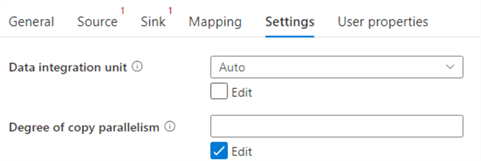

ADF is a cloud tool and thus benefits from the elastic nature of the cloud. When using the Copy Activity, ADF will take care of scale and parallelism automatically when using the default settings:

Data flows in ADF use Apache Spark behind the scenes and it has some optimization features such as partitioning. If a data flow starts up for the first time, the Spark cluster needs to be started as well which brings some additional overhead. If you have long running jobs this overhead is negligible, but for small datasets it's possible the cluster start-up time is much longer than the actual runtime of your data flow. This is why ADF data flows are more suited for bigger data sets while SSIS is better suited for small to medium data sets.

For more info on ADF performance, check out the official documentation:

- Copy activity performance and scalability guide

- Copy activity performance optimization features

- Mapping data flows performance and tuning guide

- Optimizing performance of the Azure Integration Runtime

Developer Experience

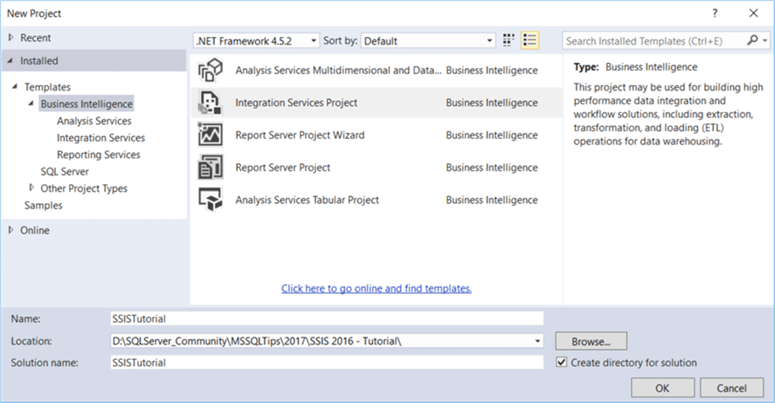

Since SSIS is an on-premises tool, packages are developed on-premises as well. These packages are developed in Visual Studio. This means every developer needs to have some sort of Visual Studio installed (either a full-blown licensed version, or the free community edition or a SSDT shell for older versions) on their machine. You can find more info in SQL Server Integration Services SSIS Versions and Tools.

When debugging an SSIS package, the package is run inside Visual Studio on the machine of the developer. This can result in heavier memory and CPU requirements for the work machine, especially if you're dealing with large datasets in the data flow. Visual Studio sometimes crashes, which can lead to a frustrating developer experience. You can also only debug one single package at a time in Visual Studio, but you can create a parent package though which can execute multiple child packages.

For new features, you either have to upgrade to a newer version of SSIS (and possibly Visual Studio as well) or you have to upgrade the SSIS add-on.

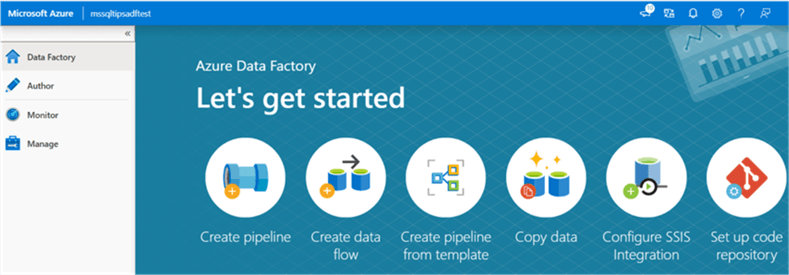

ADF on the other hand is only available in the cloud. Pipeline development is done in Azure Data Factory Studio in the browser. There's no installation of tools required, you only need a machine with an Internet connection.

An advantage of ADF is that it is automatically upgraded by Microsoft for you. You can also debug multiple pipelines at once.

DevOps

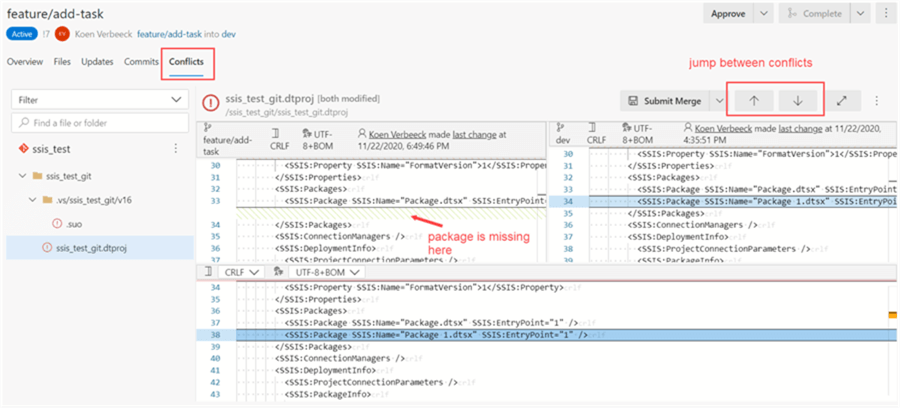

Both tools support version control. You can integrate SSIS with TFS and git (and actually any type of version control that can monitor files on your local machine), while ADF can be integrated into Azure Devops git or Github. This means both SSIS and ADF support team-based development using branching, merging, etc. However, SSIS is stored as XML behind the scenes. Since SSIS stores the layout of all the tasks and the components, as well as all connection information and possible .NET script tasks/components, this XML tends to be really complex. It is advised that only one single developer works on an given SSIS package at a given point in time. Manually merging SSIS files is a real nightmare. You also have the SSIS project file, which can also lead to merge conflicts if multiple developers are working on the same project. Luckily this file is somewhat easier to merge.

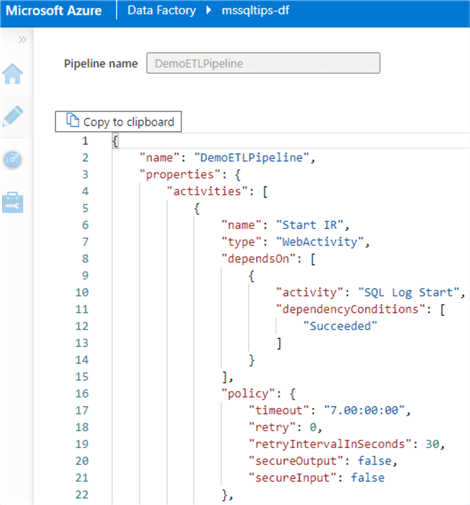

All ADF artifacts are JSON code (in contrast with the XML of SSIS) and since ADF doesn't store layout info the JSON tends to be relatively easy to understand.

SSIS packages and ADF artifacts can be deployed using release pipelines in Azure Devops (or similar tools). The tip Using Azure DevOps CI CD to Deploy Azure Data Factory Environments gives an excellent explanation on how you can set this up for ADF.

More info:

- How to Add SQL Server Integration Services Projects to Azure Devops for Version Control

- Resolve Git Merge Conflict for SSIS Projects

Code Generation

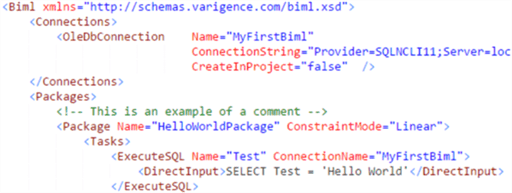

Developing many SSIS packages can be tedious and time consuming. If you need to develop a lot of packages with a similar pattern, for example staging packages who copy data from one server to another, it might be beneficial to generate the SSIS packages instead of creating the manually. One option is to use the Import/Export wizard in SQL Server Management Studio, but a more flexible and powerful one is using the Biml language to generate SSIS packages. Biml is an XML dialect where you specify the contents of an SSIS package. Using .NET code and metadata, you can make this XML specification dynamic and generate hundreds of SSIS packages on the fly.

You can learn all about it in the Biml tutorial. Biml is definitely nice to have in your toolbelt if you need to develop a lot with SSIS. The problem is it comes with a learning curve and the coupling of .NET with XML can be confusing. It can take some time to develop an SSIS pattern in Biml.

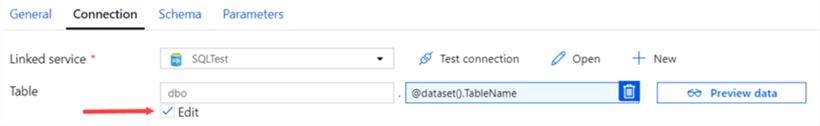

Because ADF has a simpler JSON schema, you could write an app that generates the JSON code for all your artifacts. But you actually don't need to. If you for example need to load 100 csv files to an Azure SQL DB, you can create one single pipeline with two data sets (one for csv, one for the database) and you parameterize everything. The automatic mapping of in the Copy Activity takes care of the rest. This pattern is described in the tip How to Load Multiple Files in Parallel in Azure Data Factory - Part 1 and part 2. The blog post Dynamic Datasets in Azure Data Factory also gives a good explanation.

Cloud vs On-Premises

It's clear that in some use cases it's straight forward to choose which tool to use. If your project is going to be fully in the cloud, ADF seems to be the obvious choice. If everything is on-premises, SSIS seems a no-brainer. However, there are some interesting use cases where we could choose for a hybrid approach.

SSIS in the Cloud

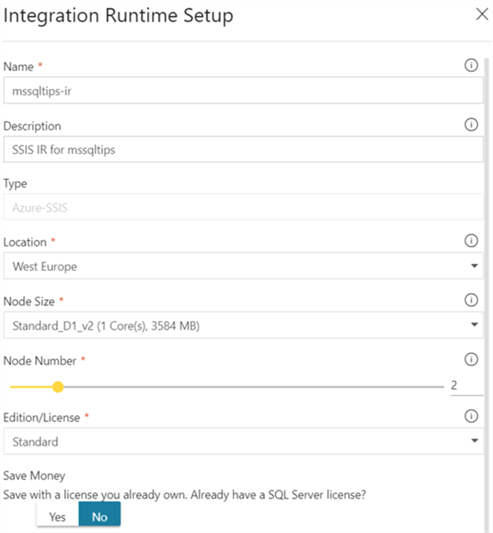

You can run SSIS packages inside ADF by using the Azure-SSIS Integration Runtime. This is a cluster of virtual machines, managed by ADF, where you can execute SSIS packages. You pay for each second the cluster is running. The SSIS project itself is stored in an SSIS catalog in either Azure SQL DB or in Azure SQL Managed Instance.

The tip Configure an Azure SQL Server Integration Services Integration Runtime explains how to set-up an Azure-SSIS IR. Why would you use this type of solution?

- You already have existing SSIS projects and you don't wish to convert them all the ADF pipelines. You rather want to "lift-and-shift" everything as-is to the cloud.

- You have a lot of experience of developing SSIS projects but very little in ADF. You can leverage your existing knowledge and immediately start developing at your project full speed by running SSIS in ADF.

- SSIS is very extensible using .NET code or by calling external processes. Maybe there's some functionality missing in ADF which is very easy to implement in SSIS.

Moving Local Data with ADF

You cannot run ADF on-premises. However, there's the self-hosted integration runtime.. If you need to reach data on-premises in ADF, you need to install this runtime on your local network so ADF can make a connection to your data. This runtime acts like a gateway. But what if both the source and the sink are on-premises? Turns out you can still use ADF! Through the runtime, you can copy data from one location in your local network to another local location. The runtime is smart enough to realize everything is local, and doesn't send the data to Azure and back.

This hybrid scenario is useful when you simply want to copy data between two systems and you don't want to create dozens of SSIS packages. Using parameterized data sets and some metadata (as mentioned in the previous section), you can very quickly create a solution for migrating data for a low cost.

Conclusion

Both SSIS and ADF are used to move and transform data. SSIS is a mature tool that's being around for more than a decade. But there's hasn't been much changes to the product in the last releases. ADF on the other hand is a cloud-native tool which is still continuously evolving.

When you need to choose between the two, the following questions might help reach a decision:

- Is the project in the cloud? If yes, ADF is the logical choice most of the time. If not, SSIS is probably better suited.

- Are you going to handle large amounts of data? If yes, ADF and its data flows using the Spark engine are typically better suited for big data workloads.

- Do you already have a lot invested in SSIS projects? In that case, you can lift-and-shift your existing projects to ADF if you want to migrate to the cloud. You can choose to create new projects in ADF or in SSIS, depending on your team's skills and desire to learn new technologies.

- What kind of development machines are being used? Developing SSIS packages requires Visual Studio which means Windows development machines. If your company uses another operating system like iOS, ADF might be a better option.

Next Steps

- What criteria do you use? Let us know in the comments!

- More info on the moving SSIS to ADF:

- Joost van Rossum has written about SSIS and Azure Devops:

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2022-01-03