By: Svetlana Golovko | Updated: 2022-01-18 | Comments | Related: > Database Design

Problem

Sparse columns were added with SQL Server 2008 as a new feature that helps save space when columns have a high ratio of NULL values. We have a couple of very large and wide tables with Nullable columns and most of them don't have data. We'd like to take advantage of sparse columns to save space. We ran SSIS (SQL Server Integration Services) Data Profiling Task to find sparse columns candidates (Null Ratio profile). With more than 500 candidate columns it will be hard to review and alter each column individually. How can we use the SSIS Data Profiling output XML file? Can we generate a script to modify columns based on Data Profiling output and apply to this script sparse columns requirements/restrictions?

Solution

As per this Microsoft article you can: "Consider using sparse columns when the space saved is at least 20 percent to 40 percent." The same article has a table that provides estimated space saving by data types.

In our example below we will consider only columns that have at least 60% of Nullable columns. To check if a column is already set as sparse we can query sys.columns system catalog view (is_sparse or is_column_set columns):

SELECT [name] AS column_name, is_sparse, is_column_set

FROM sys.columns

WHERE OBJECT_NAME(object_id) = 'sparce_tbl_test' -- table name

AND (is_sparse = 1 OR is_column_set = 1)

There are some limitations for the columns that might be converted to sparse. We will include most of these restrictions as filters in our solution/script. We will create a test table with nullable columns and populate this table with some data. Then, we will run SSIS Data Profiling Task to estimate Null Ratio in our columns.

Create and Populate Test Table

We will use this table to use for our tests and to create and test a demo script. Note, that indexes created only for the sparse columns limitations demonstration:

CREATE TABLE [dbo].[sparce_tbl_test](

[col1] [int] IDENTITY(1,1) NOT NULL,

[col2] [varchar](20) NOT NULL,

[col3] [varchar](20) NULL,

[col4] [varchar](20) NULL,

[col5] [varchar](20) NULL,

[col6] [varchar](20) NULL,

[col7] [varchar](20) NULL,

[col8] [int] NULL,

[col9] [int] NULL,

[col10] [int] NULL,

[col11] [int] NULL

) ON [PRIMARY]

GO

CREATE CLUSTERED INDEX [CLIX_sparce_tbl_test] ON [dbo].[sparce_tbl_test] ([col8] ASC)

GO

CREATE NONCLUSTERED INDEX [NCLIX_sparce_tbl_test_1] ON [dbo].[sparce_tbl_test] ([col10] ASC)

GO

We will populate the first two columns with some data:

SET NOCOUNT ON

GO

INSERT INTO dbo.sparce_tbl_test (col2,col3) SELECT CAST(RAND() AS VARCHAR(20)),' c3'

GO 10

INSERT INTO dbo.sparce_tbl_test (col2) SELECT CAST(RAND() AS VARCHAR(20))

GO 20000

SSIS Data Profiling Task

Now we will create SSIS package and run the SSIS Data Profiling Task to estimate Null values percentage in our test table.

Here are high-level steps:

- Create the new SSIS package under the new or existing SSIS solution

- Add "Data Profiling Task" from the SSIS Toolbox to the package's Control Flow:

- Configure SSIS Destination (file that will have XML output with results of the Data Profiling):

- We'll configure Profile by clicking on "Quick Profile…" button and selecting to compute only "Column Null Ratio Profile":

- And after configuring and running a "Quick Profile" we will have Request configuration that has SQL Server name, database name, and our test table name:

-

The "ConnectionManager" will be our SQL Server and database, "TableOrView" – the sparse candidate table and all columns (*) as the "Column" property.

This tip has more details on how to configure and run SSIS Data Profiling Task (mssqltips.com).

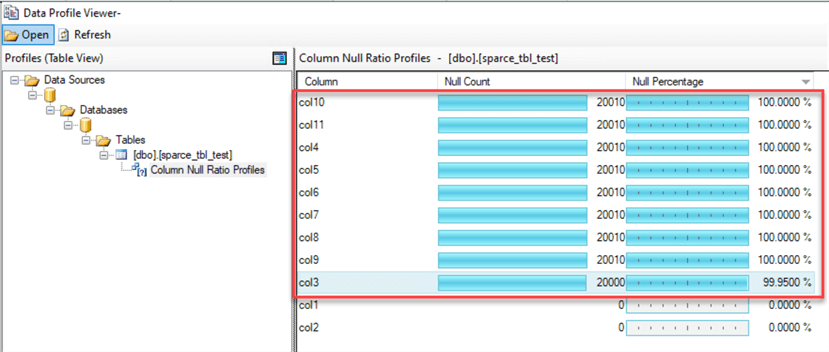

After executing the SSIS package we can see in a Data Profile Viewer that all columns except col1 and col2 might be good candidates for converting to the sparse columns:

You can also identify columns qualified for the sparse columns conversion by using the T-SQL script as described in this tip.

Using Data Profiling Output File

During Data Profiling Task configuration in SSIS we specified the Destination file:

This file has an XML structure, and we will use it in our script to analyze the output.

We will join XML output with System Catalog Views that contain information about columns, indexes, and data types (sys.columns, sys.indexes, sys.index_columns, and sys.types).

Note, that the output file should be located on a server from where we run our script. In our case we copied it over from SSIS server to the SQL Server with our "db_sparse_test" database to this location: "C:\temp\DataProfileOutput.txt". We will use this location in our script below.

We will also incorporate in our query some filters that will return qualified columns based on the sparse columns restrictions:

USE db_sparse

GO

DECLARE @profiles xml

SELECT @profiles = P

FROM OPENROWSET (BULK 'C:\temp\DataProfileOutput.txt', SINGLE_BLOB) AS Profiles(P)

DECLARE @hdoc int

EXEC sys.sp_xml_preparedocument @hdoc OUTPUT, @profiles, N'<root xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:xsd="http://www.w3.org/2001/XMLSchema" xmlns:d="http://schemas.microsoft.com/sqlserver/2008/DataDebugger/"/>'

;WITH dp AS

(SELECT * FROM OPENXML(@hdoc, N'/d:DataProfile/d:DataProfileOutput/d:Profiles/d:ColumnNullRatioProfile/d:Column')

WITH (

[Schema] VARCHAR (200) '../d:Table/@Schema',

[Table] VARCHAR (200) '../d:Table/@Table',

[ColName] VARCHAR(100) '@Name' ,

[RowCount] INT '../d:Table/@RowCount',

[NullCount] INT '../d:NullCount')

)

SELECT DISTINCT dp.* ,

NullCount*100.0/[RowCount] PercentOfNullable,

t.[name] data_type_name,

c.max_length,

c.[precision],

c.scale,

CASE WHEN ISNULL(ic.index_id, 0) <> 0 THEN '-- ' ELSE '' END +

'ALTER TABLE dbo.' + dp.[Table] + ' ALTER COLUMN ' + dp.[ColName] + ' ' + t.[name] +

CASE WHEN t.[name] LIKE '%char%' THEN '(' + CAST(c.max_length AS VARCHAR(20)) + ')'

WHEN t.[name] = 'decimal' THEN '(' + CAST(c.[precision] AS VARCHAR(20)) + CAST(c.scale AS VARCHAR(20)) + ')'

ELSE ''

END

+ ' SPARSE NULL; ' AS AlterScriptSample,

INDEXPROPERTY(ic.OBJECT_ID, i.name,'IsClustered') AS HasClusteredIndex, --A sparse column cannot be part of a clustered index or a unique primary key index.

INDEXPROPERTY(ic.OBJECT_ID, i.name,'IsUnique') AS HasUniqueIndex,

CASE WHEN ISNULL(ic.index_id, 0) <> 0 THEN 'Review indexes dependencies'

ELSE '' END AS Notes

FROM dp JOIN sys.columns c

ON dp.[Schema] = OBJECT_SCHEMA_NAME(c.object_id)

AND dp.[Table] = OBJECT_NAME(c.object_id) AND dp.[ColName] = c.[name]

JOIN sys.partitions p

ON c.object_id = p.object_id

JOIN sys.types t

ON c.system_type_id = t.system_type_id

LEFT JOIN sys.index_columns ic

ON c.object_id = ic.object_id AND c.column_id = ic.column_id

LEFT JOIN sys.indexes i

ON ic.object_id = i.object_id AND ic.index_id = i.index_id

WHERE NullCount*100.0/[RowCount] > 60 -- more than 60% of data has NULLs

AND c.is_nullable = 1 -- only NULLable columns can be sparse

AND t.[name] NOT IN ('geography', 'geometry', 'image', 'ntext', 'text' ,'timestamp') -- unsupported data types (can't be sparse)

AND t.is_user_defined = 0 -- unsupported data types (can't be sparse)

AND c.is_identity = 0 -- can't be sparse

AND c.is_filestream = 0 -- can't be sparse

AND c.is_rowguidcol = 0 -- can't be sparse

AND c.rule_object_id = 0 -- can't be sparse

AND c.default_object_id = 0 -- can't be sparse

AND c.is_computed = 0 -- can't be sparse

AND c.is_sparse = 0 -- already sparse

AND p.data_compression_desc = 'NONE' -- table can't be compressed

ORDER BY 11 DESC;

EXEC sys.sp_xml_removedocument @hdoc

After applying these rules we have got the results below:

- Only Nullable columns will be displayed as candidates to the sparse columns conversion

- Some data types (including user-defined data types) can't be used with the sparse columns

- Column cannot have the Filestream attribute

- Column cannot have the Identity property

- Column cannot have the Rowguidcol property

- Candidate columns cannot have rules or defaults bound to them

- We exclude columns that are already sparse

- Only return columns where 60% or more data has NULLs

- The table cannot be compressed

- Columns with indexes on them should be reviewed. The line with these columns in the script will be commented out. Some indexes might need to be dropped and re-created after the column conversion to sparse. Other indexes cannot be used with the sparse columns (see "restrictions" section of the Use Sparse Columns - SQL Server | Microsoft Docs).

Note, that we commented out part of the script where indexes have to be potentially dropped and recreated (or where additional review is required based on Microsoft's article):

ALTER TABLE dbo.sparce_tbl_test ALTER COLUMN col9 int SPARSE NULL;

ALTER TABLE dbo.sparce_tbl_test ALTER COLUMN col7 varchar(20) SPARSE NULL;

ALTER TABLE dbo.sparce_tbl_test ALTER COLUMN col6 varchar(20) SPARSE NULL;

ALTER TABLE dbo.sparce_tbl_test ALTER COLUMN col5 varchar(20) SPARSE NULL;

ALTER TABLE dbo.sparce_tbl_test ALTER COLUMN col4 varchar(20) SPARSE NULL;

ALTER TABLE dbo.sparce_tbl_test ALTER COLUMN col3 varchar(20) SPARSE NULL;

ALTER TABLE dbo.sparce_tbl_test ALTER COLUMN col11 int SPARSE NULL;

-- ALTER TABLE dbo.sparce_tbl_test ALTER COLUMN col8 int SPARSE NULL;

-- ALTER TABLE dbo.sparce_tbl_test ALTER COLUMN col10 int SPARSE NULL;

Testing the Script with a Real Table

We ran the same process on our large table that has 581 columns.

After running SSIS Data Profiling Task only 13 columns had less than 60% of NULLs and 1 column didn't qualify (had timestamp data type). The space used by the data (sp_spaceused) after converting columns to sparse dropped from 3,076 MB to 206 MB:

Note, that this script was tested with specific tables and data types. Make sure you run it in a Non-Production environment first and test dependent application(s) before making any changes in Production. Also, review additional sparse columns restrictions in this Microsoft's article before altering columns.

Next Steps

- Read this tip: SQL Server Sparse Columns Identifying Columns For Conversion. This is another way (without SSIS) of finding sparse columns candidates.

- Check this blog SQL SERVER - Performance Benefit of Using SPARSE Columns? and find more links with sparse columns posts on the same page.

About the author

Svetlana Golovko is a DBA with 13 years of the IT experience (including SQL Server and Oracle) with main focus on performance.

Svetlana Golovko is a DBA with 13 years of the IT experience (including SQL Server and Oracle) with main focus on performance. This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2022-01-18