By: Ron L'Esteve | Updated: 2022-03-03 | Comments | Related: > Azure Synapse Analytics

Problem

Synapse Analytics is a data and analytics platform as a service that unifies data integration, data warehousing, big data analytics, reporting, CI CD and much more within the Modern Azure Data Platform. It supports a variety of tools such as workspaces for developing code for BI, ML, and ELT within the Lakehouse. The workspace supports notebook and pipeline development, scheduling, monitoring, reporting, collaboration through repos and more. Customers are interested in understanding the capabilities of Synapse Analytics workspaces, specifically around storage, compute, development, integration, management, and monitoring.

Solution

Synapse Analytics workspace is an Azure-native unified data and analytics platform that supports data integration, data warehousing, advanced analytics and real-time analytics on data of any volume, velocity, and variety. With Synapse Analytics workspaces, you can build ELT pipelines, analyze and query big data in your data lakes through the secure and well-governed features within the platform.

In the following sections, you will learn more about some of the feature offerings of Synapse Analytics workspaces which include robust storage offerings, extensive development notebooks which include scripts and templates, and integration capabilities through pipelines similar to Data Factory along with reusable templates. Finally, you will learn more about the monitoring and management capabilities within Synapse Analytics workspaces including compute, repos and more.

The figure below shows the home page of Synapse Analytics workspace.

The Knowledge Center, shown in the figure below offers open datasets and sample code via a gallery containing reusable templates for notebooks, scripts, datasets, pipelines, and databases. If you're new to the workspace, you'll have the option to tour the Synapse Studio as well.

Storage

Synapse Analytics offers a variety of Data Storage options from within its workspace. These options include SQL databases, Lake databases, Data Explorer database. You'll also have the ability to connect to external data, integration datasets, and a selection of openly available datasets from the gallery.

SQL Database

When creating a SQL Database within the Synapse Analytics workspace, you'll have the option to select from either a Serverless or Dedicated SQL Database.

The Dedicated option is a provisioned MPP style SQL data warehouse measured in Data Warehousing Units (DWU). An MPP engine runs on a Control Node to orchestrate the execution of queries in parallel while the Compute Nodes execute queries by distributing the work equally among them from 1 to 60 based on the Data Warehouse Units (DWU). With Dedicated SQL Pools, you'll have the benefit of Sharding patterns such as round-robin, replicated, and hash distributions. Dedicated SQL Pools also bring the benefit of indexes such as clustered column store indexes. You'll also have access to partitioning options within Dedicated SQL Pools. A Serverless SQL pool, on the other hand, does not need to be provisioned since there is no infrastructure to set up. Additionally, with its pay-per-query model, users only pay for data processed by queries that are run.

Lake Database

With Lake Databases, you'll be able to create, organize, and manage external tables in Synapse Analytics workspace from data stored in your ADLS gen2 account. In addition, you'll have access to a collection of database templates and a UI driven database designer, which also allows you to create a data model and refine relationships, metadata, and much more. When you query data in your Lake Database, it would be using Apache Spark pool so you would only pay per query executed. The available database templates are specific to industry schemas and data models and can easily be customized.

With Lake Databases, you'll also be able to run cross database queries across ADLSgen2 accounts in different regions that have been persisted as an External Table within the Lake Database. Since Lake Databases leverage Apache Spark heavily, you'll have access to its open-source indexing subsystem called Hyperspace. Similar to a SQL Server non-clustered index, Hyperspace will allow you to create an index across a specified data-frame, create a separate optimized and re-organized data store for the columns that are being indexed, and include additional columns in the optimized and re-organized data store, much like a non-clustered SQL Server index.

Integration Datasets

Since your Synapse Analytics workspace is truly a unified data platform, you'll have the ability to create ELT Synapse pipelines and data flows, much like how you would create them in Data Factory (ADF). Similar to ADF, you'll need to create linked services and datasets containing connection strings and references to your source data. An Integration Dataset within Synapse Analytics workspace follows this same concept. When configuring a new Integration Dataset, you'll have access to a variety of Azure and non-Azure services that you'll be able to create datasets for.

External Datasets

By default, when you create a Synapse Analytics workspace, you'll need to link an ADLSgen2 account. You'll be able to run queries against data in this and other accounts that you add by creating additional External Tables to these sources which can include Cosmos DB (MongoDB and SQL API), ADLS gen2, Blob, and Data Explorer. Once your connection is verified and you've created the External Table, you can run an OPENROWSET command within your SQL Query.

Development

There are a few ways to get started with development in Synapse Analytics workspaces. You can write SQL scripts, which run on SQL Serverless Pools to query either your Lake or SQL database. Since Data Explorer is also integrated with Synapse Analytics workspaces, you can query logs and telemetry data from the workspace by writing Kusto Query Language (KQL) scripts. You can also create a Notebook to write scripts in either PySpark(Python), Spark(Scala), .NET Spark (C#), or Spark SQL. On your scripts are developed and published, they can be run on Apache Spark Pools. With Data Flows, you be able to create Apache Spark based custom GUI driven transformations, similar to Mapping Data flows in ADF. Apache Spark job definitions allow you to upload definition and reference files along with customize submission details related to Spark pools, executors and more. Finally, within the Gallery, you'll have access to Notebooks containing reusable scripts that can be customized for a variety of use cases across multiple languages.

Integration

Integrating data within Synapse Analytics workspaces has never been easier with its Data Factory like UI experience. With Pipelines, you can create custom ELT workloads to move, transform, and integrate a variety of data platform services and functions such as ADLSgen2, Databricks, Snowflake, Functions, SQL Database, Lake Database, and Dedicate SQL Pools using Spark Jobs, Stored Procedures, Data Flows and more.

The Copy Data Tool, provides a GUI driven experience for building reusable pipelines based on templates to copy data from over 90+ sources to various sinks, which also include Synapse Dedicated SQL Pools and more. By entering the configurations required in the UI, the Copy Data Tool will generate artifacts including pipelines, datasets, and linked services and will create a task cadence and schedule for you. Similarly, the meta data-driven copy task will provide you with parameterized pipelines and a control table. The pipelines will come pre-configured to connect to this control table and read meta data. You will be able to access and update this control table without the need to re-deploy your pipelines. Other Integration services offered by Synapse Analytics workspaces include Gallery pipeline templates which include numerous re-usable use case ELT pipelines, along with the capability of importing pipeline templates and resources from support files.

Monitoring

The Synapse Analytics workspace Monitor hub provides a GUI based experience for monitoring workloads related to SQL, Apache Spark, and Data Explorer pools. You'll be able to track status of these analytics pools including if they are on-line, size, CPU and memory utilization. You will also be able to track activities related to SQL and KQL requests, Spark applications, and Data flow debug sessions. Some of the metrics that you can track for these activities include request content, submission times, duration, data processed, submitter, and status. Finally, similar to ADF, you will be able to monitor pipeline and trigger runs, and integration runtimes.

Management

It is within the Manage hub of Synapse Analytics workspace where you will be able to create and configure SQL, Spark, and Data Explorer pools which will be available immediately for your workspace. External connections such as Linked Services and connectivity to your Azure Purview account can also be created in this hub. From an integration stand-point, you'll be able to create a variety of triggers such as tumbling windows, storage, and custom events with custom recurrences along with integration runtimes which could include either Azure or Self-hosted IRs. From the perspective of security management, access controls and can be managed from this hub to grant others access to the workspace or workspace items using Role Based Access Controls (RBAC). Credentials can be created through managed identities and service principals based on AAD authentications. With workspace packages, .whl or .jar files can be uploaded and used by the notebooks scripts which run on Apache Spark pools with the workspace.

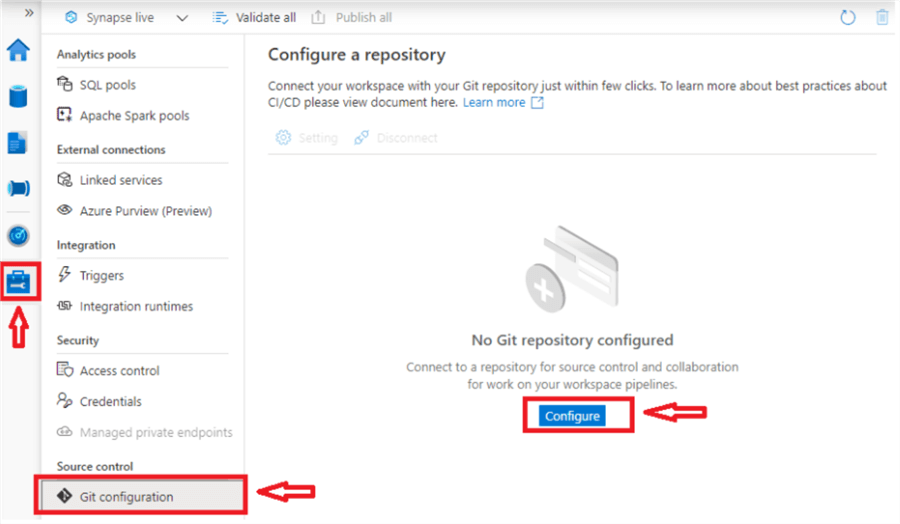

For source controls and configurations, by default, your Synapse Analytics workspace will be connected to Synapse Live, which is similar to the Data Factory's publish branch which basically offers the capability of collaborating and committing code without the need for a repo. Since there are numerous benefits of checking your code into a source repository, the Synapse Analytics workspace also offers the capability of connecting your entire workspace to the source control repository, which includes Azure DevOps (ADO) as an option. This enables collaborative development and the ability to build CI / CD pipelines to incrementally test and deploy development changes to the higher environments. Once you are connected to your development repo, you will be able to commit and publish changes incrementally.

Next Steps

- Read more about Quickstart: create a Synapse workspace - Azure Synapse Analytics | Microsoft Docs

- Read more about Azure Synapse Analytics Workspace Samples (mssqltips.com)

- Read more about Azure Synapse Spark and SQL Serverless External Tables (mssqltips.com)

- Read more about What is Azure Synapse Analytics? - Azure Synapse Analytics | Microsoft Docs

- Read more about What's new? - Azure Synapse Analytics | Microsoft Docs

About the author

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master�s in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tec

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master�s in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tecThis author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2022-03-03