By: Koen Verbeeck | Updated: 2022-05-02 | Comments | Related: 1 | 2 | More > Import and Export

Problem

We're embarking on a data warehouse project. Our company has Microsoft as a preferred software vendor, but there are so many choices for moving data around from various data sources. Which tool should we choose for our ETL processes?

Solution

In this tutorial, we try to give you an overview of all the possible ETL (Extract, Transform and Load) tools in the Microsoft Data Platform. There are many different options for ELT and ELT processes, as anything that allows you to write some code can be used to ingest, transform or write data. You could even use VBA in Excel if you really want to! We're limiting ourselves to tools that are typically used in data warehousing, business intelligence and data engineering projects.

We can make distinctions between data integration tools based on a couple of criteria:

- Does it exist on-premises or in the cloud only?

- Does it involve writing a lot of code, or does the tool use a low/no-code approach?

In this part of the tip, we'll look at solutions that don't require a lot of code.

Integration Services

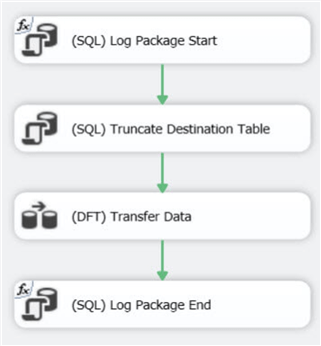

Probably the best known ETL tool of the Microsoft Data Platform is Integration Services (SSIS). It has been around since SQL Server 2005 and offers a robust environment for all your data integration needs. Development is done in Visual Studio. You create packages that perform one or more tasks in a control flow. You can have tasks like executing a SQL script, executing a .exe file, sending a mail, copying files in a file system and so on. Dependencies between tasks can be defined using precedence constraints.

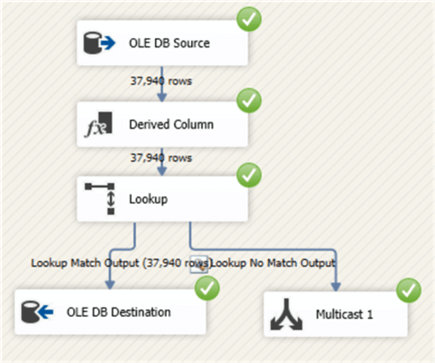

There's one special task in the control flow: the data flow. In a data flow, SSIS can extract data from various source systems, transform them on-the-fly while the data is in-memory and write it to a target system. Data transformations are for example performing calculations, converting data types, redirecting rows depending on certain criteria, sorting rows etc. The data flow is a true embodiment of the ETL principle: data is extracted from different sources, transformed and loaded in one single process to a target database perhaps a staging area or production database.

SSIS is a low code solution: you drag the boxes (tasks or transformations) onto the canvas, you connect them with each other, and you configure each component. There's some minor coding involved by writing expressions, and possibly some SQL scripting. You can also extend the functionality of SSIS using .NET scripts (either VB.NET or C#.NET).

SSIS comes together with SQL Server: if you have a SQL Server license, SSIS is free of charge. However, if you want to install SSIS on a dedicated server, this means you need a SQL Server license just to have SSIS. Most features of SSIS are covered in the Standard license, but some advanced features need an Enterprise license.

SSIS is typically used on-premises, but you can use it in a cloud environment using either of two options:

- You install SSIS in a virtual machine hosted in Azure. This means you're still responsible for managing updates and other maintenance.

- You can create an Azure-SSIS Integration Runtime in Azure Data Factory. This IR will create a cluster of virtual machines for you that will run the SSIS packages. Maintenance is being taken care off for you, you only need to worry about your SSIS packages. This option is great for lift-n-shift scenarios, where you want to migrate existing SSIS solutions to the cloud.

Azure Data Factory

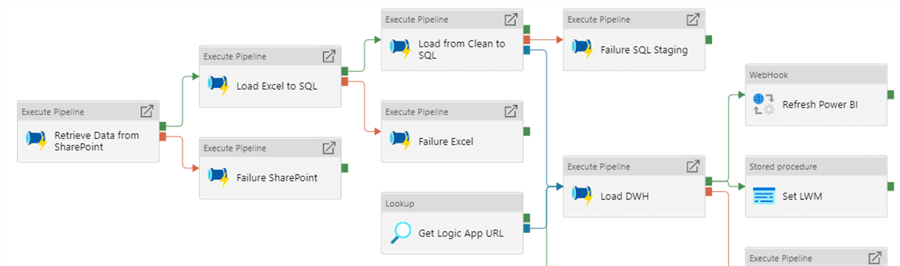

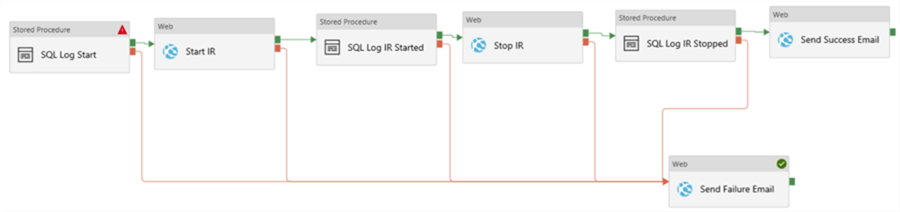

While SSIS is mainly an on-premises tool which can be used in the cloud for some use cases, Azure Data Factory (ADF) is the exact opposite. It's a cloud ETL tool and development is done in the browser. ADF executes tasks – called activities – in a ETL pipeline. Such a data pipeline resembles the SSIS control flow.

Like in SSIS, you have precedence constraints – such as success, failure or completion – that connect various activities, and which can be used to establish dependencies. However, precedence constraints in SSIS are more flexible as you can put expressions on them, and you can configure them to behave like an OR constraint. For example, if one task depends on two previous tasks, an OR constraint means that only one of the two tasks needs to finish successfully so that the third task can start. This is not possible in ADF. Let's illustrate with an example. In the following pipeline, the “Send Failure Email” activity will never be executed, because that would mean all 5 tasks would need to fail, which is not possible.

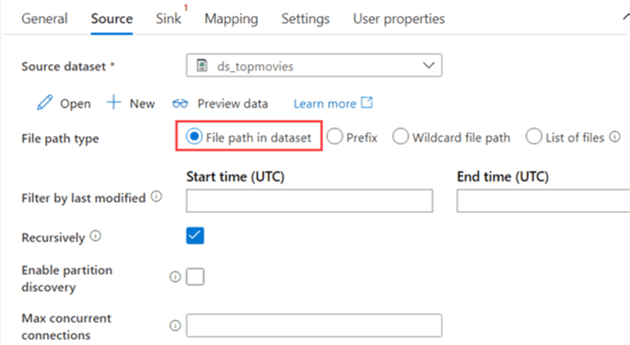

ADF has various data processing activities at your disposal, such as executing a stored procedure or a SQL script, fetching metadata from a file system, starting a notebook in Azure Databricks and so on. The most interesting one is perhaps the Copy activity. This activity will ingest data from a source and write the data to a destination (called sink). It can transform the file format on the fly. For example, you can read a JSON file from Azure Blob Storage and write the data to a table in Azure SQL DB. The Copy activity is a bit the same as a data flow in SSIS, but only with a source and a destination.

Aside from pipelines, ADF also has two types of data flows.. The regular data flow (previously called mapping data flow) resembles a data flow like in SSIS.

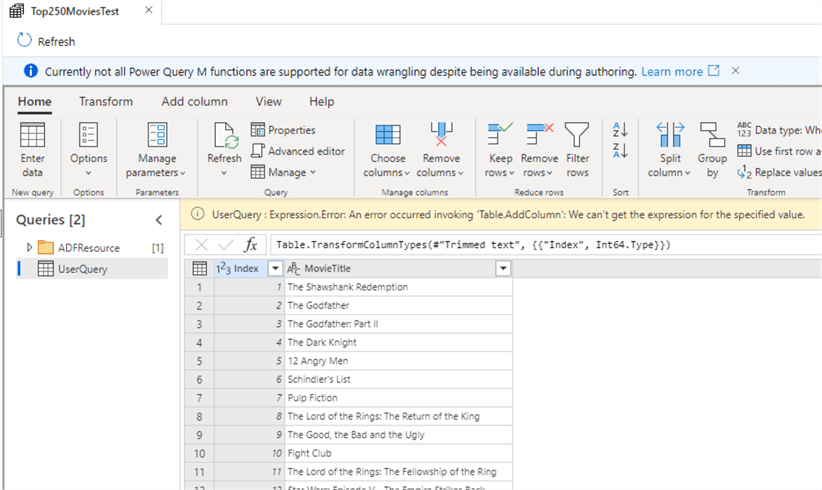

The other data flow option is Power Query, which you might already know from Power BI. The big difference between Power Query and the other data flow (and the general pipelines) is that you actually see the data while you are still developing the query.

Both Power Query and the data flow in ADF are abstractions on top of Azure Databricks (this tool will be featured in part 2). Both are typically used in big data type scenarios, as they require an underlying server cluster to spin up when you're using the data flows. For smaller volumes of data, it's recommended to use the Copy activity to fetch the data and write it to a location where you can easily execute transformations, e.g. a database where you can execute SQL scripts.

If you want to access on-premises data, such as files on your local network or a local installation of SQL Server, you can install a self-hosted integration runtime. This IR acts as a gateway between ADF and your local environment, so that ADF can fetch data from your local network. It's also possible to execute activities locally on this IR, for example if regulations prescribe data should not be sent to the cloud.

Like SSIS, ADF is low code. You can use expressions to make properties more dynamic, but most code you write will probably be scripts executed on other tools which are then orchestrated by ADF. For example, SQL scripts executed in a database, or Python scripts executed in Azure Databricks.

Azure Logic Apps

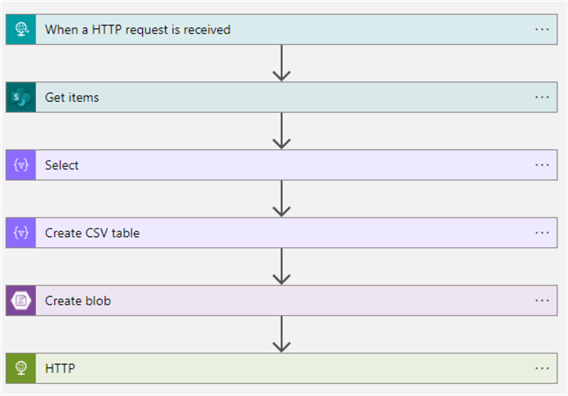

Logic Apps are a user-friendly method of creating serverless workflows that automate the integration between various services without the need of writing code. For example, here's a Logic App that reads data from a SharePoint List and writes it to a CSV file in Azure Blob Storage:

All of this is configured without writing a single line of code. Logic Apps are a feature of Azure, but there's also Power Automate in Office 365 which is more geared towards business users. Both are essentially the same. Logic Apps are typically a short workflow, triggered by an event such as an HTTP call or a file trigger on a blob container. They are not meant for long running batch flows like SSIS or ADF. You can use them to fill gaps in functionality of ADF. For example, ADF cannot send an email, or it cannot read data from a SharePoint folder, but both are easy to accomplish with Logic Apps.

Next Steps

- Stay tuned for part 2, where we'll cover code driven ETL tools in the Microsoft Data Platform.

- More tips about SSIS:

- SQL Server Integration Services SSIS 2016 Tutorial

- Tips about control flow

- Tips about data flow

- More tips about ADF:

- A couple of tips about Logic Apps:

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2022-05-02