By: Koen Verbeeck | Updated: 2022-05-25 | Comments | Related: 1 | 2 | More > Import and Export

Problem

In this two-part tutorial series, we're composing an overview of possible ETL tools in the Microsoft Data Platform. Part 1 focuses on tools or services that don't require the developer to write code (or as little as possible) for ETL processes when working on data warehousing or data management use cases. In this part, we'll look at more code-intensive solutions to build your data pipelines.

Solution

In this tutorial we will look at code intensive solutions for ETL (Extract, Transform and Load) activity when working with the Microsoft data platform such as T-SQL, Azure Functions and Azure Data Bricks for business intelligence solutions.

ETL Tools with Code Intensive Solutions

T-SQL

When building a data warehouse (or any data integration solution) and the data resides in a on-premises relational database like SQL Server (i.e. data source), you can choose to implement all the logic in T-SQL. Many data transformations can be perfectly build using plain SQL. Most likely, this logic is encapsulated in stored procedures and constructs like TRY … CATCH and transactions are used to make the SQL code more robust. T-SQL constructs like WHILE or cursors allow the developer to use more traditional programming concepts. With the use of dynamic SQL, it's possible to create flexible solutions.

In fact, the concept of "ELT" can rely heavily on the use of SQL. In ELT – in contrast with ETL where transformations are done on-the-fly, the data is loaded into the destination (the relational database) and is transformed over there. If the data is in a database, SQL is obviously the preferred scripting language to deal with the data.

There are a couple of disadvantages though when working purely with SQL alone:

- It's much harder to run code in parallel. There's no language construct in SQL to execute multiple SQL statements at the same time. It's also not possible in a SQL Server Agent job.

- T-SQL can lack some functionality. For example, there's no native support for regular expressions. You cannot extend the language easily, except for SQL CLR. However, not everyone is fond of this work around as there might be performance and security issues.

- You need to be well versed in writing SQL scripts. Some tools, like Azure Logic Apps and perhaps SSIS & ADF, make it easy for a developer to create data pipelines. When writing SQL, you need to write everything yourself. It can take a bit of a learning curve to write high-quality and performant SQL code, especially with larger datasets.

- There's limited integration with other parts of the ecosystem. Sure, there's database mail if you want to send some mails, but you cannot start a Logic App or an Azure Function from a stored procedure. If the source data is outside of the network or it isn't in a format that SQL Server supports (when using import tools like BULK INSERT or BCP), it's hard to get data in. Or if you want to start an executable, you'll need to resort to xp_cmdshell in SQL Server (which is again not preferred because of security concerns). But if your database is in Azure, you don't even have access to xp_cmdshell.

To get around those limitations, ETL pipelines aren't always fully developed in SQL alone, but rather in a combination with another tool. For example, you can use ADF or SSIS to orchestrate the execution of your SQL statements. Both tools allow for parallel execution, and they have many connectors allowing you to import/export data from various source systems and load the transformed data into target systems. They also have better integration with the many parts of the Microsoft Data Platform, especially in Azure.

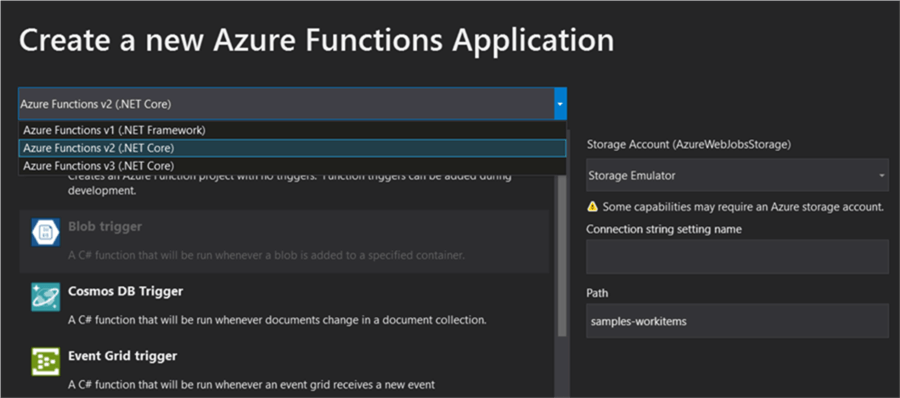

Azure Functions

Azure Functions are a bit like Azure Logic Apps. They are also serverless, event-driven and lightweight solutions, but in contrast with Logic Apps there's no visual designer. You'll need to code everything yourself! Azure Functions has support for many popular languages: C#, JavaScript, F#, Java, PowerShell, Python and TypeScript.

Azure Functions are intended to be short-lived and stateless, although it is possible to create durable functions that can keep state. Like T-SQL, using Azure Functions alone for your entire ETL implementation is probably not the best idea, but you rather implement them in a larger framework. You can for example start an Azure Function from ADF.

Some examples of Azure Functions:

- Process Blob Files Automatically using an Azure Function with Blob Trigger

- Create an Azure Function to Connect to a Snowflake Database - Part 1 and Part 2

- How to Schedule T-SQL Statements to Run with Azure Functions

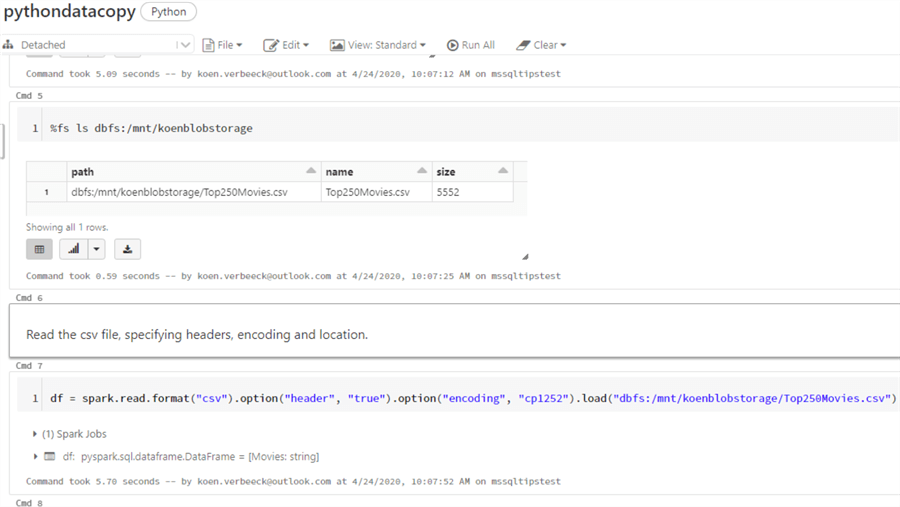

Azure Databricks

Databricks is a powerful unified data and analytics platform built on top of Apache Spark. Azure Databricks is the version that is available on the Azure platform. Azure Databricks is typically used in scenarios where the data is stored inside a data lake. It allows you to decouple storage from compute. In SQL Server, storage (data is stored in pages on disk) and compute (executing SQL scripts) are both managed within the same system. With Azure Databricks, you can store the data in cheap storage (like Azure Data Lake storage, which can hold terabytes of data for a low cost) and execute the compute in Databricks itself.

Code in Azure Databricks is written in notebooks, which can support a couple of languages: Scala, Python, R and SQL. It is possible to switch between languages in the same notebook, allowing the developer to use their language of choice.

In contrast with Azure Functions, Azure Databricks is typically used in big data scenarios. With Delta tables, you're able to keep track of changes on top of Parquet files. This allows you to build a data warehouse-style solution in Azure Databricks, sometimes referred to as "the data lakehouse".

There are many tips about Azure Databricks:

- How to Implement a Databricks Delta Change Data Feed Process

- Databricks Delta Live Tables Getting Started Guide

- Using Delta Schema Evolution in Azure Databricks

- Storage, Compute and Workspaces in Databricks

Next Steps

- You can find even more Databricks tips in this overview.

- The tip Azure Data Factory vs SSIS vs Azure Databricks gives an overview of these 3 ETL tools.

- There's a tutorial on how to create stored procedures in T-SQL.

About the author

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.

Koen Verbeeck is a seasoned business intelligence consultant at AE. He has over a decade of experience with the Microsoft Data Platform in numerous industries. He holds several certifications and is a prolific writer contributing content about SSIS, ADF, SSAS, SSRS, MDS, Power BI, Snowflake and Azure services. He has spoken at PASS, SQLBits, dataMinds Connect and delivers webinars on MSSQLTips.com. Koen has been awarded the Microsoft MVP data platform award for many years.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2022-05-25