By: Jared Westover | Updated: 2023-10-10 | Comments (5) | Related: > Fragmentation and Index Maintenance

Problem

Hundreds of articles are floating around online about index fragmentation and SQL Server. Several are 10 or 15 years old. Hardware capability has changed a lot in the past decades. We have access to cheaper SSDs, more memory, and faster CPUs. With modern hardware, do you need to worry about index fragmentation?

Solution

My hope in the coming text is to answer a few simple questions. With modern hardware, do you still need to worry about index fragmentation? How about logical fragmentation, where pages' physical and logical order don't match? Should you stop index maintenance altogether with SSDs? By the end of this article, you'll know how to make an informed decision tailored to your environment.

What is SQL Server Fragmentation?

I won't spend a lot of time explaining fragmentation. There are many articles, and I'll link to a few by Paul Randal, Brent Ozar, and others. Like the binary system, fragmentation comes in two types. The first is when your leaf data pages' logical and physical order don't match. People often refer to this type as logical fragmentation.

The second is when a data page has a bunch of empty space, called low page density. The typical side effect of low page density forces SQL to perform more page reads when you execute a query. Extra data pages lead to wasted space in the buffer pool, larger backups, and slower queries. I'm more concerned with low page density, even with older hardware.

Modern Hardware Impact on SQL Server Fragmentation

People tell me with modern hardware—SSDs for the win—worrying about index fragmentation is silly. Find something else to do, and stop rebuilding or reorganizing your indexes. Also, don't apply a fill factor because you're wasting space and causing fragmentation. What am I left to do? I can only watch so many videos on YouTube with conflicting information before I give up.

I'm not a hardware guy, but I have a fast machine with an SSD, lots of RAM, and several cores. With this modern marvel, fragmentation shouldn't cause performance issues, right? Let's build a dataset and test whether this holds true.

Building Our Dataset to Test SQL Server Fragmentation

Let's build a dataset with one table containing 20 million rows. I'll create a clustered index on the primary key, an ever-increasing integer—my preference. The non-clustered index covers a query the business runs. For non-clustered indexes, I often wait until we have usage data before creating them. Unless someone reviews index usage, they tend to stick around.

You'll need about 10GB for the database and another 5GB for the logfile. Below is the code. On my system, the query only takes about three minutes to execute. If you run this on an HDD, expect it to take much longer.

-- https://www.mssqltips.com

USE [master];

GO

IF DATABASEPROPERTYEX('FragmentationImpact', 'Version') IS NOT NULL

BEGIN

ALTER DATABASE FragmentationImpact

SET SINGLE_USER

WITH ROLLBACK IMMEDIATE;

DROP DATABASE FragmentationImpact;

END;

GO

CREATE DATABASE FragmentationImpact

ON PRIMARY

(

NAME = N'FragmentationImpact',

FILENAME = N'C:\code\MSSQLTips\SQLFiles\FragmentationImpact.mdf'

)

LOG ON

(

NAME = N'FragmentationImpact_log',

FILENAME = N'C:\code\MSSQLTips\SQLFiles\FragmentationImpact.ldf'

);

GO

ALTER DATABASE FragmentationImpact SET RECOVERY SIMPLE;

GO

USE FragmentationImpact;

GO

DECLARE @UpperBound INT = 20000000;

;WITH cteN (Number)

AS (SELECT ROW_NUMBER() OVER (ORDER BY s1.[object_id])

FROM sys.all_columns AS s1

CROSS JOIN sys.all_columns AS s2)

SELECT [Number]

INTO dbo.Numbers

FROM cteN

WHERE [Number] <= @UpperBound;

CREATE UNIQUE CLUSTERED INDEX CIX_Number -- CREATE UNIQUE INDEX

ON dbo.Numbers ([Number])

WITH (FILLFACTOR = 100);

CREATE TABLE dbo.Employees

(

Id INT IDENTITY(1, 1) NOT NULL,

FirstName VARCHAR(25) NOT NULL,

LastName VARCHAR(50) NOT NULL,

NickName VARCHAR(10) NULL,

FavoriteFood INT NULL,

BirthDate DATE NOT NULL,

HireDate DATE NOT NULL,

Salary DECIMAL(10, 2) NOT NULL,

Status VARCHAR(10) NOT NULL,

StateId INT NOT NULL,

CreateDate DATETIME NOT NULL

DEFAULT GETDATE(),

ModifyDate DATETIME NULL,

CONSTRAINT PK_Parent_Id

PRIMARY KEY CLUSTERED (Id)

);

GO

INSERT INTO dbo.Employees

(

FirstName,

LastName,

BirthDate,

HireDate,

Salary,

StateId,

Status

)

SELECT SUBSTRING(

'ABCDEFGHIJKLMNOPQRSTUVWXYZABCDEFGHIJKLMNOPQRSTUVWXYZABCDEFGHIJKLMNOPQRSTUVWXYZ',

(ABS(CHECKSUM(NEWID())) % 26) + 1,

8

) AS FirstName,

SUBSTRING(

'ABCDEFGHIJKLMNOPQRSTUVWXYZABCDEFGHIJKLMNOPQRSTUVWXYZABCDEFGHIJKLMNOPQRSTUVWXYZ',

(ABS(CHECKSUM(NEWID())) % 52) + 1,

15

) AS LastName,

DATEADD(DAY, RAND(CHECKSUM(NEWID())) * (1 + 7300), '1980-01-01') AS Birthdate,

DATEADD(DAY, RAND(CHECKSUM(NEWID())) * (1 + 3650), '1995-01-01') AS HireDate,

ABS(CHECKSUM(NEWID()) % 100000) + 25000 AS Salary,

ABS(CHECKSUM(NEWID()) % 50) + 1 AS StateId,

CASE

WHEN n.Number % 1000 = 1 THEN

'Inactive'

ELSE

'Active'

END AS Status

FROM dbo.Numbers n

WHERE n.Number <= 1000;

GO

CHECKPOINT;

CREATE NONCLUSTERED INDEX [IX_Birthdate]

ON dbo.Employees (BirthDate)

INCLUDE (

FirstName,

LastName,

NickName,

FavoriteFood

);

INSERT INTO dbo.Employees

(

FirstName,

LastName,

BirthDate,

HireDate,

Salary,

StateId,

Status

)

SELECT SUBSTRING(

'ABCDEFGHIJKLMNOPQRSTUVWXYZABCDEFGHIJKLMNOPQRSTUVWXYZABCDEFGHIJKLMNOPQRSTUVWXYZ',

(ABS(CHECKSUM(NEWID())) % 26) + 1,

8

) AS FirstName,

SUBSTRING(

'ABCDEFGHIJKLMNOPQRSTUVWXYZABCDEFGHIJKLMNOPQRSTUVWXYZABCDEFGHIJKLMNOPQRSTUVWXYZ',

(ABS(CHECKSUM(NEWID())) % 52) + 1,

15

) AS LastName,

DATEADD(DAY, RAND(CHECKSUM(NEWID())) * (1 + 7300), '1980-01-01') AS Birthdate,

DATEADD(DAY, RAND(CHECKSUM(NEWID())) * (1 + 3650), '1995-01-01') AS HireDate,

ABS(CHECKSUM(NEWID()) % 100000) + 25000 AS Salary,

ABS(CHECKSUM(NEWID()) % 50) + 1 AS StateId,

CASE

WHEN n.Number % 1000 = 1 THEN

'Inactive'

ELSE

'Active'

END AS Status

FROM dbo.Numbers n

WHERE n.Number > 1000;

GO

DROP TABLE dbo.Numbers;

CHECKPOINT;

GO

Below is an example query dozens of business people run hourly. It pulls information about the employees within a birth date range. An example date range is 01-01-1980 through 03-31-1980.

-- https://www.mssqltips.com

SELECT CONCAT(e.LastName, ', ', e.FirstName) AS FullName,

e.BirthDate,

e.NickName,

e.FavoriteFood

FROM dbo.Employees e

WHERE e.BirthDate >= @startdate

AND e.BirthDate <= @enddate;

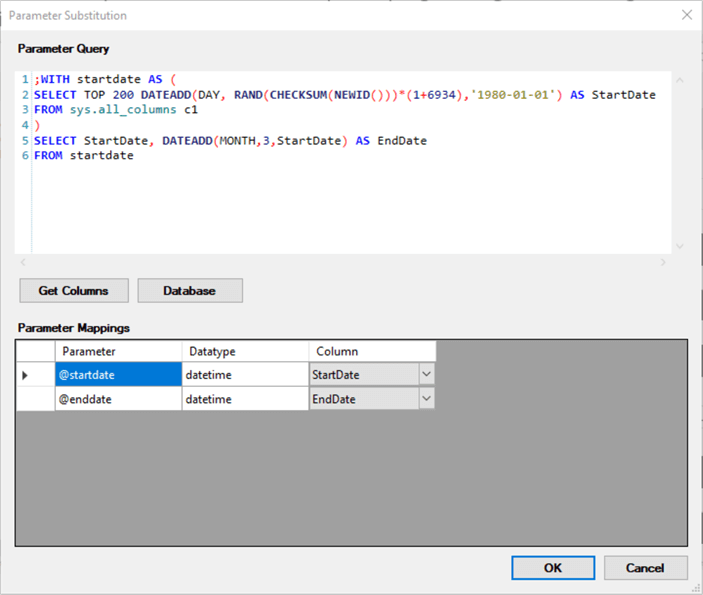

To simulate a real-world workload, I'll launch Adam Machanic's SQLQueryStress. I'm passing in the query below for the parameter. This query returns random date ranges spanning three months. The dates are always present in the Employees table.

-- https://www.mssqltips.com ;WITH startdate AS ( SELECT TOP 200 DATEADD(DAY, RAND(CHECKSUM(NEWID()))*(1+6934),'1980-01-01') AS StartDate FROM sys.all_columns c1 ) SELECT StartDate, DATEADD(MONTH,3,StartDate) AS EndDate FROM startdate;

I'll use Extended Events to capture a few performance metrics. You can use Adam's application, but I want to capture physical reads.

Before running our workload, let's see what our indexes look like. We'll use the function sys.dm_db_index_physical_stats.

-- https://www.mssqltips.com

SELECT i.index_id,

i.[name],

ips.index_type_desc,

ips.index_depth,

ips.page_count,

ips.record_count,

ips.avg_page_space_used_in_percent,

ips.avg_fragmentation_in_percent

FROM sys.dm_db_index_physical_stats(DB_ID(), OBJECT_ID('dbo.FragmentationImpact'), NULL, NULL, 'DETAILED') ips

INNER JOIN sys.indexes i

ON i.index_id = ips.index_id

AND [ips].[object_id] = [i].[object_id]

WHERE ips.index_level = 0;

GO

We hardly phased the clustered index by inserting 20 million rows. However, the non-clustered is over 98% fragmented, with the page fullness around 92%, indicative of logical fragmentation. I'll create a copy-only database backup to restore it to the baseline further along.

-- https://www.mssqltips.com

BACKUP DATABASE [FragmentationImpact]

TO DISK = N'C:\code\MSSQLTips\SQLFiles\FragmentationImpact.bak'

WITH COPY_ONLY,

NOFORMAT,

INIT,

NAME = N'FragmentationImpact-Full Database Backup',

COMPRESSION,

STATS = 10;

GO

Now, it's time to execute our workload for 200 iterations.

Let's review the metrics from the 200 executions. The query returns approximately 250,000 rows. Below are the results from my extended events session.

| Iterations | Logical Reads - AVG | CPU - AVG | Duration - AVG | Physical Reads - SUM |

|---|---|---|---|---|

| 200 | 1579 | 135ms | 221ms | 289,356 |

Let's rebuild our non-clustered index and then repeat the test. I'm not touching the clustered index in any demo.

-- https://www.mssqltips.com ALTER INDEX [IX_Birthdate] ON dbo.Employees REBUILD; GO

Before we execute, let's look at our indexes again.

Our non-clustered index is down to .01% fragmented, and we've trimmed over 8,000 pages, a 7% difference from the baseline. Let's execute our workload with the newly rebuilt index.

| Iterations | Logical Reads - AVG | CPU - AVG | Duration - AVG | Physical Reads - SUM |

|---|---|---|---|---|

| 200 | 1444 | 124ms | 202ms | 207,470 |

The results are in the table above. We could explain the 8% improvement in most metrics above by SQL performing less logical reads. One of the numbers stands out, Physical Reads—a 28% decrease. Why such a substantial reduction in physical reads?

My explanation is logical fragmentation impaired the read-ahead mechanism. SQL Server cannot efficiently fetch data pages from the index into the buffer pool. This may be fine if we want the extra data pages in memory anyway. If you have limited memory, like in a lower Azure tier, it could turn into a problem. The physical reads stop after SQL pulls all the pages into memory.

Erik Darling created a helpful view called whatsupmemory to look into the buffer pool. Below is what one execution looks like before and after the index rebuild. After the rebuild, there are fewer pages in cache.

Page Density Problems for SQL Server Fragmentation

The other type of fragmentation is low page density. This type appears when pages have a lot of free space. Updates on variable-with columns frequently cause page split and, as a byproduct, lower page density.

Paul Randal outlines ways to keep this from happening. Some ideas include using a lower fill factor or performing a delete plus insert instead of an update. Paul says in the article limiting this is easier said than done. Setting a fill factor seems the most practical of all the ideas. Ask app developers to change design patterns, and you'll likely face resistance. If you use temporal for history tracking, the delete, then insert adds complexity.

Before we look at another test, I'll restore the database from the backup taken after creating the initial dataset.

-- https://www.mssqltips.com

USE [master];

ALTER DATABASE [FragmentationImpact]

SET SINGLE_USER

WITH ROLLBACK IMMEDIATE;

RESTORE DATABASE [FragmentationImpact]

FROM DISK = N'C:\code\MSSQLTips\SQLFiles\FragmentationImpact.bak'

WITH FILE = 1,

NOUNLOAD,

REPLACE,

STATS = 10;

ALTER DATABASE [FragmentationImpact] SET MULTI_USER;

USE [FragmentationImpact];

GO

Below, I perform an update on 1% of our rows. I'm updating the Nickname column to a string from 1-6 characters long. I assume we have a food table with 1,000 values, then setting the ModifyDate with the GETDATE() function.

-- https://www.mssqltips.com

UPDATE dbo.Employees

SET NickName = SUBSTRING('ABCDEFGHIJKLMNOPQRSTUVWXYZ', (ABS(CHECKSUM(NEWID())) % 26) + 1, 6),

FavoriteFood = ABS(CHECKSUM(NEWID()) % 1000) + 1,

ModifyDate = GETDATE()

FROM dbo.Employees e

WHERE Id % 100 = 0;

GO

Let's see what happens to our index.

You can see from the image above our average space on a page lowered to 53%, and our fragmentation went up. Our page count increased by 74%. If I had introduced a lower percentage fill factor, 70-90%, the effect would have been less dramatic.

We'll run the same workload as before and review the numbers below.

| Iterations | Logical Reads - AVG | CPU - AVG | Duration - AVG | Physical Reads - SUM |

|---|---|---|---|---|

| 200 | 2737 | 135ms | 237ms | 477,228 |

As expected, everything went up.

Given our page count and low page density, I'll rebuild the index again.

-- https://www.mssqltips.com ALTER INDEX [IX_Birthdate] ON dbo.Employees REBUILD; GO

Finally, we'll rerun the workload.

| Iterations | Logical Reads - AVG | CPU - AVG | Duration - AVG | Physical Reads - SUM |

|---|---|---|---|---|

| 200 | 1452 | 130ms | 211ms | 207,308 |

We're almost back to the same stats after our first rebuild. In this test, we reduced the physical reads by 57% and the logical reads by 47%.

Don't forget to drop the database and the backup file after you finish.

Summary

Does logical fragmentation affect performance with an SSD? Maybe, but the impact appears small. For larger tables, the effect becomes visible due to read-ahead. Once pages are in memory, SQL quickly sorts through them. Does low page density have a performance impact? Yes, as seen in the demo above, SQL performs more logical reads, has less space in the buffer pool, backups are larger, etc.

When should you worry about fragmentation on newer hardware? Volatile tables over 5,000-10,000 pages might be a good starting point. It depends on your workload and how often page density drops. If you plan to perform a mass update on a large table, plan to rebuild indexes that reference the column. Coming to a rebuild threshold that considers page count, page density, and fragmentation percentage is a step in the right direction.

If someone performs a similar test as the one above on a lower-tier Azure SQL offering, I would love to hear the results. In the comments below, let me know if you have a different experience or opinion with logical fragmentation.

Key Points about Fragmentation

- Logical fragmentation has a tiny impact on performance with modern hardware. Low page density has a deeper effect on query performance.

- When performing mass updates outside your application, consider if an index rebuild might be appropriate.

- Focus index maintenance on larger, volatile tables. The standard advice is anything over 1,000 pages, but that's too low. I like 5,000-10,000 pages as a starting number.

- One thing every database professional can agree on is to update your statistics often.

Next Steps

- Jeff Moden has an excellent video on fragmentation on the GroupBy YouTube channel. I watched at 1x speed because Jeff's filled with more energy than all my kids combined. He covers lots of helpful information, and I recommend watching it.

- Have you decided to start rebuilding your indexes? It's essential to know the difference between an offline and online rebuild. Here is an article I wrote on the topic: Impact of Offline SQL Server Index Rebuilds.

- Can't get enough of fragmentation? Jonathan Kehayias wrote an informative article on the impact of fragmentation over at the SQLPerformance.com site.

- Do you want to start using Adam Machanic's SQLQueryStress? I wrote an article to get you started: Getting Started with SqlQueryStress for SQL Server Load Testing.

About the author

Jared Westover is a passionate technology specialist at Crowe, helping to build data solutions. For the past two decades, he has focused on SQL Server, Azure, Power BI, and Oracle. Besides writing for MSSQLTips.com, he has published 12 Pluralsight courses about SQL Server and Reporting Services. Jared enjoys reading and spending time with his wife and three sons when he's not trying to make queries go faster.

Jared Westover is a passionate technology specialist at Crowe, helping to build data solutions. For the past two decades, he has focused on SQL Server, Azure, Power BI, and Oracle. Besides writing for MSSQLTips.com, he has published 12 Pluralsight courses about SQL Server and Reporting Services. Jared enjoys reading and spending time with his wife and three sons when he's not trying to make queries go faster.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2023-10-10