By: John Miner | Updated: 2023-11-17 | Comments | Related: 1 | 2 | 3 | 4 | 5 | > Apache Spark

Problem

The big data engineer can choose to use Spark dataframe methods or Spark SQL functions to transform their information. When working with clients, I lean towards using the SQL syntax since it is more widely used than dataframe methods. Every language has at least three core data types: strings, numbers, and date/time. How do we manipulate data/time values using Spark SQL?

Solution

In the next three articles, I will review the syntax for string, number, and date/time Spark SQL functions. This tip will focus on learning the available date/time functions.

Business Problem

Explore the syntax of the date/time functions available in Azure Databricks. When appropriate, we will use the Adventure Works data files that are exposed as hive tables in my star database. In the examples, I will execute Spark SQL using the magic command in a Python notebook. The code below lists the tables in the star database. This is how I test my SQL syntax before embedding it in a spark.sql call.

%sql use star; show tables;

The output shows the 20 tables available in the database. We will only use the fact table for internet sales.

Current Date, Time, or Zone

The Spark ecosystem has evolved since its creation in 2012. The engine is written in Scala, but the library (PySpark) that we are using in the examples is written in Python. Therefore, it is not surprising that some functions return the same result but are named slightly differently.

%sql -- -- 1 - Current date, time or zone -- SELECT curdate() as the_date1, current_date() as the_date2, current_timestamp() as the_timestamp1, now() as the_timestamp2, current_timezone() as the_timezone1, from_utc_timestamp(current_timestamp(), 'America/Detroit') as the_est_time

The above code snippet returns the current date, timestamp, and time zone. The hardest part is to find the correct string to use for time zones. Please see the Wikipedia link for more information on time zones. The table below provides links to the functions that were explored in this simple query.

| Example | Function | Description |

|---|---|---|

| 1 | curdate | Return date object |

| 2 | current_date | Return date object |

| 3 | current_timestamp | Return timestamp object (UTC) |

| 4 | now | Return timestamp object (UTC) |

| 5 | current_timezone | Return a string representation of zone |

| 6 | from_utc_timestamp | Time for zone without offset. |

The output below shows the results of executing the Spark SQL query. In short, retrieving the current date, timestamp, or time zone is relatively easy.

Date Categories

The Adventure Works company ships products all over the world. While the order date might be interesting, it might be more interesting to find the month that has the most orders. How do we convert a date into a numeric category for aggregation? The following Spark SQL query finds the year, quarter, month, week, and day for a given order date in the fact table for internet sales.

%sql -- -- 2 - year, quarter, month, week and day functions -- select OrderDate, year(OrderDate) as order_date_year, quarter(OrderDate) as order_date_qtr, month(OrderDate) as order_date_month, weekofyear(OrderDate) as order_date_week_no, day(OrderDate) as order_date_day from star.fact_internet_sales limit 5;

Please see the table below that has links to the functions that were explored in this simple query.

| Example | Function | Description |

|---|---|---|

| 1 | year | Return date part as integer. |

| 2 | quarter | Calculate quarter of year as an integer. |

| 3 | month | Return date part as integer. |

| 4 | weekofyear | Calculate week of year as an integer. |

| 5 | day | Return date part as integer. |

The output below shows the results of executing the Spark SQL query for testing date category functions. I used the limit statement to list just the first five rows.

Converting a date into a numeric category used for grouping is a very common task. I cannot count how many times I have written Spark SQL queries that group by a given date part.

Day Of Functions

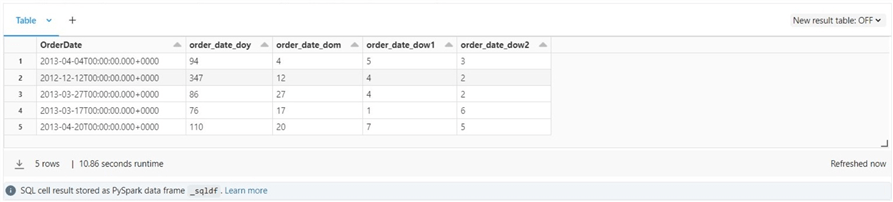

The business line sometimes wants to know the day of the year, the day of the month, or the day of the week an order was placed. The Spark SQL language has two day of week functions; the only difference is how the enumeration is converted to a category. The dayofweek function is one-based and starts on Sunday, while the weekday function is zero-based and starts on Monday.

The following Spark SQL query uses the order date in the fact table for internet sales to explore these functions.

%sql -- -- 3 - day of functions (year, month, week) -- select OrderDate, dayofyear(OrderDate) as order_date_doy, dayofmonth(OrderDate) as order_date_dom, dayofweek(OrderDate) as order_date_dow1, weekday(OrderDate) as order_date_dow2 from star.fact_internet_sales limit 5;

The output below shows the results of executing the Spark SQL query for day of functions. I used the limit statement to list only the first five rows.

If we look at the second record in the list, the calendar date of 12-12-2012 occurred on a Wednesday. It is 19 days away from the end of the year. The total remaining days and day of the year equals 366 days, which makes sense since it was a leap year. The last two fields contain the day of the week, which is correct. The developer needs to translate this numeric into a string representing the day.

Please see the table below for links to the functions explored in this simple query.

| Example | Function | Description |

|---|---|---|

| 1 | dayofyear | Return day count as integer. |

| 2 | dayofmonth | Return day count as integer. |

| 3 | dayofweek | Return day count as integer. |

| 4 | weekday | Return day count as integer. |

I do not use the day of functions as much; however, they are available for your use in your Spark SQL queries.

String To Date Functions

Each computer system and/or language has different precision on date/time values. Therefore, it is not uncommon to receive a date or timestamp in a string format within a data file. It is up to the data engineer to convert the string to the correct data type.

The following Spark SQL query uses my sister's birthday as a string to test the two available functions. This is a dedication to my sister since she lost her battle with cancer in 2017. I truly miss her.

%sql

--

-- 4 - string to date functions

--

select

to_date('1978-09-06', 'yyyy-MM-dd') as to_date_fmt_ymd,

to_date('06-09-1978', 'dd-MM-yyyy') as to_date_fmt_dmy,

date('1978-09-06') as str_to_date

The output below shows the results of executing the Spark SQL query for the string to date functions.

Please see the table below that has links to the string to date functions that were explored in this simple query.

| Example | Function | Description |

|---|---|---|

| 1 | to_date | Accepts many different formats. |

| 2 | date | Returns date object |

The string to date functions exist not only in Spark SQL but in most relational databases. It is not uncommon to see the use of these functions in everyday Spark SQL queries.

Time Categories

It might be interesting to look at all the Adventure Works orders placed by hour. That way, we can have staff to cover the sales call volume for a given hour. We can't get valid results for this query since the source system is keeping track of order dates, not order timestamps. Thus, all orders happen at midnight or at zero hours. If we wanted this information in our data lake, we would have to change the upstream system to include time.

The example below uses the current timestamp to show how to extract the hour, minute, and second.

%sql -- -- 5 - parts of time as numerics -- select current_timestamp() as cur_time, hour(current_timestamp()) as cur_hour, minute(current_timestamp()) as cur_minute, second(current_timestamp()) as cur_second

The output below shows the results of executing the Spark SQL query for time part functions.

Please see the table below with links to the functions explored in this simple query.

| Example | Function | Description |

|---|---|---|

| 1 | hour | Return hours as an integer |

| 2 | minute | Return minutes as an integer |

| 3 | second | Return seconds as an integer |

Converting a timestamp into a categorical numeric used for grouping is a very common task. Again, many of the functions that we talk about today have their origins in the Relational Database Management Systems (RDBMS).

Compose Date, Time, or Intervals

Some older systems, such as the mainframe, might store the date as three separate numeric values. How can we compose a date or timestamp from a set of numeric values? The example below shows how to use the two make functions to create a date and timestamp out of numeric values.

%sql -- -- 6 - compose date, time or interval from numerics -- select make_date(1978, 9, 6) as the_date, make_timestamp(1978, 9, 6, 7, 30, 15.887) as the_timestamp

The output below is from the above query.

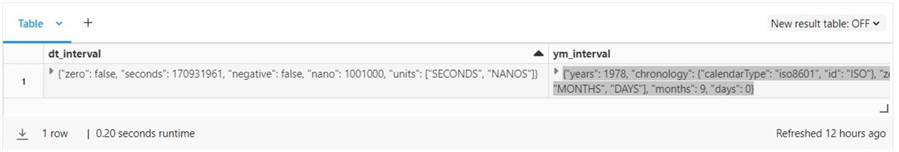

Composing a date or timestamp from numeric values is available in most relational database systems. What is not that common is the interval functions. What is an interval data type? It is a JSON representation of a given date/time.

The example below creates date, year/month, and regular interval data types.

%sql select make_dt_interval(1978, 9, 6, 01.001001) as dt_interval, make_ym_interval(1978, 9) as ym_interval, make_interval(1978, 9, 6, 7, 30, 15, 0.887) as the_interval;

The screenshot below was taken from the output of the above query.

Please see the table below that has links to the functions that were explored in this simple query.

| Example | Function | Description |

|---|---|---|

| 1 | make_date | Return a date object |

| 2 | make_timestamp | Return timestamp object |

| 3 | make_dt_interval | Return date interval object |

| 4 | make_ym_interval | Return year/month interval object |

| 5 | make_interval | Return interval object |

The make functions are handy when you have dates or times stored as numeric values.

Date Calculations

Various business problems might need to know the following: the number of months between two dates, the number of days between two dates, the number of years between two dates, what day of the week is a date, what is the next day, and what is the last day of the month. There are Spark SQL functions that can help you solve these problems.

The example query below explores these questions using my birthday and my sister's birthday.

%sql

--

-- 7 – date calculations

--

SELECT

abs(months_between('1968-09-05', '1978-09-06')) as month_diff_1,

datediff('1978-09-06', '1968-09-05') / 30.437 as month_diff_2,

date_diff('1978-09-06', '1968-09-05') / 365.25 as year_diff,

date_format('1968-09-05', 'E') as birth_day,

next_day('1968-09-05', 'TU') as first_tue,

last_day('1968-09-05') as end_of_month

The screenshot below shows the output of the date calculations. We estimate the difference between months and years using an average number of days. Yes, we are off a couple of hundredths between the first two numbers. But usually, that is good enough for most use cases.

Please see the table below for links to the functions explored in this simple query.

| Example | Function | Description |

|---|---|---|

| 1 | months_between | Returns a double |

| 2 | datediff | Returns an integer |

| 3 | date_diff | Returns a big integer |

| 4 | date_format | Returns a string |

| 5 | next_day | Returns a date |

| 6 | last_day | Returns a date |

Calculating date differences is a great way to find orders that take the longest number of days to ship.

Date Arithmetic

There are three functions to perform addition and subtraction with dates: add months, date add, and date sub.

%sql

--

-- 8 - add or sub dates

--

select

add_months(date(now()), 1) as next_month1,

date_add(curdate(), 1) as next_day1,

date_sub(current_date(), 1) as prev_day1

The screenshot below shows the output of the date arithmetic.

Please see the table below for links to the functions explored in this simple query.

| Example | Function | Description |

|---|---|---|

| 1 | add_months | Returns a date |

| 2 | date_add | Returns a timestamp |

| 3 | date_sub | Returns a timestamp |

The Adventure Works company wants to follow up with each customer order one week after an item is shipped. We can easily calculate the callback date using the date add function.

Date Parts vs. Date Truncation

There might be a business requirement to return part of a date or truncate a date/timestamp to the nearest part. The query below explores these problems.

%sql

--

-- 9 - date parts and date truncate

--

%sql

select

date_part('YEAR', current_date()) as the_year1,

datepart('YEAR', current_date()) as the_year2,

current_timestamp() as the_date_time,

date_trunc('YEAR', current_timestamp()) as the_year_start,

date_trunc('MONTH', current_timestamp()) as the_month_start,

date_trunc('DAY', current_timestamp()) as the_day_start,

date_trunc('HOUR', current_timestamp()) as the_hour_start

The output from executing the above Spark SQL statement is seen below.

Please see the table below for links to the functions explored in this simple query.

| Example | Function | Description |

|---|---|---|

| 1 | date_part | Returns a decimal or integer |

| 2 | datepart | Returns a decimal or integer |

| 3 | date_trunc | Returns a timestamp |

The date part function is a more complex way to convert a timestamp to a numerical category. The date truncate function comes in handy when we do not care about certain parts of a date. For instance, return the current date and hour that an event occurred.

Unix Date/Time

The Unix operating system became available to companies in 1970. Therefore, it was natural for the system creators to count the number of seconds from January 1, 1970. In this section, we will talk about seven functions that you can use with these date/time values.

%sql

--

-- 10 – working with Unix time

--

select

unix_date(date('1968-09-05')) as unix_days1,

unix_micros(timestamp('1968-09-05')) as unix_micros1,

unix_millis(timestamp('1968-09-05')) as unix_millis1,

unix_seconds(timestamp('1968-09-05')) as unix_secs1,

unix_timestamp('1970-01-01', 'yyyy-MM-dd') as unix_ts1,

date_from_unix_date(1) as unix_ts2,

from_unixtime(0) as unix_ts3

The first four calculations are based on my birthday, 483 days before the Unix epoch. Therefore, these numbers are negative. Of course, asking for the number of seconds from the epoch is always zero. The last two functions convert from a numeric to a date or timestamp. The output from executing the above Spark SQL statement is seen below.

Please see the table below for links to the functions explored in this simple query.

| Example | Function | Description |

|---|---|---|

| 1 | unix_date | Returns an integer |

| 2 | unix_micros | Returns a big integer |

| 3 | unix_millis | Returns a big integer |

| 4 | unix_seconds | Returns a big integer |

| 5 | unix_timestamp | Returns a big integer |

| 6 | date_from_unix_date | Returns a date |

| 7 | from_unixtime | Returns a string |

Here is some food for thought: Since a Unix timestamp is the number of seconds from an epoch, we can use arithmetic to get the number of seconds between two timestamps, then use the correct divisor to convert to days, weeks, months, or years.

Unix To Timestamp

What happens if we capture the number of seconds since January 1, 1970, in a data file and then want to know the current date from this field? There are three functions to convert seconds, milliseconds, and microseconds to a timestamp.

%sql

--

-- 11 – Unix time to timestamp

--

select

timestamp_micros(unix_micros(timestamp('1968-09-05 07:30:15'))) as ts1,

timestamp_millis(unix_millis(timestamp('1968-09-05 07:45:30'))) as ts2,

timestamp_seconds(unix_seconds(timestamp('1968-09-05 08:00:15'))) as ts3

The output from executing the above Spark SQL statement is seen below. I did change the time slightly on the timestamps to make sure the functions work.

Please see the table below for links to the functions explored in this simple query.

| Example | Function | Description |

|---|---|---|

| 1 | timestamp_micros | Returns a timestamp |

| 2 | timestamp_millis | Returns a timestamp |

| 3 | timestamp_seconds | Returns a timestamp |

In short, we can convert from and to Unix timestamps stored in seconds, milliseconds, and microseconds.

Working With Intervals

The last way to perform arithmetic with date/time values is to use intervals. The keywords TIMESTAMP and INTERVAL are used in the example below. We are taking a string and converting it to a time stamp. Next, we add the interval to the given timestamp to arrive at our adjusted value as a timestamp.

%sql -- -- 12 - interval examples -- SELECT TIMESTAMP '1968-09-05 07:30:15' + INTERVAL 3 YEARS AS birth_plus_3yy, TIMESTAMP '1968-09-05 07:30:15' + INTERVAL 3 MONTHS AS birth_plus_3mm, TIMESTAMP '1968-09-05 07:30:15' + INTERVAL 3 DAYS AS birth_plus_3dd, TIMESTAMP '1968-09-05 07:30:15' + INTERVAL 3 HOURS AS birth_plus_3hr, TIMESTAMP '1968-09-05 07:30:15' + INTERVAL 3 MINUTES AS birth_plus_3mi, TIMESTAMP '1968-09-05 07:30:15' + INTERVAL 3 SECONDS AS birth_plus_3ss

The image below shows my birthday adjusted by three years, three months, three days, three hours, three minutes, and three seconds.

The Spark SQL language supports the addition and subtraction of an interval from a timestamp. This is a great way to calculate future or past events. Please refer to the online documentation for more details.

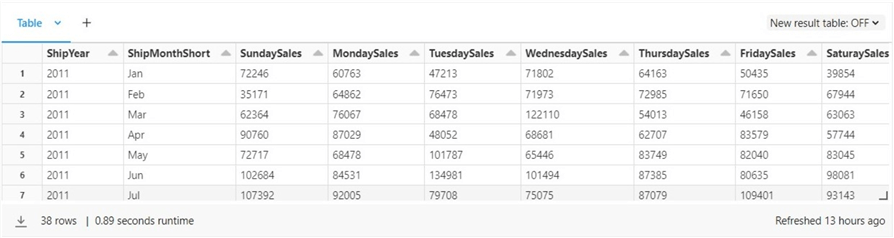

Capstone Project

The previous sections were focused on the syntax of all the date/time functions supported by Spark SQL. Now, we have a capstone project to complete. How can we calculate the total shipment value by workday for January 2011? That way, we can ensure we have enough staff in the shipment department for high-demand days.

I am going to break this solution into two parts. We want to pivot the data by week for a given year and month. It is important to note that the PIVOT keyword is not supported with delta live tables. Therefore, it is important to know how to bucket data using straight SQL syntax.

In the first part of the solution, we will create a view with the data placed into the correct day bucket. I wanted to introduce the reader to both the case and if statements. I am using the case statement to convert a month represented as an integer to a string value. This can be done more efficiently with the date format function in one line of code. Please see the field called ShipMonthShort. The if statement is used to bucket the sales by a given workday (ship date) column.

--

-- 13 - Create view for ship data

--

%sql

create or replace view star.vw_ship_data as

select

year(ShipDate) as ShipYear,

month(ShipDate) as ShipOrder,

date_format(ShipDate, 'MMM') as ShipMonthShort,

case

when month(ShipDate) == 1 then 'Jan'

when month(ShipDate) == 2 then 'Feb'

when month(ShipDate) == 3 then 'Mar'

when month(ShipDate) == 4 then 'Apr'

when month(ShipDate) == 5 then 'May'

when month(ShipDate) == 6 then 'Jun'

when month(ShipDate) == 7 then 'Jul'

when month(ShipDate) == 8 then 'Aug'

when month(ShipDate) == 9 then 'Sep'

when month(ShipDate) == 10 then 'Oct'

when month(ShipDate) == 11 then 'Nov'

when month(ShipDate) == 12 then 'Dec'

else 'unknown'

end as ShipMonthLong,

if(dayofweek(ShipDate) == 1, ExtendedAmount, 0) as SundaySale,

if(dayofweek(ShipDate) == 2, ExtendedAmount, 0) as MondaySale,

if(dayofweek(ShipDate) == 3, ExtendedAmount, 0) as TuesdaySale,

if(dayofweek(ShipDate) == 4, ExtendedAmount, 0) as WednesdaySale,

if(dayofweek(ShipDate) == 5, ExtendedAmount, 0) as ThursdaySale,

if(dayofweek(ShipDate) == 6, ExtendedAmount, 0) as FridaySale,

if(dayofweek(ShipDate) == 7, ExtendedAmount, 0) as SaturdaySale

from

star.fact_internet_sales

The image below shows the output from executing a select statement against the newly created view.

The second part of the solution is to aggregate and filter the data. The query below solves our capstone problem.

-- -- 14 - Create view for ship data -- %sql select ShipYear, ShipMonthShort, Sum(SundaySale) as SundaySales, Sum(MondaySale) as MondaySales, Sum(TuesdaySale) as TuesdaySales, Sum(WednesdaySale) as WednesdaySales, Sum(ThursdaySale) as ThursdaySales, Sum(FridaySale) as FridaySales, Sum(SaturdaySale) as SaturdaySales from star.vw_ship_data where ShipYear = 2011 and ShipMonthShort = 'Jan' group by ShipYear, ShipOrder, ShipMonthShort order by ShipYear, ShipOrder

The output below shows the unfiltered data sorted by year and month.

The cliché of a "picture is worth a thousand words" can be used now. Let's create a bar graph and determine which days we need more staff.

We can see that Sunday had the most products in terms of dollars being shipped from the Adventure Works warehouse. However, Wednesday came in as a close second. The least active day in the shipping department in January is Saturday.

Summary

Many functions deal with dates, timestamps, and time zones. A given occurrence (event) can be captured as a string, an internal date, or a timestamp object. We even learned that Unix systems have kept track of time as the number of seconds since January 1, 1970. Older systems like mainframes might have stored dates or times as separate integers for each category.

The functions in this article can be used by a big data developer to convert incoming file data into a modern format, such as date or timestamp, before storing it in a delta table. When creating summarized information for reporting, the developer might want to categorize the data before aggregating. Looking at every single order is very boring. However, the summarized sales value by shipping day interests the shipping manager. Now, he can staff appropriately for the busiest days of the week.

A good understanding of manipulating the basic data types (date/time, numbers, and strings) is a very important skill for the data engineer. Enclosed is the notebook used in this article. Next time, we will focus on working with numeric functions. Since many of these functions are algebraic in nature, we will be solving some math problems.

Next Steps

- Learn about numeric functions.

- Learn about string functions.

About the author

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.

John Miner is a Data Architect at Insight Digital Innovation helping corporations solve their business needs with various data platform solutions.This author pledges the content of this article is based on professional experience and not AI generated.

View all my tips

Article Last Updated: 2023-11-17