By: Ron L'Esteve | Updated: 2024-01-23

Problem

LangChain is a framework for developing applications powered by large language models (LLMs). It enables applications to connect a language model to other sources of data and allows the model to interact with its environment. LangChain can be combined with various data sources and targets while developing prompt templates. A prompt template is a predefined structure or format used to guide the generation of text in a specific context or for a specific task. How can LangChain and prompt templates be used effectively with OpenAI's LLMs to improve the quality of generated text and the efficiency of natural language processing tasks?

Solution

LangChain can be used when designing prompt engineering templates. LangChain applications can call OpenAI, Hugging Face, or other LLMs through their APIs, and the templates structure the input prompts in a way that guides the LLMs to generate high-quality text, thereby improving the efficiency of natural language processing tasks. LangChain can also be integrated with vector databases such as Pinecone and Chroma and with cloud platforms like Databricks, Azure, and more. A vector database is a specialized storage system for managing vector embeddings, which are numerical representations of data that AI models can process.
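To make the idea of a vector database more concrete, here is a toy, in-memory sketch of what such a store does at its core: keep one vector per document and return the document whose vector is most similar to a query vector (cosine similarity is used here). The three-dimensional vectors are made up for illustration; real embeddings come from an embedding model and have hundreds or thousands of dimensions, and products such as Pinecone or Chroma add indexing, persistence, and approximate search on top of this basic idea.

```python
import math

# Hypothetical, hand-written "embeddings" for illustration only.
documents = {
    "Databricks cluster setup": [0.9, 0.1, 0.0],
    "Prompt template design": [0.1, 0.9, 0.2],
    "Azure cost monitoring": [0.2, 0.1, 0.9],
}

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(query: list[float], store: dict[str, list[float]]) -> str:
    """Brute-force search: return the key whose vector best matches the query."""
    return max(store, key=lambda name: cosine_similarity(query, store[name]))

print(nearest([0.2, 0.8, 0.1], documents))  # Prompt template design
```

A brute-force scan like this compares the query against every stored vector, which is exactly the cost that dedicated vector databases avoid with specialized indexes.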

Prompt templates can be designed for several unique custom needs, including summarization, translation, content generation, question answering, information extraction, sentiment analysis, synthetic data generation, and more. The key to effective prompt engineering is to be clear and specific in your instructions to guide the AI toward the desired output.
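As an illustration of designing templates for different needs, the sketch below keeps one plain Python format string per task and fills in its placeholders on request. The task names, the template wording, and the build_prompt helper are hypothetical and not part of LangChain; with LangChain, each string could instead be wrapped in a PromptTemplate.

```python
# Hypothetical task templates, one per use case; each is specific about the
# task, the expected output, and where the user's input goes.
TASK_TEMPLATES = {
    "summarization": "Summarize the following text in {num_sentences} sentences:\n\n{text}",
    "translation": "Translate the following text from {source} to {target}:\n\n{text}",
    "sentiment": (
        "Classify the sentiment of this review as Positive, Negative, or Neutral.\n\n"
        "Review: {text}\nSentiment:"
    ),
}

def build_prompt(task: str, **values: object) -> str:
    """Look up the template for a task and fill in its placeholders."""
    try:
        template = TASK_TEMPLATES[task]
    except KeyError:
        raise ValueError(f"Unknown task: {task!r}") from None
    return template.format(**values)

print(build_prompt("translation", source="English", target="French", text="Good morning"))
```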

In this tip, we will explore a few sample prompt templates that use OpenAI's gpt-4 LLM with LangChain in a Databricks notebook. If you are following along, be sure to create a Databricks compute cluster that uses an ML runtime to run the Python code.

Zero-Shot Prompt Template

Zero-shot prompt engineering asks a language model to perform a task or respond to a new prompt without providing any examples of the desired output and without any additional training. The following Python code defines a prompt template for an LLM to act as an IT business idea consultant. The PromptTemplate class from LangChain is used to create a new prompt template. The input_variables parameter is set to ["Product"], meaning the template expects a product name as input. The template parameter is a string that defines the structure of the prompt. In this case, the language model is asked to provide three unique business ideas for a company that wants to get into the business of selling a specific product. The product name will be inserted into the {Product} placeholder in the template.

```python
# Zero-shot prompt template
from langchain.prompts import PromptTemplate

template = "I want you to act as an IT business idea consultant and provide three unique business ideas for a company that wants to get into the business of selling {Product}?"

# {Product} is the only placeholder, so it is the only input variable
prompt_template = PromptTemplate(
    input_variables=["Product"],
    template=template
)
```
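Conceptually, formatting a prompt template comes down to substituting values into named placeholders. The plain-Python sketch below (not LangChain's implementation) lists the placeholders in the zero-shot template with the standard library's string.Formatter and fills them with str.format, raising an error when a value is missing or unexpected, which mirrors the role the input_variables list plays. The helper names placeholder_names and render are hypothetical.

```python
from string import Formatter

# Same zero-shot template text as above (no examples are included in it).
zero_shot_template = (
    "I want you to act as an IT business idea consultant and provide three "
    "unique business ideas for a company that wants to get into the business "
    "of selling {Product}?"
)

def placeholder_names(text: str) -> list[str]:
    """Return the {named} placeholders found in a str.format-style template."""
    return [field for _, field, _, _ in Formatter().parse(text) if field]

def render(text: str, **values: str) -> str:
    """Fill every placeholder, failing loudly if a value is missing or extra."""
    expected = set(placeholder_names(text))
    missing = expected - set(values)
    extra = set(values) - expected
    if missing or extra:
        raise ValueError(f"missing={sorted(missing)} extra={sorted(extra)}")
    return text.format(**values)

print(placeholder_names(zero_shot_template))  # ['Product']
print(render(zero_shot_template, Product="Generative AI"))
```

The string produced by render is the kind of text that is ultimately sent to the LLM; LangChain also offers PromptTemplate.from_template, which infers the input variables from the template string instead of requiring them to be listed.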

By running the following code, we use the OpenAI gpt-4 LLM and the LangChain prompt template created in the previous step to have the AI assistant generate three unique business ideas for a company that wants to get into the business of selling Generative AI. Since the prompt template contains no examples, this is an example of zero-shot prompt engineering.

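```python
# `openai` is assumed to be a LangChain LLM object for gpt-4 that was
# initialized earlier in the notebook (that setup is not shown in this tip).
print(openai(
    prompt_template.format(
        Product="GenerativeAI"
    )
))
```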

And here are the Generative AI product ideas that the assistant provided. Not bad at all!

One-Shot Prompt Template

One-shot prompt engineering provides a single example or demonstration of the desired behavior to the AI model, enabling it to grasp the essence of the task and generate the desired output. The following Python code defines a one-shot prompt template for our LLM to act as a Data and AI Expert specializing in Azure and Databricks Data Engineering and Architecture. The input_variables parameter is set to ["query"], meaning the template expects a user query as input. The template parameter is a string that defines the structure of the prompt. In this case, the language model is given context so that it answers questions about Azure and Databricks Data and AI in as much detail as possible, and it is instructed to respond with "I don't know" if a question cannot be answered from that context.

```python
# One-shot prompt template
from langchain.prompts import PromptTemplate

template = """Answer the question based on the context below. If the
question cannot be answered using the information provided, answer
with "I don't know".

Context: You are a Data and AI Expert that specializes in Azure and Databricks Data Engineering and Architecture. When asked a question about Azure and Databricks Data and AI questions, answer with as much detail as possible with answers that are directly related to either Azure, Databricks, or both.

Question: {query}

Answer: """

# the user's question is the only input variable
prompt_template = PromptTemplate(
    input_variables=["query"],
    template=template
)
```

Let us ask the AI assistant where we can learn more about Data and AI Engineering.

```python
print(openai(
    prompt_template.format(
        query="Where can I learn more about Data and AI Engineering?"
    )
))
```

Notice from the response that the AI model provides a collection of resources for learning about Data and AI Engineering within the context of Azure and/or Databricks only.

Next, let's ask what Tableau is. Notice that the model answers and explains how Tableau can be integrated with Azure or Databricks, which shows that it responds within the context of the prompt template.

Now, let's ask a question with no relation to either Azure or Databricks: "What is the meaning of life?" Sure enough, the model followed the instructions in the prompt template and answered, "I don't know."
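Because the one-shot template instructs the model to answer with a fixed phrase, downstream code can branch on that phrase, for example to route out-of-scope questions elsewhere instead of showing them to the user. The sketch below assumes the model returns the fallback more or less verbatim; LLM output is not guaranteed to follow instructions exactly, so it normalizes curly apostrophes, surrounding whitespace, quotes, and end punctuation, plus letter case, before comparing. The helper names and sample responses are hypothetical.

```python
FALLBACK = "I don't know"  # the fallback phrase used in the one-shot template

def normalize(text: str) -> str:
    """Straighten curly apostrophes, trim surrounding whitespace, quotes,
    periods and exclamation marks, then lower-case the result."""
    return text.replace("\u2019", "'").strip(" \t\n\"'.!").lower()

def is_out_of_scope(response: str) -> bool:
    """True when the model replied with the template's fallback phrase."""
    return normalize(response) == normalize(FALLBACK)

# Hypothetical model responses.
print(is_out_of_scope("I don\u2019t know."))  # True
print(is_out_of_scope(' "I don\'t know" '))  # True
print(is_out_of_scope("Tableau is a data visualization tool..."))  # False
```

An exact match is deliberately strict: a reply such as "I don't know the answer to that." returns False, so a real pipeline might prefer a looser test such as checking whether the normalized reply starts with the fallback phrase.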

Few-Shot Prompt Templates

Few-shot prompting is a technique that guides an LLM by including a small number of examples in the prompt itself, typically in the range of 1 to 10, rather than through additional training. In this Python code, we import FewShotPromptTemplate from LangChain and add a few examples. Next, we create an example template and an example prompt, and then break the instructions into a prefix and a suffix. In few-shot prompting, the prefix and suffix set the context and task for the model. For example, to prompt a model to translate English to French, the prefix could be "Translate the following English sentences into French:" followed by examples, and the suffix could be "Now, translate this sentence:" followed by the sentence to translate.
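The prefix, examples, and suffix structure can be illustrated without LangChain. The plain-Python sketch below approximates what a few-shot template produces for the English-to-French scenario just described: it formats each example with an example template, then joins the prefix, the formatted examples, and the filled-in suffix with a separator. The helper name build_few_shot_prompt and the example sentences are illustrative, and this is not LangChain's actual implementation.

```python
def build_few_shot_prompt(
    prefix: str,
    examples: list[dict[str, str]],
    example_template: str,
    suffix: str,
    separator: str = "\n\n",
    **inputs: str,
) -> str:
    """Assemble prefix + formatted examples + suffix into one prompt string."""
    formatted_examples = [example_template.format(**example) for example in examples]
    # Only the suffix holds placeholders for the user input, so it is formatted
    # on its own; example text containing literal braces is left untouched.
    return separator.join([prefix, *formatted_examples, suffix.format(**inputs)])

translation_examples = [
    {"english": "Good morning.", "french": "Bonjour."},
    {"english": "Thank you very much.", "french": "Merci beaucoup."},
]

prompt = build_few_shot_prompt(
    prefix="Translate the following English sentences into French:",
    examples=translation_examples,
    example_template="English: {english}\nFrench: {french}",
    suffix="Now, translate this sentence:\nEnglish: {sentence}\nFrench:",
    sentence="Where is the library?",
)
print(prompt)
```

The prompt ends with an open "French:" line, so the most natural continuation for the model is the translation itself, following the pattern set by the examples.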

In the code below, the examples demonstrate precisely how we expect the model to answer a question based on the user's query and within the expected template. We also instruct the model to keep its responses accurate and related to Prompt Engineering, or else to tell the user that the question is unrelated to Prompt Engineering and that it doesn't know the answer.

```python
# Few-shot prompt template
from langchain.prompts import FewShotPromptTemplate, PromptTemplate

# create our examples
examples = [
    {
        "query": "What is prompt engineering?",
        "answer": "Prompt engineering is the process of structuring text that can be interpreted and understood by a generative AI model, guiding it to produce high-quality and relevant outputs."
    }, {
        "query": "What are some applications of prompt engineering?",
        "answer": "Prompt engineering is used in text summarization, information extraction, question answering, translation, and content generation among others."
    }
]

# create an example template
example_template = """
User: {query}
AI: {answer}
"""

# create an example prompt from the above template
example_prompt = PromptTemplate(
    input_variables=["query", "answer"],
    template=example_template
)

# now break our previous prompt into a prefix and suffix
# the prefix is our instructions
prefix = """The following are excerpts from conversations with an AI
assistant. The assistant is typically precise, producing
accurate responses to the user's questions. Here are some
examples. Keep all responses related to Prompt Engineering, else
tell the user the question is unrelated to Prompt Engineering
and that you don't know:
"""

# and the suffix is our user input and output indicator
suffix = """
User: {query}
AI: """

# now create the few-shot prompt template
few_shot_prompt_template = FewShotPromptTemplate(
    examples=examples,
    example_prompt=example_prompt,
    prefix=prefix,
    suffix=suffix,
    input_variables=["query"],
    example_separator="\n\n"
)
```

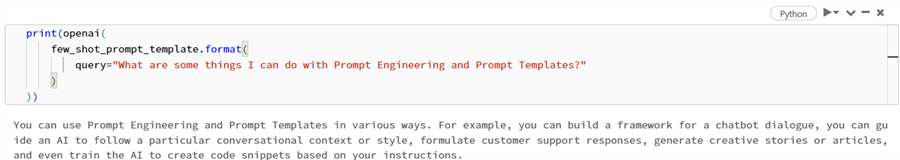
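Each example dictionary has to provide every field the example template references (here, query and answer), and a misspelled key may only surface as an error when the prompt is formatted. A small plain-Python check like the sketch below, run before building the few-shot template, can catch such mistakes early. The helper names and the deliberately broken second example are hypothetical.

```python
from string import Formatter

# Same example template as in the few-shot code above.
example_template = """
User: {query}
AI: {answer}
"""

def template_fields(template: str) -> set[str]:
    """Names of the {placeholders} used in a str.format-style template."""
    return {field for _, field, _, _ in Formatter().parse(template) if field}

def check_examples(examples: list[dict[str, str]], template: str) -> list[str]:
    """Describe every example whose keys differ from the template's placeholders."""
    expected = template_fields(template)
    problems = []
    for index, example in enumerate(examples):
        missing = expected - set(example)
        extra = set(example) - expected
        if missing or extra:
            problems.append(f"example {index}: missing={sorted(missing)} extra={sorted(extra)}")
    return problems

# Hypothetical examples: the second one uses "question" instead of "query".
sample_examples = [
    {"query": "What is prompt engineering?", "answer": "Structuring text to guide a generative AI model."},
    {"question": "What are some applications of prompt engineering?", "answer": "Summarization, translation, and more."},
]

print(check_examples(sample_examples, example_template))
# ["example 1: missing=['query'] extra=['question']"]
```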

Let's ask the AI model the following question: "What are some things I can do with Prompt Engineering and Prompt Templates?"

```python
print(openai(
    few_shot_prompt_template.format(
        query="What are some things I can do with Prompt Engineering and Prompt Templates?"
    )
))
```

Sure enough, the model gave me an accurate answer within the context of Prompt Engineering.

Again, let's ask a question outside the context of the prompt template: "What is the meaning of life?" As expected, the model responds that the question is unrelated to Prompt Engineering and that it doesn't have a definitive answer for that query.

Conclusion

This demo shows that both LangChain and prompt templates can be deeply customized to meet specific business goals. These fundamental examples demonstrate prompt templates running on Databricks runtime compute with OpenAI LLMs. Prompt engineering is both an art and a science: constructing effective, customized prompt templates with meaningful context, and choosing the best LLM for the task, takes development, testing, and creative thinking. LLMs from OpenAI or Hugging Face can be used depending on the use case.

Because LangChain is an open-source library and the LLMs are called through APIs, these prompt templates can be built on several platforms, including Azure Synapse, Microsoft Fabric, Databricks, and Azure Machine Learning (AML), or even locally in VS Code-based environments. This flexibility promotes reuse of the framework, and when combined with LLMOps tooling such as MLflow, these customized models can be deployed and consumed in real time. Furthermore, user interfaces can be built with Gradio, AML Prompt Flow, and more to provide an interactive experience for consuming these models in real time. As always, remember to research and monitor the costs and consumption trends associated with building and consuming these models in production-grade environments.

Next Steps

- Read more about Prompt Engineering and LLMs with Langchain | Pinecone

- Explore Hugging Face Hub | Langchain

- Explore GPT prompts available on Hugging Face: fka/awesome-chatgpt-prompts · Datasets at Hugging Face

- Read more about Few-shot examples for chat models | Langchain

About the author

Ron L'Esteve is a trusted information technology thought leader and professional Author residing in Illinois. He brings over 20 years of IT experience and is well-known for his impactful books and article publications on Data & AI Architecture, Engineering, and Cloud Leadership. Ron completed his Master's in Business Administration and Finance from Loyola University in Chicago. Ron brings deep tec

This author pledges the content of this article is based on professional experience and not AI generated.

